By Steven Feldstein, Frank and Bethine Church Chair of Public Affairs & Associate Professor, School of Public Service, Boise State University. Originally published at The Conversation

U.S. technology giant Microsoft has teamed up with a Chinese military university to develop artificial intelligence systems that could potentially enhance government surveillance and censorship capabilities. Two U.S. senators publicly condemned the partnership, but what China's National University of Defense Technology wants from Microsoft isn't the only concern.

As my research shows, the advent of digital repression is profoundly affecting the relationship between citizen and state. New technologies are arming governments with unprecedented capabilities to monitor, track and surveil individual people. Even governments in democracies with strong traditions of rule of law find themselves tempted to abuse these new abilities.

In states with unaccountable institutions and frequent human rights abuses, AI systems will most likely cause greater damage. China is a prominent example. Its leadership has enthusiastically embraced AI technologies and has set up the world's most sophisticated surveillance state in the Xinjiang region, tracking citizens' daily movements and smartphone use.

Its exploitation of these technologies presents a chilling model for fellow autocrats and poses a direct threat to open democratic societies. Although there’s no evidence that other governments have replicated this level of AI surveillance, Chinese companies are actively exporting the same underlying technologies across the world.

Increasing Reliance on AI Tools in the US

Artificial intelligence systems are everywhere in the modern world, helping run smartphones, internet search engines, digital voice assistants and Netflix movie queues. Many people fail to realize how quickly AI is expanding, thanks to ever-increasing amounts of data to be analyzed, improving algorithms and advanced computer chips.

Any time more information becomes available and analysis gets easier, governments are interested – and not just authoritarian ones. In the U.S., for instance, the 1970s saw revelations that government agencies – such as the FBI, CIA and NSA – had set up expansive domestic surveillance networks to monitor and harass civil rights protesters, political activists and Native American groups. These issues haven’t gone away: Digital technology today has deepened the ability of even more agencies to conduct even more intrusive surveillance.

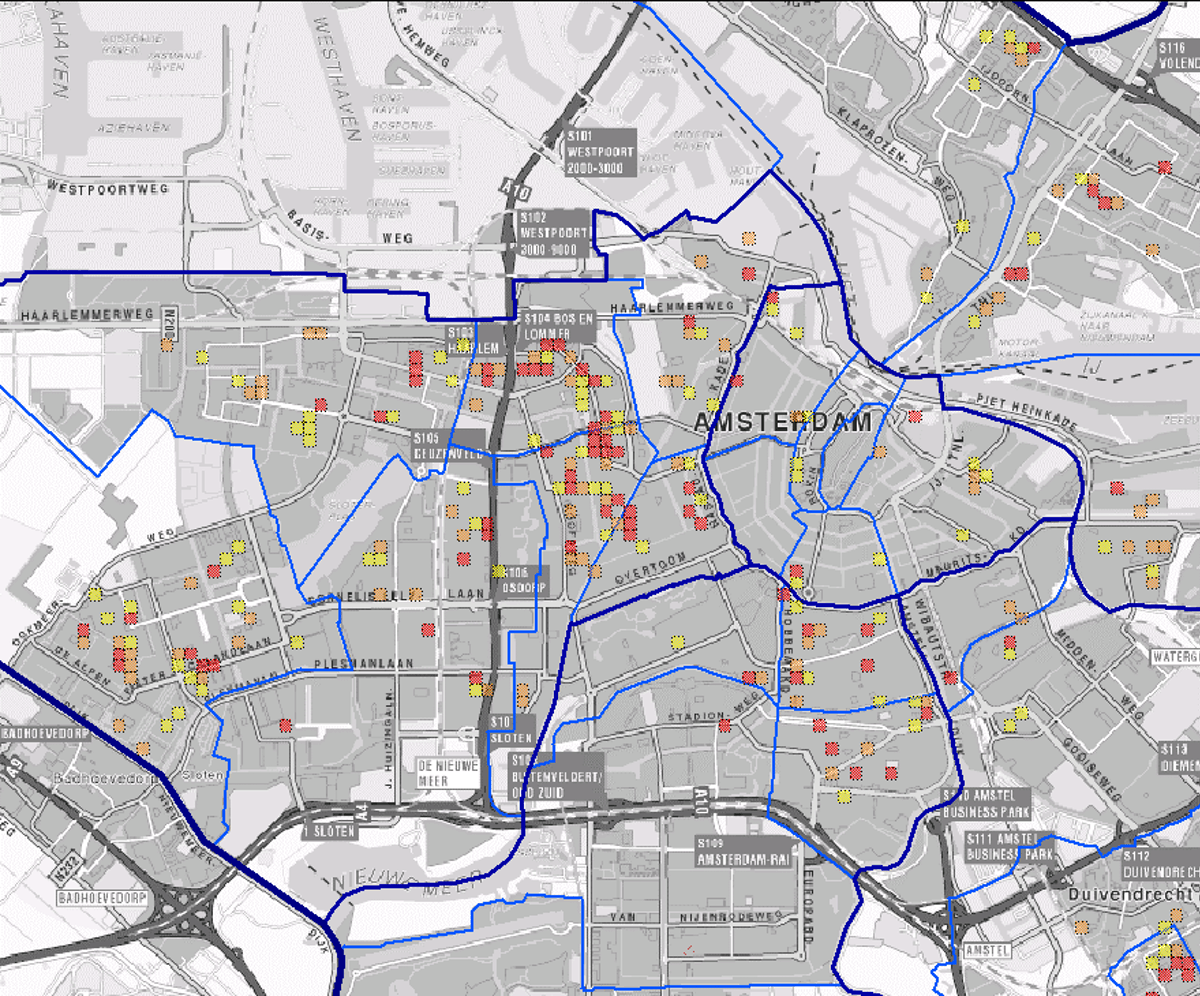

How fairly do algorithms predict where police should be most focused? Arnout de Vries

For example, U.S. police have eagerly embraced AI technologies. They have begun using software meant to predict where crimes will happen, and they use its output to decide where to send officers on patrol. They're also using facial recognition and DNA analysis in criminal investigations. But analyses of these systems show that the data on which they are trained are often biased, leading to unfair outcomes, such as falsely determining that African Americans are more likely to commit crimes than other groups.

AI Surveillance Around the World

In authoritarian countries, AI systems can directly abet domestic control and surveillance, helping internal security forces process massive amounts of information – including social media posts, text messages, emails and phone calls – more quickly and efficiently. The police can identify social trends and specific people who might threaten the regime based on the information uncovered by these systems.

For instance, the Chinese government has used AI in wide-scale crackdowns in regions that are home to ethnic minorities within China. Surveillance systems in Xinjiang and Tibet have been described as “Orwellian.” These efforts have included mandatory DNA samples, Wi-Fi network monitoring and widespread facial recognition cameras, all connected to integrated data analysis platforms. With the aid of these systems, Chinese authorities have, according to the U.S. State Department, “arbitrarily detained” between 1 and 2 million people.

My research looks at 90 countries around the world with government types ranging from closed authoritarian to flawed democracies, including Thailand, Turkey, Bangladesh and Kenya. I have found that Chinese companies are exporting AI surveillance technology to at least 54 of these countries. Frequently, this technology is packaged as part of China’s flagship Belt and Road Initiative, which is funding an extensive network of roads, railways, energy pipelines and telecommunications networks serving 60% of the world’s population and economies that generate 40% of global GDP.

For instance, Chinese companies like Huawei and ZTE are constructing "smart cities" in Pakistan, the Philippines and Kenya, featuring extensive built-in surveillance technology. Huawei has outfitted Bonifacio Global City in the Philippines with high-definition internet-connected cameras that provide "24/7 intelligent security surveillance with data analytics to detect crime and help manage traffic."

Bonifacio Global City in the Philippines has a lot of embedded surveillance equipment. alveo land/Wikimedia Commons

Hikvision, Yitu and SenseTime are supplying state-of-the-art facial recognition cameras for use in places like Singapore – which announced the establishment of a surveillance program with 110,000 cameras mounted on lamp posts around the city-state. Zimbabwe is creating a national image database that can be used for facial recognition.

However, selling advanced equipment for profit is different from sharing technology with an express geopolitical purpose. These new capabilities may plant the seeds for global surveillance: As governments become increasingly dependent upon Chinese technology to manage their populations and maintain power, they will face greater pressure to align with China's agenda. But for now it appears that China's primary motive is to dominate the market for new technologies and make lots of money in the process.

AI and Disinformation

In addition to providing surveillance capabilities that are both sweeping and fine-grained, AI can help repressive governments manipulate available information and spread disinformation. These campaigns can be automated or automation-assisted, and deploy hyper-personalized messages directed at – or against – specific people or groups.

AI also underpins the technology commonly called “deepfake,” in which algorithms create realistic video and audio forgeries. Muddying the waters between truth and fiction may become useful in a tight election, when one candidate could create fake videos showing an opponent doing and saying things that never actually happened.

In my view, policymakers in democracies should think carefully about the risks of AI systems to their own societies and to people living under authoritarian regimes around the world. A critical question is how many countries will adopt China's model of digital surveillance. But it's not just authoritarian countries feeling the pull. Nor is it just Chinese companies spreading the technology: Many U.S. companies, including Microsoft, IBM, Cisco and Thermo Fisher, have provided sophisticated capabilities to nasty governments. The misuse of AI is not limited to autocratic states.

> A critical question is how many countries will adopt China’s model of digital surveillance . . .

We already know the answer to that, and I am surprised such a naive question is asked. All countries.

Giving any politician the ability to use a technology to oppress all political opponents in one fell swoop and gain more power for themselves, while picking the pockets of those about to be oppressed to pay for the technology that oppresses them, is the operating principle. Technology has morphed into a great evil.

And with AI, when the inevitable mass public disaster happens (I view it as disastrous already), try putting the system on the stand to answer questions. That chip ain’t talking.

I disagree that all politicians think like that, but AI gives a new advantage to those who are willing to use dirty tactics, thus helping put them in power.

> I disagree that all politicians think like that,

Then they aren’t real politicians.

One can observe the digital noose tightening in real time, measured in weeks and months, and as the knot keeps getting pulled tighter, it's starting to chafe the peasants. I beg to differ with those who believe all this data collection and surveillance is ultimately going to be useful. It's mostly being done because data storage capacity is infinite and cheaper than dirt, especially when you count the special deals the data storage companies get on power.

Competing AI algorithms are set loose on this data, and my sense is that it's mostly a garbage-in, garbage-out game. It's also a given that AI will be used to do harm intentionally by some actors. After all, it's just a tool, until it isn't.

Indeed, the terms "AI" and "smart" are themselves quite deceptive. I take no comfort from living in a democracy rather than under an authoritarian regime. Nor do the laws that supposedly protect personal data make me more confident. The surveillance will simply be more subtle or hidden.

Exactly!

Following up on cnchal’s point above, the author’s technical knowledge seems hampered by a kind of political naïveté, wherein the U.S. regime, for example, although it has historically pursued and continues to pursue the very uses of technology the author associates with “authoritarian” governments, is somehow still not considered an “authoritarian” government. Rather the U.S. shows us that even governments committed to the “rule of law” [like the U.S. haha!] act just like authoritarian governments do when it comes to data collection and monitoring.

Except perhaps it turns out that “flawed democracies” like the U.S. are not quite as honest about what they’re up to as are “closed authoritarian” regimes.

I mean, the U.S. is currently contemplating the state execution of a journalist for the crime of publishing details about (among other things) the CIA’s interests in this and closely related fields as tools of social manipulation. Can’t let that cat out of the bag can we!

Whilst this may be a stretch, I am surprised you did not mention Google’s plan to build a smart city in Toronto…

Collateral damage coming?

In reality, complex machine learning still remains a remarkably opaque process even for AI experts. In 2017, for instance, a study by JASON researchers — an independent group of scientific advisers to the federal government (whose contract was reportedly recently terminated) — found testing to ensure that AI systems behave in predictable ways in all scenarios may not currently be feasible. They cautioned about the potential consequences, when it comes to accountability, if such systems are nonetheless incorporated into lethal weapons.

http://www.tomdispatch.com/post/176557/tomgram%3A_allegra_harpootlian_and_emily_manna%2C_the_ai_wars/#more

“Even governments in democracies with strong traditions of rule of law find themselves tempted to abuse these new abilities.”

Sputter… “tempted”…? Uh, has the author of this article been asleep for the past ten years?

Massive data collection by the NSA? Metadata? Snowden? William Binney? Utah data center? Director of National Intelligence James Clapper repeatedly lying to Congress? …?

Langley to Mr. Feldstein, come in, please.

Not to mention the millions of surveillance cameras installed in the UK, on every street, every bus and every train.

Apparently he doesn’t think the US has “strong traditions of rule of law.”

He’s right. “Methinks the lady doth protest too much.”

Hang onto your old mimeograph machines; we may need them.

Some amazing organizing was based on them, and other cheap, non-digital printing modes.

You can’t kill what’s already dead. Since when has public opinion mattered on anything of real substance?