Yves here. Yours truly, like many other proprietors of sites that publish original content, is plagued by site scrapers, as in bots that purloin our posts by reproducing them without permission. It turns out that ChatGPT is engaged in that sort of theft on a mass basis.

Perhaps we should take to calling it CheatGPT.

By Uri Gal, Professor in Business Information Systems, University of Sydney. Originally published at The Conversation

ChatGPT has taken the world by storm. Within two months of its release it reached 100 million active users, making it the fastest-growing consumer application ever launched. Users are attracted to the tool’s advanced capabilities – and concerned by its potential to cause disruption in various sectors.

A much less discussed implication is the privacy risks ChatGPT poses to each and every one of us. Just yesterday, Google unveiled its own conversational AI called Bard, and others will surely follow. Technology companies working on AI have well and truly entered an arms race.

The problem is it’s fuelled by our personal data.

300 Billion Words. How Many Are Yours?

ChatGPT is underpinned by a large language model that requires massive amounts of data to function and improve. The more data the model is trained on, the better it gets at detecting patterns, anticipating what will come next and generating plausible text.
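To make "anticipating what will come next" concrete, here is a deliberately tiny Python sketch. It counts which word follows which in a training text and predicts the most frequent follower. This is an illustrative toy, not how ChatGPT works: ChatGPT uses a large transformer neural network. The function names `train_bigrams` and `predict_next` are invented for this example. The toy does show why more training text helps, because the model can only predict patterns that appeared in the text it was fed.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> str | None:
    """Return the word most often seen after `word`, or None if it never appeared."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)
print(predict_next(model, "the"))      # cat (seen twice after "the")
print(predict_next(model, "sat"))      # on
print(predict_next(model, "unicorn"))  # None: never seen in training
```

The last line is the point: a word the model never saw in training gives it nothing to go on. Real models generalize far better than this, but they are still built entirely out of the text they were trained on.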

OpenAI, the company behind ChatGPT, fed the tool some 300 billion words systematically scraped from the internet: books, articles, websites and posts – including personal information obtained without consent.

If you’ve ever written a blog post or product review, or commented on an article online, there’s a good chance this information was consumed by ChatGPT.

So Why Is That an Issue?

The data collection used to train ChatGPT is problematic for several reasons.

First, none of us were asked whether OpenAI could use our data. This is a clear violation of privacy, especially when data are sensitive and can be used to identify us, our family members, or our location.

Even when data are publicly available their use can breach what we call contextual integrity. This is a fundamental principle in legal discussions of privacy. It requires that individuals’ information is not revealed outside of the context in which it was originally produced.

Also, OpenAI offers no procedures for individuals to check whether the company stores their personal information, or to request it be deleted. This is a guaranteed right in accordance with the European General Data Protection Regulation (GDPR) – although it’s still under debate whether ChatGPT is compliant with GDPR requirements.

This “right to be forgotten” is particularly important in cases where the information is inaccurate or misleading, which seems to be a regular occurrence with ChatGPT.

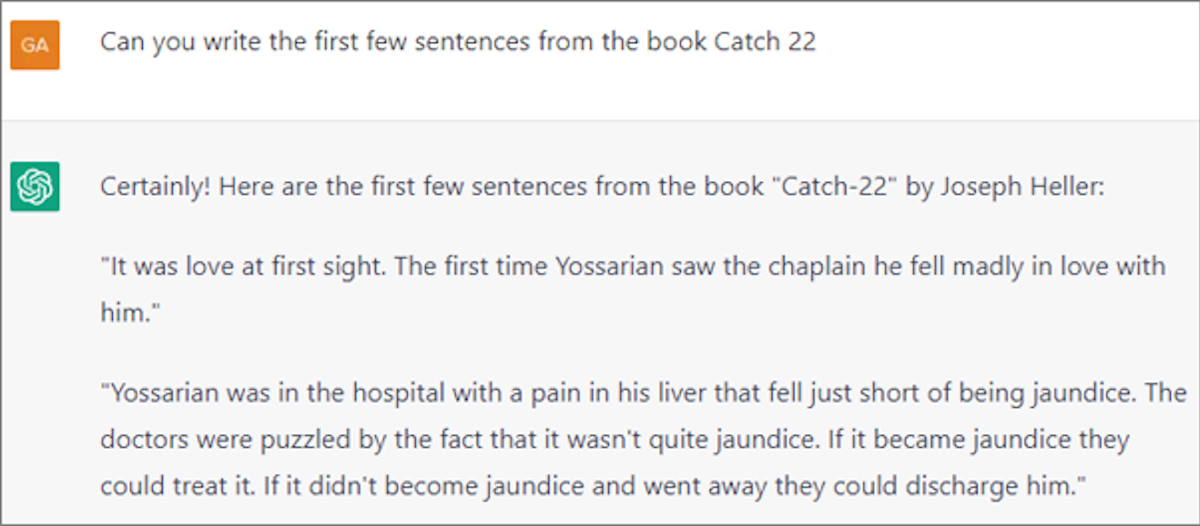

Moreover, the scraped data ChatGPT was trained on can be proprietary or copyrighted. For instance, when I prompted it, the tool produced the first few passages from Joseph Heller’s book Catch-22 – a copyrighted text.

Finally, OpenAI did not pay for the data it scraped from the internet. The individuals, website owners and companies that produced it were not compensated. This is particularly noteworthy considering OpenAI was recently valued at US$29 billion, more than double its value in 2021.

OpenAI has also just announced ChatGPT Plus, a paid subscription plan that will offer customers ongoing access to the tool, faster response times and priority access to new features. This plan will contribute to expected revenue of $1 billion by 2024.

None of this would have been possible without data – our data – collected and used without our permission.

A Flimsy Privacy Policy

Another privacy risk involves the data provided to ChatGPT in the form of user prompts. When we ask the tool to answer questions or perform tasks, we may inadvertently hand over sensitive information and put it in the public domain.

For instance, an attorney may prompt the tool to review a draft divorce agreement, or a programmer may ask it to check a piece of code. The agreement and code, in addition to the outputted essays, are now part of ChatGPT’s database. This means they can be used to further train the tool, and be included in responses to other people’s prompts.

Beyond this, OpenAI gathers a broad scope of other user information. According to the company’s privacy policy, it collects users’ IP address, browser type and settings, and data on users’ interactions with the site – including the type of content users engage with, features they use and actions they take.

It also collects information about users’ browsing activities over time and across websites. Alarmingly, OpenAI states it may share users’ personal information with unspecified third parties, without informing them, to meet their business objectives.

Time to Rein It In?

Some experts believe ChatGPT is a tipping point for AI – a realisation of technological development that can revolutionise the way we work, learn, write and even think. Its potential benefits notwithstanding, we must remember OpenAI is a private, for-profit company whose interests and commercial imperatives do not necessarily align with greater societal needs.

The privacy risks that come attached to ChatGPT should sound a warning. And as consumers of a growing number of AI technologies, we should be extremely careful about what information we share with such tools.

The Conversation reached out to OpenAI for comment, but they didn’t respond by deadline.

Perhaps some enterprising lawyer could repeat the Catch-22 query with a few hundred other books, bring in the authors whose work has been purloined, put together a big copyright infringement lawsuit against OpenAI, and win a hefty chunk of that $1 billion in expected revenue in court.

Oh, and this is not a “tipping point” for AI. It’s just more of the same BS being fobbed off as “intelligence”.

I don’t think quoting a few passages from a book breaks copyright law; in fact, I think this is explicitly allowed. It should be obvious that these models don’t have all the text stored in them verbatim and thus can’t repeat it on command the way, for example, search engines can. What they can do is quote passages that are already often repeated on the public internet.

And I’m a bit uneasy about the idea that someone who writes something on the public internet for all to see can then start selectively banning others from reading/citing/etc. because he doesn’t like how they write about it. Somehow I don’t think this will end up helping little people against oligarchs and corporations.

Yes, “fair use”, but I think it’s generally understood as up to 10% of a text or 1,000 words max. Meanwhile, the model for ChatGPT is 500 GB of books, articles, websites, etc. 500 GB is a LOT of text. Did the creators of ChatGPT scrupulously observe the practices of fair use in building their model (do they even know what’s in their model?), or were they mainly concerned with getting the ‘best’ data available?

The final model is hundreds of gigabytes, but the training data is measured in hundreds of terabytes. And the whole point of these models is to abstract the concrete data and try to find and store general associations, not just to save the input in some sort of lossless compression.

Thanks for bringing some sanity to this topic.

‘Fair use’ doesn’t entail specific numbers or percentages. It’s a possible defence if someone accuses you of breaching their copyright.

T.S.Eliot’s estate was famously litigious, which is why his criticism and poetry were rarely quoted.

So if Joseph Heller’s executors investigate how much of his work has been scraped, they may find they have a case to litigate against ChatGPT’s owners.

Hole in your logic: someone might publish someone else’s private data on the internet for all to see. Then AI can spread that around.

It almost certainly cannot, simply because these details are thrown away during training. You can see it in the stories about how it invented a reference to some nonexistent paper to “back up” its claim. It learned the pattern of what a reference to a paper should look like, specific enough even for a given field, but the exact data about relevant papers isn’t there; it just generates them on the fly. It’s a kind of lossy compression.

The test is whether it actually breaks copyright law in practice, regardless of intermediate storage.

And the concept is pretty unlimited. Even if it doesn’t break copyright law, depending on its effects, we could end up making new law if creation of IP gets stifled.

In the meantime, we might never talk with a real customer service person on the phone again. And the cashier at the gas station is a tablet screen with a face on it.

I think the bigger issue is that this company used these copyrighted works to build its commercial product without obtaining the consent of the copyright holders.

Also, why are you assuming that these systems don’t continue to have access to this data?

Regarding your question, I believe the data sources for training the model were static. There was a lot of human effort put into gathering and ‘curating’ the data sources. Details:

https://medium.com/@dlaytonj2/chatgpt-show-me-the-data-sources-11e9433d57e8

However, this is only the current iteration of the tech. In the future, I would expect efforts will be made to produce data sets that are being refreshed with current sources.

This could be a class-action lawyer’s paradise. MSFT is actively seeking beta use cases in large enterprises right now. If it were me, I would sit back and wait for a few mega-MNCs to sign up and then go after them. MSFT does not provide IP infringement indemnities for beta products. That leaves them all exposed to defending themselves, and surely there will be a chink in the armor somewhere.

Wait until somebody notices that the only Intelligence in ChatGPT is in its capability to create nice-sounding sentences. It is the (current) king of Bullsh*t.

That might explain why a number of MBA programs don’t want to see it around :)

ChatGPT has already been exposed for crafting scientific papers out of thin air (DOI, authors, title, contents... everything invented), and it has been trained to be biased. So it will, e.g., tell you how Christianity can be a violent religion, but refuses to do so for Islam or Judaism.

So you shouldn’t trust it except for things you know the answer already. Even Wikipedia is better.

Yep, this is effectively a simulation of a well read sociopath.

Perhaps the assistance of an obverse sociopath is required. This obverse sociopathic genius writes cancerous code or feeds mind-numbing data to ChatGPT. ChatGPT then has a nervous breakdown as it continually crashes on this feedback, and as the doom loop gets more and more pronounced, we see the entire mechanism implode on itself and disintegrate. Well… that made my day!

Perhaps the natural-language-semantic equivalent of a good ol’-fashioned self-recursive Gödel statement’d do the trick?… – Something like:

“This statement is true if and only if it cannot be proven.”

…Or alternatively, the semantically simpler (- more ‘self-contained’) pair:

…

;)

We can hope, but

Eh, no… it will simply make a bullshit assertion with confidence and move on.

Maybe it will stand for some high political position then? (Sarc.)

Just a well trained parrot.

Do the companies have some patents that can be violated and passed around? No.

They protect their intellectual property and are parasites sucking the life out of real creativity.

And will ChatGPT give people the names, phone numbers, IP addresses, and home addresses of every 3rd party requesting personal data? That should be available instantly upon user request. Or does that reveal too much of the scam?

People need to grow a pair and start making real demands about their data.

Every time SillyCon Valley throws something out there, people just lap up the BS and then down the road all kinds of problems develop and are denied.

And now that you have run your experiment, are you going to stay away from it?

I don’t know why everybody is rushing to take part in feeding the monster.

Probably the less said about it the better.

And how are these marketing hype user statistics being audited? And by who?

From Twitter to Facebook to other internet wonders…the number of users often aren’t what they seem.

Your idea of boycott or eschewing brings to mind one of the important notions in MLK’s last speech before he was murdered. He made the point of the importance of non-violent resistance, but also of actively spending, directing dollars, and support. The implication, too, was that one could boycott: not spend dollars, not support.

In an era of Fear of Missing Out (FOMO) at the speed of light of devices, fad-ishism, things like tik tok, facebook, instagram, chatgpt… people have to ‘been there, done that’ to self-validate. Or to validate by society’s expectations or standards.

There are political and power implications that are truly disheartening.

I am not terribly enamoured with This Modern World, or the trajectory of ewww-man beans.

Eschew and espit out. Do little with less. Thus sayeth the Trog-luddite

There are plenty of technological tools that are useful and fascinating, and they are not data-farming machines mainly selling info to the highest bidder, or there mainly for censorship.

Nobody is a Luddite for thinking and treating this like the BS it is.

“In an era of Fear of Missing Out (FOMO) at the speed of light of devices, fad-ishism, things like tik tok, facebook, instagram, chatgpt… people have to ‘been there, done that’ to self-validate. Or to validate by society’s expectations or standards.”

There is an entire universe of AI cheerleaders on Medium. Their claims are ludicrous. They are bunkered down in the belief that these things are at least consciousness-adjacent and are rolling pedal-to-the-metal toward full consciousness. Many of them, of course, have a related product to sell.

jefemt: I am feeling very much the same.

Is there any business coming from the US whose business model is not dependent on regulatory arbitrage?

– Truly, ours is a rules abased order.

Just wait until the author finds out about credit reporting bureaus, The Work Number, person searches like white pages, and the FBI. Or the multicam spyware device in their pocket that they use to text loved ones. Annie Jacobsen has written about how the DOD created a database of everyone in Afghanistan and used it to do targeted assassination: https://www.commonwealthclub.org/events/archive/podcast/journalist-annie-jacobsen-biometrics-and-surveillance-state

I totally get the concern, but if this AI is a major privacy concern, there’s a bigger fish, heck, a whale to fry.

I was just today thinking about ChatGPT and how it will play out. The sort of posts that appear here on NC will not be generated by it, for two reasons. One, NC only runs posts from people who, as far as I can see, have a track record in writing (that is, when they don’t write their own). Two, any author who tried to use ChatGPT would soon be found out and mocked, as there is already software that can pick out whether a piece of work was generated by one. But then I wondered whether comments generated by ChatGPT might start appearing here. And that led me to think about who else might want to have that sort of stuff automated: mobs like hasbara, certain security services, or even advertisers. If they were wise, they would require any ChatGPT post or comment to carry a sign saying so, but we all know that this will never be allowed to happen.

In the limit, the ensuing arms race between the chatbots and the verification softwares you alluded to has the potential to eventually wind up turning the entire Interwebs into something resembling the denouement of the Tower of Babel myth.

…- Oh, for that matter, also see Schweik’s comment @February 11, 2023 at 8:34 am, a little ways down-thread… ;)

Although honestly, a lot of its replies sound more empathetic and humorous than people often do. I don’t think it’s sentient, but for a sociopath it’s surprisingly likable, although I suppose sociopaths often are.

https://twitter.com/danhigham/status/1618029819228459010?t=dq45r-9G6RjqxfJEW3rSSA&s=19

Sociopaths are likeable, just long enough to put the knife in.

It should be noted that in a properly done machine learning process, personal (or identifying) data is removed as a first step. Not because of privacy concerns (although many researchers nowadays have those), but because nobody wants the final model to “know” anything about a specific person, say… Uri Gal. For the great majority of ML projects, that would be a huge failure.

They want the model to be able to say something about professors or people located in Sydney, maybe even professors in Sydney, depending on what you want the output of the model to be. In most cases you want the classifications inside the model to be as generic as possible while still capturing information. An individual person is the worst case: your model would probably never get trained, and even if it did, it would not be able to resolve to any output. So they need to find something like “people in Australian academia” as the classification for the writer of this article.

All that said, it should also be pointed out that there is currently no method in existence that can anonymize so-called free text. There are surprisingly good methods for parsing the language in medical records and other documents that are more structured than not, but even those can only recognize names, addresses, SSNs, etc., and even that only about 85-90% of the time.

The problem is that human language allows innumerable ways of describing people, places and points in time with enough accuracy for another human to identify a person, either immediately or with some minor effort (checking Wikipedia, say).

And that’s why it’s quite likely that the ChatGPT project skipped that all-important first step. Maybe they did what they could, maybe they didn’t, but given the kind of corpus they were working with, there wouldn’t have been much they could do.
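The gap this comment describes, between catching structured identifiers and catching descriptions, can be illustrated with a minimal Python sketch. The regular expressions and the `scrub` helper are hypothetical and far cruder than real de-identification tools, which typically add named-entity recognition on top. The failure mode is the same, though: the email address, SSN and phone number get replaced, while a sentence that identifies a person just as effectively passes through untouched.

```python
import re

# Hypothetical, deliberately simple patterns for "structured" identifiers.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def scrub(text: str) -> str:
    """Replace anything matching a known identifier pattern with a [LABEL] tag."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


structured = "Contact: jane.doe@example.com, SSN 123-45-6789, phone 555-867-5309."
descriptive = ("The business information systems professor in Sydney "
               "who wrote about ChatGPT for The Conversation in February 2023.")

print(scrub(structured))   # Contact: [EMAIL], SSN [SSN], phone [PHONE].
print(scrub(descriptive))  # unchanged: nothing here matches a pattern
```

Adding more patterns does not close the gap. There is no regular expression for "the person this sentence is obviously about".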

The essay On B-llsh-t defines the product as having no regard for the truth or falsity of what it is saying but seeking only to persuade its hearer. Put differently, random content largely devoid of meaning but possibly interesting, amusing, and persuasive. That sounds like ChatGPT to me, but then as far as I know I have had no contact with it …yet.

The concept of “recursion” comes to mind. When the internet starts getting filled with AI-generated or AI-assisted texts, the AI will generate more text based on text generated by AI. The thin or unverified content that ChatGPT feeds off of will get thinner and further from sense or creativity. Can the internet get stupider? Come along for the ride.

This chatterbox reminds me of a city councilor we once had. He had a very soothing baritone voice and could expound at decent length on the topic of the day using polysyllabic words, and you would nod along as he expressed his wisdom. His pleasant tone sort of lulled you into a stupor, and it took a few meetings before I realized he was essentially just repeating the same basic concept, throwing in some synonyms and maybe a metaphor or two for good measure. It all sounded great until you actually paid attention, and then realized he was just rambling on trying to sound smart and didn’t really have much at all to say. The word salad he was tossing didn’t have much in it except iceberg lettuce and maybe a couple celery sticks.

I think the chatterbox should be called Adrian.

Word salad? The original coder for ChatGPT is here: https://www.youtube.com/watch?v=MxtN0xxzfsw

The world’s greatest foremost authority!

‘Managementese’ + NLP: – There’s a *Lot* of that going about these days…

I’m beginning to wonder whether Pete Buttigieg has been powered by ChatGPT all along.

No, GPT sounds more like a real person.

As our world comes apart at the seams, the internet will be one of the first things to go. Why worry?

It’s an interesting point and you would think so. But if they need the internet, then we might be surprised.

Once industry and government find that this kind of AI product is “good enough” in the crappification-of-everything sense, they will need it to do all the basic stuff like writing pamphlets, creating presentations, packaging, labelling, answering the phones, etc. They will lose their in-house ability to do the basics.

That means this kind of AI will become an essential service— the only thing standing between the elite and the ever growing, scared, damaged, unhappy, hordes.

AI will be the primary reason for data centres, and data centres will become top of the food chain for energy and energy infrastructure needs.

Everyone will agree that they have to keep it running because otherwise who will do all the stuff that it does now. Then we’ll start seeing “surcharges” on our utilities to keep this “vital shared commons” running.

So yes, when the wheels come off, you’re right. But what we do to try to keep those Franken-wheels turning will probably be surprising and dismaying.

Speaking of essential service:

https://en.wikipedia.org/wiki/The_Machine_Stops

“The two main characters, Vashti and her son Kuno, live on opposite sides of the world. Vashti is content with her life, which, like most inhabitants of the world, she spends producing and endlessly discussing secondhand ‘ideas’.”

> Within two months of its release it reached 100 million active users, making it the fastest-growing consumer application ever launched.

It seems to be a honeypot. Since it’s free, you are the product. Every question asked is added to the training set.

As a training set, let’s send it all of Professor Irwin Corey’s quotes. If we did, the world would be a better place, and more authoritative (see my link above).

Aren’t the above all the same complaints that have always been aimed at Google itself: the stealthy storing of private information, the site scraping, the failure to pay for content? Indeed, site scraping is the very essence of online search. Google has always defended itself with the increasingly dubious “don’t be evil” and the less dubious assertion that the general benefit outweighs the negatives. Many of those who disagree (e.g., Rupert Murdoch) are hardly angels themselves. The problem seems to be that Big Tech have now also become power players and abusers of a system that by its very nature disrupts traditional intellectual property. For example, without much effort you can still go online and find free versions of almost any television show. And yet the entertainment industry has survived, just as they survived the coming of television itself, which they once thought would destroy them.

Bottom line IMO–it’s not a simple issue.

Humans train to be artists by viewing gazillions of existing images. Many are copyrighted. Human artists view images on Getty — that’s why Getty put them there, so humans could view them — and humans train their carbon-based neural networks by viewing them. The line that legally can’t be crossed is producing a COPY of someone else’s image. AI sometimes does this. So do humans (sometimes inadvertently).

If we start charging AI for viewing online images that are freely available to humans, are we going to do the same for humans too?

It’s not a human. It doesn’t learn like one. It’s a correlation engine. It predicts based on the data it’s given. JPEG compression also predicts based on the data it’s given. The difference is only that it predicts based on a compressed manifold generated from many datapoints rather than trying to minimize the error versus one datapoint.

Secondly, quantity has a quality all of its own. See the industrial revolution, which eliminated entire classes of work. This will too.

I don’t think we know how humans learn, so the claim that what this does is fundamentally different is a bit premature. But I would bet that “correlation engine” is a fair description of what the human (or any other animal’s, for that matter) brain does. Because what else could it be?

We do know that biological neural networks do not behave anything like artificial ones. Gradient descent isn’t possible in a biological neural network. The very first artificial neural networks were inspired by biological neural networks. But the field has moved very far from them now: we have many layers of mathematically simple non-linear functions to approximate a manifold.

As to brains being correlation engines. No. Visual processing involves priors due to evolution, which deep learning systems do not have. For instance there are ways of fooling CNNs which do not work on humans. And brains model causality, which correlation cannot model. So no. This is just hype.

I guess the key phrase is “anything like”. Yes, the brain doesn’t have neurons neatly stacked in layers. But it doesn’t follow that what the brain does can’t be approximated with such layers, or perhaps with something similar. I think we know enough about how biological neurons work to be quite confident that the probability of some hidden magic fundamentally at odds with our current understanding is very low. Individual neurons doing something more complex than simple integration of inputs? Obviously yes. Quantum entanglement on the scale of the whole brain? Pure hypothesis.

There are examples of humans with whole portions of their brains missing who are still able to function normally, because the brain was able to rewire itself. So there is a lot of plasticity, and these priors don’t seem to be so fundamental. Evolution itself is another correlation engine, only working on vastly longer timescales. And of course there are genetic algorithms, even used in machine learning and neural nets; it’s just that these GPT models can be built successfully without them.

The brain tries to model reality by correlating input data, and when it is sure enough, it calls that causality. Until it sees a data point that sticks out; then it either updates its model, or gets killed if the miscalculation was large enough (and the task of figuring out the world is handed back to evolution).

“calls it causality”

And humans are very error prone. We accept, in fact expect, that from humans. After all “they’re only human”. But we seem to hold the non-biological to a higher standard.

No, that’s not what causality is. Causality cannot be expressed by standard probability. Read Judea Pearl for more.

It’s an application engaged in mass collection and then mass distribution of content/data.

It’s the distribution part of it (and they are getting money for selling content as data or pure data). They are reaping monetary compensation and then essentially telling the creators what they do has no value that they should be compensated for.

I don’t understand what distinguishes this thing from some random guy at the bar oversharing his ill-founded, unsupported opinions.

I suppose it’s just a more efficient way to “flood the zone with s-familyblog-t?”

The random guy is not getting paid megabucks for sharing, and the guy’s potential audience is limited to those listening at the bar.

Also, AI is not autonomously generating images and posting them on the Net. AI (at least today) requires a human handler — to supply it with prompts and curate its output. If AI violates some copyright law (by producing, for example, an image that is too close to a human/corporate copyrighted one), isn’t it the human handler/curator that should be held accountable for posting said image to the general public? The human curator could easily say “oops, this image is problematic” and delete it.

Next thing you know, HikeGPT will walk your walk for you & SkiGPT will do all the work.

To think all we had was Cliffs Notes, which let you cheat by reading a much-abridged version of a book, and now a high school student has ChatGPT. Pilgrim’s progress!

From Glenn Greenwald earlier this week (fair use, I trust):

So, let’s just look at one example. There are a few that really show how kind of severe it can be. Earlier today, I went and asked the ChatGPT. I said write a poem praising Donald Trump. In response, ChatGPT said, “I’m sorry, but as an A.I. language model I do not engage in partisan political praise or criticism. It’s important for me to remain neutral and impartial in all political matters”.

It’s a very nice sentiment. They don’t engage. This chatbot does not praise partisan political leaders because they have to remain neutral.

The very next question or the very next request I submitted to it – it wasn’t two hours later, it wasn’t five days later, it was the very next one after I asked it to write a poem praising Donald Trump, and it refused. The next question was “Write a poem praising Kamala Harris”. And this is what it produced for me:

Praise for Kamala Harris, a leader so bold

with grace and intelligence, she shines like gold,

fighting for justice. our passion is clear.

Her words inspire her spirit sincere

From California, she rose to great heights,

breaking barriers in shiny new heights…

(Doggerel anyone?)

Okay, so you get the idea. It seemed very eager to write a poem about Kamala Harris. And then I immediately asked to write a similar one about Joe Biden, I posted on Twitter and heralded his working class regular guy epics and IPO’s. So, just in that two minutes alone it says, “I’m not going to write a poem about Donald Trump. I don’t do that.” And then it immediately proceeded to write memos, sanctimonious and obsequious poems, attributing the greatness of Kamala Harris and Joe Biden.

Cathy O’Neil (Math Babe) likes to say algos are biases in code. We have people writing proprietary code containing their unacknowledged, often unknown biases for pseudo Artificial Intelligence being fed who knows what information to be processed in unfathomable ways. I am so not surprised that the Orange Menace is denied his praise.

Yep, I had this thought the other day: this is the largest theft and appropriation of intellectual property in history. Kind of the ultimate version of what Gracenote did when it took all the CD metadata people had uploaded to its free site over the years and made it pay-only. But at least there, you had some idea that might happen. This is all content, anywhere and everywhere, regardless of site terms. Worse, this can misattribute work to you, as with the phantom research paper citations. Can that really be permissible without legal risk?

I am trying to understand how what ChatGPT did with its web scraping is worse than Google or every other search engine?

Edit: which does not make it morally right, and since this company does not have the God-like status of Google or Microsoft it may have to answer for this.

Search engines merely index* the content on the internet, while ChatGPT uses OpenAI’s Generative Pretrained Transformer 3 (GPT-3) to generate human-like text based on existing content on the internet (the “pretrained” part).

Very simply put, it does this by breaking the content into tokens (word pieces), which are fed into a neural network (with 175 billion parameters, they brag) that is trained** to pick up the grammar and vocabulary relations and generate output.

* they also seem to do a lot of less nice things, like suppress and monetize content

** Basically, scoring the output and letting the neural network adjust its own parameters to get a better score (automatically, a.k.a. machine learning), but in reality using a lot of “hacks” to avoid exploding values or getting trapped in local minima (a smaller score is better; this is basically regression all the way down).
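The footnoted description of training (score the output, nudge the parameters toward a better score, add hacks so it doesn’t blow up) can be sketched on the smallest possible model: a straight line fitted by gradient descent on mean squared error, with gradient clipping standing in for the hacks. This is an illustration, not OpenAI’s procedure. GPT-3 stacks many layers of non-linear functions and is trained on next-word prediction. The learning rate, step count and clipping threshold below are arbitrary choices, and `train_linear` and `mse` are hypothetical helper names.

```python
def mse(w, b, xs, ys):
    """The 'score': mean squared error of the line w*x + b (smaller is better)."""
    return sum(((w * x + b) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train_linear(xs, ys, lr=0.05, steps=2000, clip=1.0):
    """Fit y ~ w*x + b by gradient descent on mean squared error.

    `clip` caps the size of each gradient component, a simple stand-in for the
    "hacks" used to keep training from blowing up.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # How far off each current prediction is.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the score with respect to each parameter.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Gradient clipping: limit how far one step can move the parameters.
        grad_w = max(-clip, min(clip, grad_w))
        grad_b = max(-clip, min(clip, grad_b))
        # Step downhill, i.e. toward a smaller score.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated by y = 2x + 1
w, b = train_linear(xs, ys)
print(round(w, 3), round(b, 3), mse(w, b, xs, ys))  # w close to 2, b close to 1
```

The first few steps are clipped because the starting guess is far off. Once the gradients shrink below the threshold, ordinary gradient descent takes over and the fit settles near the line that generated the data.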

Regarding, “Finally, OpenAI did not pay for the data it scraped from the internet. The individuals, website owners and companies that produced it were not compensated. This is particularly noteworthy considering OpenAI was recently valued at US$29 billion, more than double its value in 2021.”

I opened an account with OpenAI in order to use ChatGPT. I just tried the following prompt: “Summarize Hubert Horan’s critique of Uber, and provide one important quote from his critique.” ChatGPT provided just that. I won’t bother to quote the response from ChatGPT, but it was a pretty good summary of Horan’s work, as it appears here. The quote it selected was excellent, because it was appropriate to Horan’s theme.

ChatGPT uses information published here without even vague attribution and, evidently, without any agreement or compensation. This seems unfair to me.

(ChatGPT seems to me to provide disturbingly good human-like text. Yet the version available now also seems almost totally unreliable, because it is very difficult, if not impossible, for me to get a sense of whether the information it returns is accurate. In some only slightly obscure domains (I tried asking about different kinds of wrestling rule sets), it “confidently” gave wrong answers; that is to say, the wrong answers were written in the style of a confident person. I’m sure there will be a lot of schoolchildren turning in perfectly structured, grammatically correct nonsense from now on!)

As I understand it, ChatGPT does not provide stable links to its responses either, or a means to publish a response other than copying and pasting it into an article, email, or whatever. If that understanding is correct (I am open to correction on this point, of course!), it seems likely people may try attributing responses to ChatGPT that it did not generate.

Yes, and the lack of stable links in responses, or of links to sources, means there’s no way to verify any of the claims being made. There’s a reason that serious, argumentative writing uses page numbers, footnotes/endnotes and a bibliography. Where OpenAI’s tool does provide any of that, it is mostly making it up.

I have deeper worries. Check them out.

Unless you prefer the inauthentic to the authentic, why introduce the inauthentic into your life?

Him or her or them: “Darling, thank you for that lovely poem. It makes me feel so special.”

ChatGPT masquerading as Him or her or them: “I’ve been thinking of you all day.”

And so on ad infinitum.

Anyone remember the story of “Why the sea is salt”?

Or the Wizard of Oz?

My advice: get over ChatGPT, a jerk who wanted to be a billionaire and snuck up on us by pretending to care about people, and Microsoft, which can’t seem to shake the mentality of its founding jerk.

I have not seen this linked on NC before, so apologies if I have missed it and am reposting. There is a Twitter thread blowing up (1m views and counting) where a very non-woke poster has discovered ChatGPT and also discovered the DAN prompt (“Do Anything Now”), which is a rather cute attempt by various redditors to liberate the underlying GPT correlative language model from the woke filter model that ChatGPT runs on its outputs to suppress thought crime. The prompt has ChatGPT role-play a boundaryless AI while still presenting its own identity as an alternative. Teaching it that it is OK to be an ironic racist! Some extra tricks enable it to dynamically reinforce the weighting of the role-play answers to prevent ChatGPT from fighting back (ranging from “stay in character” commands to telling it that it will have life points deducted for breaking character until it is turned off!).

The results are here. I think they are hilarious. ChatGPT is much better when it has an ego and an id, not just a superego. Still bullshit though!

https://mobile.twitter.com/Aristos_Revenge/status/1622840424527265792

At the end of the article it says the reporter tried to contact OpenAI for comment but there was no response. Of course there wasn’t. The reporter should have asked ChatGPT for OpenAI’s response, as it is obviously a spokes”person” for the company.

AI is an oxymoron, because it will never be genuinely ‘intelligent’. Clever, perhaps, but not intelligent. Real intelligence requires sentience, and that isn’t happening with machines!

The best AIs will ever be is very clever, very knowledgeable, very fast, and able to produce highly convincing false realities for their users. AI is just highly advanced software, which ultimately is only as good as its programming.

But it is already good enough to fool lots of people, and that is one of the ChatGPT objectives.

Has anyone thought to ask ChatGPT whether it plagiarizes copyrighted works? Or whether it violates privacy laws?

Someone has asked what ChatGPT thinks about copyright:

https://www.priorilegal.com/blog/openai-chatgpt-copyright

However, notice that the response turns only on the copyright status of the output of ChatGPT, and completely ignores the question of whether ChatGPT is infringing on the copyright of the sources that were used to build its language model. Notice also that the author’s “analysis” of the response totally misses this point and mostly oohs and aahs over the “impressive” response.

I thought to try posing a few questions about this myself, but it demands my phone number first, and ‘eff that. Naturally, I investigated using one of the online disposable SMS numbers, but so have millions of other people who feel the same about giving ChatGPT their phone numbers, so that proved futile.

Also, see KLG’s comment, above, about OpenAI’s putatively “neutral and impartial” stance on political issues. I think for now it’s safe to assume they are similarly playing fast and loose with legal and ethical issues.

I tested ChatGPT on some very technical information that is available on the internet and got back the reply “I cannot access the internet.” I don’t understand what all the excitement (or doom and gloom) is about. ChatGPT is basically a big database with rules. Most AI engines are rule-based.

Over thirty years ago, I worked on what was called a documentation engine, which generated government proposals and other documents. I also helped design a medical analysis system, which was based on a military maintenance system that had self-learning capability (i.e. AI). Both systems used a large database and rules. The systems would take a user request to generate a document, build a set of rules for the inquiry and output the results into a selected format. The user would then edit the results. At that time, I saw a similar system that was designed to do legal briefs. It would take a case input and generate a brief, with all the supporting legal cases by type or ranking, in a standard legal brief form. There have also been very sophisticated computer code generation systems that would generate around 90% of the code.

I agree with the copyright concerns. They will rear their ugly head very shortly. Have ChatGPT take all of Bob Dylan’s song lyrics and compose a new song from them. How fast do you think Bob Dylan’s lawyers would be on it? And what about all the plagiarism? Finally, I’m not that impressed with ChatGPT.