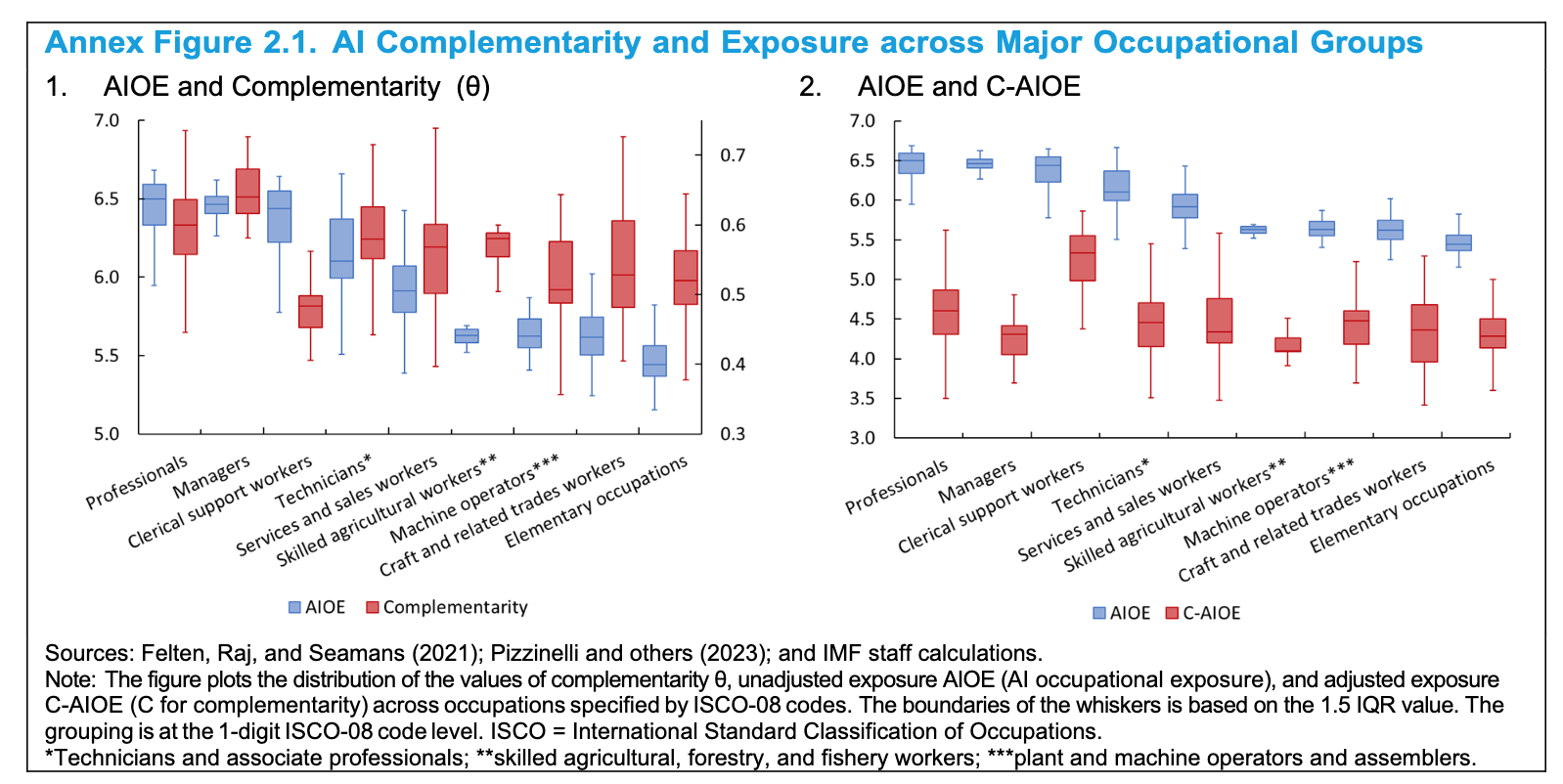

Your humble blogger has taken a gander through a new IMF paper on the anticipated economic, and in particular, labor market, impact of the incorporation of AI into commercial and government operations. As the business press has widely reported, the IMF anticipates that 60% of advanced economy jobs could be “impacted” by AI, with the guesstimate that half would see productivity gains, and the other half would see AI replacing their work in part or in whole, resulting in job losses. I do not understand why this outcome would not also be true for roles seeing productivity enhancement, since more productivity => more output from workers => not as many workers needed.

In any event, this IMF article is not pathbreaking, consistent with the fact that it appears to be a review of existing literature plus some analyses that built on key papers. Note also that the job categories are at a pretty high level of abstraction:

Mind you, I am not disputing the IMF forecast. It may very well prove to be extremely accurate.

What does nag at me in this paper, and many other discussions of the future of AI, is the failure to give adequate consideration to some of the impediments to adoption. Let’s start with:

Difficulties in creating robust enough training sets. Remember self-driving trucks and cars? This technology was hyped as destined to be widely adopted, at least in ride-share vehicles, already. Had that happened, it would have had a big impact on employment. Driving a truck or a taxi is a big source of work for the lesser educated, particularly men (and particularly for ex-cons who have great difficulty in landing regular paid jobs). According to altLine, citing the Bureau of Labor Statistics, truck driving was the single biggest full-time job category for men, accounting for 4% of the total in 2020. In 2022, American Trucking estimated the total number of truckers (including women) at 3.5 million. For reference, Data USA puts the total number of taxi drivers in 2021 at 284,000, plus 1.7 million rideshare drivers in the US, although they are not all full time.

A December Guardian piece explained why driverless cars are now “on the road to nowhere.” The entire article is worth reading, with this a key section:

The tech companies have constantly underestimated the sheer difficulty of matching, let alone bettering, human driving skills. This is where the technology has failed to deliver. Artificial intelligence is a fancy name for the much less sexy-sounding “machine learning”, and involves “teaching” the computer to interpret what is happening in the very complex road environment. The trouble is there are an enormous number of potential use cases, ranging from the much-used example of a camel wandering down Main Street to a simple rock in the road, which may or may not just be a paper bag. Humans are exceptionally good at instantly assessing these risks, but if a computer has not been told about camels it will not know how to respond. It was the plastic bags hanging on [pedestrian Elaine] Herzberg’s bike that confused the car’s computer for a fatal six seconds, according to the subsequent analysis.

A simple way to think of the problem is that the situations the AI needs to address are too numerous and divergent to create remotely adequate training sets.

Liability. Liability for damage done by an algo is another impediment to adoption. If you read the Guardian story about self-driving cars, you’ll see that both Uber and GM went hard into reverse after accidents. At least they didn’t go into Ford Pinto mode, deeming a certain level of death and disfigurement to be acceptable given potential profits.

One has to wonder if health insurers will find the use of AI in medical practice to be acceptable. If, say, an algo gives a false negative on a cancer diagnostic screen (say an image), who is liable? I doubt insurers will let doctors or hospitals try to blame Microsoft or whoever the AI supplier is (and they are sure to have clauses that severely limit their exposure). On top of that, it would be arguably a breach of professional responsibility to outsource judgement to an algo. Plus the medical practitioner should want any AI provider to have posted a bond or otherwise have enough demonstrable financial heft to absorb any damages.

I can easily see not only health insurers restricting the use of AI (they do not want to have to chase more parties for payment in the case of malpractice or Shit Happens than they do now) but also professional liability insurers, like writers of medical malpractice and professional liability policies for lawyers.

Energy use. The energy costs of AI are likely to result in curbs on its use, either by end-user taxes, overall computing cost taxes or the impact of higher energy prices. From Scientific American last October:

Researchers have been raising general alarms about AI’s hefty energy requirements over the past few months. But a peer-reviewed analysis published this week in Joule is one of the first to quantify the demand that is quickly materializing. A continuation of the current trends in AI capacity and adoption are set to lead to NVIDIA shipping 1.5 million AI server units per year by 2027. These 1.5 million servers, running at full capacity, would consume at least 85.4 terawatt-hours of electricity annually—more than what many small countries use in a year, according to the new assessment.

Mind you, that’s only by 2027. And consider that the energy costs also are a reflection of more hardware installation. Again from the same article, quoting data scientist Alex de Vries, who came up with the 2027 energy consumption estimate:

I put one example of this in my research article: I highlighted that if you were to fully turn Google’s search engine into something like ChatGPT, and everyone used it that way—so you would have nine billion chatbot interactions instead of nine billion regular searches per day—then the energy use of Google would spike. Google would need as much power as Ireland just to run its search engine.

Now, it’s not going to happen like that because Google would also have to invest $100 billion in hardware to make that possible. And even if [the company] had the money to invest, the supply chain couldn’t deliver all those servers right away. But I still think it’s useful to illustrate that if you’re going to be using generative AI in applications [such as a search engine], that has the potential to make every online interaction much more resource-heavy.
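As a rough sanity check on the Scientific American figures quoted above, here is a minimal sketch of what 85.4 terawatt-hours a year across 1.5 million servers implies per server. This is back-of-envelope arithmetic of my own, not the method used in the Joule analysis, and the function name is made up for illustration.

```python
# Back-of-envelope check of the quoted figures; not the Joule paper's method.
SERVERS = 1_500_000        # AI server units per year by 2027 (quoted figure)
ANNUAL_TWH = 85.4          # terawatt-hours per year at full capacity (quoted figure)
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def implied_kw_per_server(annual_twh, servers, hours=HOURS_PER_YEAR):
    """Average continuous power draw per server, in kilowatts (hypothetical helper)."""
    watt_hours = annual_twh * 1e12       # 1 TWh = 1e12 Wh
    return watt_hours / servers / hours / 1e3


kw = implied_kw_per_server(ANNUAL_TWH, SERVERS)
print(f"Implied average draw: {kw:.1f} kW per server")
```

On those two quoted numbers alone, each server would be drawing roughly 6.5 kW around the clock, which gives a sense of why the aggregate rivals a small country.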

Sabotage. Despite the IMF attempting to put something of a happy face on the AI revolution (that some will become more productive, which could mean better paid), the reality is people hate change, particularly uncertainty about job tenures and professional survival. The IMF paper casually mentioned telemarketers as a job category ripe for replacement by AI. It is not hard to imagine those who resent the replacement of often-irritating people with at least as irrigating algo testing to find ways to throw the AI into hallucinations, and if they succeed, sharing the approach. Or alternatively, finding ways to tie it up, such as with recordings that could keep it engaged for hours (since it would presumably then require more work with training sets to teach the AI when to terminate a deliberately time-sucking interaction).

Another area for potential backfires is the use of AI in security, particularly related to financial transactions. Again, the saboteur might not have to be so successful as to break the tools and heist money. They could instead, as in a more sophisticated version of the “telemarketers’ revenge,” seek to brick customer service or security validation processes. A half day of loss of customer access would be very damaging to a major institution.

So I would not be as certain that AI implementation will be as fast and broad-based as enthusiasts depict. Stay tuned.

So-called ‘AI’, even at the most complex levels (such as the hyperscale Large Language Models) is statistical analysis, inference and pattern matching.

There are many potential uses for this but replacing workers is not among them. Or rather, it would not be among the uses if the actual goal wasn’t degrading work quality to fit within the narrow capabilities of ‘AI’.

As you say, edge cases are infinite and marketing hype aside (Microsoft/OpenAI have to recoup operating costs somehow) these systems are not equal to the challenge.

In the entertainment and coding industries, most people will lose their jobs. AI can already replace nearly all print journalists, and you wouldn’t notice any difference in their reporting.

Sorry, no. Someone needs to do actual reporting or similarly talk to the big dogs, then draft the press release.

Yves is correct–people are a necessary component for working with people, and not just in journalism. I’m more familiar with the software industry. Studies show that AI assistance/co-pilot systems bring huge improvements to average and below average coders, and smaller benefits for top experts. The average coder will become more productive. Since there is still far more demand for software than the available resources to create it, I doubt such improvements will lead to layoffs. It might affect coder compensation in the long term.

There is a vision for a world where product managers and designers will be able to replace a programming team with an AI that simply generates the code. While the desire is understandable, we are a long way from that “utopia.” During the foreseeable future it will be necessary to employ experts to work with the AIs. The people who will lose their jobs are those not agile enough to adapt to changes, and that is nothing new.

In the hierarchy of getting stuff done, AIs are not strategic planners. They are not yet even tactical actors. They can occasionally be quite good assistants. I suspect over time they will become better assistants. To the extent there is trouble ahead, it’s not going to be due to the malevolent strategies of AI, it’s going to be because some greedy, lazy morons put peripheral devices like weapons systems, HR, or social management under the dictates of an AI system that nobody manages or understands. I imagine that McKinsey et al. are already advising insurance companies on how to use AI to further automate the denial of claims. The biggest risk is empowering bad actors, and we as a society need to protect ourselves against that.

I recall how printers would become obsolete because files would be sent around electronically and so there would be no need to print hard copies. It turned out that lots of people prefer hard copies and so lots of trees continue to be cut down for copier paper. The same applies with books. I personally dislike e-readers. I like the touch and feel of a book, just as I prefer the touch and feel of a newspaper versus reading it on a screen. AI may replace some jobs, but likely far fewer than the AI mavens think.

I’m similarly doubtful about AI in the software industry.

As a simple test, I asked ChatGPT to give me code to parse a CSV-formatted file, specifying the language and version.

It kept giving me code with bogus, non-existent functions that would not compile.

I pointed out these errors, but each time the bot then gave me slightly different patterns that also included bogus functions.

After three tries, I figured there was no point in continuing.
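The commenter doesn’t say which language and version were requested. Purely for comparison, here is a minimal sketch in Python, where the task needs nothing beyond the standard library’s `csv` module. The `parse_csv` helper and the sample data are made up for illustration.

```python
import csv
import io


def parse_csv(text):
    """Return one dict per data row, keyed by the header row (hypothetical helper)."""
    reader = csv.DictReader(io.StringIO(text))
    return list(reader)


# Sample data invented for this example; note the quoted field containing a comma.
sample = 'name,comment\nAda,"likes commas, apparently"\nBob,plain\n'
rows = parse_csv(sample)
print(rows[0]["comment"])
```

The quoted field is the part that naive comma-splitting gets wrong, which is why leaning on an existing, real parser matters more than inventing one.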

It’s not going to replace programmers. It may cause lots of issues in that industry due to hype and credulity. CTOs are rarely very technical and tend to believe what they hear at conferences.

Worst case scenario is that corporations will be persuaded that it can, will fire lots of developers and we will see a period of chaos as they realize their mistake and try to replace them.

I seem to recall that you’re a fan of Harlan Ellison.

“‘Repent, Harlequin!’ Said the Ticktockman” was one of my favorites.

The possibilities for sabotage against AI are fascinating. Perhaps a whole new cottage industry may spring up, kind of like cyber security which did not exist until the web was a thing. AI sabotage defenders?

I expect you’re correct. I would think it would be great fun to corrupt the data sets these things are trained on.

Not even sabotage per se, but “adversarial” behavior like what happened with search engines and SEO, people gaming the algorithms so that the end result becomes very commercial and it’s an arms race between the SEOs (AIOs?) and the search engines/AI companies.

Thanks for sharing, Yves.

This is a perfect example of the need for significant political and economic decentralization, which can both create the sorts of economic redundancy that protect employment (and indeed expand it, while unleashing a new wave of scientific advancements across all fields!) and employ the superior wisdom and intelligence of representative local governments to solve the problem, each in their own way. Let a thousand flowers bloom!

Best regards,

Mike

While we appreciate new commenters, this one was somehow approved even though it has absolutely nothing to do with the post. You showed up on other threads trying to shoehorn in a mention of decentralization.

This site is not a message board. We have written policies (see the Policies tab) and we enforce them.

More specifically, this comments section is not a venue for you to promote pet ideas. As Barry Ritholtz said years ago of this sort of thing, GYOFB (“Get your own fucking blog”).

A couple of points.

[1] As far as current AI controlling real-world robots moving through the world and replacing human workers in vast numbers, the people who believe that’s possible today have no conception whatsoever of the massive amount of computation our meat-based central nervous systems successfully carry out just to, for example, walk to the fridge, open the door, reach in for a beer can, pull it out, and pop its top off.

These robots from Agility Robotics that Amazon are trialing are currently about where the technology is at for mobile robot workers —

https://agilityrobotics.com/

Note that these robots are (a) a radio-controlled ‘swarm’ controlled by a central system and (b) that system has been trained to understand the specific, controlled physical environment of the factory/warehouse where it’s installed — and that’s all it’s mastered.

As you say, the current failure of autonomous vehicles — which don’t even have to manage the mechanics of walking around and balancing — is indicative of the reality. Rodney Brooks at MIT has been a leader at building robots for decades —

https://rodneybrooks.com/blog/

Brooks not only predicted the failure of self-driving cars, he’s now predicting another AI winter once the current hype dies down. I wouldn’t go that far. Much white-collar employment could be affected, and definitely LLMs have massive potential in tasks involving biogenetic analysis and also, unfortunately, surveillance.

However, while our meat brains usually have difficulty with computational tasks like merely memorizing seven digits in a row, machines have massive difficulties with the computational tasks involved in moving through the world — which we think nothing of, because we’ve evolved to do them.

[2] The OP quotes: Alex de Vries, who came up with the 2027 energy consumption estimate (who says) … if you were to fully turn Google’s search engine into something like ChatGPT, and everyone used it that way—so you would have nine billion chatbot interactions instead of nine billion regular searches per day—then the energy use of Google would spike. Google would need as much power as Ireland just to run its search engine.

To be clear, de Vries is being ridiculous — deliberately so, from his phrasing — and this would never be what a real-world AI rollout would look like in 2027.

Yes, running LLMs to answer individual ChatGPT queries as is currently done is massively energy-consumptive. But the resulting AI models can have quite small instruction-sets and kernels once they’re generated, so they can be run locally on laptops —

https://arstechnica.com/information-technology/2023/03/you-can-now-run-a-gpt-3-level-ai-model-on-your-laptop-phone-and-raspberry-pi/

Now, how Google and other network-based tech monopolies handle AI if they’re trying to keep AI services proprietary is another question. But that’s their problem, not ours. Conversely, Microsoft’s Copilot model makes sense, is low-code, and is probably what AI in 2027 will actually look like —

https://www.microsoft.com/en-us/microsoft-365/blog/2023/11/15/announcing-microsoft-copilot-studio-customize-copilot-for-microsoft-365-and-build-your-own-standalone-copilots/

Yes…and that cold one needs to be poured into the correct type of glass at just the right angle in order to form the perfect head. :)

I think the Chinese have made some progress in automating their warehouses and ports

https://www.youtube.com/watch?v=RFV8IkY52iY

https://www.youtube.com/watch?v=Fbr7K1l_JxU

Interesting. Thanks.

Not so much in the way of non-dedicated, adaptive, non-location-specific robots, though. That was full automation, with no humans in the warehouse/factory in the first video you sent, too.

“and also, unfortunately, surveillance”

All the vast data that is stored on every one of us is now subject to analysis by AI in rapid time.

Social credit scores are us.

In the view of the economists writing the paper you cite and the ones managing the national economy, productivity growth increases jobs because it lowers the cost per unit of production, which increases sales.

See this as an example:

https://www.nber.org/digest/nov05/productivity-growth-and-employment

https://www.nber.org/papers/w11354

May, 2005

The Sources of the Productivity Rebound and the Manufacturing Employment Puzzle

By William Nordhaus

Abstract

Productivity has rebounded in the last decade while manufacturing employment has declined sharply. The present study uses data on industrial output and employment to examine the sources of these trends. It finds that the productivity rebound since 1995 has been widespread, with approximately two-fifths of the productivity rebound occurring in New Economy industries. Moreover, after suffering a slowdown in the 1970s, productivity growth since 1995 has been at the rapid pace of the earlier 1948-73 period. Finally, the study investigates the relationship between employment and productivity growth. It finds that the relevant elasticities indicate that more rapid productivity growth leads to increased rather than decreased employment in manufacturing. The results here suggest that productivity is not to be feared – at least not in manufacturing, where the largest recent employment declines have occurred. This shows up most sharply for the most recent period, since 1998. Overall, higher productivity has led to lower prices, expanding demand, and to higher employment, but the partial effects of rapid domestic productivity growth have been more than offset by more rapid productivity growth and price declines from foreign competitors.
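The elasticity argument in the abstract can be sketched with a toy calculation. This is a textbook-style simplification, not Nordhaus’s model: it assumes constant-elasticity demand and that cost savings are passed through fully to prices, and the function name and numbers are made up for illustration.

```python
def employment_ratio(productivity_gain, demand_elasticity):
    """New employment divided by old employment (hypothetical helper).

    Assumes constant-elasticity demand and full pass-through of cost
    savings to prices; both are simplifying assumptions.
    """
    a = 1.0 + productivity_gain               # output per worker, relative to before
    price = 1.0 / a                           # unit cost, and so price, falls in proportion
    quantity = price ** (-demand_elasticity)  # demand response to the lower price
    return quantity / a                       # workers needed = output / output per worker


# A 25% productivity gain under inelastic, unit-elastic and elastic demand.
for eps in (0.5, 1.0, 1.5):
    print(eps, round(employment_ratio(0.25, eps), 3))
```

Under these assumptions employment falls when the elasticity is below one, is unchanged at exactly one, and rises above one, so whether more productivity means fewer workers depends on how strongly demand responds to lower prices.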

Notice that manufacturing productivity has been declining now for 12 years:

https://fred.stlouisfed.org/graph/?g=m2mB

January 30, 2018

Manufacturing Productivity, * 1988-2023

* Output per hour of all persons

(Indexed to 1988)

https://fred.stlouisfed.org/graph/?g=lSyd

January 30, 2018

Manufacturing and Nonfarm Business Productivity, * 1988-2023

* Output per hour of all persons

(Indexed to 1988)

With all this AI displacing wage-earning human workers, with the increased energy usage, and with all the new stuff that needs to be produced for humans to purchase and consume... Oh wait, if humans are unemployed, where are they going to get the cash for all this AI junk?

Oh yea, AI is going to bring such great things to society… we will all be able to quit the hard work of thinking.

Darn, I forgot about that Free Market where it’s all about first mover advantage, all about profit and control, about vanquishing the competition…. the free market needs to be free of these silly notions of community, society and that rot… good thing AI is competing against HI – get rid of all that weight HI drags the free market down with

Initial thought after reading headline … AI is the evolution of Human Resources[TM] from the perspective of large vertical legal structures known as Corporations …

Granted that the U.S. is basically a conglomerate of Corporations with vestiges of a representative republic.

Yes, indeed, that “iirigating algo” made me want to go to the nearest tree and take a leak.

A lot of the productive gains from AI would be given up as a result of adverse host-parasite interactions, i.e. combating criminal AI. Capitalist elites require masses of workers to maintain competitive control amongst themselves. AI breaks down this capitalist hierarchy by making workers redundant. Any small group of people with sufficient ability can now leverage AI to subvert existing power structures. Think of hackers using AI to not only converse with you tirelessly but also impersonate you or someone you trust. (AI subverts the ‘costly signaling’ dynamic.) And here is the real kicker: AI will lead to an overall fall in trust, leading to significantly lower system-wide productivity, which will be very hard to remediate (host-parasite dynamics).

I think the part people miss with AI is that intelligence consists of an empirical and a logical aspect. The former is looking for patterns among observations, while the latter involves formulating theories and testing them.

Empirical: This shape is darker than the background. It reacts in certain ways when it’s manipulated.

Logical: It’s round. Maybe it’s a ball on the ground? If it is, it should roll away when I push it. Let’s try that and see if it works.

Current AI models do a great job of the empirical aspect (based on other conversations I’ve observed, what are the next words in this one likely to be?) but don’t tackle the logical aspect at all (is what I’m saying here true?) They are good enough at the empirical side to fake it and make it look like they’re reasoning logically (when people are asked about this particular answer, they mostly say it’s true, so I will as well) but they aren’t really.

With most AI technologies (large language models, self-driving cars) we are constantly being asked to conflate the two. More specifically, we’re given evidence that they can perform the empirical task and asked to accept that they can handle the logical aspect as well. This is baked into most of the discussions at a fundamental level. Take the concerns about ‘hallucination’, for example (language models making up shit). Hallucination as opposed to what? Saying stuff that’s true or accurate? How would an AI model know that?

There is some genuine value under all the hype (the progress on the empirical side has been astonishing, and there are ways that can be useful) but as always, the tech sector is making it out to be way more than it is. Perhaps once we get models that can handle logic and abstraction as well as they do empirical predictions, we might see them actually deliver on some of the promises, but that’s a vastly harder problem – and a theoretical one, so it needs a research breakthrough and can’t just be accomplished by tweaking existing models or throwing more compute at the problem. And it will not happen in a Moore’s Law timescale.

One reason self-driving is difficult is that the models are interdependent. You need a computer vision model to classify objects, which feeds into models that figure out what to make of those objects, which feed into models that determine the best course of action, which feed into models that actually move the car according to that course of action.

One camel and the whole thing breaks.
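A minimal sketch of why chaining models hurts: if each stage has to get its part right for the next one to work, the per-stage reliabilities multiply. The stage names and the 99% figures are invented for illustration, and treating the stages as failing independently is a simplifying assumption.

```python
from math import prod


def pipeline_reliability(stage_reliabilities):
    """Chance that every stage succeeds, assuming independent failures (hypothetical helper)."""
    return prod(stage_reliabilities)


# Illustrative numbers only; not measurements from any real system.
stages = {
    "perception": 0.99,      # classify objects
    "interpretation": 0.99,  # decide what the objects mean
    "planning": 0.99,        # choose a course of action
    "control": 0.99,         # move the car accordingly
}
overall = pipeline_reliability(stages.values())
print(f"Overall: {overall:.3f}")
```

Four stages that are each right 99% of the time are right together only about 96% of the time, and a single stage that has never seen a camel drags the whole product toward zero.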

The healthcare models, by contrast, aren’t very impressive: most of them, at least, are built on first-generation technology that’s basically “drop your database or csv here and we’ll make predictions.”

My prediction is that the healthcare type models won’t put anyone out of work and that the interdependence of many many models is so difficult that only a few will get something to work with an actual business use case at first. But the ones that do will have 10 person companies (or divisions within companies) worth trillions of dollars. Will be really cool to see – but also not really a systemic threat – only people who solve the problem it does will be hurt. And it’ll take years for the next company to figure out another use case.

What major CEOs think:

https://arstechnica.com/ai/2024/01/ceos-say-generative-ai-will-result-in-job-cuts-in-2024/

The large language models are corrupted by their own output. The more we use AI the worse it will get.

For example, “The Curse of Recursion: Training on Generated Data Makes Models Forget”

Look, from machines to algorithms, how many ways do employees need to be told about their relationship with the owners of the means of production: they’re just not that into paying you.

And the tech doesn’t have to live up to its promises. Crapified services and products (for the plebs) don’t matter in a world with more monopolies than in the past.

It’s a pressure point for downward wages and a way to endure or discourage strikes altogether.

The ideal dream is probably for the algorithms to assist slaves and indentured servants (or some variant of) to assist the algorithms. Or vice versa…depending on the task or industry.

What you can do: Whenever possible, pay cash in small businesses, and never ever give personal details that are true when asked by anyone, except law enforcement, where a silent shrug is the norm.

It might be better to make up fake names, addresses and phone numbers than to say nothing at all. This helps corrupt databases.

Surveys are the new way to train A.I. Every time I buy a roll of toilet paper, they want me to take a survey at the supermarket. If you have an anonymous landline, or a V.P.N., do the survey, change your sex, age, race and income. Remember, toll free phone numbers and i.p. addresses give away your true identity. Everyone should be a black woman, veteran, billionaire, Republican, registered voter.

We are in a war and all is fair.