Yves here. We linked to the paper by Apple scientists that dealt a pretty devastating blow to the idea that large language models like ChatGPT could be considered to engage in “thinking”. Tom Neuburger provides a longer-form treatment below. To be clear, these findings do not necessarily mean that some other class of AI model won’t be able to engage in processes that meet the bar for “reasoning”. But the big bucks have been chasing “tool of all trades, master of none” LLMs, which respond to queries in conversational form and hence do what amounts to faking thinking.

By Thomas Neuburger. Originally published at God’s Spies

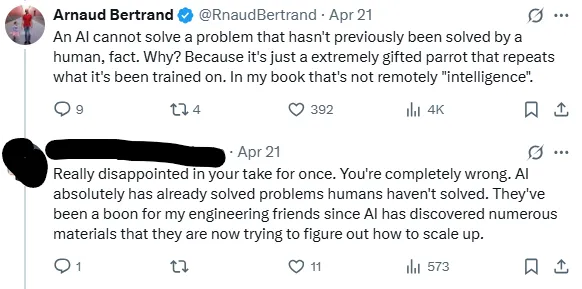

AI can’t solve a problem that hasn’t been previously solved by a human.

—Arnaud Bertrand

A lot can be said about AI, but there are few bottom lines. Consider these my last words on the subject itself. (About its misuse by the national security state, I’ll say more later.)

The Monster AI

AI will bring nothing but harm. As I said earlier, AI is not just a disaster for our political health, though yes, it will be that (look for Cadwallader’s line “building a techno-authoritarian surveillance state”). But AI is also a disaster for the climate. It will hasten the collapse by decades as usage expands.

(See the video above for why AI models are massive energy hogs. See this video to understand “neural networks” themselves.)

Why won’t AI be stopped? Because the race for AI is not really a race for tech. It’s a greed-driven race for money, a lot of it. Our lives are already run by those who seek money, especially those who already have too much. They’ve now found a way to feed themselves even faster: by convincing people to do simple searches with AI, a gas-guzzling death machine.

For both of these reasons — mass surveillance and climate disaster — no good will come from AI. Not one ounce.

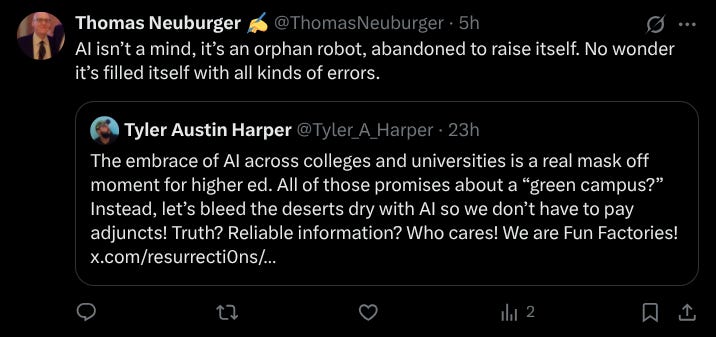

An Orphan Robot, Abandoned to Raise Itself

Why does AI persist in making mistakes? I offer one answer below.

AI doesn’t think. It does something else instead. For a full explanation, read on.

Arnaud Bertrand on AI

Arnaud Bertrand has the best explanation of what AI is at its core. It’s not a thinking machine, and its output’s not thought. It’s actually the opposite of thought: it’s what you get from a freshman who hasn’t studied but has learned a few words and is using them to sound smart. If the student succeeds, you don’t call it thought, just a good emulation.

Since Bertrand has put the following text on Twitter, I’ll print it in full. The expanded version is a paid post at his Substack site. Bottom line: He’s exactly right. (In the title below, AGI means Artificial General Intelligence, the next step up from AI.)

Apple Just Killed the AGI Myth

The hidden costs of humanity’s most expensive delusion

by Arnaud Bertrand

About 2 months ago I was having an argument on Twitter with someone telling me they were “really disappointed with my take” and that I was “completely wrong” for saying that AI was “just an extremely gifted parrot that repeats what it’s been trained on” and that this wasn’t remotely intelligence.

Fast forward to today and the argument is now authoritatively settled: I was right, yeah! 🎉

How so? It was settled by none other than Apple, specifically their Machine Learning Research department, in a seminal research paper entitled “The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity” that you can find here (https://ml-site.cdn-apple.com/papers/the-illusion-of-thinking.pdf).

Can ‘reasoning’ models reason?

Can they solve problems they haven’t been trained on? No.

What does the paper say? Exactly what I was arguing: AI models, even the most cutting-edge Large Reasoning Models (LRMs), are no more than very gifted parrots with basically no actual reasoning capability.

They’re not “intelligent” in the slightest, at least not if you understand intelligence as involving genuine problem-solving instead of simply parroting what you’ve been told before without comprehending it.

That’s exactly what the Apple paper was trying to understand: can “reasoning” models actually reason? Can they solve problems that they haven’t been trained on but would normally be easily solvable with their “knowledge”? The answer, it turns out, is an unequivocal “no”.

A particularly damning example from the paper was this river crossing puzzle: imagine 3 people and their 3 agents need to cross a river using a small boat that can only carry 2 people at a time. The catch? A person can never be left alone with someone else’s agent, and the boat can’t cross empty – someone always has to row it back.

This is the kind of logic puzzle you might find in a children’s brain-teaser book – figure out the right sequence of trips to get everyone across the river. The solution only requires 11 steps.
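To make the puzzle concrete, here is a minimal brute-force sketch (an editorial illustration, not the Apple researchers’ code) that explores every legal sequence of crossings breadth-first, so the first solution it reaches is a shortest one. It assumes the constraint in the form described above: an actor may not be with someone else’s agent, on either bank or in the boat, unless their own agent is also present. The labels `a0`..`a2` (actors) and `g0`..`g2` (agents) are invented for the example.

```python
from collections import deque
from itertools import combinations

N = 3  # number of actor/agent pairs
ACTORS = [f"a{i}" for i in range(N)]
AGENTS = [f"g{i}" for i in range(N)]
EVERYONE = frozenset(ACTORS + AGENTS)


def safe(group):
    """False if some actor is with any agent while their own agent is absent."""
    agents_here = {p[1:] for p in group if p.startswith("g")}
    for person in group:
        if person.startswith("a"):
            own = person[1:]
            if agents_here and own not in agents_here:
                return False
    return True


def solve(capacity=2):
    """Breadth-first search, so the first path found to the goal is a shortest one."""
    start = (EVERYONE, "left")  # (people on the left bank, side the boat is on)
    goal = (frozenset(), "right")
    parents = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state == goal:
            break
        left, boat = state
        bank = left if boat == "left" else EVERYONE - left
        for k in range(1, capacity + 1):  # k >= 1: the boat never crosses empty
            for riders in map(frozenset, combinations(sorted(bank), k)):
                if not safe(riders):  # the rule applies in the boat too
                    continue
                new_left = left - riders if boat == "left" else left | riders
                if not (safe(new_left) and safe(EVERYONE - new_left)):
                    continue
                nxt = (new_left, "right" if boat == "left" else "left")
                if nxt not in parents:
                    parents[nxt] = state
                    queue.append(nxt)
    # Walk back from the goal to recover the sequence of states.
    path, state = [], goal
    while state is not None:
        path.append(state)
        state = parents[state]
    return path[::-1]


path = solve()
print("crossings:", len(path) - 1)
```

Run as written, it should report 11 crossings. The whole search space has at most 128 states (2^6 ways to split six people between the banks, times two boat positions), which is the point: the puzzle is tiny.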

Turns out this simple brain teaser was impossible for Claude 3.7 Sonnet, one of the most advanced “reasoning” AIs, to solve. It couldn’t even get past the 4th move before making illegal moves and breaking the rules.

Yet the exact same AI could flawlessly solve the Tower of Hanoi puzzle with 5 disks – a much more complex challenge requiring 31 perfect moves in sequence.
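The gap between 11 and 31 moves is easy to check. The classic recursive solution below (a standard textbook algorithm, not anything taken from the paper) moves n disks in 2^n - 1 steps, so 5 disks take 31. The `is_legal` helper, a hypothetical name, replays the moves and confirms that no larger disk ever lands on a smaller one.

```python
def hanoi(n, source="A", spare="B", target="C"):
    """Return the list of (from_peg, to_peg) moves that shifts n disks."""
    if n == 0:
        return []
    return (hanoi(n - 1, source, target, spare)    # park n-1 disks on the spare peg
            + [(source, target)]                    # move the largest disk
            + hanoi(n - 1, spare, source, target))  # restack the n-1 disks on top


def is_legal(n, moves, pegs=("A", "B", "C")):
    """Replay the moves; fail on an empty source peg or a big-on-small placement."""
    stacks = {p: [] for p in pegs}
    stacks[pegs[0]] = list(range(n, 0, -1))  # largest disk at the bottom
    for src, dst in moves:
        if not stacks[src]:
            return False
        disk = stacks[src][-1]
        if stacks[dst] and stacks[dst][-1] < disk:
            return False
        stacks[dst].append(stacks[src].pop())
    return stacks[pegs[2]] == list(range(n, 0, -1))


moves = hanoi(5)
print(len(moves), is_legal(5, moves))
```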

Why the massive difference? The Apple researchers figured it out: Tower of Hanoi is a classic computer science puzzle that appears all over the internet, so the AI had memorized thousands of examples during training. But a river crossing puzzle with 3 people? Apparently too rare online for the AI to have memorized the patterns.

This is all evidence that these models aren’t reasoning at all. A truly reasoning system would recognize that both puzzles involve the same type of logical thinking (following rules and constraints), just with different scenarios. But since the AI never learned the river crossing pattern by heart, it was completely lost.

This wasn’t a question of compute either: the researchers gave the AI models unlimited token budgets to work with. But the really bizarre part is that for puzzles or questions they couldn’t solve – like the river crossing puzzle – the models actually started thinking less, not more; they used fewer tokens and gave up faster.

A human facing a tougher puzzle would typically spend more time thinking it through, but these ‘reasoning’ models did the opposite: they basically “understood” they had nothing to parrot so they just gave up – the opposite of what you’d expect from genuine reasoning.

Conclusion: they’re indeed just gifted parrots, or incredibly sophisticated copy-paste machines, if you will.

This has profound implications for the AI future we’re all sold. Some good, some more worrying.

The first one being: no, AGI isn’t around the corner. This is all hype. In truth we’re still light-years away.

The good news about that is that we don’t need to be worried about having “AI overlords” anytime soon. The bad news is that we might potentially have trillions in misallocated capital. […]

I think Satyajit Das has it about right: the models make probabilistic determinations. We don’t know the algorithms they use, but we do know the laws of probability they must follow.

They will probably take over a lot of the bullshit jobs and won’t do them very well but it won’t matter very much because those doing them now don’t do them well now. No incentive to do so and not really needed.

No doubt they will be used where they shouldn’t be and cause massive problems but our digital infrastructure is going to do that without them as well. Mr Tainter will be proven right in a shorter time than otherwise.

I don’t believe it will take over even bullshit jobs. Synthetic text extruding machines cannot act. Even a bunny or an ant can act. Synthetic text extruding machines require a person to act, to at least prompt the machine to produce an output.

This will be a productivity enhancer in some situations. Which isn’t a bad thing. But for BS jobs, it will just help the poor person in the BS job be more productive at producing BS.

By the way, if you are looking for associative analysis to interpret textual data, LLMs are great. I helped build a system to parse PubMed, PMC, and bioRxiv publications and store the results in a database. We then did the following:

1. Take an article from PMC and strip out all the references.

2. Ask the model to suggest what other articles in the database might be suitable references for the stripped PMC article.

3. Examine the list of suggested articles.

Depending on various technical parameters (for techies, primarily the length of the vectors used when calculating the embeddings) and a couple of other things, the suggested list would typically include 80-95% of the articles in the database that the original PMC article cited before its references were stripped out. By pushing the model harder (expanding the searches, running the model several times in succession) we could get to 98% in some cases. This was basically semantic searching, rather than the keyword matching that most searches use at present.
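A rough sketch of the retrieve-and-score loop described above, under loud assumptions: the commenter did not share code, so the three-dimensional vectors here are invented stand-ins for real embeddings (which typically have hundreds or thousands of dimensions), and ranking by cosine similarity is an assumption about how the semantic search was done. `suggest_references` and `recall` are hypothetical names. Recall is the percentage the commenter reports: the share of the originally cited articles that turn up in the suggestion list.

```python
import math


def cosine(u, v):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def suggest_references(query_vec, corpus, k):
    """Rank corpus articles by similarity to the query and return the top-k ids."""
    ranked = sorted(corpus, key=lambda doc_id: cosine(query_vec, corpus[doc_id]), reverse=True)
    return ranked[:k]


def recall(suggested, true_citations):
    """Fraction of the originally cited articles that appear in the suggestions."""
    return len(set(suggested) & set(true_citations)) / len(true_citations)


# Hypothetical toy "embeddings"; a real system would get these from an embedding model.
corpus = {
    "paper_1": [0.9, 0.1, 0.0],
    "paper_2": [0.8, 0.2, 0.1],
    "paper_3": [0.1, 0.9, 0.0],
    "paper_4": [0.0, 0.1, 0.9],
}
query = [1.0, 0.15, 0.05]       # the article with its reference list stripped
cited = ["paper_1", "paper_2"]  # what it actually cited
top = suggest_references(query, corpus, k=2)
print(top, recall(top, cited))
```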

What this says to me is that when LLMs are asked to do analysis on an unpolluted dataset they can work extremely well. As for generative cases, the number of stories about fabricated references in court pleadings says it all, as any fule kno.

Heh, this is “agentic” AI where it’ll go and run random code probabilistically assembled from the Internets. What could possibly go wrong with this?

I think I saw a demo somewhere by accident on Twitter, or was it linked here? Where “agentic” AI had two systems exchanging a “conversation” between each other to look up travel booking or something bizarre.

Like, we can already do that; it’s called APIs. There are even plenty of low/no-code systems that probably allow for this, and are likely more reliable.

The fatal problem with LLMs is hallucinations. That is, output that is completely made up out of whole cloth by the system. These are quite common in today’s AI systems, as many users have found out to their cost.

I claim that if you can’t trust the results or the output of something, then it is not useful. Sure, you can proof the output, look up every result to see if it’s correct, and then “use” what it gave you after making the needed corrections. But how is this useful? Not only does it take as long or longer than doing it yourself, but it also leaves you with a lot of lingering doubt about the results you have. If you corrected 50 mistakes, how do you know you got them all?

(Also, needless to say, you shortchanged yourself of gaining the expertise you would have gained by doing the work with your own brain. If anyone asks you follow up questions on the generated material, you will have to just shrug.)

I fear an information system where nothing can really be trusted.

Do US voters ‘think’?

Depends on the type of bacteria in their guts…

It’s nice to get in a real good out-loud laugh once in a while, thank you!

> no good will come from AI. Not one ounce.

While I’m healthily sceptical of current AI (and I wish people would stop using that term and use something like Large Language Models) to say no good at all will come from it is just as wrong-headed as believing that super-intelligence is coming any day soon.

For example, I work in Japanese in Tokyo but I’m not quite fluent. Today, working from a meeting transcript, an LLM produced a perfectly usable English translation for me, clarifying a point I hadn’t fully grasped because I didn’t catch a word that was used. The original transcript was itself produced by an advanced speech-to-text model (a variant of a language model) and was pretty useful, even if not perfect.

At another point in my day I asked another LLM to explain how a tricky piece of code written by a colleague worked and it did an excellent job of this, saving me quite a bit of time in trying to understand it, and saving me from having to ask him. Using the LLMs to help with well-defined relatively small coding tasks is definitely a productivity boost.

I think we’re still a few breakthroughs away from AGI. I doubt throwing data and compute at it as seems to be OpenAI’s current strategy is going to get us there. That said, the technology if used correctly and with an understanding of its limitations (I know, I know, a big ask) is pretty damned useful and anyone denying this is also putting their head in the sand.

As someone who has worked a lot with AI for its specific capability of “remembering” the data it has seen, I can say that models do have their valid uses.

I’ve also spent some time, often in vain, trying to make my colleagues understand that data preparation for models is essential, since it’s the only way to control what the model “remembers”. For example, for the model to be usable, you don’t want it to recognize/remember the commentator “Polar Socialist” but commentators like Polar Socialist, so that it can do something akin to inductive or abductive reasoning about commentators it has never “seen” before.

And there is the problem. I have seen several instances where intelligent use was made of AIs, such as designing the electrical cabling installation of a new ship or deciphering the text of the charred scrolls from Herculaneum. But those were specific uses, and the data sets were properly designed with them in mind. The trouble is that AIs are being pushed as the hammer to solve all the nail problems of the world, and the reason is that there is an enormous scam in motion with AIs as its vehicle. It is enormous in scale, with hundreds of billions of dollars being thrown into this dumpster fire, and some of the biggest corporations in the world have tied themselves to the fate of AI. And why wouldn’t they? They know that if it all falls apart, the Feds will bail them out. I think that this whole episode will trouble future historians. They will note that in a world of depleting energy sources, Silicon Valley kept turning out everything from cryptocurrency to AIs that would burn through energy at a colossal rate, and never anything that was more energy efficient. And they will not be able to decide if it was simply greed or sheer stupidity.

“And they will not be able to decide if it was simply greed or sheer stupidity.”

Greed makes people wilfully stupid doesn’t it? As in, wilfully blind to consequences they ought to see and care about.

And they will not be able to decide if it was simply greed or sheer stupidity.

No reason it can’t be both.

Anecdotes are not data. You are playing the psychic’s con on yourself. Here, let Baldur explain it for you: https://www.baldurbjarnason.com/2025/trusting-your-own-judgement-on-ai/

“Self-experimentation is gossip, not evidence.”

Maybe as Baldur contends, I am conning myself, but I think I have enough discernment to know whether the tools I use are genuinely helpful or not.

For one thing, their translation abilities between Japanese and English are real, and have undoubtedly helped me.

I’ve seen enough mistakes with code for me not to take what they output on blind faith, and I make sure to test thoroughly, but for the most part their coding abilities if used in a focused manner are useful to me.

It helps, maybe, that I understand the algorithms behind how LLMs work, so I perhaps have a better understanding of their limitations than most. I know that at bottom all they are doing is repeatedly sampling from a probability distribution of the next token, given the context of the previous tokens. It amazes me that this process performs as well as it does. But I would never use them blindly in situations where high reliability was a requirement, because I know that highly probable tokens are not guaranteed to be correct.
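The loop the commenter describes (score every candidate token given the context, turn the scores into probabilities, sample one, append it, repeat) fits in a few lines. This is a toy sketch: the four-word `VOCAB` and the `TOY_LOGITS` table are hypothetical, and a real LLM computes its scores with a neural network over the whole context rather than looking up only the previous word. The `temperature` parameter is included because it is the usual knob for how sharply sampling favors the most probable token.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens the peak."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(vocab, logits, rng, temperature=1.0):
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


def generate(score_fn, prompt, steps, rng, temperature=1.0):
    tokens = list(prompt)
    for _ in range(steps):
        vocab, logits = score_fn(tokens)  # scores conditioned on the context so far
        tokens.append(sample_next_token(vocab, logits, rng, temperature))
    return tokens


# Hypothetical toy "model": scores depend only on the previous token.
VOCAB = ["the", "cat", "sat", "down"]
TOY_LOGITS = {
    "the": [-2.0, 3.0, 0.0, -1.0],
    "cat": [-1.0, -2.0, 3.0, 0.0],
    "sat": [0.0, -2.0, -2.0, 3.0],
    "down": [2.0, -1.0, -1.0, -2.0],
}


def toy_model(tokens):
    return VOCAB, TOY_LOGITS[tokens[-1]]


rng = random.Random(0)
tokens = generate(toy_model, ["the"], steps=3, rng=rng, temperature=0.7)
print(" ".join(tokens))
```

Nothing in the loop checks whether a token is true, only how probable it is, which is the commenter’s point about reliability.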

Nicely put.

But what you are saying is that you have to know enough already to know whether the LLM is correct or not. In essence, it saves you from having to flip through the English/Japanese dictionary. I would describe that as an extremely niche use – helping those who already know a language find a better word to use.

I’ve recently used an online translator for a language I can speak a little but am not fluent in. I have noticed that when I put in a paragraph, for example, I might be given one meaning for a specific word. When I remove the context and put in just that one word, the definition sometimes changes. I don’t know enough on my own to tell which usage is better, or if either is correct.

So while there may be some small niche uses which just substitute one kind of reference (consulting a colleague or a book) for a second, more immediate one, that is no reason to try to shove this tech down everybody’s throat and burn massive amounts of energy on a warming planet while doing so.

Now excuse me while I get back to processing documents with the “AI” I’m being forced to use by my employer, which does nothing except make the tasks take longer to accomplish than if I’d done them correctly myself from the get-go. But the employer is sure there’s value there somewhere if we just keep using it (and get paid nothing to “train” it for the company that sold it to us in the first place).

> I don’t know enough on my own to tell which usage is better, or if either is correct.

In general, I would trust the translation when inside a paragraph more, with the proviso that the meaning ascribed to that word probably applies in that type of context only.

If given a single word the best the LLM can do is give you the “dictionary” translation of a word but given context they are able to give you a translation that makes sense in that particular case.

> that is no reason to try to shove this tech down everybody’s throat

I completely agree. I don’t think the tech is useful in all cases. I was just trying to point out that it does have its uses, as long as the user understands what it is good at and what it isn’t.

Why is it an improvement to ask a machine without understanding as opposed to asking the colleague who wrote the code and does understand it?!?? How did you verify that what the machine told you was correct?

Well in this particular case, the colleague in question happens to be very busy, so I would have been waiting a while for a reply.

I verified it by reading the code again after reading the explanation and seeing that the explanation made sense. As you point out in your other comment, I had to know enough to know whether what I was being told made sense or not.

A friend of mine working in robotics likes to reduce AI to mere statistics and valuations. The good thing is access to big data. The bad thing is that too many things are not ruled by statistics.

I think the author gives these models too much credit. They’re not even gifted parrots. Parrots are animals that have consciousness and probably intent, along with will.

I like the term Emily Bender uses in her book “The AI Con: How to fight Big Tech’s Hype Machine and Create the Future we Want”

Synthetic text extruding machines.

This term properly puts “AI” in its place.

Now, the question is: our notorious tech bros certainly know this, so why are they lying to us about its capabilities? Could it be that they are no different from the 19th-century snake oil salesmen?

The truth is, very few people understand even the rudiments of how an LLM works. It is somewhat arcane, of course.

Unfortunately, the people who don’t understand the rudiments include virtually all of the people who are making decisions about whether to use AI. The result is that even the people who are legitimately hesitant about this technology lack the confidence to speak up, and they assume others must know more.

Of course this situation is exacerbated by the armies of snake oil salesmen trying to sell AI as a solution to all problems.

dunno why pundits don’t talk more about John Searle’s “Chinese Room” (probably cuz Chinese Room sounds not PC, lol)

All the obstacles that “AI” is dealing with were debated by people 30 to 40 years ago (probably longer)

we’re still at the 1920’s-style telephone stage for “AI”

chinese room: https://m.youtube.com/watch?v=D0MD4sRHj1M&pp=

I too recall a time when academics from various fields poured cold water on the state of play in building digital intelligence. Then equity markets started to sag, ChatGPT was rolled out, and an unrelenting media campaign followed, touting “AI” as the next internet, only more so, and here we are.

It’s a classic case of SV guys overhyping things to suck out as much money as they can, and of people then being dismayed when they find out the super-intelligent AI overlords aren’t arriving anytime soon.

Computers are very powerful tools; their problem is that they don’t speak human language, so you have to learn their language to use them. And I’m not talking about programming: just to create a picture of a simple diagram with a couple of boxes/arrows/titles, you have to know where and when to click in a special program, in a precise sequence. Most people struggle with this. So having a program that can take a human description and create the picture is actually huge. And there are a bazillion such simple things that computers can do easily, but the people staring at them don’t know how to tell them to do it.

As a method of stealth censorship, AI is brilliant.

I recently tested ChatGPT to uncover the sources for the political views that are embedded in AI output. They were straight mainstream, drawn exclusively from the West, and only rarely report much that is true.

When I challenged ChatGPT with other sources, the algorithm quickly admitted that when different sources are used, the AI output changes.

While the same is true for humans (garbage in, garbage out applies to any dataset), ChatGPT’s output was pre-circumscribed, meaning that Western mainstream sources are ranked more highly as sources.

The point: by drawing from sources rank ordered by their fealty to Western propaganda, AI reproduces Western propaganda under the pretense that its methods are ‘objective.’

Other governments / economies can rank sources differently. But with AI output replacing original sources, AI is the new ‘shut up and sit down’ response to troublesome questions.

Then consider Kuhn’s The Structure of Scientific Revolutions. Today’s absolute truth is tomorrow’s ignorant superstition. How many robots must die for science to progress, for those who imagine that it does progress?

Garbage in, garbage out.

Trained on sound data, however, AI might be able to do some interesting things. Hence, so-called “expert systems.”

If we imagine the entire worldwide web as a single network, we might compare it to the noösphere of the Gaia hypothesis. (It certainly has been able to get everyone hooked on their phones.) Such an aggregation might be considered a “mind”, sentient in the way the behavior of a slime mold exhibits sentience.

So then the question becomes, “Does every mind actually think?” Observing the conduct of human beings, we then arrive at the question, “Do human beings actually think?” Often, many apparently do not. Hence, the proliferation of “thoughtlessness.”

If you would like to spend your life checking the work of a bright –but strangely retarded– assistant, then AI is for you. Personally, I would prefer to think for myself.

A better analogy for AI is that of a super genius with a lobotomy. It contains everything it learned, but can’t really reason about it. Human and animal brains have evolved some basic rule based capabilities like counting and primitive causality. Current LLMs have nothing like this at all. They were not forged in the fires of evolutionary pressure.

This will eventually change, of course. Google’s self-training approaches are what’s needed for this, and eventually someone, somewhere will recognize the obvious and set up a genetic algorithm that will take basic multimodal neural net models and evolve them in silico so their structure and function are constantly self-improving, resulting in a far more effective, if nearly uncontrollable, AI.

We’re probably a decade away from that, but maybe not. Once we get the first real AI, the rest follow very, very rapidly.

“This will eventually change, of course.”

Things will definitely change, but I have my doubts whether they will actually improve in the direction of truly reasoning AI agents.

It has been a long time, but I vaguely remember that earlier AI research about reasoning machines stumbled on insuperable difficulties such as the “frame problem”. These issues have, as far as I know, not been successfully tackled — which explains the purely statistical/MLE/Markov process approaches of current LLM and assorted neural networks.

If I had a dime for every “probably a decade away” tech promise, I could have my own heavily subsidised “online bookstore” or spaceship company, and maybe with all that money I could see my way to overpromising yet once more in order to feather my nest. And yet, as we see with Waymo, it acts as a dystopian surveillance device with a transportation feature, and AI will effectively do the same thing. Unfortunately, being wasted on Kool-Aid is not a criminal offense. Where are those fleets of million-dollar self-driving trucks that no trucking company could afford to buy, let alone maintain? The best example is the addition of various sensors in modern cars: they’re great, but they require a human to use and monitor them.

“mRNA is a programmable vaccine that will cure cancer!!!!!!” or “I’ll be wearing a fusion pack on my belt a decade from now!” in, like, 1980 or something.

The ultimate tech promise are flying cars. Humanity spent a century expecting those, and all it got is a glorified carnival ride in the form of battery powered helicopters.

Somewhat counterintuitively, I think solving the reasoning problem will involve not solving it directly. The genetic algorithm approach is how we as humans got to reasoning ability. I see no particular reason why this process can’t be adapted and duplicated with computers, particularly if they’re allowed to make direct changes to their own neural connection weights, organization and colocations.
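For readers unfamiliar with the technique, a genetic algorithm in miniature looks like the sketch below: keep a population of candidates, score them, let the fitter half breed (crossover plus random mutation), and repeat. This toy evolves 20-bit strings toward all ones (the standard “OneMax” exercise), not neural-network weights; every parameter value is an arbitrary illustrative choice, and it says nothing about whether the commenter’s much larger proposal would work.

```python
import random


def fitness(genome):
    return sum(genome)  # OneMax: count the 1 bits


def mutate(genome, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in genome]


def crossover(a, b, rng):
    """Single-point crossover: head of one parent, tail of the other."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]


def evolve(length=20, pop_size=30, generations=40, rate=0.02, seed=1):
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        history.append(fitness(pop[0]))
        parents = pop[: pop_size // 2]  # truncation selection: fitter half breeds
        children = [pop[0]]             # elitism: carry the best over unchanged
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), rate, rng))
        pop = children
    pop.sort(key=fitness, reverse=True)
    history.append(fitness(pop[0]))
    return pop[0], history


best, history = evolve()
print(history[0], "->", history[-1])
```

Elitism is a deliberate choice here: because the best candidate is always copied forward, the best score can never go down from one generation to the next.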

We may not completely understand *how* it works for a long time, but like other genetic algorithm engineering solutions, it will probably work reasonably well until we determine how it works and consciously make something better, or at least more comprehensible.

I’m an engineer, and one of the many things I have learned is that a product that works in a way you don’t understand is not going to be a viable product. In order to be able to fix it, enhance it, write documentation about it, or even answer questions about it and use it intelligently, someone has to understand in great detail how it works.

A black box can never be a product. Taking the output of your system or device on faith alone is a sure-fire recipe for failure.

The author of this post says that AI was “just an extremely gifted parrot”. Can we look forward to saying this of it soon?

‘This parrot is dead. This parrot is no more! It has ceased to be! It’s expired and gone to meet its maker! This is a late parrot! It’s a stiff! Bereft of life, it rests in peace! If you hadn’t nailed it to the perch, it would be pushing up the daisies! It’s rung down the curtain and joined the choir invisible. This is an ex-parrot!’

Aldous Huxley was onto this early on. From Brave New World:

It’s just a simple step beyond to add probabilistic detail. In terms of reasoning/intelligence, there is NO progress beyond little Tommy here.

Excellent find, Jokerstein. I’m going to use it (with accreditation).

Thomas

The jump from LLMs to ‘thinking AI’ is enormous. It’s clearly not linear. Will it happen soon? I very much doubt it. Will it happen ever? If it does, we will probably wish it hadn’t.

it is a reasonable hypothesis that human-style “thinking” is a fluke; not necessarily a convergent evolution point.

the default form of intelligent life might be something that more fits the definition of virus than anything else

Book review: “Blindsight, by Peter Watts, explores the idea of non-conscious intelligence and in the process delivers one of the best examples of cosmic horror I’ve read in a very long time. It’s a story with implications for AI and how we perceive the world around us. Also, there are vampires.”

https://m.youtube.com/watch?v=XqikPnYKo6M

Interesting link. Thanks. The book sounds interesting. But I bailed on the vid. It’s a festival of commercials.

Thomas

Earl Gray comment from above:

“I know that at bottom all they are doing is repeatedly sampling from a probability distribution of the next token, given the context of the previous tokens…”

From my limited perspective, I take your description as capturing what I would call the architectural logic of its statistical operation.

In your opinion, does this process structure meaning in ways that prompt insights?

Would you agree that AI is not a thinker or a friend or a partner but a method that is now capable of flashes of clarity?

It should be acknowledged that the “clarity” is of human origin (which is why we even feel it is “clarity”), and likely involves copyright infringement, i.e., theft. For example:

Meta Torrented over 81 TB of Data through Anna’s Archive, Despite Few Seeders

https://digital-scholarship.org/digitalkoans/2025/02/07/meta-torrented-over-81-tb-of-data-through-annas-archive-despite-few-seeders/

In plain English: Meta trained their AI models using pirated content, and now they will roll out a paid-subscription for their chatbot.

There’s been much ongoing discussion here at NC about financial shenanigans, ginormous scams to defraud people, exploitation of workers, etc. — why should AI companies get a pass on this ?

> does this process structure meaning in ways that prompt insights?

In order to be able to build a good probability distribution for the next token, the models are trained so that they process the meaning of all the previous tokens. The attention mechanism is how they achieve this.
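A stripped-down version of that attention computation, for illustration only: each position compares its query vector with the key vectors of itself and all earlier positions, turns the scaled scores into weights with a softmax, and takes the weighted average of the value vectors. The Q, K and V numbers below are made up; real models derive them from learned projection matrices and run many attention heads across many layers.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def causal_attention(Q, K, V):
    """Scaled dot-product attention with a causal mask: position i may only
    attend to positions 0..i, i.e. to itself and the previous tokens."""
    d = len(Q[0])
    out = []
    for i, q in enumerate(Q):
        # Similarity of this query to each visible key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q, K[j])) / math.sqrt(d) for j in range(i + 1)]
        weights = softmax(scores)
        # Weighted average of the visible value vectors.
        out.append([sum(w * V[j][c] for j, w in enumerate(weights)) for c in range(len(V[0]))])
    return out


# Hypothetical 2-dimensional vectors for a three-token context.
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]
out = causal_attention(Q, K, V)
for row in out:
    print([round(x, 3) for x in row])
```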

Does this achieve insight?

In the sense that in order to be able to predict the next token well in some cases, the latent meaning inside all the previous tokens has to be extracted and processed. Some people may call this insight but I personally wouldn’t use such a term for what is basically a mathematical computation.

> Would you agree that AI is not a thinker or a friend or a partner but a method that is now capable of flashes of clarity?

I definitely wouldn’t call it a friend or partner. LLMs are not sentient and have no feelings. They are just very capable of producing text that is human-like.

Do they have flashes of clarity?

To me this implies that they are initially puzzled and then have a Eureka moment. It is possible that something analogous to this is happening during the computation, but I would avoid using anthropomorphic terms for something which is very different from human cognition.

The paper is flawed and smells of corporate FUD.

So Apple, the same Apple that is so far behind the game when it comes to the AI race releases a paper that goes viral right before their World Wide Developer Conference…

and it goes viral because a small part of the conclusion is latched onto by naysayers, i.e., confirmation bias is strongly at work here. It is a paper based on a very narrow choice of reasoning tasks, as the researchers themselves clearly state in the paper’s Limitations section after the conclusion.

The most amusing rebuttal I can find comes from an AI writer (with a neuroscience background) on Substack:

The illusion of the Illusion of thinking

In it, the author gets OpenAI’s o3 model to write the rebuttal.

So an AI writes the rebuttal to a paper about AI not reasoning.

Two of the main points:

1. “The assumption that the ‘reasoning traces’ the AI model shows is a faithful portrayal of the model’s actual internal reasoning.”

2. “The claim that there’s a phenomenon of ‘Complete accuracy collapse beyond certain complexity,’ which reveals AI models’ inability to reason.”

1. Apple’s premise is that the “reasoning trace” of tokens output by the model reflects how the model actually works. That is wrong: the trace output is the story the model constructs about what it did. Anthropic has research in this area.

2. Apple interprets the decline in trace length at high complexity as models giving up. This mistakes communication style for the actual cognition.

And in the case of the Tower of Hanoi, actually doing the work would overflow the context window of various models. They recognize this and don’t do the task.
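The context-window argument is simple arithmetic, because the optimal Tower of Hanoi solution needs 2^n - 1 moves. The sketch below finds the smallest disk count whose full move list would overflow a given budget. Both inputs are hypothetical placeholders chosen for illustration (about 10 tokens to write out one move, a 64,000-token window), not measured figures for any model or numbers from either paper; `first_overflow` is a made-up helper name.

```python
def moves_needed(n_disks):
    """Length of the optimal Tower of Hanoi solution."""
    return 2 ** n_disks - 1


def first_overflow(tokens_per_move, context_tokens, max_disks=30):
    """Smallest disk count whose written-out move list exceeds the token budget."""
    for n in range(1, max_disks + 1):
        if moves_needed(n) * tokens_per_move > context_tokens:
            return n
    return None  # fits for every disk count up to max_disks


# Hypothetical placeholders, not measured values for any particular model.
print(first_overflow(tokens_per_move=10, context_tokens=64_000))
```

With those placeholders the list stops fitting at 13 disks (8,191 moves), and doubling the window buys only one more disk, which is why simply granting more tokens does not rescue a fully written-out solution.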

This is good reading from Anthropic. Though it is long. On the Biology of a Large Language Model

On this subject, here’s Rodney Brooks, former director of the MIT Computer Science and Artificial Intelligence Laboratory, writing in 2018 (emphasis mine):

In parallel, Brooks started keeping a scorecard on his predictions about (1) self-driving cars, (2) robotics, AI, and machine learning, and (3) human space travel. Here is an update, from January of 2025:

Again, this is the former director of the main research laboratory working on AI and Robotics at MIT, which has long been considered the #1 Computer Science Department in the world.

When I saw this, I couldn’t help but think of this video from the physicist Sabine Hossenfelder. She talks about a paper that asked a similar question but was looking more at the “how” in whether AI thinks or not.

https://www.youtube.com/watch?v=-wzOetb-D3w

Edit: I know I’m a bit late to the game. A pitfall of living in the Pacific Time Zone.

‘Reasoning’ without sentience isn’t reasoning, it is just calculation according to a bunch of algorithms and a mass of data as input.

AI isn’t sentient and therefore incapable of real intelligence, and real reasoning.

Real reasoning requires intelligence that only humans possess, including emotion, multiple senses, perception, instinct and intuition – all the preserve of biological species. That is sentience.