The New York Times Sunday Magazine has a long piece by Joe Nocera on value at risk models, which tries to assess how much they can be held accountable for risk management failures on Wall Street.

The piece so badly misses the basics about VaR that it is hard to take it seriously, although many no doubt will.

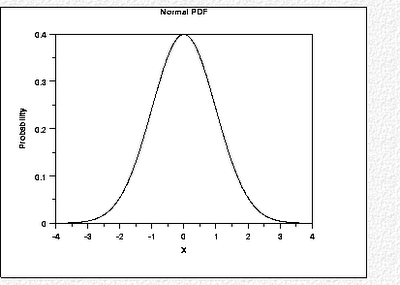

The article mentions that VaR models (along with a lot of other risk measurement tools, such as the Black-Scholes options pricing model) assume that asset prices follow a “normal” distribution, or the classical bell curve. That sort of distribution is also known as Gaussian.

But it is well known that financial assets do not exhibit normal distributions. And NOWHERE, not once, does the article mention this fundamentally important fact.

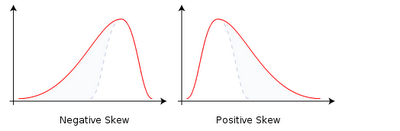

The distribution of prices in financial markets is subject to both “skewness” and “kurtosis”. Skewness means results are not symmetrical around the mean:

Stocks and bonds are subject to negative skewness (longer tails of negative outcomes) while commodities exhibit positive skewness (and that factor, in addition to their low correlation with financial asset returns, makes them a useful addition to a model portfolio).
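For readers who want to see what negative skewness looks like in numbers, here is a minimal sketch. The two return series are made up for illustration and are not market data, and the function name `sample_skewness` is my own. It computes the standardized third moment, which is zero for a symmetric sample and negative when the large surprises sit on the loss side.

```python
def sample_skewness(xs):
    """Skewness as the third central moment divided by sigma cubed."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n   # variance
    m3 = sum((x - mean) ** 3 for x in xs) / n   # third central moment
    return m3 / m2 ** 1.5

# Hypothetical daily returns in percent (invented for illustration only).
symmetric = [-2.0, -1.0, 0.0, 1.0, 2.0]
left_tailed = [-6.0, 0.5, 1.0, 1.5, 3.0]   # one large loss, several small gains

print(sample_skewness(symmetric))    # symmetric sample
print(sample_skewness(left_tailed))  # negative: longer tail of bad outcomes
```

Both samples have a mean of zero, so the difference in the result comes entirely from the shape of the distribution, which is exactly what a single bell curve cannot capture.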

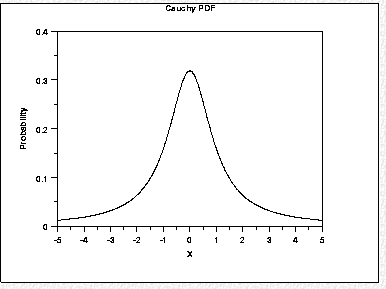

Kurtosis is also known informally as “fat tails”. That means that events far away from the mean are more likely to happen than a normal distribution would suggest. The first chart below is a normal distribution, the second, a so-called Cauchy distribution, which has fat tails:

Now when I say it is well known that trading markets do not exhibit Gaussian distributions, I mean it is REALLY well known. At around the time when the ideas of financial economists were being developed and taking hold (and key to their work was the idea that security prices were normally distributed), mathematician Benoit Mandelbrot learned that cotton had an unusually long price history (100 years of daily prices). Mandelbrot cut the data, and no matter what time period one used, the results were NOT normally distributed. His findings were initially pooh-poohed, but they have been confirmed repeatedly. Yet the math on which risk management and portfolio construction rests assumes a normal distribution!
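To put rough numbers on how much heavier a fat tail is, the sketch below compares the chance of landing more than k units from the center under a standard normal and under a standard Cauchy distribution, using their closed-form tail probabilities. The function names are my own, and the comparison is only indicative: "k units" means k standard deviations for the normal but k scale units for the Cauchy, which has no finite variance at all.

```python
import math

def normal_tail(k):
    """P(|Z| > k) for a standard normal variable."""
    return math.erfc(k / math.sqrt(2))

def cauchy_tail(k):
    """P(|X| > k) for a standard Cauchy variable (location 0, scale 1)."""
    return 1.0 - (2.0 / math.pi) * math.atan(k)

for k in (2, 4, 6):
    print(k, normal_tail(k), cauchy_tail(k))
```

The normal tail collapses extremely fast as k grows, while the Cauchy tail shrinks only in proportion to 1/k, which is why a Gaussian model treats large moves as essentially impossible.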

Let us turn the mike over to the Financial Times’ John Dizard:

As is customary, the risk managers were well-prepared for the previous war. For 20 years numerate investors have been complaining about measurements of portfolio risk that use the Gaussian distribution, or bell curve. Every four or five years, they are told, their portfolios suffer from a once-in-50-years event. Something is off here.

Models based on the Gaussian distribution are a pretty good way of managing day-to-day trading positions since, from one day to the next, risks will tend to be normally distributed. Also, they give a simple, one-number measure of risk, which makes it easier for the traders’ managers to make decisions.

The “tails risk” ….becomes significant over longer periods of time. Traders who maintain good liquidity and fast reaction times can handle tails risk….Everyone has known, or should have known, this for a long time. There are terabytes of professional journal articles on how to measure and deal with tails risk….

A once-in-10-years-comet- wiping-out-the-dinosaurs disaster is a problem for the investor, not the manager-mammal who collects his compensation annually, in cash, thank you. He has what they call a “résumé put”, not a term you will find in offering memoranda, and nine years of bonuses….

All this makes life easy for the financial journalist, since once you’ve been through one cycle, you can just dust off your old commentary.

But Nocera makes NO mention, zero, zip, nada, of how the models misrepresent the nature of risk. He does use the expressions “kurtosis” and “fat tails” but does not explain what they mean. He merely tells us that VaR measures the risk of what happens 99% of the time, and what happens in that remaining 1% could be catastrophic. That in fact understates the flaws of VaR. The 99% measurement is inaccurate too.

Reliance on VaR and other tools based on the assumption of normal distributions leads to grotesque under-estimation of risk. As Paul De Grauwe, Leonardo Iania, and Pablo Rovira Kaltwasser pointed out in “How Abnormal Was the Stock Market in October 2008?”:

We selected the six largest daily percentage changes in the Dow Jones Industrial Average during October, and asked the question of how frequent these changes occur assuming that, as is commonly done in finance models, these events are normally distributed. The results are truly astonishing. There were two daily changes of more than 10% during the month. With a standard deviation of daily changes of 1.032% (computed over the period 1971-2008) movements of such a magnitude can occur only once every 73 to 603 trillion billion years. Since our universe, according to most physicists, exists a mere 20 billion years we, finance theorists, would have had to wait for another trillion universes before one such change could be observed. Yet it happened twice during the same month. A truly miraculous event. The other four changes during the same month of October have a somewhat higher frequency, but surely we did not expect these to happen in our lifetimes.
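The arithmetic behind that kind of claim can be sketched in a few lines. This is an order-of-magnitude illustration only: it assumes daily changes are normal with mean zero, uses the 1.032% standard deviation quoted above, and assumes 250 trading days per year (my assumption, not stated in the quote). It will not reproduce the authors' exact figures, since their precise inputs and conventions are not given here.

```python
import math

SIGMA_PCT = 1.032             # daily standard deviation quoted above, in percent
TRADING_DAYS_PER_YEAR = 250   # assumption, not taken from the quoted paper

def years_between_moves(move_pct, sigma_pct=SIGMA_PCT):
    """Expected wait, in years, for a daily move at least this large in
    absolute value, if daily changes were normal with mean zero."""
    z = move_pct / sigma_pct                    # size of the move in sigmas
    p_per_day = math.erfc(z / math.sqrt(2))     # two-sided tail probability
    return 1.0 / (p_per_day * TRADING_DAYS_PER_YEAR)

for move in (5.0, 7.0, 10.0):
    print(f"{move:.0f}% move: about {years_between_moves(move):.3g} years")
```

A 10% move is nearly ten standard deviations under these inputs, and the Gaussian tail probability at that distance is so small that the implied waiting time dwarfs the age of the universe.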

Thus, Nocera’s failure to do even a basic job of explaining the fundamental flaws in the construct of VaR renders the article grossly misleading. Yes, he mentions that VaR models were often based on a mere two years of data. That alone is shocking but is treated in an off-hand manner (as if it was OK because VaR was supposedly used for short term measurements. Well, that just isn’t true. That is not how regulators use it, nor, per Dizard, investors). Indeed the piece argues that the problem with VaR was not looking at historical data over a sufficiently long period:

This was one of Alan Greenspan’s primary excuses when he made his mea culpa for the financial crisis before Congress a few months ago. After pointing out that a Nobel Prize had been awarded for work that led to some of the theories behind derivative pricing and risk management, he said: “The whole intellectual edifice, however, collapsed in the summer of last year because the data input into the risk-management models generally covered only the past two decades, a period of euphoria. Had instead the models been fitted more appropriately to historic periods of stress, capital requirements would have been much higher and the financial world would be in far better shape today, in my judgment.” Well, yes. That was also the point Taleb was making in his lecture when he referred to what he called future-blindness. People tend not to be able to anticipate a future they have never personally experienced.

Again, just plain wrong. Use of financial data series over long periods of time, as we said above, has repeatedly confirmed what Mandelbrot said: the risks are simply not normally distributed. More data will not fix this intrinsic failing.

By neglecting to expose this basic issue, the piece comes off as duelling experts, and with the noisiest critic of VaR, Nassim Nicholas Taleb, dismissive and not prone to explanation, the defenders get far more air time and come off sounding far more reasonable.

It similarly does not occur to Nocera to question the “one size fits all” approach to VaR. The same normal distribution is assumed for all asset types, when as we noted earlier, different types of investments exhibit different types of skewness. The fact that VaR allows for comparisons across investment types via force-fitting gets nary a mention.

He also fails to plumb the idea that reducing as complicated a matter as risk management of internationally-traded multi-assets to a single metric is just plain dopey. No single construct can be adequate. Accordingly, large firms rely on multiple tools, although Nocera never mentions them. However, the group that does rely unduly on VaR as a proxy for risk is financial regulators. I have been told that banks would rather make less use of VaR, but its popularity among central bankers and other overseers means that firms need to keep it as a central metric.

Similarly, false confidence in VaR has meant that it has become a crutch. Rather than attempting to develop sufficient competence to enable them to have a better understanding of the issues and techniques involved in risk management and measurement (which would clearly require some staffers to have high-level math skills), regulators instead take false comfort in a single number that greatly understates the risk they should be most worried about, that of a major blow-up.

Even though some early readers have made positive noises about Nocera’s recounting of the history of VaR, I see enough glitches to raise serious questions. For instance:

L.T.C.M.’s collapse would seem to make a pretty good case for Taleb’s theories. What brought the firm down was a black swan it never saw coming: the twin financial crises in Asia and Russia. Indeed, so sure were the firm’s partners that the market would revert to “normal” — which is what their model insisted would happen — that they continued to take on exposures that would destroy the firm as the crisis worsened, according to Roger Lowenstein’s account of the debacle, “When Genius Failed.” Oh, and another thing: among the risk models the firm relied on was VaR.

I am a big fan of Lowenstein’s book, and this passage fails to represent it or the collapse of LTCM accurately. Lowenstein makes clear that after LTCM’s initial, spectacular success, the firm started trading in markets where it lacked the data to do the sort of risk modeling that had been its hallmark. It was basically punting on a massive scale and thus deviating considerably from what had been its historical approach. In addition, the firm was taking very large positions in a lot of markets, yet was making NO allowance for liquidity risk (not overall market liquidity, but more basic ongoing trading liquidity, that is, the size of its positions relative to normal trading volumes). In other words, there was no way it could exit most of its positions without having a price impact (both directly, via the scale of its selling, and indirectly, by traders realizing that the big kahuna LTCM wanted out and taking advantage of its need to unload). That is a Trading 101 sort of mistake, yet LTCM perpetrated it in breathtakingly cavalier fashion.

Thus the point that Nocera asserts, that the LTCM debacle should have damaged VaR but didn’t, reveals a lack of understanding of that episode. LTCM had managed to maintain the image of having sophisticated risk management up to the point of its failure, but it violated its own playbook and completely ignored position size versus normal trading liquidity. Anyone involved in the debacle and unwind (and the Fed and all the big Wall Street houses were) would not see the LTCM failure as related to VaR. There were bigger, far more immediate causes.

So Nocera, by failing to dig deeply enough, winds up defending a failed orthodoxy. I suspect we are going to see a lot of that sort of thing in 2009.

Recently there has been a concerted effort by the usual suspects to blame China for the unravelling of the Ponzi scheme, and Keynesian policies are being peddled as a magic elixir.

Why did UberKeynesian policies fail in Japan? Why did the same policies have devastating effects in much of the developing world in the ’70s and ’80s?

I see a circling the wagons attempt by the Anglo-Saxon elites.

“So Nocera, by failing to dig deeply enough, winds up defending a failed orthodoxy. I suspect we are going to see a lot of that sort of thing in 2009.”

Indeed. Another excellent example will be commentators continuing to push Keynesian solutions, as opposed to questioning the viability and alleged long-term benefits of fiat money and central banking themselves.

Real economic growth was higher in the nineteenth century (which proceeded mostly with no central bank), and malnutrition never reached the levels it did when we first suffered a Fed-induced depression in the 30s. There’s a lesson in that that most are not even aware of, much less willing to consider.

“I see a circling the wagons attempt by the Anglo-Saxon elites.”

January 4, 2009 3:31 AM

~~~~

This whole crisis was caused by the Community Reinvestment Act … or… if that doesn’t fly, Fannie Mae and Freddie Mac … (sarcasm)

Voltaire said something like, “History is a lie commonly agreed upon” which will apply to a lot of what we hear in 2009.

What this really shows is how desperate the industry is to get a formulaic equation that will reassure clients that there is more predictable performance and less risk than there really is. After all, what is more reassuring than a normal-distribution bell curve? It posits a normal randomness about everything and a quaint sort of fairness: think SAT scores.

Perhaps the mathematicians and physicists might like to take another look at the whole modelling process. After all, this is one situation where observing the process itself (the observation of risk factors and measurement of risk) changes the very process, experiment, or model being observed, because the observer is often an investor or participant in the investment process, which prompts changes in decisions, which in turn alter the dynamics of risk itself.

Every action has an opposite reaction; whether it’s equal or not is debatable. The unrealistic ignoring of aspects of risk (all those wrong assumptions about the reduction/passing on of risk) has resulted in uncertainty, suspicion and therefore magnification of risk in markets these days. Of course what has just been said could be gobbledygook, but that’s the risk the reader takes, right?

http://www.nakedcapitalism.com/2009/01/woefully-misleading-piece-on-value-at.html

Woefully Misleading Piece on Value at Risk in New York Times

*flawed math base:

Gaussian vs. Poisson or other distribution

*systemic bias:

under-estimation of risk.

*length of historical data

insufficient data over time

Alan Greenspan’s primary excuse

*Mandelbrot examined 100 years of cotton

people want to eat at least twice per month, but can

defer clothing purchases.

does not account for technology leaps (plastic clothing)

*VaR allows for comparisons across investment types via force-fitting

different types of investments exhibit different types of skewness.

*black swan analogy not clearly explained:

common mode failure.

electric blackout voltage surge burns out the pumps at the New Orleans

oil refineries. Alaskan winter causes oil pipeline to freeze solid.

*chaos theory – not mentioned

*liquidity risk of market is not included

*liquidity risk of highly leveraged concentrated players

hedge funds

*importance of country political decisions:

China, Russia and other countries are ruled by small elite.

*systemic organizational risk:

central banks use VaR as simplified metric

did not use simple analogy:

patient is normal weight but says nothing about

cardiovascular health, good diet, etc.

*regulator overconfidence in one metric, VaR

*lack of reserve ratio cash versus AIG ‘insurance’

no mention

*human psychology and ‘panic reactions’

analogy: when car skids on ice, it is counter-intuitive to turn in

direction of skid and NOT to brake.

*case study: LTCM

Initiating crisis: simultaneous financial crises in Asia and Russia.

one of risk models was VaR

LTCM started trading in markets with ‘poor data’

model did not allow for trading liquidity risk.

size of its positions relative to normal trading volumes.

*LTCM debacle should have damaged VaR but didn’t

is thus not so.

*Three Mile Island: big complex model in nuclear plant control

operators did not know this, because

no sensor was provided to alarm under such a condition

http://www.controlglobal.com/articles/2008/BoilerBlowups0811.html

*missing data is all too common in thinly traded markets

nuclear plant has ‘missing sensors’

the VaR model is highly dependent on the ‘thin tails.’

*Northeast Blackout takes out Wall Street

GE control software failure was NOT anticipated, a black swan.

in hindsight, complex software DOES fail.

http://www.theregister.co.uk/2004/08/16/power_grid_cybersecurity/

*general tone:

defending a failed orthodoxy

*fails to build the ‘personality profile’:

portrayal of Taleb as somewhat ‘arrogant’

*basic factual error on position: Professor

*show me ‘catastrophe theory’

http://www.science.tv/watch/9504b9398284244fe97c/Prince-Rupert%27s-Drop-Glass

Bang on the glass bulb with a hammer to no avail.

If you snap off the hair-thin tail at the end of the drop,

it’ll shatter into dust.

depends on compression/tension structure of glass.

philosophy:

every complex system has its Achilles’ heel.

background is engineering

Yves,

That’s a much more useful summary of what’s wrong with VaR (and also wrong with BS options pricing and CAPM) than Taleb’s usual seethings.

Thank you.

The most these models can do is impose a sort of consistency requirement. With human nature the way it is, some pretty rigorous controls are a prerequisite even for that modest goal. But one might as well admit that some categories of bad risk taking can be highlighted that way, and I can understand the reluctance of traders and risk managers to ditch the model altogether. But that’s a pretty small picture view.

You are left with the inconvenient truth that while the actual evidence suggests (very strongly now) that the variance is infinite, the models require the price variance to be some finite quantity. That’s a pretty extreme idealization and we get all these daft ‘6-sigma’ events as a result. Hoocoodanode? Well, anybody, actually – it’s all in Levy, or Mandelbrot, or Taleb.

The unanswered question behind all this is: which risk-trading businesses are viable at all, over the longer term, given the level of risk implied by that infinite variance? Rather fewer than are out there right now, one suspects. Even if we knew how to create alternatives to VaR, there are plenty of interests out there who would resist it doggedly:

“It is difficult to get a man to understand something when his salary depends upon his not understanding it”

I see a few misunderstandings here. The version of VaR approved by the Basel Capital Accord which is actually used in practice by the greatest majority of market participants is not based on normal distributions. It is based on the last 500 days of historical data, simply recycling the shocks. This series is complemented with a few outliers added by hand for the purpose of stress testing.
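A minimal sketch of the historical-simulation approach described in this comment, under stated assumptions: the function name, the 99% confidence level, and the choice of quantile convention are mine, and the P&L series is invented. Real implementations reprice the current portfolio under each of the last 500 days of market shocks; here the resulting daily P&L figures are simply taken as given.

```python
def historical_var(pnl_history, confidence=0.99, window=500):
    """Smallest loss among the worst (1 - confidence) share of days in the window.

    pnl_history: daily profit-and-loss figures, oldest first (losses negative).
    Returns VaR as a positive number.
    """
    recent = pnl_history[-window:]                          # keep only the rolling window
    losses = sorted((-p for p in recent), reverse=True)     # largest loss first
    tail_count = max(1, round(len(losses) * (1.0 - confidence)))  # e.g. 5 of 500
    return losses[tail_count - 1]

# Hypothetical P&L series, made up for illustration: 500 days from -250 to +249.
demo_pnl = list(range(-250, 250))
print(historical_var(demo_pnl))
```

No distribution is assumed anywhere in this calculation, which is the commenter's point. The flip side is that the answer can never be worse than the worst day in the window unless extra stress scenarios are appended by hand.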

The biggest problem with VaR for derivative portfolios was actually not mentioned in the list: pricing consistency. Namely, what one does as a rule is to use a different model for each deal. This procedure is called local calibration. Models most used are often fairly primitive variations on the Black-Scholes model and thus could not calibrate to all deals with the same choice of parameters. But this means that there is a lot of model risk and one is just left to hope that model risk diversifies away. The problem is that model risk tends to be highly correlated in times of crisis.

Ideally, the problem can be reduced to an engineering task: find realistic models for derivatives which are capable of calibrating simultaneously and consistently to a large number of deals. These should both reflect properties of the historical time series for the underlying and views on possible future events.

This is a problem that can definitely be solved by brute force engineering. If mankind was able to go to the Moon, we can certainly do this one. All one needs is to recognize the need of high quality, faithful and consistent modeling as a priority.

In the recent past instead, pricing models were mostly judged on their ability to prop up deal flow. They were just too simple and made a mockery of reality.

That’s a succinct discussion of tails risk, Yves; ‘preciate it.

Yves: “ . . . [R]educing as complicated a matter as risk management of internationally-traded multi-assets to a single metric is just plain dopey. No single construct can be adequate.” This has long been a particular bête noire of mine in all kinds of analysis of population behaviors and complex systems. Any single metric almost certainly introduces more error than it removes, and so the production of simple numbers is blindness wrought by madness. Quantification is useful, but one has to learn to THINK about the systems and contexts involved, and so have a meaningful reference for any number. That implies at least multiple analytical methodologies, but also a perspective of standing outside the frame of reference when doing any analysis. I sincerely doubt that in the instance of financial analysis most anyone stands outside the box, because their bonus is dependent upon generating a number which fits inside their shop’s box. Ergo . . . data-forcing is an endemic failing of the financial industry, to me.

Along those lines, and turning to the cogent example of LTCM, as you sketch in a few blunt words LTCM’s sub-geniuses killed themselves _by basic lack of trading competence_. This had nothing to do with ‘risk analysis,’ ‘models,’ ‘chaotic perturbations,’ or the moon in Scorpio: they blew themselves up because they did not competently put on their positions. And AALLLLLLLLLL of those big banks in the securitization business killed themselves _exactly the same way_. These banks packaging ASBs DID NOT kill themselves because their risk models mistook a flyspeck for a decimal place. They killed themselves because of basic market operational incompetence.

None of these well-remunerated blokes thought it possible that _ALL_ of their securities would become illiquid simultaneously, but that is exactly what happened: ASBs of practically all types except CMBSs became illiquid in less than two weeks in the back half of July 07, and everything we have had since was locked in as of 1 Aug 07. Why? I mean, these ‘securities’ were individually rated, for discrete properties; they couldn’t all go bad simultaneously, right? So they couldn’t all be boycotted, there would be time to unwind positions, right? Well, no. That was the koolaid they drank inside the issuer shops, that their ‘products’ were ‘discrete,’ and legally this was true, they had separate covenants. But to the marketplace, they were a commodity because they were internally opaque, and sold on the same basis. So when one went bad, the market saw them all the same way. The issue with ‘risk analysis’ of individual ASBs is bogus, because the market didn’t make judgments based upon said analyses but rather on whether one could get a quote from a buyer in an hour on _any_ security. When confidence went, the quotes plunged in number and in price. Individual housing markets had their variances, and hence their respective tails risks, but the securities all functioned interchangeably in the marketplace. Put another way, ASBs were maximally correlated in their market function, so analyses of individual risk were moot.

While the way the industry handles numbers puts my teeth on edge, I would never ascribe their total failure of the last eighteen months to such bad methodology first and foremost. The industry failed because of gross incompetence in understanding basic market behaviors. Why? Because cheap credit made them rich for years no matter how stupid they were, so it didn’t pay to bother to be smart. Just borrow more leverage, and get made richer. . . . Oops.

Yves: Exactly how do you get correlation or the reverse if there is no standard deviation? The current situation actually seems to very clearly indicate that there can be correlation. Does some of oil’s sell-off relate to abandonment of winning positions to prop up the losers in other areas? Taleb has said that you want to be in positions where the surprises are usually on the positive side, where the black swans work for you. That is more often the case with commodities than with equity positions. Sometimes these will work in tandem, and sometimes they will not.

Irene:

There are too many variables to make the models work in anything other than a fuzzy ad hoc sort of way. The econometrics crowd has been plugging their formulas into the economy for a long time now and haven’t had much success in even an ad hoc sort of way. Getting to the Moon is a much simpler mathematical problem.

An excellent post, thanks! [and a great insight on Taleb]

The mathematical modelling of the climate is probably rubbish too.

Hi Irene,

Nice details, especially about pricing consistency & the VaR price history. Does the use of a 500-day rolling history imply that, once (say) the Sept-Nov 2008 volatility drops out of the series used for VaR calculations, all the risk managers will calm down and the banks will start lending & trading like fiends once again? Perhaps it won't be that silly in practice, but with their impressive track record, it's as well to expect insect-like idiocy from banks.

I agree about the need to model reality closely. I just don't see how the theoretical framework embedded in VaR can be goaded into doing that for the risks that actually matter.

We just don't seem to understand the reality well enough to agree about what needs to be modelled and what can be ignored.

So while I agree that VaR can probably be patched up a lot more, I don't really share the optimism of your para 3. For instance, improved VaR models will still ignore the propensity of stressed markets to correlate, or simply to cease to function. Not unfair to want that to be modelled, though goodness knows how, and it's certainly a really, really important risk, if you like being able to exit positions, dynamically hedge, etc.

Also, the idea of 'outliers' (and a load of other familiar statistical objects) gets pretty flaky when you have non-Gaussian distributions. Sticking in a few extra-big price move scenarios didn't seem to do the trick last time and it won't work next time either.

Value-at-risk is just one of the many soothing noises investment banks and fund managers make while pocketing their fees. They don’t know how to make money over the long haul, but they sure do know how to sell their services. And if their portfolios go kablooie – they keep their bonuses.

I can’t believe supposedly intelligent people are sitting at their computers, talking about how to “fix” these models of risk. It was never about managing risk, it was about managing customer *expectations* of risk so they’d keep putting in more capital and paying more fees.

Value at Risk has much in common with the way Wall Street sold modern portfolio theory 30 or more years ago. And common sense says that the biggest source of outright failure is leverage – it’s tough to get carried off the field if you don’t use borrowed money, even if you make a whopper or two of a mistake during a modest period of a longer career. It’s also disturbing that so few mention survivor bias given how little attention is given to changes in index components or other evaluations of success.

I don’t fully agree with your analysis. While it makes many true and important points, it imho also mixes two per se independent sources of mistake:

1) VAR as a concept itself is flawed. Simply because it cuts off any information in the part of the tail beyond the confidence quantile.

2) The widely used assumption of normal distribution of asset prices.

Those two are independent. It is possible to castrate a brilliant model with non-normal asset returns by letting it compute a VAR. On the other hand, using a more meaningful measure like expected shortfall does not bring you much as long as you assume a normal distribution for any unknown variable.
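The first point, that VaR discards everything beyond the quantile, is easy to show with a toy example. The sketch below is illustrative only: the function name, the quantile convention, and both loss books are hypothetical. It computes an empirical VaR alongside the expected shortfall, meaning the average loss inside the tail, for two books that differ only in their single worst outcome.

```python
def var_and_expected_shortfall(losses, confidence=0.99):
    """Empirical VaR and expected shortfall from a list of losses (positive = loss)."""
    ordered = sorted(losses, reverse=True)                       # worst first
    tail_count = max(1, round(len(ordered) * (1.0 - confidence)))
    tail = ordered[:tail_count]                  # the worst (1 - confidence) share
    var = tail[-1]                               # smallest loss inside the tail
    expected_shortfall = sum(tail) / len(tail)   # average loss inside the tail
    return var, expected_shortfall

# Two hypothetical books of 100 outcomes each (made-up numbers).
mild = [1.0] * 95 + [10.0] * 5
wild = [1.0] * 95 + [10.0] * 4 + [500.0]

print(var_and_expected_shortfall(mild, confidence=0.95))
print(var_and_expected_shortfall(wild, confidence=0.95))
```

Both books report the same 95% VaR, yet the second hides a loss fifty times larger. Only the expected shortfall moves, and only because the inputs here are not forced into a normal shape.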

However, the basic flaw is that the victims of bad risk management (models) are not the shareholders. It’s the bondholders that suffer. For shareholders a VAR is even the perfect model as any loss bigger than the quantile is taken by the bondholders anyhow. Why would the shareholders care about the expected shortfall?

So the optimal investment strategy in the presence of leverage is to have as skewed returns as possible. This is just what many managers did, probably also those of Lehman Brothers. That is partly why the debt recovery on Lehman bonds was so terribly low. It simply means that the difference between the expected shortfall and the VAR was so huge that once the equity was lost, nearly all the assets were lost.

None of these well-remunerated blokes thought it possible that _ALL_ of their securities would become illiquid simultaneously, but that is exactly what happened

If I have a portfolio of US treasuries, am I at risk that everyone will want to sell at the same time? What to do about this, especially if it is true that in times of crisis all correlations go to 1.0?

It is wrong to link VaR to the normal distribution. VaR can be applied to any distribution. For example, the credit risk capital calculations used in the Advanced version of Basel II, use VaR for significantly non-normally distributed losses in credit portfolios. VaR for non-normal distributions is used very commonly, so no need to create strawmen here.

Secondly, there are other measures commonly used by risk managers, such as expected shortfall, which provide further quantification of the tail risk, in addition to VaR.

So, do not blame the metric, blame people who misused it.

VaR contains interesting information.

I doubt, however that any intelligent, experienced market participant sincerely or seriously thought that it was a good measure of “risk” for a financial institution.

VaR was useful as part of the promotional material for the Ponzi scheme which continues apace: a small corrupt tribe of wealthy and powerful tricksters who suck the blood out of the economy with the help of politicians in their pay. None of this has much connection to finance: it’s plain and simple fraud on an unprecedented scale.

Anonymous at 8:24 A.M.

I’m with you.

I’d say value-at-risk is just another example of the pseudoscience the finance industry created in order to pull off one of the biggest swindles in history of the planet. It was all done deliberately, intentionally and maliciously. It’s the socio-political guys, and not the economists, who get it right:

In the 1980s, as befitting an age of knowledge industries and communications, the selling of a new political economics was mounted through a well-financed network of foundations, societies, journals, and theories. Broadly, their efforts were designed to uphold corporations, profits, consumption, wealth, and upper-bracket tax reduction and to undercut government and regulation. Some of those involved antedated the 1970s, most notably University of Chicago economist Milton Friedman and the “Chicago School” of free market economics. All together, they would give self-interest–critics substituted selfishness and greed–another philosophic era in the sun…

[B]y 2000 the conservative restatement of old-market theology, antiregulatory shibboleths, God-wants-you-to-be-rich theology, and Darwinism had built up the greatest momentum since the days of Herbert Spencer and William Graham Sumner.

Kevin Phillips, Wealth and Democracy

Richard Kline has put his finger on the truth. The VAR models, such as they are, are not the underlying problem in this crisis nor previous blow ups.

The VAR models are based on a distribution of returns under a certain set of circumstances, like regulatory control and oversight, the historical probability that borrowers will pay back their loans, the diversity, depth, and solvency of counterparties (LTCM), market information opacity, etc., etc.

Obviously, the underlying distribution of borrowers paying back their loans changed completely when deadbeats could receive speculative loans on their own lying say-so. The VAR models were all based on the distributions recorded over a long period of responsible lending practices. I believe loans made under the historical mortgage lending criteria have continued to function as expected.

The VAR models are not the root cause, except that they were used as a short term way of convincing investors to buy a lot of intentionally fraudulent securities, whose underlying distribution was in no way similar to the historical return set.

The financial crisis is the result of the unwinding of a giant criminal enterprise. The hook was making investors believe that what was being sold was the same as what had been sold historically. AAA MBS anyone.

Kind of goes well with the neo-cons’ attacks on science: it’s a case of not wanting to see rather than not seeing… As Orwell said, “We are all capable of believing things which we know to be untrue, and then, when we are finally proved wrong, impudently twisting the facts so as to show that we were right. Intellectually, it is possible to carry on this process for an indefinite time: the only check on it is that sooner or later a false belief bumps up against solid reality, usually on a battlefield”. Neoliberalism is dead until someone revives it (in about 1 Kondratieff cycle).

Wonderful, wonderful post. Provides an erudite critique that really explains the matter in an accessible manner and furthers my understanding.

Pretty much why I read this blog daily.

Thank you very much for the time and effort you put into this blog.

One brief correction–kurtosis is not a synonym for “fat tails”. That condition occurs when you have leptokurtosis–a peaked top with fat tails. The opposite kind of distribution is platykurtic, in which you have a flat top and narrow (or even non-existent) tails. Obviously it is leptokurtosis we are concerned with in financial models.
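For readers who want to see the distinction in numbers, here is a minimal sketch (not part of the comment above) that estimates excess kurtosis, the fourth standardized moment minus 3, for three simulated samples. Using a uniform sample as the platykurtic case and a Laplace sample as the leptokurtic case is an assumption made purely for illustration, as are the seed and the sample size.

```python
import random

def excess_kurtosis(xs):
    """Fourth standardized moment minus 3 (zero for a normal distribution)."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    return m4 / m2 ** 2 - 3.0

rng = random.Random(0)  # fixed seed, illustrative only
n = 100_000

normal_sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
# Uniform: flat top, no tails at all (platykurtic).
uniform_sample = [rng.uniform(-1.0, 1.0) for _ in range(n)]
# Laplace: the difference of two exponentials, peaked with fat tails (leptokurtic).
laplace_sample = [rng.expovariate(1.0) - rng.expovariate(1.0) for _ in range(n)]

k_normal = excess_kurtosis(normal_sample)
k_uniform = excess_kurtosis(uniform_sample)
k_laplace = excess_kurtosis(laplace_sample)

print(f"normal  {k_normal:+.2f}")
print(f"uniform {k_uniform:+.2f}")
print(f"laplace {k_laplace:+.2f}")
```

The uniform sample should come out negative and the Laplace sample clearly positive, with the normal sample near zero.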

Certain theoretical constructs like Black-Scholes assume a normal distribution of returns. But no one believes these models are completely correct. People are well-aware that returns are not normally distributed and work hard to account for that. Robert Engle won the Nobel Prize in 2003 for developing GARCH, an algorithm that allows traders to see how volatility is changing and what volatility is right now. (B-S assumes volatility is a constant.) The reality is that even without using common sense (that any model based on historical data will fail to take into account extreme outcomes), traders and bankers and financial engineers had access to much more sophisticated models than B-S or VAR.

The reason for this crisis is not bad models, as in 1987, but a deliberate assumption of risk by profit-hungry firms, all done while the government ostentatiously looked the other way and failed to use its regulatory power. If models like VAR had a part in this, it is that they were misused to provide the appearance of risk-avoidance.

By the way, Mandelbrot’s The (Mis)Behavior of Markets is an excellent read for those wishing to explore this subject in more depth.

He does a great job of deconstructing the theories upon which the modern finance industry is built. And he religiously, almost to a fault, stays away from making judgments and casting aspersions. His see-no-evil posture is evident from his explanation as to why the finance industry continues to use such easily discredited theories:

So again, why does the old order continue? Habit and convenience. The math is, at bottom, easy and can be made to look impressive, inscrutable to all but the rocket scientist. Business schools around the world keep teaching it. They have trained thousands of financial officers, thousands of investment advisers. In fact, as most of these graduates learn from subsequent experience, it does not work as advertised; they develop myriad ad hoc improvements, adjustments, and accommodations to get their jobs done. But still, it gives a comforting impression of precision and competence.

It is a false confidence, of course.

Benoit Mandelbrot, The (Mis)Behavior of Markets

Robert Boyd said…

“Certain theoretical constructs like Black-Scholes assume a normal distribution of returns. But no one believes these models are completely correct. People are well-aware that returns are not normally distributed and work hard to account for that. Robert Engle won the Nobel Prize in 2003 for developing GARCH, an algorithm that allows traders to see how volatility is changing and what volatility is right now.”

Mandelbrot would disagree:

Likewise, when it became clear that volatility really does cluster and vary over time rather than stay fixed as the standard model expects, economists devised some new mathematical tools to tweak the model. Those tools, part of a statistical family called GARCH (a name only a statistician could love), are now widely used in currency and options markets.

But such ad hoc fixes are medieval. They work around, rather than build from and explain, the contradictory evidence. They are akin to the countless adjustments that defenders of the old Ptolemaic cosmology made to accommodate pesky new astronomical observations. Repeatedly, the defenders added new features to their ancient model. They began with planetary “cycles,” then corrected for the cycles’ inadequacies by adding “epicycles.” When these proved inadequate, yet another fix moved the center of the cycles away from the center of the system. In the end, they could fit all of the anomalous data well enough. As more data arrived, new fixes could have been added to “improve” the theory. They satisfied their early customers, astrologers. But could they lead to space flight? It took the combined efforts of Brahe, Copernicus, Galileo, and Kepler to devise a simpler model, of a sun-centered system with elliptical planetary orbits.

Benoit Mandelbrot, The (Mis)Behavior of Markets

An old piece of mine on certain aspects of this discussion, which might be useful background for some:

http://epicureandealmaker.blogspot.com/2007/05/not-far-now.html

I think few of the quants and risk managers who create, modify, and monitor these risk models are so naive as to believe they give the last word on risk. Unfortunately, sooner or later the risk decision arrives at a level within any organization where the people involved (CEOs and other senior executives) do not understand the models’ well-understood limitations. These are the people who take spurious comfort from the reassuring precision and pretty mathematical equations to make decisions to undertake these risks.

Barring putting David Shaw or James Simons in the CEO spot at every bank out there, this problem will always be with us.

Yves says:

"The econometrics crowd has been plugging their formulas into the economy for a long time now and haven't had much success in even an ad hoc sort of way. Getting to the Moon is a much simpler mathematical problem."

Econometrics models are not used for derivative pricing and risk management because of engineering difficulties in calibration. Pricing models typically involve only about 3 adjustable parameters and are often constrained to be analytically solvable in order to facilitate calibration. One then compensates for the scarce economic content of the underlying representation by means of deal dependent calibration. That's where model risk hits.

I think there is a lot to improve in this direction ONCE one agrees that realism is a top priority for derivative modeling. According to the current orthodoxy of financial engineering, economic realism does not matter. What matters is facilitating deal flow, conformity [i.e. doing what other banks do] and convincing [under-qualified] regulators. It's a process of reduction to the least common denominator and weakest link in the chain.

Richard Smith says:

"Does the use of a 500-day rolling history imply that, once (say) the Sept-Nov 2008 volatility drops out of the series used for VaR calculations, all the risk managers will calm down and the banks will start lending & trading like fiends once again? Perhaps it won't be that silly in practice, but with their impressive track record, it's as well to expect insect-like idiocy from banks."

You have put your finger on a real issue: it will be precisely that silly in practice unless things change in the interim! Historical VaR has always been hampered by "ghost effects" like the one you describe. This is not right, everyone knows it is not right, but that's what everyone does. Not so much because using historical scenarios is better than using normal or Student-t distributions, but because this way one satisfies automatically the backtesting benchmarks on which regulators insist.
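The "ghost effect" can be made concrete with a small simulation. This is only a sketch under stated assumptions: the return series is synthetic (normal draws with a hypothetical 60-day volatility burst), the 99% level and the quantile convention are choices made here, and only the 500-day window comes from the discussion above.

```python
import random

def historical_var(returns, level=0.99):
    """Historical-simulation VaR: an empirical quantile of the losses."""
    losses = sorted(-r for r in returns)
    k = min(int(level * len(losses)), len(losses) - 1)
    return losses[k]

WINDOW = 500  # rolling history length mentioned in the thread

rng = random.Random(42)  # fixed seed, illustrative only
returns = []
for day in range(1500):
    # Hypothetical regime: calm 1% daily volatility, except a burst on days 600..659.
    sigma = 0.05 if 600 <= day < 660 else 0.01
    returns.append(rng.gauss(0.0, sigma))

def rolling_var(day):
    """VaR computed on `day` from the previous WINDOW returns."""
    return historical_var(returns[day - WINDOW:day])

var_with_burst = rolling_var(1100)     # window covers days 600..1099
var_after_dropout = rolling_var(1200)  # window covers days 700..1199

print(f"VaR while the burst is in the window: {var_with_burst:.4f}")
print(f"VaR after the burst has dropped out:  {var_after_dropout:.4f}")
```

Nothing about the simulated market changes between day 1100 and day 1200; the VaR figure falls only because the turbulent days have rolled out of the 500-day history.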

Hopefully, 10 years from now the regulatory framework will be different and VaR will be evaluated based on scenarios that clearly remember the 2008 volatility bursts.

I also agree with all comments about markets being awfully distorted nowadays. The fact that so far the modeling realism dear to myself has systematically been put down by business concerns is symptomatic of serious problems at other levels. A systemic fix obviously hinges on greater priorities such as curing the fallacies in the monetary and regulatory frameworks. Still, I have no doubt that a byproduct of the "right" sort of fixes will be a renewed emphasis on economic realism on the modeling side.

A Woefully Cheap Critique on the VaR Piece in the NYT.

This was a cheap post.

Nocera’s article was well done. As Fresno Dan states: “Provides an erudite critique that really explains the matter in an accessible manner and furthers my understanding.” Only the statement should be applied to Nocera. He’s the one who brought the matter out. Yves is simply commenting on carefully extracted excerpts, which anyone can do.

Of course one can quibble with the fact that Nocera doesn’t “dig deeper”. But of course, such quibbling typically comes from myopic pedants.

Nocera was writing an article for the general readership of the NYT for crying out loud. Do you really expect him to go into detail on Cauchy distributions? The only reason Yves was able to do this so effortlessly is that Nocera laid the foundation for her.

Try going into a discussion on kurtosis straight up….Yes, Yves could obviously write a very illuminating piece on this as she is a very good writer, but it would be much more difficult to lay the foundation, and, depending on the readership, it might be pretty damn boring.

Yves does bring out some interesting points, and the furthering of the discussion on VaR is appreciated.

But it’s so much easier when you can simply take somebody else’s work—and add the nuance.

Nocera does a good job explaining how VaR came about…that managers underestimated the risk of a Black Swan…that VaR falls apart when liquidity dries up. Additionally he brings up a good point about how VaR might have been much more effective had it not been so widely relied upon.

No, this article would not be worthy of a cite in an academic journal on VaR. But neither would Yves’ critique of it.

The difference of course is that Nocera realizes this…and Yves doesn’t.

Hell Yves, you’ve obviously been troubled by VaR for quite some time. Why didn’t you just come out with a post—straight up—on VaR if you are such an expert?

Why waste our time discussing an article from somebody else on VaR when you could have just written one yourself?

EVERY single one of your posts is a comment on somebody else’s work. Why don’t you—for once—

just do one yourself?

The post was cheap criticism completely lacking in perspective—failing to take into account the author’s scope in relation to his audience.

A small point in corroboration: back in the 70’s when Black-Scholes had led to an explosion in equity options, the dealers noticed that the formula produced a small but definite under-pricing of OTM options. IOW, the tails were fat relative to the Gaussian, since the derivation of B-S involves taking an integral over a Gaussian distribution. The mm’s still made money with the spread, but they aren’t philanthropic organizations so they applied ad hoc correction factors.

IOW, besides Mandelbrot’s empirical examination of the cotton time series, the folks in contact with the daily action were noticing the same thing.

My source for this is a book on options I read in a public library in the 1980’s, so it’s hardly esoteric knowledge.

Bit strong there, Dan Duncan, and some major irony in the final two sentences.

Hi,

I like your article, but even THIS doesn't quite do risk modeling justice. There is NOT ONE risk model at any of the big banks that assumes normality. In fact, one of the advantages of VaR is that it inherently does NOT assume returns are normal. If you assume returns are normal, then 99% VaR is simply

VaR = 2.33*volatility - mean.

These banks do NOT pay armies of well-paid PhD-educated quants simply to measure volatility and mean. Many of the third party vendors DO assume normality though and if you want to point fingers, I think third party vendors share a bigger role than most have given credit. Just imagine what would happen if many buyside firms were using the exact same risk model (which they are due to reliance on third party vendors). They will all receive the same signal at the same time. What impact would that have?
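As a quick check on where the 2.33 in that formula comes from, here is a minimal sketch using Python's standard library. The function name and the example mean and volatility are hypothetical; the only thing taken from the comment is the formula itself.

```python
from statistics import NormalDist

def normal_var(mean, volatility, level=0.99):
    """VaR under the normality assumption: z * volatility - mean."""
    z = NormalDist().inv_cdf(level)  # one-sided standard normal quantile
    return z * volatility - mean

z_99 = NormalDist().inv_cdf(0.99)
print(round(z_99, 2))  # prints 2.33

# Hypothetical daily figures: 0.05% mean return, 1.2% volatility.
example_var = normal_var(mean=0.0005, volatility=0.012)
print(f"99% one-day VaR as a fraction of portfolio value: {example_var:.4f}")
```

The point of the comment stands out here: under normality the whole calculation needs just two inputs, a mean and a volatility.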

But let's assume for a moment that there IS a right number, i.e. assume that there is a right VaR, and we are just trying to estimate it. Even if we did know what the VaR was, that STILL won't protect us from blowups. The way I think of VaR is as a way to describe the boundary of tail events. In other words, if VaR is $10M, that means that we should consider any loss of $10M or more as a "tail event". We should expect to see a tail event (on average) once every 100 trading days for 99% VaR. But what VaR doesn't tell you even if you had the exact number is what loss you could expect to have IF A TAIL EVENT OCCURS. VaR just isn't designed to do that. It just tells you the boundary of tail events, i.e. where "There be dragons". There are other risk measures that ARE intended to tell what loss to expect if a tail event occurs, e.g. expected shortfall, but VaR is not one of them. Many firms have been moving towards these "coherent" risk measures. So blaming VaR is a straw man argument.

For the record, I know many of the senior quants at many of the top banks and hedge funds and they are probably groaning at the discussion in the news these days. Your article is probably less disturbing than most, but still, not one author is giving any of the quants the credit they deserve. Most of the quants I know have been warning management for years, but people only listen to those directly impacting P&L, i.e. traders.
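To illustrate the difference between the boundary of the tail and the loss inside it, here is a minimal sketch with two made-up portfolios. The loss scenarios, the helper name, and the quantile convention (VaR as the largest loss outside the worst 1% of scenarios) are assumptions chosen for illustration, not anything a bank necessarily uses.

```python
def var_and_expected_shortfall(losses, level=0.99):
    """Return (VaR, expected shortfall) from a list of scenario losses.

    VaR is taken as the largest loss outside the worst (1 - level) share
    of scenarios; expected shortfall is the average loss inside that tail.
    """
    ordered = sorted(losses)
    n = len(ordered)
    tail_count = max(1, round(n * (1.0 - level)))
    var = ordered[n - tail_count - 1]
    tail = ordered[n - tail_count:]
    return var, sum(tail) / len(tail)

# Two hypothetical portfolios, 1,000 scenarios each, losses in $M.
portfolio_a = [1] * 980 + [10] * 20               # the tail is no worse than the boundary
portfolio_b = [1] * 980 + [10] * 10 + [100] * 10  # dragons beyond the boundary

var_a, es_a = var_and_expected_shortfall(portfolio_a)
var_b, es_b = var_and_expected_shortfall(portfolio_b)

print(var_a, es_a)  # 10 10.0
print(var_b, es_b)  # 10 100.0
```

Both portfolios report the same 99% VaR of $10M, yet the average loss once a tail event occurs differs by a factor of ten, which is exactly the information VaR is not designed to carry.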

Please have a look at an article I wrote in March 2006 (and republished in August 2007):

Risks of Risk Management

http://phorgyphynance.wordpress.com/2007/08/09/risks-of-risk-management/

Cheers

Thank you, Yves. I appreciate the information very much, especially since I have to deal with less-informed folks who think that the staff of the NYT are all minor deities who work for the public good. You are making the internet a better place!

That’s a nice piece Eric.

With those scenarios in mind I am enjoying the mental picture of Fuld frothing at his VaR numbers back in the middle of ’08, though I wonder whether anyone was brave enough to show them to him.

Thanks for an excellent critique, most notably of the article itself, as opposed to the technical issues of VaR. It is no surprise the NY Times does a superficial analysis which leaves the impression that the SOP is A-OK. The NYT is in the business of making the world safe for corporations i.e. manipulating public perception in favor of the status quo.

As to VaR itself, IMHO, the critique doesn’t go deep enough. Asset prices are not a statistical phenomenon at all. One can put any time series into a histogram; that doesn’t make the underlying process a random one, regardless of the shape of the histogram. The endless attempt to force the histogram of asset prices into a Gaussian reflects the deep assumption that the underlying process is random: if the assumption is true, then the Gaussian follows by the central limit theorem. That the histogram isn’t Gaussian is only the tip of the iceberg in understanding that the assumption is false.

If nothing else (and there’s plenty of else if one is honest) the use of quantitative models to inform market actions (buying and selling) ALL BY ITSELF introduces causation into the time series, and hence renders the time series no longer probabilistic. It also, obviously, changes the distribution. Thus the whole approach is farcical — at best — criminal in reality.

Just want to say thanks for an informative post, and the astonishingly high-quality discussion by practitioner-commenters.

One can only hope that in the age of the internet, business journalism will migrate towards authors who actually know what they are talking about.

I’m in the camp that believes models don’t kill people. People kill people.

But I have to say the discussion above has been fun reading.

VaR model flaws go much deeper than just the shape of asset price distributions because they rely on the Efficient Market Hypothesis (EMH) to model markets as efficient, always in equilibrium and self correcting where security prices simply react to random news releases. The EMH models day-to-day security price movements as “independent random variables” where purchase or sale is a “zero net present value transaction,” resulting in neither the buyer nor seller having an advantage. Consequently, security trading is modeled like casino gambling where price volatility determines risk assessment. The EMH concludes that because prices always fairly reflect intrinsic value and it is impossible to know what markets are going to do, consequently, fundamental analysis isn’t cost effective. The EMH model is both naïve and specious, i.e., it relies on incorrect premises and, therefore, cannot correctly model market risk. A discussion follows.

Markets are neither always in equilibrium nor self-correcting; rather, they are a discounting mechanism, i.e., professional traders look ahead and bid prices either up or down prior to earnings and/or economic news announcements. That is why prices can go up on bad news and down on good news. Security prices seem random from day to day; however, portfolio diversification cancels out unsystematic risk, so markets as a whole have only systematic risk. Using a diversified market portfolio, monthly rather than daily data, trend lines and conditional probabilities based on fundamental analysis correctly models systematic market risk, thereby disqualifying the flawed EMH’s reliance on securities’ price frequency and its use of price volatility as a proxy for portfolio risk, which is not sufficient information when hedging positions.

(Eric L. Prentis is the author of “The Astute Investor” and “The Astute Speculator,” http://www.theastuteinvestor.net )

As a “senior risk modeling quant” I have to second Eric’s post. It is just like that: many of the quants actually warned their management. But to a bank’s manager the information “Your bank might default with a 1% chance next year” doesn’t sound like a warning. They just understand: “With a probability of 99% I’ll get a nice bonus this year. Fine.” I’m not saying risk models are perfect, certainly not that all risk models are perfect, but the main trouble here was in the processes and the reaction to the models’ results.

The management simply is not interested in the 1% failure scenario, because it does not harm them personally. As I wrote above, even the shareholders do not bother too much about a 1% chance of default. They get their return according to the risk. The bondholders should be the losers in this game. But they are bailed out by the government, so in the end it’s YOU that has the trouble.

It is such a pleasure to read a post that brings out people to comment who seem well-versed in the specific subject matter.

As a general observation, too often so-called experts get away with spouting rubbish in the media because they are questioned by generalists. If only we could get a list together of specialists ready to question e.g. politicians and others when their chosen subject comes up, we’d end up with better scrutiny, better politicians etc and a better-informed public.

MrM and Irene,

Thank you for clearing up the issue with VaR not being tied to only normal distributions. Also, good insight into the problems with putting new and more complex risk management tools into practice. Regulators can’t be expected to keep up with highly paid quants at private institutions. This problem is a fine example of why answering the calls for “more regulation!” will not in itself fix our financial system.

Taleb’s interview on Charlie Rose and his screed against VaR should be juxtaposed (or linked) against this article. It is a blistering indictment.

Yes, a beautiful work indeed. Taking down mainstream misinformation is what blogs do best and this piece is the epitome of that service to the reading public.

Once again thank you and please don’t accept any offers from the big boys !

-self

I’m curious how much money is spent on money. It seems that as of lately, we have spent trillions of taxpayers’ money on money (read that as investment banks which I equate as money, since all they basically deal in, is money – please correct if I am wrong).

While I don’t know how well a barter system would work (not very well is my guess), spending money on money seems to offer little benefit to average joe, aside from loans, which is not happening now, and may not for a long time.

So let me throw this idea out, with the plea that it be looked at in a manner that is somewhat respectful. That idea is that everything be free. That is not to be taken literally, as people would still have to work. But the benefits would so outweigh the negatives, it is, IMHO, an idea whose time has come.

If anyone wishes to discuss this further (and I would greatly appreciate any discussion), please contact me at brts@charter.net.

Thanks, and Happy New Year to one and all. Bill

Agree another fine post with good comments.

Have always maintained a strong belief that:

1) A firm or individual involved in a financial vehicle must have its own skin in the game; must be easily and economically accessible to face the consequences; and must face damage to its reputation from those who can reasonably show they were lied to, etc.

(not be shielded by attorneys paid to shield & to lie as paid– for whatever side)

2) Layers of complex phrases serve to cloud key matters and deceive.

Instruments should be written in basic Business language not the legalese with the devils in the details. Overgrowth of the professional class and spreading of their tentacles has choked off the nation's genuine productive and export sectors.

independent

excuse if this posted twice in error

Thanks for the very useful comments and elaboration. Some further thoughts, and a question:

1. Correct that I did not go further with the discussion of kurtosis into the particular types (informally, peaked versus flatter distributions); I was merely trying to point out that Nocera had missed a fundamental issue. I probably should have added a parenthetical and a link.

2. Fair also to point out that VaR does not require a normal distribution. However, Nocera gave that impression in the piece, and it appears that some (many?) variants of VaR used in the field do assume a normal distribution (certainly, Dizard suggests that the versions foisted on investors do).

A question re regulators. I am not clear how regulators could use VaR models that differ from the ones deployed at the institutions themselves. In the US, banks are permitted to use their own risk management tools (although my impression is that they were encouraged pretty aggressively in the mid-late 1990s to use VaR as industry best practice).

If a regulator were to seek to use a variant of VaR different from that used at a particular firm, it would need to obtain a great deal of data provided by the firm in question and crunch the numbers themselves. Does that actually happen, and if so, where and under what circumstances?

Fabulous article, Yves, and many excellent comments.

My view is that VaR, just like anything else out there, is a tool. When a single tool is relied on to build an economy, we are in trouble. We see this over and over again. What’s lacking is the judgment in how to use the tool. And, possibly most importantly, the judgment in how to compensate people based on these tools.

Personally I would want to see not just a single VaR number but a range based on both probabilities (90%, 99%, 99.9%, 99.99%, 99.999%, etc.) and varying historical time periods (week, month, year, 10 years, 100 years). Then look at this as a scatter plot and apply judgment. Such things are immensely useful, I think.
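A grid of that kind is cheap to produce with historical simulation. The following is a minimal sketch, assuming a synthetic return history (normal draws with 1% daily volatility) and a much shorter set of levels and windows than the ones suggested above; all names and numbers in it are illustrative.

```python
import random

def historical_var(returns, level):
    """Historical-simulation VaR: an empirical quantile of the losses."""
    losses = sorted(-r for r in returns)
    k = min(int(level * len(losses)), len(losses) - 1)
    return losses[k]

rng = random.Random(1)  # fixed seed, illustrative only
history = [rng.gauss(0.0, 0.01) for _ in range(2500)]  # about 10 years of daily returns

LEVELS = [0.90, 0.99, 0.999]
WINDOWS = [21, 250, 2500]  # roughly a month, a year, ten years

var_grid = {
    (window, level): historical_var(history[-window:], level)
    for window in WINDOWS
    for level in LEVELS
}

for window in WINDOWS:
    row = "  ".join(f"{var_grid[(window, level)]:.4f}" for level in LEVELS)
    print(f"{window:>5} days: {row}")
```

One thing the grid makes visible: with only 21 observations the 99% and 99.9% figures both collapse to the single worst day in the window, so the extreme confidence levels only carry information when the history is long enough to contain such events.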

Or, use an equal weighting of a 90% or 99% VaR along with a careful analysis of what would happen in the remaining scenarios.

Or you could do like Buffett and concentrate on only doing things that don’t have a high cost outside the VaR probability at all, plus do well within the VaR probability. Seems to me that approach has worked quite well also :-).

@ Dan Duncan,

“EVERY single one of your posts is a comment on somebody else’s work. Why don’t you—for once—

just do one yourself?”

First, if you were a regular reader of Yves’ blog, Dan, you would find the occasional post that is entirely Yves. So stick around and you might learn something. At worst, if you already know everything, you will keep abreast of much of the current news related to the economy and financial markets. You could do far worse lurking on other sites.

Secondly, financial blogs are a derivative of other news posts be they economic reports, op-ed, or some other current event. In other words, scraping is part and parcel of financial blogging. Content has to come from somewhere.

Third, there has been far too much reliance on modeling, period. Too few people know how to use these models as risk management tools precisely because they don’t understand the strengths and weaknesses of these models. There is no perfect model, each has its Achilles heel and far too few people both inside the industry and outside know how to find that heel and know what to do.

The Mandelbrot citations from The (Mis)Behavior of Markets were a perfect segue for the VaR discussion in the NYT.

Fourth, there is a great deal of appreciation amongst her readers for the breadth and scope of Yves’ knowledge as well as the tireless efforts that she puts into this blog. She has been a wonderful black swan and voice in the wilderness, shining a light on these murky waters in this financial jungle. She deserves our salute. While you may not feel compelled to show your appreciation, at the very least show some respect in your criticism.

Thank you

@ Eric L. Prentis

Excellent elaboration on the subject of the flaws of EMH.

And you are spot on in your assessments of what markets truly are: discounting mechanisms. And that is precisely what professional traders do, buy and sell into bullish and bearish news several days, weeks, or more out in time. But it gets tougher to push out more than a quarter or two.

I very much enjoyed your article since I had just read Nocera’s piece (which Barry Ritholtz thought was wonderful). I also enjoyed all the comments. Not being a quant or a mathematician, but having read Taleb, the conversation was fascinating.

I am interested in what you and your readers think of general epistemological questions regarding these issues. As you know, Hayek was very much against the “scientistic” approach of mathematical models of economic behavior, such as proposed by the Keynesians. He pointed out that you couldn’t have a model big enough to predict behavior with any precision because the parameters would be too great–too many actors.

And if anyone wishes to read beyond Mandelbrot, Peter Bernstein’s “Against the Gods: The Remarkable Story of Risk” is an exceptional read on the his-story of risk.

This is to attempt a response to the latest question by Yves.

The 1996 amendment to the Basel Capital Accord introduced VaR as a metric for market risk. In order for a VaR model to be validated for the purpose of calculating regulatory capital it has to pass back-testing benchmarks.

A VaR engine includes several bits. One needs an algorithm to produce shock scenarios and a valuation algorithm for portfolios under each scenario. The prevailing practice among banks who need to pass backtesting benchmarks is to implement historical VaR with stress testing. Namely, they use historical datasets from specialized providers such as Murex to obtain historical shocks across about 10,000 different risk factors, apply the most recent 500 shocks to spot data and fully revalue portfolios under each scenario. Additional scenarios may be used for stress testing purposes.

This methodology is routinely validated by regulators as it conforms to the Capital Accord tautologically. Regulators do not venture into running risk numbers on their own.

Prop desks, buy side investors and hedge funds who require a VaR calculator for risk monitoring and reporting but not for the purpose of determining regulatory capital, are not constrained by the backtesting requirement. That's why they often use parametric VaR which is numerically more efficient. Namely, they make use of pricing models for finding price sensitivities on an instrument-by-instrument basis, extrapolate out of that information the portfolio sensitivities and then evaluate a 10-day P&L assuming a certain distribution for risk factor returns. Normal distributions are convenient from the numerical viewpoint. But, all considered, it is nearly costless to assume a fat-tailed distribution such as a multivariate Student-t. So that is also a common practice.

Regulators do not stress model consistency and avoidance of model risk. Hence one often sees VaR evaluated by means of simplified models which are not the same ones used for pricing and mark-to-market. Often, models used for risk management are simpler and faster for engineering considerations. Unfortunately, pricing models for mark-to-market are not asked to be realistic from an economic standpoint. Priorities there are conformity with "street standards" as this helps with deal flow. Models used in risk management engines are considered acceptable if, on a portfolio basis, they don't deviate much from the pricing models used for mark-to-market purposes, at least on average on large portfolios. Finally, for buy-side applications, pricing models used in VaR calculators are the most pedestrian imaginable.
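A stripped-down version of that comparison is sketched below for a single risk factor. Everything specific in it is an assumption made for illustration: the linear (delta) exposure, the 1% daily volatility, the square-root scaling to 10 days, the choice of 4 degrees of freedom, and the treatment of the 10-day factor return as itself following the chosen distribution. To stay within the standard library, the Student-t quantile is estimated by simulation rather than taken from a closed-form routine.

```python
import math
import random
from statistics import NormalDist

LEVEL = 0.99
DF = 4                      # hypothetical degrees of freedom for the fat-tailed case
HORIZON_DAYS = 10
DAILY_VOL = 0.01            # hypothetical daily volatility of the risk factor
DELTA_EXPOSURE = 1_000_000  # hypothetical linear sensitivity, in dollars

def unit_variance_student_t(rng, df):
    """One Student-t draw rescaled to variance 1 (requires df > 2)."""
    z = rng.gauss(0.0, 1.0)
    chi2 = rng.gammavariate(df / 2.0, 2.0)  # chi-square with df degrees of freedom
    t = z / math.sqrt(chi2 / df)
    return t * math.sqrt((df - 2) / df)

def empirical_quantile(xs, level):
    ordered = sorted(xs)
    k = min(int(level * len(ordered)), len(ordered) - 1)
    return ordered[k]

rng = random.Random(7)  # fixed seed, illustrative only
draws = [unit_variance_student_t(rng, DF) for _ in range(100_000)]

# Dollar volatility of the 10-day P&L; both distributions share this volatility.
scale = DELTA_EXPOSURE * DAILY_VOL * math.sqrt(HORIZON_DAYS)
normal_var = scale * NormalDist().inv_cdf(LEVEL)
student_t_var = scale * empirical_quantile(draws, LEVEL)

print(f"10-day 99% VaR, normal:    {normal_var:,.0f}")
print(f"10-day 99% VaR, Student-t: {student_t_var:,.0f}")
```

With the volatility held fixed, the only thing that changes between the two numbers is the quantile multiplier, which is why swapping in a fat-tailed distribution costs so little computationally.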

In regard to normality, Nocera’s good article only links (correctly) the origin of VaR to the normality assumption. Today, it is well understood that VaR not only does not require normality, but VaR does not require any (parametric) distributional assumption at all (i.e., historical and forward-looking simulations, such as Monte Carlo and bootstrapping, do not rely on parametric distributions). And, to support the first Eric’s *excellent* point, credit VaR and opRisk *never* assume normality. Nocera may not cite all the problems but he does cite a major benefit (it’s a common yardstick) and maybe the major drawback (it says nothing about the extreme tail). In practice, much of the actual work around VaR takes for granted the idea that asset returns are typically non normal.

In regard to regulations, if you mean Basel II, banks typically look at (at least) two metrics: one for their “true” economic capital and another to comply with “regulatory capital.” Under advanced Basel II, the short version is, banks need to hold capital for credit (one-year VaR @ 99.9%), market risk (ten day VaR @ 99.0%) and opRisk (one-year VaR @ 99.9%).

While regulators do use (impose) a credit risk model on banks, they do not impose a model for either market or (especially) OpRisk. This illustrates why VaR, as Nocera correctly says, “isn’t one model but rather a group of related models that share a mathematical framework.” In regard to market risk, rather than impose a specific model, regulators insist that banks meet a stringent set of criteria, and more importantly maybe, the bank must regularly back-test their VaR model for accuracy. And, indeed they will obtain a good deal of data from banks, in any case, due to pillar two.

@ Eric Prentis and John,

You may be right about the flaws of EMH but that does *not* implicate VaR. VaR is willing to accept all sorts of assumptions about the evolution of asset prices; e.g., if we want to implicitly endorse EMH by scaling by the square root rule, we can do that; but we can model non random walk processes too. I think VaR is the lightning rod for modelling challenges generally. It may be true that we can’t model asset prices (uh oh) but that implicates many financial models, not VaR per se.
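For readers who have not seen the square root rule, a small sketch follows. The first function scales a one-day VaR to a longer horizon assuming independent, identically distributed returns (the random-walk case). The second, an illustrative alternative rather than a standard named method, assumes daily returns follow a stationary AR(1) process with lag-one autocorrelation `rho`, which changes the scaling factor. Both function names are made up for this example.

```python
import math


def sqrt_rule_var(one_day_var, horizon_days):
    """Scale one-day VaR to a longer horizon under i.i.d. returns."""
    return one_day_var * math.sqrt(horizon_days)


def ar1_scaling_factor(horizon_days, rho):
    """Volatility scaling factor when returns have AR(1) autocorrelation rho.

    Variance of the sum of T returns, in units of the one-day variance:
        T + 2 * sum_{k=1}^{T-1} (T - k) * rho**k
    With rho = 0 this collapses to T, i.e. the square root rule.
    """
    t = horizon_days
    variance_ratio = t + 2.0 * sum((t - k) * rho ** k for k in range(1, t))
    return math.sqrt(variance_ratio)


if __name__ == "__main__":
    print(sqrt_rule_var(1.0, 10))          # random-walk scaling
    print(ar1_scaling_factor(10, 0.0))     # same thing, via the AR(1) formula
    print(ar1_scaling_factor(10, 0.2))     # positively autocorrelated returns
```

Positive autocorrelation makes the multi-day figure larger than the square root rule suggests, which is the sense in which the scaling choice quietly encodes an assumption about how prices evolve.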

Excellent review of Nocera’s painfully-stupid article. Thanks for that great work, and all your other posts.

Fwiw, my favorite Nocera quote from the article is this:

Taleb’s ideas can be difficult to follow, in part because he uses the language of academic statisticians; words like “Gaussian,” “kurtosis” and “variance” roll off his tongue

Sounds pretty clever, until one realizes that the main point Taleb makes in TBS (i.e., Mediocristan vs Extremistan) is not one involving the even-numbered moments (e.g., variance, kurtosis), but about the most important odd moment, which is properly referred to as “skew”. That’s a word he never mentions in his entire article.

So apparently Nocera thinks that the term “skew” is something that normal human beings cannot understand and that belongs only in academic discourse. Perhaps that’s because “skew” is an apt description of what he does for a living…

I liked the Nocera article. It answered a bothersome question: how can Taleb’s arguments be understood by the common folk but for some reason be beyond professional risk managers and PhDs? Nocera says the professionals are fully aware of Taleb’s points. Taleb is attacking strawmen presumably for entertainment value.

Without this article the managers at Goldman Sachs might have gone down in history as peculiarly insightful people that saved the firm by bravely ignoring the models that held everyone else mesmerized. It turns out they made money because they paid attention to a model that everyone else deliberately ignored.

I always thought that the EMH is exactly that the market is a discounting mechanism. A classic MBA Finance problem is: Company X announced that their earnings dropped by 5%, yet the market value of the firm rose by 10%. How is that consistent with the semi-strong version of the EMH?

The answer is ‘Because the market expected that the earnings would drop by 15%, so a 5% drop is good news, causing the price to rise.’

I am not defending the EMH here or arguing that it is true in reality, but instead saying I don’t understand how the discounting mechanism point is inconsistent with the EMH. So I am confused, not arguing. Maybe I just don’t know what you mean by the discounting mechanism.

Goldman Sachs might have gone down in history as peculiarly insightful people that saved the firm by bravely ignoring the models that held everyone else mesmerized. It turns out they made money because they paid attention to a model that everyone else deliberately ignored

You can’t be serious about GS, I hope, for your sake?

There’s a third problem not mentioned that I think is more important than skew, or leptokurtosis, or whether Monte Carlo simulation corrects Gaussian errors, which is, “the data are usually but not always uncorrelated; worse yet, the data are HIGHLY SERIALLY CORRELATED in the tails, precisely where it hurts the most”.

I’m not a VaR expert, but it seems to me that there should be two separate VaR calculations: one for typical times and one for the extreme moments when everything but treasuries becomes illiquid and correlations go to ONE. Of course, the second VaR calculation would clearly have shown that levering an investment bank 30-1 was sheer madness and would have had to have been banned, forthwith, else the bonus pools never could have been maintained . . . . . .
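A back-of-the-envelope way to see why the "correlations go to one" case matters: portfolio volatility, and hence any volatility-based VaR, depends heavily on the assumed correlation between positions. The sketch below uses a single common correlation `rho` between every pair of assets, which is a simplifying assumption made only for illustration; the weights and volatilities are hypothetical.

```python
import math


def portfolio_vol(weights, vols, rho):
    """Portfolio volatility with one common pairwise correlation rho.

    variance = sum_i sum_j w_i * w_j * s_i * s_j * corr_ij,
    where corr_ij = 1 on the diagonal and rho elsewhere.
    """
    variance = 0.0
    for i, (wi, si) in enumerate(zip(weights, vols)):
        for j, (wj, sj) in enumerate(zip(weights, vols)):
            corr = 1.0 if i == j else rho
            variance += wi * wj * si * sj * corr
    return math.sqrt(variance)


if __name__ == "__main__":
    weights = [0.25, 0.25, 0.25, 0.25]   # hypothetical equal-weight book
    vols = [0.20, 0.20, 0.20, 0.20]      # hypothetical annual volatilities
    print("typical times (rho=0.2):", portfolio_vol(weights, vols, 0.2))
    print("crisis (rho=1.0):       ", portfolio_vol(weights, vols, 1.0))
```

With `rho = 1` the diversification benefit disappears entirely and portfolio volatility is just the weighted sum of the individual volatilities, so a VaR calibrated to typical-times correlations understates the crisis figure.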

I think if I read “engineering” once more in this context I’ll probably throw up a little in my mouth. Here are a couple of starting points for thinking about modeling:

1. Markets are not physical systems. They are complex adaptive human social systems.

2. Markets are contingent systems that have histories, and those histories are relevant to assessing their current state; time is not reversible. However, financial engineering either uses time reversible models or models in which time is thrown away (distributional stats).

3. Markets are non-stationary. Their properties change through time without warning or foreshadowing.

4. The various flavours of VAR differ mainly in how they select the sample from which risk is to be estimated. Anyone know of a flavour that selects samples in a way that takes into account changes in the market system, that is, a sample-selection methodology that is sensitive to the current dynamics as distinguished from those a year or two ago?

I love this blog. Sometimes though I think the indignation is misplaced. The comments have already touched on how VaR does not have to be parametric. For example, for the input, just look at the last 1, 2, 5, 10 years of observations, and look at the 99th, 2*99th, 5*99th, 10*99th worst observation.

I am an undergrad and we learned this in an introductory course — which is why I find the indignation perhaps a little over the top.

Wonderful bit of point-counter-point. Only thing missing is someone saying Jane you ignorant s&#t, for comic relief.

One more example of man-made complexity/efficacy gone horribly wrong. If humans lived a thousand years or more we would end this type of self-inflicted pain, due to its repetitive and destructive nature.

You cannot model human behavior (repeat a million times in your head). You can identify gross traits in individuals and groups, but you cannot factor in acts of God (in the legal sense) or all the decisions humans will make as individuals or groups, and expect a tool to predict its outcomes with any reliability.

Some minds have put forward the idea, that when we have enough computer capacity, we can time travel in a way, by generating virtual time, but input will always be the limiting factor (computer software will decide the massive factors required/ AI program).

The application of higher mathematics to finance is an abomination of its truth. Scientists in worthy projects understand their work is in the discovery of tangible facts and in the realization that it will be reworked as new information is collected and the theory is reworked over and over.

Whereas the vaporous money markets are nothing but snake oil and their sellers; without the sellers there would not "be a product". Their product amounts to the atomic weight of the electrons (1/1836 of the mass of the proton) present in electricity used (Eco friendly Hydrogen/hydro generation lets say with H#1 having only one electron) to store it in the cloud.

Banks have even invested in cyber communities (virtual universes, where people create assets, homes, landscapes, worlds). One wealthy Asian woman had 20 people working for her in this area, to create asset wealth that could be traded, sold or bartered for real money. Talk about wealth out of thin air.

It seems the measurement was created before the object in relationship to synthetic investment and here we stumble around talking about how to improve, disprove measurement tools in regards to this abomination.

My hope is this thing blows sky high. Then we can start over with productive works built on discovery and rewarding beneficial acts, rather than feeding the machine built by those that would subject us to their needs, out of their mental dysfunction.

Darwin's "survival of the fittest" quote as used in a post above is a misnomer; it was never meant to be used as a Draconian enabler. Good science used by people for destructive selfishness.

Skippy

I have sent a link to this discussion to Mandelbrot. For those who may not know, he and Taleb are close. There was a joint interview of them on The News Hour recently. In a recent conversation with me, Benoit wanted to know if the recent crisis will change what is being taught in B-schools and economics departments. Since I am in a law school I was not sure what was going on there. I did recently present a paper at a finance conference at Yale and got the impression the crisis was happening in another universe.

Any insights on this angle from readers here?

Does the government do something similar?

one out of many definitions of Ponzi Scheme is:

transfer liabilities to unwilling others

fake accounting: no mark to market

One Transfer is Social Security.

http://www.nber.org/papers/w14427

Implicit government obligations represent the lion’s share of government liabilities in the U.S. and many other countries. Yet these liabilities are rarely measured, let alone properly adjusted for their risk.

http://people.bu.edu/kotlikoff/The%20True%20Cost%20of%20Social%20Security,%20September%202008.pdf

One acknowledged criticism is that any real attempt to sell would swamp all the markets!

If there was a flu pandemic like 1918 causing ‘early retirement’ then the social security system does not appear to have any precautions against this scenario.

Founding Fathers said about liabilities:

“No Taxation without Representation.”

Of course, if the government does not define it, it is not a crime .. for the government at least is exempted.

Three trillion or ten trillion dollar Iraq War?

http://www.salem-news.com/articles/november172008/hank_trillions_oped_11-17-08.php

http://blogs.iht.com/tribtalk/business/globalization/?p=827

Today the US trade deficit establishes a new record nearly every year, while public debt is approaching ten trillion dollars and growing rapidly.

Three Trillion Dollar War

http://www.democracynow.org/2008/2/29/exclusive_the_three_trillion_dollar_war

Should veterans have ‘gulf war syndrome’ or ‘depleted uranium dust poisoning’ they may have to ‘retire early.’

Alzheimer’s and Parkinson’s disease are skyrocketing among even the ‘middle aged.’

History shows that war tends to use ‘almost FAKE’ accounting.

Even the fleas have fleas and ponzi like schemes.

To RBH:

I think you made an absolutely perfect case for better engineering!

You say we need models that embed market regime shifts endogenously and are realistic. I agree, that’s precisely the point.

Current models don’t do that. Current models need to be analytically solvable or else the numerical analysis is too hard to implement. Realistic models can certainly be conceived but we need to develop the engineering to support them!

The only reason why banks could go along with bare-bones models is because of the stabilizing effects of super high leverage that dampened the natural volatility and hid the economics away. Now that cowboy finance goes out the window, we will certainly be using more informative models.

I've posted this on other blog forums, but indeed most of the problems with VaR as a risk measure come from the fact that it assumes a normal distribution of returns, which turns out not to be the case in reality.

If you're interested in Fat-Tailed VaR you may check this daily Fat-Tailed back-Test VaR results of different equity indices and see the difference:

http://www.finanalytica.com/?p=2&lm=50

Note that I am not trying to recommend buying the product from the link, but rather wanted to illustrate the differences between the two concepts, i.e. Normal vs Fat-Tailed VaR.

Unfortunately value at risk models do not take account of the effects and biases (disturbances) of Ponzi schemes over the long run and in asset prices. Unless all information about a Ponzi scheme in place is reflected in prices, there is no point in seeking a particular distribution. And if information about a Ponzi scheme is reflected in prices, statistics is impotent and there is no point in discussing it…

Dan Duncan said: “EVERY single one of your posts is a comment on somebody else’s work. Why don’t you—for once— just do one yourself?”

1) That’s how the blogosphere works. And, frankly, that’s how academia works, and how journalism works. Rarely do we see a piece of original journalism. At least Yves and blogs generally are more up front about it.

2) Why should our thought-space be dominated by the self-defined limitations of journalism, i.e. not academic and easy to digest? And why should we respect journalists for maintaining these limitations? If Nocera is crying in his beer because of what Yves wrote, I say Good. Let’s put the NYTimes out of business and sustain in our culture a cloud-like intellectual infrastructure (p2p).

Dan said: “Try going into a discussion on kurtosis straight up….Yes, Yves could obviously write a very illuminating piece on this as she is a very good writer, but it would be much more difficult to lay the foundation, and, depending on the readership, it might be pretty damn boring.”

3) I suspect you’re a big fan of Wikipedia. Well, not everyone can swim at those depths!

YVES: Thanks for this entry, and for the comments which have set useful signposts for my future research into risk management.

An interesting post Yves, and very high quality comments as well.

After re-reading Joe Nocera’s article in light of the comments here, something struck me as missing from this conversation, which stands out if you look at the following quote from the article and then unpack some of the assumptions that are buried in it: