Palantir Technologies has been described as a company that builds the kind of information infrastructure intelligence agencies would design if they had Silicon Valley’s best software engineers. Founded in the shadow of 9/11, the firm has grown into a critical provider of data analysis systems for governments and corporations around the world. Its platforms integrate fragmented, messy, and sensitive data on designated threats, transforming it into operational intelligence.

But Palantir’s technology is inherently Janus-faced. The same software that allows governments to defend against terrorism or respond to pandemics can also be deployed to monitor political opponents, quash dissent, and entrench authoritarian control. In this sense, Palantir represents a broader dilemma of the digital age: the dual-use character of advanced information infrastructure. Growing controversy surrounds Palantir, as the harmful potential of its work becomes increasingly evident.

This article examines the dual potential of Palantir’s capabilities. It traces the company’s origins, explains the architecture of its platforms, and evaluates how those systems can be constructive in outward-facing defense or destructive when turned inward against domestic populations.

Origins

Palantir was founded in 2003 by Peter Thiel, Alex Karp, Stephen Cohen, Joe Lonsdale, and Nathan Gettings. The company’s earliest funding came not just from venture capital but from the CIA’s investment arm, In-Q-Tel, reflecting its intended role in national security. The name itself, borrowed from Tolkien’s The Lord of the Rings, refers to the “seeing stones,” a metaphor for omniscient observation.

From the outset, Palantir diverged from Silicon Valley’s consumer-tech model. Instead of seeking millions of users, it cultivated a small set of highly sensitive clients: intelligence agencies, defense departments, and later, major corporations. Its development model centered on embedding “forward deployed engineers” within client organizations. These engineers tailored Palantir’s platforms to messy, real-world data environments, effectively co-developing solutions on site.

This model carries profound dual-use implications. Close integration with state institutions means Palantir’s systems inherit the missions and priorities of their users. When deployed in democratic contexts for external defense, they can serve protective functions. But embedded within domestic security organs, they can equally serve to normalize mass surveillance and political control.

Palantir Technical Architecture

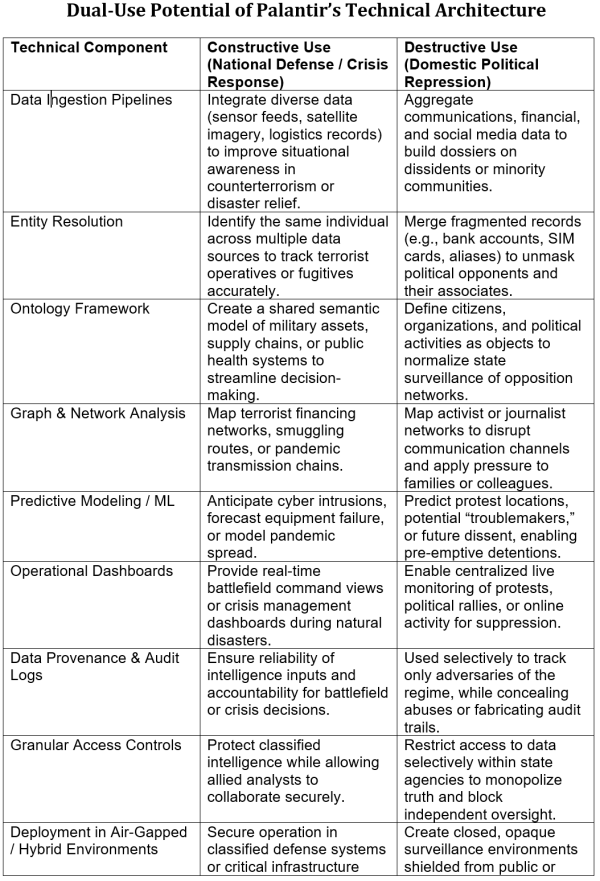

At the heart of Palantir’s duality is a technical architecture that is itself neutral. The product platforms are designed to ingest, harmonize, analyze, and operationalize heterogeneous data, regardless of its source or intended application. The architecture of the Palantir Gotham platform, sold to defense and intelligence clients, incorporates these key features:

- Data Ingestion Layer – Capable of absorbing structured, semi-structured, and unstructured data from sensors, databases, documents, and social media feeds. Provenance and lineage are tracked to maintain auditability.

- Ontology Framework – Provides a semantic model that maps raw data into objects (entities) and relationships, enabling analysts to work at the level of real-world concepts rather than tables and fields.

- Analytical Layer – Supports graph analytics, machine learning pipelines, and scenario modeling. Users can search across silos, uncover hidden links, and test “what if” scenarios.

- AI Integration – Embeds machine learning pipelines and interfaces (including Palantir AIP) for predictive modeling, natural-language queries, simulation of scenarios, and real-time decision augmentation.

- Operational Layer – Offers dashboards, collaboration tools, and alerts to translate analysis into decisions and actions in near real time.

- Security and Deployment Model – Enforces granular access controls, maintains detailed audit logs, and can run in classified, air-gapped environments or modern cloud infrastructure.

These components are not intrinsically beneficial or malign. They provide capabilities. Whether they serve as a guardian of national security or as a tool of political repression depends entirely on how governments choose to deploy them.
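To make the ingestion, ontology, and analytical layers listed above more concrete, here is a minimal toy sketch in Python. It is not Palantir's code or API: the `Ontology` class, the `ingest` and `find_path` functions, and the record schema (`owns` and `call` records) are all hypothetical names invented for illustration. The sketch only shows the general idea of mapping raw records from separate sources into typed objects and relationships, remembering which source each object came from, and then searching the resulting graph for a chain of links.

```python
from collections import defaultdict, deque
from dataclasses import dataclass, field


@dataclass
class Ontology:
    """Toy semantic model: typed objects, relationships, and provenance."""
    kinds: dict = field(default_factory=dict)  # object id -> kind
    links: dict = field(default_factory=lambda: defaultdict(set))  # id -> {(neighbor, relation)}
    provenance: dict = field(default_factory=lambda: defaultdict(list))  # id -> [source names]

    def add_object(self, obj_id, kind, source):
        # Register the object and record which source mentioned it.
        self.kinds[obj_id] = kind
        if source not in self.provenance[obj_id]:
            self.provenance[obj_id].append(source)

    def add_link(self, a, b, relation):
        # Relationships are stored in both directions for easy traversal.
        self.links[a].add((b, relation))
        self.links[b].add((a, relation))


def ingest(ontology, records, source):
    """Map raw records (hypothetical schema) onto ontology objects and links."""
    for rec in records:
        if rec["kind"] == "owns":
            person = f"person:{rec['person']}"
            phone = f"phone:{rec['phone']}"
            ontology.add_object(person, "person", source)
            ontology.add_object(phone, "phone", source)
            ontology.add_link(person, phone, "owns")
        elif rec["kind"] == "call":
            caller = f"phone:{rec['caller']}"
            callee = f"phone:{rec['callee']}"
            ontology.add_object(caller, "phone", source)
            ontology.add_object(callee, "phone", source)
            ontology.add_link(caller, callee, "called")


def find_path(ontology, start, goal):
    """Breadth-first search for a shortest chain of links, or None."""
    if start not in ontology.kinds or goal not in ontology.kinds:
        return None
    previous = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor, _relation in sorted(ontology.links[node]):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None
```

Ingesting a registry file that ties two people to two phones, and a separate call log that ties the phones to each other, lets `find_path` connect the two people even though neither source mentions both. The code itself has no notion of whether the people being linked are insurgents or protesters, which is the sense in which the article calls the architecture neutral.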

Business Model: Integration with Clients in Price-Insensitive Niche

Palantir departs from the standard software-as-a-service model. Instead of selling turnkey licenses to a broad market, it secures long-term contracts with a narrow set of high-value clients: governments, militaries, and major corporations. Its engineers, known as forward deployed engineers, embed directly within these organizations, adapting the platform to fragmented, often classified data environments and co-developing operational workflows with client personnel.

The cleverness of this strategy lies in targeting domains that are intrinsically not price sensitive. For intelligence agencies, defense departments, and crisis-response institutions, the stakes are existential: terrorist attacks prevented, wars won, pandemics contained. In such contexts, effectiveness matters far more than marginal cost. By combining a generic ontology-driven architecture with domain-specific tailoring and embedded engineering, Palantir makes itself indispensable at the command layer of decision-making institutions.

This model arose because the environments where Palantir operates—classified networks, fragmented legacy systems, ad hoc intelligence databases—cannot be standardized from the outside. Instead, engineers must adapt Palantir’s ingestion pipelines and ontologies to the unique data landscape of each client.

This close integration is both the strength and the risk of Palantir’s model. It ensures the platforms are highly tailored and operationally relevant, but it also means Palantir becomes deeply entangled in the objectives and methods of its clients. If those objectives shift from defending national security to repressing dissent, the technology follows along, and its dual potential is realized not in the abstract but in lived governance.

Palantir in Gaza

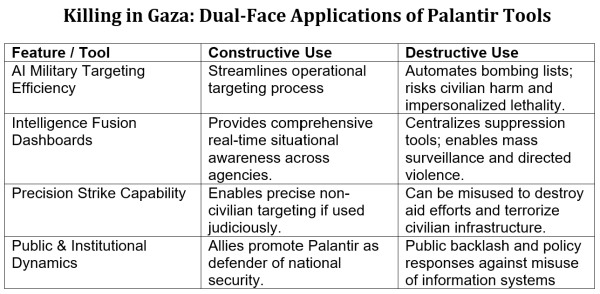

The consequences of Palantir’s dual-use potential are nowhere more visible than in the war in Gaza. Palantir platforms offer Israel’s defense establishment the ability to integrate vast amounts of intelligence data, generate a unified operational picture, and support military decision-making against a non-state adversary. However, these same tools are implicated in a campaign that has drawn widespread condemnation for disproportionate civilian harm and potential violations of international law.

Palantir’s role in Israel has been formalized through a 2024 strategic partnership with the Ministry of Defense. Its platforms reportedly assist in consolidating sensor data, drone surveillance, satellite imagery, and intercepted communications into shared operational dashboards. In principle, such capabilities can enhance the precision of military action, allowing commanders to discriminate between combatants and non-combatants and reduce operational blind spots. For a state confronting irregular adversaries who conceal themselves in dense civilian environments, the ability to fuse data into a coherent ontology is a critical defensive advantage.

Yet the same integration that improves military effectiveness can also facilitate targeting practices that raise serious ethical and legal concerns. Independent investigations have described the use of AI-assisted systems such as “Lavender” and “Gospel” by Israeli forces to generate thousands of strike recommendations at a pace previously impossible.

Although these systems were developed within Israel, Palantir’s platforms can serve as the infrastructure layer enabling their integration into broader intelligence workflows. The result, according to critics, is a system that automates the expansion of target lists, blurs human accountability, and facilitates the scale of bombardment witnessed in Gaza.

Reports of strikes on marked humanitarian convoys and medical facilities raise the question of how tools designed for intelligence precision can be turned toward suppression of civilian relief. UN experts and human rights groups have named Palantir among the companies “profiting from” the conflict by supplying AI capabilities that underpin operations in Gaza. Investor responses, such as the divestment by Norway’s Storebrand, highlight how corporate entanglement in contested wars can damage both ethical credibility and shareholder value.

Palantir’s Gaza involvement illustrates the dual-use paradox in sharp relief. The ontology-driven architecture and embedded engineering model that make its platforms indispensable for counterterrorism also make them adaptable to rapid, large-scale target generation in urban warfare. The same dashboards that can coordinate relief logistics in a natural disaster can orchestrate bombing campaigns. In Gaza, Palantir’s role is not hypothetical—it is a live demonstration of how data infrastructure can be simultaneously protective and destructive, depending on the political choices of its client.

The Domestic Risk of Misapplied Palantir Technology

Palantir’s platforms were born in the crucible of post-9/11 counterterrorism, but their architecture is not bound by mission. The same data ingestion pipelines, ontologies, and dashboards that help analysts track insurgent networks abroad could be redirected inward against a state’s own population. In the United States, where Palantir already provides tools to agencies such as ICE, the FBI, and local police departments, the risk of misapplication is more than theoretical.

If applied in a context of political polarization or authoritarian drift, Palantir’s systems could enable:

- Mass Surveillance of Dissent: Integrating phone metadata, financial transactions, and social media into persistent profiles of activists, journalists, or political opponents.

- Predictive Policing of Protest: Using machine learning models to forecast where demonstrations will occur and who might attend, justifying preemptive crackdowns.

- Network Suppression: Employing graph analytics to map the associates of targeted groups, widening the circle of intimidation and making political activity personally costly.

- Enabling “Social Credit” Schemes: Applying selective sanctions, such as travel restrictions and employment blacklisting, to targeted individuals based on their political activities.

Palantir’s powerful information management tools could thus be employed in an infrastructure of domestic political control. The very features Palantir markets as safeguards—granular access controls, audit logs, and secure provenance—are only as effective as the institutions and personnel that govern their use. In the absence of robust legal frameworks and independent oversight, these technical protections offer little defense against deliberate misuse.

Conclusion

Palantir’s technology can act as a national security shield, defending against external threats and crises. But without legal safeguards, it can just as easily become a cage, controlling domestic political life through sophisticated means of surveillance. The question is not whether Palantir has built powerful tools. The question is whether the United States and other nations can ensure that such tools remain only tools of defense against foreign threats, and not become instruments of domestic repression.

> At the heart of Palantir’s duality is a technical architecture that is itself neutral.

Langdon Winner, who posed the question in his article ‘Do artifacts have politics?’, wrote a little book of essays on the topic, ‘The Whale and the Reactor’.

A couple of clips:

I’m no longer sure it is valid to consider technologies as neutral. They shape society even as they are shaped by society.

I would go further: it is a mistake to deplore the “misapplication” of technologies. In fact, the excellent tables in the articles highlight the quandary: the “destructive uses” of Palantir tools are intrinsically no different from the “constructive uses”, since the features and capabilities of the system are used in exactly the same way. It is just that some governing bodies / organizations / segments of the population have different values when they judge the specific applications.

In that sense, what is happening in Gaza is exemplary: Palantir is not diverted from “constructive” to “destructive” uses — it is used exactly for what it is: a system to collect data, structure it into information, analyse it, make inferences according to a model, and provide interactive decision tools to take action. Israelis (and many members of certain organizations in the USA and NATO countries) find the resulting application as Lavender and Gospel great, Palestinians (and lots of people around the world) do not.

To take another example, many find it disquieting that DNA analysis techniques may be used not for detecting highly debilitating genetic illnesses and allowing prospective parents to prevent an embryo from developing into a (most probably) disabled child, but rather to perform some eugenic selection of physical traits (such as eye colour), body strength, or even “intelligence”. But technologically, this is irrelevant: those tools enable the analysis of DNA, scanning for variants of genes, classifying them, and giving probabilities of associated physiological characteristics — and this is what is done in both situations.

We may investigate at length the interplay of values and technologies, and whether social values are actually reflected in those military / intelligence / law enforcement / commercial organizations or whether those organizations disregard them and endow applications with their own values, but there is another aspect we should consider: perhaps there are technologies that should simply not be used. Perhaps Palantir should not exist at all, whether for “constructive” or “destructive” uses, because these are utterly subjective, and slippery, value judgements, and the technology is too powerful.

Excellent points all – I’d just question whether Palantir’s tech even needs to be applied in Gaza at all, much less misapplied. It supposedly picks thousands of targets – how hard is it to just bomb everything that moves and most that doesn’t too, just in case? I’d say Palantir was more of a fig leaf than a necessary technology in Gaza that allows the Zionist entity to murder whomever it pleases.

I don’t know. IBM’s technology increased the efficiency of the Holocaust by enabling swift processing of a large quantity of information, and, if you get to the bottom of everything, that is also what Palantir etc. are doing in Gaza. But processing massive amounts of data using technology is, for what it’s worth, just a “routine” thing done by everyone in every context now.

AJP Taylor quipped that all auto accidents can be argued as having been caused by two things: the desire of people to go places fast and the invention of the wheel (his actual example was the internal combustion engine, but the point is really the same). Attributing things to “big causes” sounds profound, but that distracts us from what actually happened.

My view is that we are still the same hominids that lived in trees a few million years ago, except with bigger sticks. We do evil for much the same reasons as we did back then. We just have more capacity to do evil, because of the bigger sticks. While I don’t want to sound like I’m dismissing the changes too much (the harm that can be done is much greater after all), I’m not so sure the changes are quite THAT fundamental.

“without legal safeguards…”

There will be none, zero, nada…

Tis a brave new world.

I think that this apparently godlike surveillance will give the users a false sense of effectiveness and cause not only great harm, but also great mistakes. All this technology is being used by apparently unwise people who might have great intelligence, education, tools, and even goodwill but who, like much of our ruling class, their political servants, the nomenklatura, and finally the apparatchiks, are full of overconfidence and lack the ability to think critically.

If people are already turning their thinking over to the almighty AI, then what will happen with this? From the billionaires down to the cops at the local station, all will have some access to Palantir’s wonder weapons, which we will not have, to be used not only for public safety, but for whatever they deem is the greater good, and perhaps not by a human.

Every tool is a weapon if you hold it right.

Well balanced, Haig.

Just so. In the hands of Sauron, the palantír becomes the all-seeing eye of surveillance followed by repression. In the hands of Denethor, the palantír was limited and did not show a full spectrum of possibilities, only Sauron’s huge armies. Gandalf was reluctant to use the palantír and reveal himself to Sauron.

“In principle, such capabilities can enhance the precision of military action, allowing commanders to discriminate between combatants and non-combatants and reduce operational blind spots.”

Hopefully, the comment I would have made would not be antisemitic.

Excellent analysis of Palantir Technology.

Peter Thiel and many of the Tech Bros seem to be struggling with the nature of their own humanity.

Part of them appears, to me, to want to become AI, total control type Gods, while another part seems to at least flirt with the possibility that they are as broken as the rest of us and, on occasion, have glimmerings of their own longings for something besides big bucks and big power.

Two things:

1. There’s always a gap between what the press releases and the brochures say about the capabilities of a software tool, and the actual capabilities of the tool as it performs in operational environments. I’m pretty sure Palantir is an incredibly powerful tool, but I also doubt it’s the God-like, all-seeing, unerring, omniscient tool that Alex Karp in his verbose monologues likes to claim it is. I asked a question the last time Palantir was the subject of a similar post, which I’ll ask again: why hasn’t it been used to locate the hostages held by Hamas? We can speculate that the Israeli government doesn’t want the hostages found, and subsequently returned, because a major pretext for continuing the genocide would disappear, but that’s just speculation.

2. I suspect Karp, Thiel and others in their warmongering elite circles aren’t losing sleep over their product being “misused” as a domestic tool of suppression. These people have psychopathic impulses that mean they’re not shackled by such moral compunction. Karp himself has said the West has to win against its enemies by whatever means necessary (I’m paraphrasing), so it’s not a stretch to imagine that if, e.g., a groundswell of domestic opposition to foreign misadventures had to be crushed, he’d happily go along with that objective being achieved using his product. The US is nominally a democracy at home and a (waning) dictator on the international stage, so it’s only logical that as its star fades globally, its authoritarian gaze turns to its own domestic population and those of its vassals like the EU. That’s over 800 million people that have to be surveilled and monitored to keep a lid on dissent, rising discontent about falling living standards, “domestic terror” etc. With so much money to be made by Palantir being the tool of choice facilitating the formerly democratic West taking a fascist turn, Karp and Thiel will be right there pontificating about the preservation of Western civilization even as the average Westerner has the surveillance walls closing in on them.

Thanks, Thutmose!

As with AI destroying all or most of the desk jobs in the world, Palantir is a different reason I think (and hope) we’ll be forced by necessity to abandon tech.

Gaza is a Genocide, not a war, therefore, the only legal, and moral course of action is to stop it. Then prosecute the perpetrators, their allies and accomplices.

This springs to mind.

https://en.wikipedia.org/wiki/IBM_and_the_Holocaust

Excellent piece, Haig. But you perhaps misuse ‘borrowed’ in regard to Thiel’s use of the invented word ‘Palantir’.

Though entirely resistant to Lord o’ the Rings’ three volumes, I do appreciate copyright law.

Unless Thiel paid Tolkien’s estate a license fee to use the invented word, he is guilty of IP theft. Just like the AI bot scrapers, but this is a documented instance of such theft.

Did Tolkien’s estate accept payment and grant Thiel a license? If they did, that tells us where they are coming from.

Hobbits beware!

Thanks Haig. I’ll add a few scraps. All quotes taken from https://archive.ph/E8DWs this excellent New York profile from 2020.

1. I think it’s important to see Palantir’s birth as an outsourcing of John Poindexter’s Total Information Awareness program.

> Back in 2003, John Poindexter got a call from Richard Perle [BP: remembers this evil man from days gone by with a shudder], an old friend from their days serving together in the Reagan administration. Perle, one of the architects of the Iraq War, which started that year, wanted to introduce Poindexter to a couple of Silicon Valley entrepreneurs who were starting a software company.

> The firm, Palantir Technologies, was hoping to pull together data collected by a wide range of spy agencies — everything from human intelligence and cell-phone calls to travel records and financial transactions — to help identify and stop terrorists planning attacks on the United States.

> Poindexter, a retired rear admiral who had been forced to resign as Reagan’s national-security adviser over his role in the Iran-Contra scandal, wasn’t exactly the kind of starry-eyed idealist who usually appeals to Silicon Valley visionaries. Returning to the Pentagon after the 9/11 attacks, he had begun researching ways to develop a data-mining program that was as spooky as its name: Total Information Awareness.

> His work — dubbed a “super-snoop’s dream” by conservative columnist William Safire — was a precursor to the National Security Agency’s sweeping surveillance programs that were exposed a decade later by Edward Snowden.

> Yet Poindexter was precisely the person Peter Thiel and Alex Karp, the co-founders of Palantir, wanted to meet. Their new company was similar in ambition to what Poindexter had tried to create at the Pentagon, and they wanted to pick the brain of the man now widely viewed as the godfather of modern surveillance.

2. They got famous for “helping to catch Bin Laden” but in reality had no impact on the mission. If you google “Palantir Bin Laden” you’ll get plenty of fawning accounts, and Palantir hints at it coyly.

“That’s one of those stories we’re not allowed to comment about,” Karp once said in an interview.

But the reality is

No one I spoke with in either national security or intelligence believes Palantir played any significant role in finding bin Laden.

3. While one could argue the technology is neutral, the company is strongly not. It is explicitly culturally supremacist. They see/sell themselves as the vanguard of a civilizational war.

This kind of software is the reason I could never understand why China, Russia, and Iran invest so little in their own software. Iran’s top messaging app is WhatsApp. People blame sanctions for making it so hard, but that is just a cop-out: these are neoliberal governments that don’t believe the state should invest so much in the private sector or develop its own software. Luckily China didn’t go the way of Russia and Iran, but China is still years behind Windows because it dragged its feet for so long.