Seemingly contradictory processes are at work. We may have reached peak AI, at least in terms of growth in use of the seemingly dominant type, large language models, which are conflated with “generative AI” (sticklers are encouraged to clear up fine points in comments). Several key growth measures are stalling out or even falling. Yet a wave of high-profile employers like Amazon, UPS, and Walmart announcing big headcount cuts, citing AI implementation as the driver of the firings, says AI uptake is gaining steam. What gives?

And more important, even if AI is not performing all that well in terms of accuracy and reliability, that may not matter much as long as it is (barely) good enough for corporate executives to screw down further on workers, here in comparatively well-paid white collar roles, to the benefit of their bonuses and shareholders. While an AI bubble crash (and accompanying reports of AI not living up to its promise) may put this trend on pause or even in reverse, if this trend continues at its current pace and intensity, it will further damage the career and income prospects of college educated workers, most of all recent graduates, so as to be counter-revolutionary. It will upend the heretofore tacitly accepted responsibility of governments and the managerial classes to provide for an adequate level of employment and social safety nets in a system that requires most of the population to sell its labor as a condition of survival. It will also reverse the virtuous circle created by Henry Ford¹ of giving workers steady enough jobs at high enough pay levels that they could afford to buy the new products made possible by widespread electrification and the mass uptake of automobiles….including in due course suburban homes. And it will further erode class mobility, aka The American Dream.

The further thinning of the already increasingly precarious middle class will also greatly weaken social stability, particularly at a time when social safety nets are being gutted. Go long bodyguard services.

While we don’t have definitive answers on what is happening in Corporate America, particularly given the diversity of the perps, at least one motivation is to take advantage of the AI mania, still very much alive in the markets, and goose the stock by wrapping what is largely good old-fashioned work force squeezing/process re-engineering in the AI mantle (although CEOs are going to some lengths to depict AI implementations as pervasive). We may get a better grip on what is happening internally in the coming months. On the one hand, a well-publicized MIT study found that 95% of corporate pilots of AI did not pan out. On the other hand, there are more and more breathless stories of big employers and professional services firms reducing hiring, particularly of newbies, and even slashing white collar staff levels.

So is the way to square this circle that AI actually does not perform well, save as a weapon in class warfare?

The Corporate AI Firing Frenzy

Before unpacking the implications in more detail, let’s first look at the apparent magnitude of this trend. We’ve reported before that company leaders, in a sharp departure from historical practice, are touting running their companies with fewer warm bodies. This pursuit of corporate anorexia is long-standing (and extends to capex); we described it in 2005 in a Conference Board Review article, The Incredible Shrinking Corporation. The Wall Street Journal reported on this new enthusiasm in June, and Chief Executive Council affirmed it:

In today’s rapidly evolving business landscape, it’s no longer rare to hear leaders celebrate layoffs as a badge of honor. CEOs are openly touting staff reductions; not as last-resort damage control, but as strategic wins. Companies are embracing workforce slimming as a deliberate tactic to prop up profitability and signal AI-readiness to investors.

As reported by Wall Street Journal by Chip Cutter on June 27th, 2025.

CEOs Are Shrinking Their Workforces—and They Couldn’t Be Prouder

Big companies are getting smaller—and their CEOs want everyone to know it.

The careful, coded corporate language executives once used in describing staff cuts is giving way to blunt boasts about ever-shrinking workforces. Gone are the days when trimming head count signaled retrenchment or trouble. Bosses are showing off to Wall Street that they are embracing artificial intelligence and serious about becoming lean.

After all, it is no easy feat to cut head count for 20 consecutive quarters, an accomplishment Wells Fargo’s chief executive officer touted this month. The bank is using attrition “as our friend,” Charlie Scharf said on the bank’s quarterly earnings call as he told investors that its head count had fallen every quarter over the past five years—by a total of 23% over the period.

Loomis, the Swedish cash-handling company, said it is managing to grow while reducing the number of employees, while Union Pacific, the rail operator, said its labor productivity had reached a record quarterly high as its staff size shrank by 3%. Last week, Verizon’s CEO told investors that the company had been “very, very good” on head count.

Translation? “It’s going down all the time,” Verizon’s Hans Vestberg said.

The shift reflects a cooling labor market, in which bosses are gaining an ever-stronger upper hand, and a new mindset on how best to run a company. Pointing to startups that command millions of dollars in revenue with only a handful of employees, many executives see large workforces as an impediment, not an asset, according to management specialists. Some are taking their cues from companies such as Amazon.com, which recently told staff that AI would likely lead to a smaller workforce.

A new Wall Street Journal article recaps some of the recent headcount cuts, with Amazon’s layoff of 14,000 (initially mis-reported by Reuters as 30,000) driving a new set of press sightings:

The nation’s largest employers have a new message for office workers: help not wanted.

Amazon.com said this week that it would cut 14,000 corporate jobs, with plans to eliminate as much as 10% of its white-collar workforce eventually. United Parcel Service said Tuesday that it had reduced its management workforce by about 14,000 positions over the past 22 months, days after the retailer Target said it would cut 1,800 corporate roles.

Earlier in October, white-collar workers from companies including Rivian Automotive, Molson Coors, Booz Allen Hamilton and General Motors received pink slips—or learned that they would come soon. Added up, tens of thousands of newly laid off white-collar workers in America are entering a stagnant job market with seemingly no place for them…

A leaner new normal for employment in the U.S. is emerging. Large employers are retrenching, making deep cuts to white-collar positions and leaving fewer opportunities for experienced and new workers who had counted on well-paying office work to support families and fund retirements. Nearly two million people in the U.S. have been without a job for 27 weeks or more, according to recent federal data.

Behind the wave of white-collar layoffs, in part, is the embrace by companies of artificial intelligence, which executives hope can handle more of the work that well-compensated white-collar workers have been doing. Investors have pushed the C-suite to work more efficiently with fewer employees. Factors driving slower hiring include political uncertainty and higher costs.

Altogether, these factors are remaking what office work looks like in the U.S., leaving the managers that remain with more workers to supervise and less time to meet with them, while saddling the employees fortunate enough to have jobs with heavier workloads.

The cascade of restructurings has created a precarious feeling for managers and staff alike. It is also tightening the options for those who are looking for employment. Around 20% of Americans surveyed by WSJ-NORC this year said they were very or extremely confident that they could find a good job if they wanted to, lower than in past years.

Mind you, this is not the first time we have seen this movie, but this time, the production promises to be on an unprecedented scale. For instance, starting in the 1980s, big companies started greatly thinning their corporate centers. The leveraged buyout wave precipitated this shift. Raiders targeted over-diversified conglomerates, which tended to be over-manned at their head offices, breaking them up and reducing the need for big bureaucracies to ride herd on the operations. Many companies greatly trimmed the size of these activities to make themselves less attractive as prey. The rise of personal computers and improved management information systems facilitated this shift. It also became common simply to fire managers and require the survivors to do what had formerly been one and a half or even two jobs. Similarly, the outsourcing of tech jobs to foreign body shops and H-1B holders may not have reduced headcounts overall (Robert Cringely claims total manning increased) but did make it well-nigh impossible to land an entry level job in software at large companies, as over two decades of frustrated new graduates seeking advice at Slashdot attest.

For another summary (with a preview of broader implications, which we’ll discuss later), see this Breaking Points segment:

Signs of Weakening AI Demand

Given all the wondrous things AI is supposedly able to do, a slackening of demand would seem to reflect performance shortfalls. Admittedly these data points are indicative as opposed to dispositive:

Hartley, Jolevski, Melo, and Moore have been tracking GenAI use for Americans at work since December 2024.

They find that GenAI use fell to 36.7% of survey respondents in September 2025 from 45.6% in June.

I wonder if OpenAI, Google or Anthropic are seeing a similar decline… https://t.co/hjnss5u6vj

— Erik Brynjolfsson (@erikbryn) October 22, 2025

A must-read post by Gary Marcus contains more contra-hype sightings. For instance:

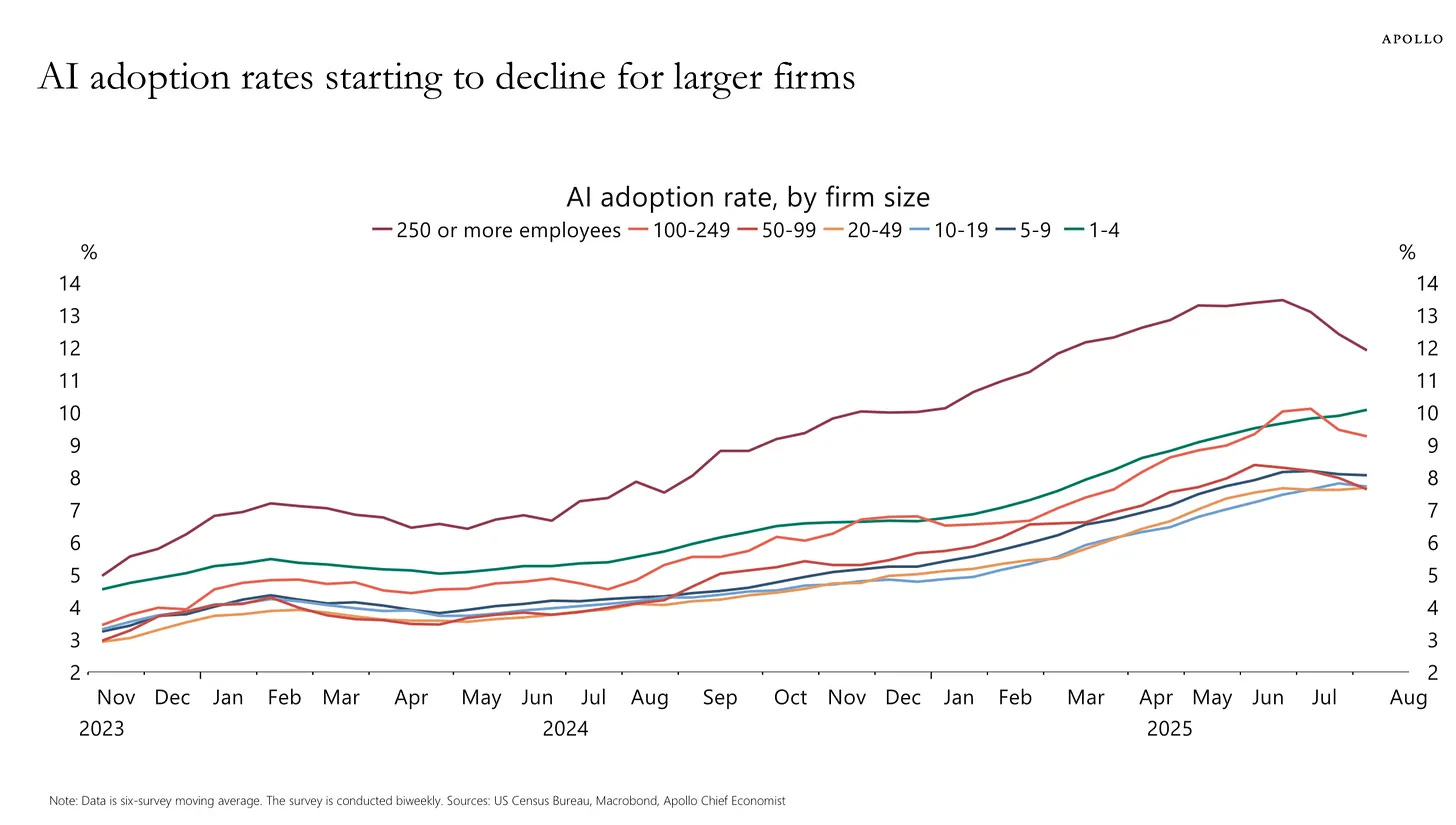

3. This also fits with this graph from Apollo Global Management’s Chief Economist Torsten Slok, based on Census Data, from early September:

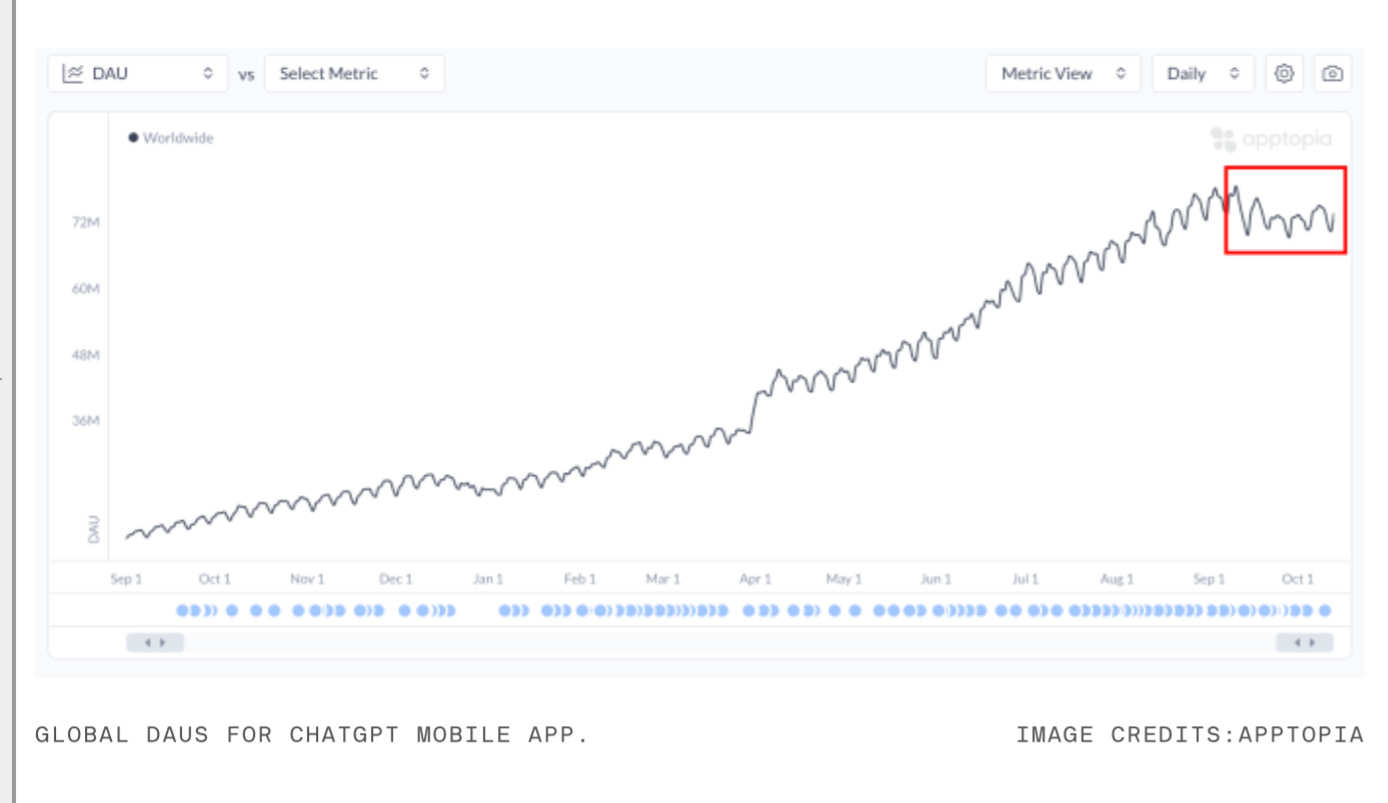

4. TechCrunch just reported that “ChatGPT’s mobile app is seeing slowing download growth and daily use” based on data from Apptopia

Even with these signs of more and more dogs rejecting the AI dogfood, the C Suite is still heavily populated with true believers. As we indicated above, AI might not have to work well to suit their purposes. In most types of goods and services, crapification has become the norm. Customer service and product quality too often stink. So the top brass may feel, particularly in a world of too many big incumbents (as in few alternative providers), that they can degrade their offerings further at no/minimal risk.

What Happens as the Gutting of the Middle Class Accelerates?

Cynics might point out that office workers are finally being hoist on their own petard. They participated in, or at least did not object to, the outsourcing and offshoring of US jobs. We have pointed out that in many, arguably most, cases the business case was weak, even before factoring in the increase in risk due to greater complexity and dependence on outside contractors. But it made perfect sense if you viewed outsourcing as mainly intended to increase the pay and authority of executives and middle managers at the expense of hourly/lower level workers.

One of the big rationalizations of the AI boom, from an economic perspective, is that the implementation of new technology increases prosperity on a broad basis. Aside from ignoring externalities like pollution and the fact that these big changes produce winners and losers, and the winners seldom ameliorate the damage done to the losers, the idea that incomes rise isn’t even always true. The Industrial Revolution in England ushered in at least a generation (and according to some studies two generations) of declining living standards. Victorian workhouses were one indicator of how bad conditions became for those in the lower orders who failed to find employment.

In Europe, living standards have already been falling due to the sanctions-induced energy shock producing de-industrialization, as in job losses and pay cuts, in tandem with higher consumer power costs and often increased food expenses due to climate change reducing agricultural output. And that’s before getting to reductions in social safety nets due to falling tax revenues (see Russia sanctions blowback), turbo-charged by commitments to militarize even as the US trade wars are adding to economic damage.

In the US, while power costs are not dire (although AI is increasing electricity bills and more is in the offing), food, housing, and medical expenses are high and generally rising. And tariff costs are kicking in as many producers have exhausted inventories stockpiled before Liberation Day. A new Financial Times story confirms that the young, traditionally the most employable, are hardest hit:

Income growth in the US has slowed to a near-decade low, with young workers especially hard hit, in a sign American consumers are struggling with a weakening labour market and persistent inflation.

Real income growth for workers aged between 25 and 54 this year dropped to its slowest pace — excluding periods of pandemic volatility — since the 2010s when the 2008 financial crisis led to high levels of unemployment, according to new research by the JPMorgan Chase Institute.

The slowdown is the latest sign of an increasingly distressed US labour market, and comes as Federal Reserve officials are expected to cut rates on Wednesday.

George Eckerd, co-author of the report, told the Financial Times: “We’re looking at a level of year-on-year growth that’s actually similar to [the 2010s] when the labour market was a lot weaker and the unemployment rate was higher.”

“Inflation is eating into otherwise decent levels of pay gains,” he added.

Keep in mind that the measure of inflation impacts the real income computation. Most experts consider inflation to be understated; measurements have been repeatedly jiggered to produce lower results so as to reduce the disbursements for cost-of-living adjustments, as well as for political purposes. So a higher inflation figure would lower the level of real income, and probably push the claimed growth into contraction, consistent with the lived experience of most Americans.
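To see how sensitive the real income figure is to the inflation measure, here is a minimal sketch of the standard deflation arithmetic. The function name and all the percentages are hypothetical, picked only to illustrate the point; they are not taken from the JPMorgan Chase Institute report.

```python
def real_growth(nominal_growth: float, inflation: float) -> float:
    """Real growth implied by nominal growth and inflation (both as decimals)."""
    return (1 + nominal_growth) / (1 + inflation) - 1


# Hypothetical inputs for illustration only.
nominal = 0.040             # assumed 4.0% nominal income growth
reported_inflation = 0.030  # assumed 3.0% reported inflation
higher_inflation = 0.045    # assumed 4.5% "true" inflation

print(f"{real_growth(nominal, reported_inflation):+.2%}")  # +0.97%
print(f"{real_growth(nominal, higher_inflation):+.2%}")    # -0.48%
```

With these assumed inputs, an inflation reading only a point and a half higher is enough to turn modest real growth into a real decline.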

There are big implications if the US middle class suffers a big leg down in its size and health. For starters, many, particularly the young, took on student debt to go to college and get a “good” job. But employers are now playing musical chairs, except they suddenly removed all the seats save one. What happens when even more fall behind on payments and are hit with penalty interest rates?

And even putting aside the pernicious effect of student debt, young adults with low and erratic incomes means more living with parents, less household formation (econ speak for getting married and having kids), less home buying.

The much-lowered value of a college degree will blow back to universities in terms of less competition for admission, save at the very top schools, and likely lower enrollment overall. If more parents are telling their kids to become plumbers or painting contractors or HVAC techs, that will also somewhat unwind the Elizabeth Warren “two income trap” phenomenon of parents bidding up housing in school districts seen as being advantaged in college admissions terms.

In the modern era, in societies that do not have religions that justify yawning class/income differences, a combination of rising income levels, the perception of elite competence, which includes at least some concern for the welfare of the lower orders, and class mobility have been the bulwark of legitimacy. All these pillars are crumbling in the US.

In the Breaking Points segment above, Saagar Enjeti suggested that the AI revolution, if it lives up to its hype, could foment an actual revolution by producing two conditions considered to be key to the Russian revolution: declining living standards and elite overproduction.

Based on the flaccidness of the No Kings protests, which had significant middle/upper middle class representation, I don’t see it. The impediments include atomization and weak social ties, and the perception that protests with muscle (as in those that entail a real risk of police thuggery in response) are the province of losers like union workers (and there is also perilously little knowledge of what early labor protestors endured to secure basic workplace protections). More practically, in our high surveillance/recordkeeping state, organizing and participating in demonstrations, or worse, being arrested, is sure to be a job prospect killer. So many of the newly-downtrodden quite rationally may conclude that they can’t afford to take a personal stand.

As for elite overproduction being a factor that will amplify protest, given the lack of competence of our elite incumbents, yours truly is not optimistic that those who put themselves on education tracks for those roles are any more able to manage their way out of a paper bag….or organize a revolt.

Again, to use the analogy of the early Industrial Revolution, the enclosure movement, which shifted formerly self-sufficient farmers to factories and cities, did produce William Blake’s “dark Satanic mills” and the eventual 1848 revolts across Europe. However, depending on which historian you believe, that upheaval was either ineffective or produced only some concessions from the ruling classes in terms of improved social protections. But it did not upend the existing order.

That is not to say some sort of dislocation of the existing order is not baked in. But I don’t see it as being something as Manichean or potentially decisive as a revolution. If not a full-bore Jackpot, it’s likely to have an anarchic flavor of a big increase in dysfunction, disorder, and desperation.

_____

1 This move was not altruistic, as some like to pretend. Ford found it hard to find and keep assembly line workers. The jobs still required training yet were tedious and restrictive. Ford found he had to pay his men well in order to have his factories operate on a high uptime basis. But his and other carmakers’ hiring (on similar employment terms) was on such a scale as to create a massive pool of blue collar elite that could afford to buy cars.

To be fair, the corporate hiring frenzy during the pandemic was too much. They could be cutting jobs and just putting AI into their announcements so Wall Street loves them more.

Also, if you look at FRED data, median household income has been flat since 2020. The big gains were in the last Trump admin and the end of the Obama administration. So at the median it’s been no gain for about five years.

In IT I think this is part of the story, and may even be most of it. The factors seem to be:

– reversion to the mean after the pandemic hiring.

– responding to a general slowdown in the economy.

– the collapse of the VC ‘app’ economy.

AI is in the mix, but IT is a hype driven industry. There’s always some stupidity complicating hiring.

AI seems more like an excuse for lowering the cost of wages, not so much the head count:

“Amazon perhaps faces the most scrutiny. US Citizenship and Immigration Services data showed that Amazon sponsored the most H-1B visas in 2024 at 14,000, compared to other criticized firms like Microsoft and Meta, which each sponsored 5,000, The Wall Street Journal reported. Senators alleged that Amazon blamed layoffs of ‘tens of thousands’ on the ‘adoption of generative AI tools,’ then hired more than 10,000 foreign H-1B employees in 2025.”

https://arstechnica.com/tech-policy/2025/09/amazon-blamed-ai-for-layoffs-then-hired-cheap-h1-b-workers-senators-allege/

If you see a recession coming, you can blame AI for the layoffs, and look like a successful innovator rather than a struggling business.

There are actually two AI technologies in play, and while they’re related, they have important differences. One is LLMs/generative models (same thing essentially), the other is Machine Learning. While Machine Learning has been massively over hyped, it is still very useful and has many practical purposes. China is investing heavily in this, and its strengths are in control engineering such as industrial processes, logistics and complex engineering. It has limitations (self-driving cars are probably impossible), is not intelligent, requires a ton of training, but used appropriately is very powerful.

LLMs are really just smart text complete. They’re very good at spotting patterns, and then creating facsimiles of those patterns (forgeries essentially). But they’re limited by their training data (which by necessity must be incomplete), complexity (which means above a certain point they require huge amounts of energy and time, for diminishing returns – and there’s not much to be done about that) and the accuracy of those training them (underpaid graduates in the Philippines and Eastern Europe – best I can tell).

This makes LLMs good for repetitive office jobs, where accuracy isn’t critical, the outputs don’t vary significantly and where the cost of the work justifies a couple of nuclear power stations. That stuff exists (marketing, a lot of low level translation stuff), but it’s a comparatively small market.

For programming, even if this tech works (and it doesn’t really), the cost of the tools for a single developer is going to be more than that developer’s salary, for output that’s essentially similar to a mediocre intern’s, and you still need that developer to supervise. The economics just don’t add up once the VC subsidies disappear. This makes Uber look sane.

Following up on this, a couple of additional points

> Even with these signs of more and more dogs rejecting the AI dogfood, the C Suite is still heavily populated with true believers.

I think it’s always important to remember that many members of the C Suite are not terribly bright and have only a limited understanding of what is happening on the shop floor. They also tend to be terrible with technology – even CIOs, at least in my experience, do not actually know much about IT. They will go to conferences aimed at CIOs, listen to podcasts and read the media selling stuff to them. And then cravenly try to do what everyone else is doing, so even if they fail (and the majority of IT projects fail), they can point to ‘industry trends’. When it comes to technology, the corporate class are sheep. Nobody wants to be the odd guy out.

Senior execs, even at places like Amazon, tend to be bad at identifying which jobs matter. For years corporate America has been dreaming of eliminating their IT department, and there have been many failed attempts. The original outsourcing boom of the 90s (which mostly failed) was an attempt to do this. Developers are expensive, tend to fail more than they succeed (the IT failure rate should be a scandal) and are, to the MBA class, inscrutable in ways that accountants or sales people are not.

When it comes to AI, I think its sweet spot is ‘bullshit jobs’. As someone who’s been marketing adjacent for much of their career, I’ve always been struck by how this is essentially a fact-free zone. Does marketing work? Nobody knows. How would you measure that? Even if AI made marketing worse, would it matter? And I’m sure there are other jobs with lots of unnecessary work that could be automated easily enough, even if the results were garbage (a lot of corporate translation work has always been very low quality, to give an example).

Where it will do a lot of damage is in areas where those making the decisions (the c-suite) do not actually understand the work that is being done. This is already happening in IT (the next Y2K will be cleaning up the slop being generated by AI tools), and I’m sure there are other areas such as accounting where all kinds of errors are creeping in without anyone realizing. In addition, as people get used to AI (and I’ve observed this directly), they stop checking the work. I foresee a lot of corporate disasters over the next few years caused by AI slop due to cost cutting.

I also foresee some interesting conversations in the next few years as companies are forced to pay the true cost for AI tools…

re: the C-suite, I agree 100%. Upper management at my company talks about AI so much that sometimes it seems like they are glitching. But you can tell that above a certain level they really don’t know what they are talking about. But my company is owned by one of the biggest private equity companies, who in turn are heavily invested in AI companies, data centers, and the power generation to run them. So senior execs at my company are in turn just being pummeled at every turn by our PE overlords with the AI directive. “No one gets fired for buying AI” is the new “no one gets fired for buying IBM” (for now).

I hadn’t considered the PE angle, but you’re absolutely right.

When this implodes, we’re going to see the mother of all hangovers as the entire corporate class realize they’ve blown a decade’s worth of investment capital on a chimera.

Heh, but it ain’t their money. I’ll be gone, you’ll be gone.

Wouldn’t it be nice to use that giant hand to squeeze Bezos’s head while asking him nicely to not fire thousands of employees?

Just spitballing here

I’m a specialist in measurable forms of marketing (direct mail, email, websites) who just got drummed out of his career after 40 years by a contracting employer with a bullsh*tter as its marketing chief.

Yes, marketing is measurable. It’s actually quite simple in principle: You measure what happens because of your campaign against a baseline of what happened without it, or what happened versus an alternative. Problem is, many of the best-paid people in marketing have fat paychecks based on pretending that it isn’t. And their clients play along, for reasons as simple as not knowing any better, as human as fearing they’ll be the next ones fired if they dissent, and as vain as finding TV shoots a glamorous experience.
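For anyone who wants the principle above spelled out, here is a minimal sketch of a holdout comparison: one group gets the campaign, a comparable group does not, and the difference in response rates is the campaign's effect. The function name and the numbers are hypothetical, and a real test would also need random assignment and a significance check, which this sketch leaves out.

```python
def campaign_lift(treated_orders: int, treated_size: int,
                  control_orders: int, control_size: int) -> tuple[float, float]:
    """Return (absolute lift, relative lift) of a campaign versus a holdout baseline."""
    if treated_size <= 0 or control_size <= 0:
        raise ValueError("group sizes must be positive")
    treated_rate = treated_orders / treated_size
    control_rate = control_orders / control_size
    if control_rate == 0:
        raise ValueError("baseline rate is zero; relative lift is undefined")
    absolute = treated_rate - control_rate
    return absolute, absolute / control_rate


# Hypothetical direct mail test: 10,000 people mailed, 10,000 held out.
absolute, relative = campaign_lift(250, 10_000, 200, 10_000)
print(f"absolute lift: {absolute:.2%}")   # 0.50%
print(f"relative lift: {relative:.2%}")   # 25.00%
print(f"incremental orders: {absolute * 10_000:.0f}")  # 50
```

The incremental orders, not the raw response count, are what should be set against the cost of the campaign.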

As for CEOs, their reaction is the same as it was to the gutting of internal IT or customer service. It doesn’t affect them personally, the outsource vendor makes a really seductive-sounding presentation on how it cuts their cost and goes straight to the bottom line that pegs the CEO’s pay, and he gleefully signs off on it. The resulting tech support/customer experience/marketing is ineffective and awful, but the quarterly report looks great and the CEO doesn’t care because he’ll cash out before the company craters.

I’ve told friends that my marketing skills make me feel like a Latin scholar. I have all this knowledge that would still work, but in an era when no one else knows or cares how to hear it.

Your observations are spot on. I started programming and writing documentation (and, sigh, managing programmers) back in 1977 at a large high tone university. I didn’t have a degree in IT, but at the time the ambient attitude among the experienced programmers was that “if you need to study this stuff, you’re probably not very good.” And as more IT grads came along things got worse and worse, i.e., the quality of work I could expect from the people I was supervising just kept deteriorating.

There were two major factors to which I attributed this deterioration.

One was that academia took over the field and then produced a generation of people whose approach to career success was to appear clever, or “ingenious”, and never put in the time to sweat all the details that determine the effectiveness of any piece of software. This became more and more true as the crucial components of quality control were increasingly removed from IT-experienced senior programmers and taken over by non-IT supervisory people or, if you will, the customers. During the 80s I saw much of the programming industry as a kind of hollow jobs program for largely unqualified people.

The second factor was the eagerness of non-IT senior executives to demand a larger role in how IT was to be implemented. And these people were red meat for the hordes of software and hardware sales crews who descended upon them. I recall telling a highly placed young and eager assistant comptroller that he didn’t need to spend $250K on a new computer whose purchase he’d advocated since what he wanted to get done was an essentially trivial exercise using some basic software already in place. The most shocking part of that conversation was that he hadn’t realized that the old IBM MVS system could actually do arithmetic. I kid you not, and such episodes were legion.

AI could be used to accomplish many useful things, but in the hands of decision makers who lack a deep understanding of its horizons and limitations, the prospects are dubious.

A few days ago I read a post (https://scienceforeveryone.science/statistics-in-the-era-of-ai/) by someone who used a higher level of ChatGPT to clean and analyze a data set that had been giving him trouble, checking at each step. Sounded very useful.

I have seen other descriptions of using these systems in software development as assistants.

They seem to ask “ChatGPT” to do something and check it.

(i wonder if you can use these things to do code reviews?)

A couple of problems:

1. The self-driving problem: The more often the system works correctly, the less likely you will detect when it does not.

2. How do you know that the system did not sneak a back door into your database system while it was correctly doing what you told it to do? (An old problem, going back at least to one of the Unix Gurus.)

there is still a strong belief in what remains of the american middle and upper-middle class that trumpismo (including AI, crypto, ICE, the whole sordid package) is simply a bizarre aberration and that “soon” things will snap back to the neoliberal status quo of our youth. nobody’s gonna risk everything on mass action if they think the sun will be back out in ’28, and even if they broadly sympathize with the cause they’d rather other folks take the risk. it should also go without saying that even if power was found lying in the street, nobody on the soi-disant american left would pick it up.

this is a good point. you can really see this in every candidate the dems are floating for 2028: they are not even trying to adjust to this new landscape. i think more likely, we will get more institutionally destructive maga type people in power. maybe with the occasional switch to dems in power, but they fundamentally lack the ability to reverse any of the changes made. it’s just going to be a slow nonlinear decline until something breaks. when things will break enough for mass action is the question.

i sound like a bit of a doomer here, but i don’t mean to be hopeless. acquisition of enough power to change things is a slow and arduous process, realistically it will take time to organize and build alternative structures. the best thing to do for now imo is to quietly build alternative structures so when collapse gets bad, there is a decent alternative lined up in place. and minimize damage caused. imo.

the problem, of course, is that the reactionary right has the church. labor used to have the union hall as a counterweight, and many mainline churches were (and are) decently liberal but with the rise of evangelical politics that door has mostly shut. folks these days are atomized and have zero mentorship in how to lead a movement. the soviet russian rank and file were the vanguard of revolution; maybe if troops here don’t get paid for enough months they’ll wise up. but i doubt it.

For LLMs, I’m going with yes. This is class warfare and the ego-mad fever dream of what Ed Zitron calls business idiots.

It won’t just be a gutting of middle class jobs, the executives will be going as well. Especially since the executive role is symbolic anyway, perhaps the most non-essential of anyone in the org – more so given there are very few real leaders anymore who drive success with charisma and contribution. Their role is merely to be the public or legal face of the org, the signature, the talking head, but since when was a CEO legally liable or responsible for anything? It has become an obsolete legal construct. And the BBC has with their AI generated news anchor shown what corporations can do with the C-suite, have they not?

I had a prof once who, by way of introduction to programming languages, used the peanut butter and jam sandwich as an example. There is a repeated pattern to the making of the sandwich, and programming is all about capturing to a logic language that repeated pattern, which can then be interfaced with the real world in some way. This was not said, nor intended by the prof, but think about this – any work which is repetitive in any way can be replaced with an algorithm. Therefore, even without AI or LLM, most modern roles being highly repetitive in nature could be replaced with an algorithm.

But as mister Musk has learned, humans are highly adaptive and can handle unexpected outcomes better than AI.

Musk, he of the aforementioned c-suite?

A little more low-tech anecdote: did rounds at the nearby tech/industrial park, full of the US equivalent of Mittelstand.

Only 2 firms (say 16%) had receptionists. everyone else had locked doors and you were met at the door by your party.

…which makes utilitarian sense, but still culturally jarring from being used to an environment in which every self-respecting firm had their own receptionist that greeted you when you exited the elevator. lmao.

There has been a generational change in what constitutes an “essential” employee, and even the weak-sauce LLM-driven AI is going to accelerate that change.

After listening to an interview with Sam Corcos the other day, I’m getting the feeling that the Tech Bros. have decided that their ‘Move fast and break things.’ method is ready for deployment, (Outside of their ‘lane’ so to speak.)

I think that’s a dangerous notion.

In the wider world, there are all sorts of dangerous pitfalls in breaking things you don’t understand how to fix, or things you don’t have a replacement for.

Sam Corcos, Trump’s Chief Technology Officer for Treasury, left me with the distinct impression that the ultimate (if unstated) intent of the DOGE program, besides making Peter Thiel the anti-christ, is to take control of the government’s procurement system as a whole, in the same manner that they took over USAID’s.

After chasing the current population of hogs away from that trough, they intend to put ‘procurement’ under much tighter control, and in my opinion, that means a simple re-direct of all that money into a different set of pockets.

The trouble as I see it, is a lot of their plan seems to include ‘Hope,‘ the hope that they can clean up the mess that not knowing enough about the stuff they are about to break is going to cause.

Hope is not a tactic…. and all that.

Which brings me to the massive recent lay-offs by big tech firms;

What the he*l were all those tens of thousands of people doing before they got fired?

I think the Tech Bros. are ‘Hoping‘ to demonstrate the immense productivity gains possible with AI.

This demonstration could be likened to a monster raise in the betting on a poker hand, IOW, what if the big bet, is a bluff. ‘Demonstrate‘ to the investor class, and all your potential customers that AI is already generating gigantic increases in productivity in your businesses, (look at the layoffs) it will do it for you too.

Did the Tech Bros. really replace tens of thousands of important employees with AI?

It’s hard for me to think they had tens of thousands of un-important people on their payroll?

It’s also hard for me to think that whatever all those people were doing, is now being done really well by AI.

“Did the Tech Bros. really replace tens of thousands of important employees with AI?”

In short, no. See my comment above with reference to this article:

https://arstechnica.com/tech-policy/2025/09/amazon-blamed-ai-for-layoffs-then-hired-cheap-h1-b-workers-senators-allege/

Well of course they are.

Thank you.

The thing is, it’s not hope for them. They are simply ignorant of systemic effects because the position they occupy in the Neoliberal dispensation has, to date, never been charged for its costs; it’s always unloaded those costs on “society” which, per Margaret Thatcher, they don’t believe exists anyway.

Society doesn’t exist, climate change is a hoax, money is a digital token. Judge them by what they do, but bear in mind their utter disconnection from reality. I can tell you that by the time I was consulting for Google in 2016 management had no idea what most of the people there were doing, but their margins were so fat no one yet cared.

The sooner they break everything, the sooner we get to start picking up the pieces. Don’t get me wrong, I’m an ameliorist not an accelerationist, but the foundation has been completely devoured by the Neoliberal termites and the sooner the real fall starts the more will be left to catch it.

RE: It’s hard for me to think they had tens of thousands of un-important people on their payroll

In a sane world, they wouldn’t. But we live in the stupid timeline. Give Graeber’s Bulls*it Jobs a read sometime. He describes how in the corporate world, the more minions a manager has, the more important that manager is. So there is incentive to have a staff full of people who don’t do anything. I may have even witnessed this myself….

This was on full display at Google in 2016-18 when I was in and out of their NY campus.

Shortly thereafter they started firing people in droves by division.

Big Fan of the antithesis:

Move slow; Make things

Comment of the day for me.

Not heard that before but I love it.

I will share widely

Exactly!

Thank you.

“What the he*l were all those tens of thousands of people doing before they got fired?”

I don’t know for sure – but I can guess. I left teaching two and a half years ago, after teaching thirty some odd years in various schools in four countries. The biggest, most frustrating change over the years was the ridiculous and continually growing amount of paperwork and documentation that was required – pointless paperwork that yielded no useful information that a teacher could use to help students learn. Because it wasn’t useful and didn’t affect standardised test scores, every year we had to spend after school hours – when we could be doing planning, preparing materials, marking paragraphs and essays – reinventing the wheel aka a different documentation process. I imagine all those middle management positions that you mentioned required reams and reams of statistical evidence showing what their company was doing to enhance profits – and whether or not that strategy was or was not worthwhile. Before anyone says that this boring, repetitive, dead end information gathering and analysis is what AI will do, all the data has to be meticulously gathered in a specific, time-inefficient way (often during prime learning hours) and then later uploaded into the computer. If my principals were anything to go by, there would also be tons and tons of meetings.

I’m not necessarily well informed about AI, but I think it is 99% hype. What they call AI is not ‘intelligence’ but is merely super-computing.

Stoking fear about AI taking over jobs serves to make workers (even if their jobs are actually perfectly safe from AI) more compliant and more exploitable.

I’ve seen videos of how AI may take over the world and cause human extinction. There was a click bait story about how AI tried to murder a human when it believed a human was trying to deactivate it.

The concept of an all powerful AI being is ludicrous. AI is a machine, and machines require electric components and electricity to operate. The idea that somehow AI is going to defend itself, repair itself and ensure a constant power supply to itself to prevent being shut down is fantasy.

AI is never going to be invincible to having its very life shut off by a human cutting its circuits or otherwise immobilizing it as there are 1001 ways to kill a machine, even with bare hands.

So no, I’m not buying into the fear and hype.

I think this is the most likely impact. Threaten workers with pay cuts, more workload and if they don’t like it, use the fear of AI as a stick.

There is still a lot of butt hurt over the short-lived, sporadic displays of worker bargaining power that happened during the beginning of Covid.

Someone on Bloomberg suggested that AI, not tight money, is the cause of new structural unemployment.

In my under grad we used slide rules. The calculator and PC did not replace many engineers, but slide rule engineers have a better feel for the math under the solution.

To give positive ROI for the trillions or so in AI bubble building, much of it bleeding edge rapidly evolving HW and SW they will have to replace a huge sum of salary.

Some of these headlines concern the sales pitch.

Long way to go to see an exorbitantly trained LLM compete with a human.

AI absolutely will decimate white collar jobs. And AI absolutely isn’t ready to do that today. Making an analogy to relational databases, a concept generated by IBM in the 1970s, it took a decade from the creation of the idea to a working model by Oracle. It then took another decade for the world to adopt them and create valuable, usable products. LLMs are a result of the idea of the “transformer” (the T in ChatGPT) invented by a small team at Google in 2017. Transformers are what made LLMs actually seem to produce valuable information suddenly. And they really do for a lot of use cases. Over time, companies will move from LLMs to much more specialized models for more specific use cases, and an array of products will get created based on those specialized models and needs. Like relational databases, this will take a couple of decades. Once created though, the decimation process will proceed fairly quickly. I expect to hear a lot of RIF announcements every year for a while. Then I expect a massive cascade, resulting in some form of political chaos.

They may produce valuable information. In practice what LLMs often create is the illusion of valuable information.

You nailed it – It is the “illusion of valuable information.” In schools, it is the illusion of learning.

Did a quick search on “ai generated entity relationship diagram”. Got plenty of results. No idea how good any of these are.

I volunteer on an open-source software project. Only a handful of motivated devs (working in C++). We’ve been using CoPilot for code reviews and lately added Claude. IMO both add value, but you can’t just blindly accept the recommendations. Claude seems to me a bit more polished in that it does some ego-stroking of the dev and provides some cueing like “green checkmarks” to bolster its approval.

They are kind of impressive in how they can go from C++, to cmake files, to language translation, to doxygen which would be hard for an individual dev to do.

Over time I think their use will improve the code base (much of the core was built via hacker culture without much maintainability thought).

We may have reached peak AI, at least in terms of growth of use of the seemingly dominant type, large language models, which are conflated with “generative AI” (sticklers are encouraged to clear up fine points in comments).

As someone on the inside let me explain this more in layman’s terms.

There are an immense number of models that can be used to perform generative AI, ML, and adjacent tasks. They come in tiny, massive, open source, commercial, free but closed source, and many other variants. They are capable of a whole range of tasks but generally speaking they take some input (an image, a natural-language string of text, links to additional files, video data, etc) and do something autonomously with it to create an output (create a new image, categorize objects in video and provide in list/tag form, generate an explanation based on the input and what the model was trained on, etc). The big leap in capabilities from earlier iterations of this type of thing comes from the flexibility in taking the input in natural human language instead of using a formally structured query language.

What takes all the focus in the current debate are the current generation of US frontier models, which are the biggest and ‘most capable’ when it comes to general use tasks. These are the OpenAI GPT series and Anthropic’s Claude series. These models were likely trained on the contents of the majority of the public internet and a lot of copyrighted or non-copyrighted but previously assumed to be protected data, like the uploads on Youtube or torrents of books or pornography. The training data of these models is not made public but since both companies have had to pay out for copyright infringement it can be assumed they did not ask before using the data to train their models which they turn around and sell usage of for revenue; their gamble apparently was that the sort of ‘fair use’ that a human might do (read a book, watch a video) is the same as training an LLM. The training data was required because the idea is these models are to be used for anything the end users might want (“It explains! It codes! It categorizes! It can even translate ancient Akkadian documents!”).

The idea behind these massive general purpose models apparently was, if it is big enough and good enough fast enough, we can corner the market before other countries get there. And the revenue model was, if it’s powerful enough for a lot of general use cases, APIs can be used to build out other applications downstream. Those applications would pay API usage fees and their products would be dependent.

What has happened instead is that the downstream application layers are building in model-agnosticism for redundancy and cost control reasons. There are so many other options! And what has been discovered is in a lot of cases the general use frontier models aren’t as good as something smaller and hyper-focused on a specific use case. Right now what is happening is for viable use cases, new models – smaller, faster, cheaper, more focused on a specific niche – are being developed and taking over usage from the GPTs and Claudes.
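To make the model-agnostic point concrete, here is a minimal sketch of what such a layer might look like. Everything in it is an assumption for illustration: the `Backend` type, the `route` function, the backend names and the per-call costs are all made up, and the two "models" are plain stand-in functions rather than real API clients.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Backend:
    name: str                        # hypothetical label, not a real product
    cost_per_call: float             # made-up number, for ordering only
    complete: Callable[[str], str]   # anything that maps a prompt to text


def route(prompt: str, backends: List[Backend]) -> Tuple[str, str]:
    """Try backends from cheapest to most expensive; fall through on failure."""
    errors = []
    for backend in sorted(backends, key=lambda b: b.cost_per_call):
        try:
            return backend.name, backend.complete(prompt)
        except Exception as exc:  # any failure just means "try the next one"
            errors.append(f"{backend.name}: {exc}")
    raise RuntimeError("all backends failed: " + "; ".join(errors))


# Stand-ins for real model clients (assumptions, not real APIs).
def small_niche_model(prompt: str) -> str:
    raise TimeoutError("capacity exhausted")


def big_frontier_model(prompt: str) -> str:
    return f"[frontier] {prompt}"


BACKENDS = [
    Backend("frontier", 1.00, big_frontier_model),
    Backend("niche", 0.05, small_niche_model),
]

if __name__ == "__main__":
    # The cheap model is tried first; it fails here, so the call falls back.
    print(route("summarize this ticket", BACKENDS))
```

The application only ever calls `route`, so swapping a frontier model for a smaller one is a change to the `BACKENDS` list rather than to the product, which is the redundancy and cost control described above.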

“Generative AI” is an offshoot of the above. It is a specific type of use case that leverages output that is created by a model. Like with other use cases, models very specific to a use case are being developed because the frontier models are either too expensive or their training data makes the output of questionable value to a company looking to use the output in their own products. Right now the legal landscape on this is murky. Works that are ‘fully AI generated’ can’t hold copyright and if it can be proven something was generated by a company using an LLM that used fraudulently-obtained training data, the company can be sued and held liable. This is holding up the next phase of the use cases, which are in VFX, animation and video editing and probably music (the first one was software development/coding assistants, the second one is also going to roll out as plugins/extensions to existing editing tooling – this is why right now all those software are pushing unwanted extensions/features of this type – they’re market testing while they do private development). I suspect some deals have been made to get the legal situation ‘resolved’ given the huge amount of activity I’ve been seeing in my work around use cases in that area and the fact that OpenAI is publicly talking about music generation models.

Yes, this has been my take on it, although I’m definitely farther from daily usage than you are. But it seems like smaller, domain specific models, are going to be the future. And I think that calls into question the massive buildout of capacity, which seems like it’s unnecessary. I can actually generate pretty-good single speaker transcripts from video with a locally run model on a CPU (non-AI ready), about 25 minutes for 2.5 hours of video. I’m kind of amazed.

My larger concern is the entirety of the world is getting blazed by endless AI-generated garbage, on an order of magnitude worse than the spam wars of the early 2000s. What OpenAI is trying to do, tie in LLMs with all kinds of low/no friction transactions and in-app advertising, seems like a hellscape of a world to live in, if it becomes unescapable.

And I think that calls into question the massive buildout of capacity, which seems like it’s unnecessary.

Something to consider about the build out is that in a lot of cases a particular company is restricted to a specific data center availability zone because of location, prior agreements, other workloads running concurrently, or security requirements. (A big one is security certifications for something like federal or military projects in the US, or GDPR country-specific data requirements in the EU). This means that while the buildout overall might be excessive there will be local restrictions and shortages that mask it. The availability in the data centers around northern Virginia and London, for example, is currently very tight on the cheaper GPU capacity because of proximity to DC gov and London financial services and the associated security certifications required. Even these industries, which have more money than God, balk at paying several hundred thousand a year for an 8-GPU H200 instance running full time. So L40S capacity is exceeded first, then they move up to H100 and beyond as those become unavailable.

My larger concern is the entirety of the world is getting blazed by endless AI-generated garbage, on an order of magnitude worse than the spam wars of the early 2000s.

The next generation of use cases are particularly terrifying on that front because there is a lot of text-to-speech and generative voice as a service being built out. Ostensibly for things like transcription services or the anticipated wave of gen-video that is usable for commercial and advertising needs (and the associated need for gen-audio/voices/speech with voice cloning), all I can think when I look at the API spec is that I need to warn my parents not to believe any sudden urgent calls of any type claiming to be me or any of their friends begging for them to send money to help them with a crisis. Scam spam at scale, as a service for a mere $22/mo.

That’s what I was thinking when I couldn’t sleep the other night. Watch out. If you have any digital presence at all, a few minutes of work and someone can extort you for very real looking adult activities with a minor. Only needs to fool some of the people. And BTC makes it easier than ever. Sigh.

I came across a YT post on SIM farms. The Secret Service busted a large SIM farm.

Scary indeed.

https://youtu.be/FIgdXah-VuE?si=6VRTA_p17sKBCcu3

Thank you.

I’m still giving some weight to the idea that much of this desire is driven by what has happened with workplace relations (and other effects) since the beginning of the Covid era. Look at the timing. LLMs, the “AI” moniker, etc. had all been around before 2020.

I concur. I would argue though that the most likely outcome is the complete collapse of the social contract, producing what I can only imagine would be a Hobbesian outcome, which, given the amount of guns around, perhaps will be represented in rampant banditry and things of that nature. Maybe a better example would be 90s Russia, even if I suspect Russia was much better prepared for its collapse than the US is now. Though her work isn’t particularly scholarly, Svetlana Alexievich offered some examples in The Last of the Soviets which were not pretty.

“For instance, tarting in the 1980s, big companies…”

I think you meant to write, “starting”.

Then again……

Here is an example of how Agentic AI is being sold:

https://www.servicenow.com/products/ai-agents.html

Here is a handy calculator for economic value:

https://www.servicenow.com/products/ai-agents/roi-calculator.html/

I have no relationship with the vendor in this example, this is the simplest example of what is going on. Also, I have no idea how realistic this whole thing is, just sharing what is being passed around in these “efficiency” discussions.

ServiceNow is a steaming pile of garbage. It’s enterprise software lol

As the resident AI whisperer, on Agents:

“Agentic AI” means multi-step workflows using an LLM to power each step of the action. It can be multiple LLMs, like a specialized model just for finding the exact right location in a very large image or text file to insert something generated by a different model, like a block of code or a mask for editing frames in a video. The agent is ‘programmed’ depending on the framework/tool used and deployment mesh. For example, in coding assistants, agents can just be a multi-step chat, or they can run in the background based on guidelines saved in the workspace. They can trigger crazy unexpected LLM API charges; horror stories about unexpected $400 bills from walking away from the computer for a few hours are usually from a poorly-designed agent.
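One common guard against that kind of runaway bill is a hard spending cap checked before every model call. A minimal sketch, assuming a flat, made-up cost per step counted in cents; `SpendCap`, `BudgetExceeded` and `run_agent` are hypothetical names, not part of any agent framework:

```python
from typing import List


class BudgetExceeded(RuntimeError):
    """Raised when the next call would push spending past the cap."""


class SpendCap:
    def __init__(self, limit_cents: int) -> None:
        self.limit_cents = limit_cents
        self.spent_cents = 0

    def charge(self, cost_cents: int) -> None:
        # Refuse the charge before the money is spent, not after.
        if self.spent_cents + cost_cents > self.limit_cents:
            raise BudgetExceeded(
                f"cap of {self.limit_cents} cents would be exceeded"
            )
        self.spent_cents += cost_cents


def run_agent(steps: List[str], cap: SpendCap, cost_per_step_cents: int) -> List[str]:
    """Run steps in order until they finish or the cap is hit.

    Returns the names of the steps that actually ran.
    """
    done = []
    for step in steps:
        try:
            cap.charge(cost_per_step_cents)
        except BudgetExceeded:
            break  # stop the loop instead of running up the bill
        done.append(step)  # a real agent would call the model here
    return done


if __name__ == "__main__":
    cap = SpendCap(limit_cents=100)
    print(run_agent(["read", "search", "update", "summarize"], cap, 40))
    print(cap.spent_cents)
```

With a 100 cent cap and 40 cents per step, only the first two steps run and the agent stops itself, which is the behavior a poorly designed agent lacks.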

For example earlier today I was working on an agent to handle product ticket queues:

– step 1, read the ticket;

– step 2, search the existing items of work submitted by developers;

– step 3, update a status on anything changed, which would trigger a separate workflow elsewhere;

– step 4, review items in ‘completed’ state against original product spec;

– step 5, search outstanding work to be done relative to the product spec for the completed work item and gather a list of remaining tickets that still needed work;

– step 6, write a summary of current status and remaining work to be done on the product spec and pass it back to another system as an update.
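The six steps above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the actual agent: the `Ticket` type, the in-memory data, and `fake_llm` are all hypothetical, and only the final summary goes through the model stand-in, whereas a real agent would likely use a model call at several of the steps.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set


@dataclass
class Ticket:
    id: str
    spec_item: str   # which product-spec item this ticket implements
    status: str      # "open" or "completed"


def fake_llm(prompt: str) -> str:
    """Stand-in for a model call; a real agent would hit an LLM API here."""
    return "SUMMARY: " + prompt


def run_ticket_agent(
    ticket_id: str,
    tickets: Dict[str, Ticket],
    work_items: List[dict],
    spec: Set[str],
    llm: Callable[[str], str] = fake_llm,
) -> dict:
    # Step 1: read the ticket.
    ticket = tickets[ticket_id]

    # Step 2: search the work submitted by developers for this ticket.
    submitted = [w for w in work_items if w["ticket_id"] == ticket.id]

    # Step 3: update the status if anything changed. In a real system this
    # update would trigger a separate workflow elsewhere.
    changed = False
    if submitted and ticket.status != "completed":
        ticket.status = "completed"
        changed = True

    # Step 4: review completed items against the original product spec.
    reviewed = [
        t.id for t in tickets.values()
        if t.status == "completed" and t.spec_item in spec
    ]

    # Step 5: gather the tickets that still need work for this spec.
    remaining = [
        t.id for t in tickets.values()
        if t.status != "completed" and t.spec_item in spec
    ]

    # Step 6: write a status summary to pass back to another system.
    prompt = f"done={reviewed}; remaining={remaining}"
    return {
        "changed": changed,
        "reviewed": reviewed,
        "remaining": remaining,
        "summary": llm(prompt),
    }


if __name__ == "__main__":
    tickets = {
        "T1": Ticket("T1", "login", "open"),
        "T2": Ticket("T2", "search", "open"),
    }
    work = [{"ticket_id": "T1"}]
    print(run_ticket_agent("T1", tickets, work, {"login", "search"}))
```

Written out like this, it is easy to see why real developers say a script could do it: the workflow is a fixed pipeline, and the model mostly earns its place where free text has to be read or written.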

This agent is scheduled to run every hour and was requested so as to cut down on some admin work the product team is always behind on. Real developers will mutter this can be done with scripts and existing sprint dashboards, and they’re absolutely right. The difference is the ease in setup and maintenance since you don’t have to be a script maestro and have a million cron jobs running with access to a ton of internal services. The agents typically ship with a service mesh and a visual interface for programming them, one that takes instruction in natural language and lets you map out the workflow steps. They can also use Model Context Protocol servers, which are little programs that pass additional data/context back to the LLM as part of a prompt/step. MCP servers are often used to create connectors between various app services. Think pulling customer data from a CRM and summarizing a transcript from a customer call in a different system and the agent then drafts a customer status summary doc for an agent that is designed to act as a daily activity dashboard.

Agents are probably one of the things that will get brutalized when the OpenAI/Anthropic part of the AI bubble pops (which I think is what most people conflate with the ‘AI bubble’ and fwiw I think are hyper overvalued). Right now they’re extremely buzzy so they’re popping up everywhere. They can be used to do stuff like web search in addition to working with internal systems so they have a lot of the same negative network effects on the public web as bots. So far I haven’t seen a really great use case for them that isn’t specific to big organization internal processes. Maybe autonomous research?

We’ve been building one to maintain our product documentation based on our actual code internally at work – again the real software devs will huff and puff that docs from code has been around for years and they’re correct – again the difference here is scalability and ease of maintenance over time compared to the existing solutions. If ultimately they are only really useful for internal process automation they won’t need the massive expensive frontier models and cheaper models built into the service mesh will be developed instead. I can see them being useful in specific industry verticals as part of a full use case workflow (animation rigging, video editing, software QA validation). If they end up being useful on the public web, a better/improved standard for autonomous agent interaction with the public web will have to be developed. Already the internet is creaking under the load of the agent crawlers.

I like your closing sentence.

A naive question would be:

what are “agent crawlers” doing?

A second naive question would be:

what will “agent crawlers” do, considering that they rely on the meager commons and roadkill left of the information highway?

Thank you for the continuing education RJ. Your explanations are clear for this layman, and you often cover the parts of the AI ‘thing’ I don’t hear about.

Thanks.

But replacing deterministic APIs with this. How can you reliably test these agents? There be dragons there.

Using it for internal documentation, what could go wrong? It’ll miss that nuance.

You noted these are wasteful as well. Brute forcing web pages to get all stuff better done with a structured API. This is the promise of true no code. Gonna be a debacle instead.

For people who used to like Matt Taibbi, there seems to be a sharper knife available: ladies and gentlemen, Coffeezilla.

You never know how things will pan out but the future looks bleak. Globalism and nationalism are done and they can’t decide who is in charge. Democracies are failing and the dictators are going to hell as well. But on a bright note there are 10,000 nuclear weapons sitting in the dark. What could go wrong with this picture? Chaos breeds overreach and chaos but our tech nightmare has just begun. Have a nice day….

most of those weapons are most dangerous as landfill……..

and the landfill emerging is the most potent weapon

Fire enough workers, and eventually you run out of customers.

The Imperial elites are cashing in their chips along with the chips of their remaining workforce and the many overseers, and corporate squad, company, battalion, and brigade leaders. They will give a new meaning to the slogan “Army of One” and the ancient symbol of the ouroboros. There will be no rebirth or victory from this war on the last tokens of imperial economic power. Management by Private Equity Economics will transform the empire to “Toys R Us” ashes with none of the properties of phoenix ashes but promising a rebirth as a website and some shelves in stores within a store. It is past time for the Imperial elites to protect further revenue from words like “Democracy” and phrases like “of the people, by the people, and for the people” with Trademark or Copyright.

Sadly, unlike Rome there will be no cultivation of potherbs or grazing of swine and buffaloes in the Imperial forums, though a golden temple to Mammon will decay in the ocean waters that will swallow the Imperial capitol. And given their due, Mammon and Moloch are not jealous gods. The Imperial Military Industrial Complex will offer up as many of our young and those of our minions to pink mist to the glory of Moloch as it can … and to worship of Mammon as profits to the Military Industrial Complex. The Imperial ruins will offer new blood to the ancient myths of Atlantis.

For the rest of us, a cold cold Winter is coming.