By Professor of Economics, University of Siena, Full Professor, University of Pavia, and Post-doctoral researcher, University of Siena. Originally published at the Institute for New Economic Thinking website

The last decades have witnessed the introduction of national research evaluation systems in several European and non-European countries. These systems often rely on quantitative indicators, based on the number of papers published and citations received, to monitor and assess the research performance of a country’s academic staff.

The widespread use of these metrics for evaluation purposes has generated heated debate in the scientific community. Their advocates claim that quantitative measures are more objective than peer review, that they ensure the accountability of the scientific community toward taxpayers, and that they promote a more efficient allocation of public resources. The critics, on the other hand, argue that when indicators are used to evaluate performance, they cease to be simple measures. Instead they rapidly become targets, i.e., they start to change the behavior of the evaluated researchers, much as the so-called “Goodhart’s law” describes in relation to bank supervision.

The problem is that those changes are not always positive, nor transparent. For instance, if productivity is rewarded, publishing many articles becomes a target that can also be pursued with opportunistic strategies, for example by slicing up the same work into multiple publications. Analogously, if citations are rewarded, researchers may resort to strategic self-citations or join with colleagues in creating citation clubs, that is, informal structures in which members exchange citations to inflate their respective citation scores.

As it turns out, Italy in the years following the introduction of new research evaluation procedures in 2010 is a spectacular case in point. Since then, the Italian system has assigned a key role to bibliometric indicators in the hiring and promotion of professors. To achieve the National Scientific Habilitation (ASN), required to become an associate or full professor, a candidate’s work must reach definite “bibliometric thresholds,” calculated by the central Agency for the Evaluation of the University and Research (ANVUR). Only if a researcher reaches a minimum number of journal articles, a minimum number of citations, and a minimum h-index value (a composite indicator based on publications and citations) is she eligible to take the final step, evaluation by a committee of peers.

In a paper published in PLOS ONE, and featured by Nature, Science, and Le Monde, among others, we show that the launch of this research evaluation system triggered anomalous behavior, which we call “pathological inwardness.” After the university reform, Italian researchers started to artificially increase their indicators by strategically using citations.

This implies that the indicators used were not signaling what they were supposed to, with consequences for habilitations and hiring decisions. Less obvious consequences, concerning the content of the research projects pursued or abandoned, are even harder to assess.

In contrast with the influential Leiden Manifesto, our results represent a cautionary tale against the use of bibliometric indicators for evaluation purposes.

Inwardness

Given the difficulties of documenting the existence of strategic citation behaviors at a micro-level, we designed a new “inwardness indicator”, based on country self-citations. Country self-citations are a generalization of the traditional notion of self-citation, according to which a self-citation occurs when a citing publication and the cited one have at least one author in common. A country-level self-citation is defined considering the countries (geographic affiliation) of the authors of a citing publication and that of the authors of the cited papers. A country self-citation occurs whenever a citing publication and the cited one have at least one country in common.
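The definition above can be sketched in a few lines of Python. This is only an illustration of the rule as stated in the text; the function name and the use of two-letter country codes are assumptions made for the example, not the authors’ implementation.

```python
from typing import Iterable


def is_country_self_citation(
    citing_countries: Iterable[str],
    cited_countries: Iterable[str],
) -> bool:
    """Return True when the citing and the cited publication
    share at least one author-affiliation country."""
    # A non-empty intersection means at least one country in common.
    return bool(set(citing_countries) & set(cited_countries))


# Hypothetical examples: country codes chosen purely for illustration.
print(is_country_self_citation(["IT", "FR"], ["IT"]))        # shared country: IT
print(is_country_self_citation(["DE"], ["IT", "GB"]))        # no shared country
```

Note that under this rule an internationally co-authored paper counts as a country self-citation for every country it shares with the cited paper, which is why the degree of international collaboration matters for the indicator.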

The inwardness indicator is defined as the ratio between the total number of country self-citations and the total number of citations of that country. We called it “inwardness” because, in general, it measures what proportion of the knowledge produced in a country remains confined, i.e. is cited, within the borders of the country. Citations can be considered as information flows between publications. When the publications from one country are cited mainly by publications from the same country, it means that the information contained in those publications is being taken up disproportionately within that country. It reflects an inward turn of attention that is not shared outside of the country.
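As a rough sketch of this ratio, assume each citation is recorded as a pair of country sets (citing publication, cited publication). That record layout, the function name, and the toy data are assumptions for illustration; the study itself worked from aggregate figures retrieved from a bibliometric platform, not from code like this.

```python
from typing import Iterable, Optional, Set, Tuple

Citation = Tuple[Set[str], Set[str]]  # (citing countries, cited countries)


def inwardness(citations: Iterable[Citation], country: str) -> Optional[float]:
    """Share of the citations received by `country` that come from
    publications with at least one author in that same country."""
    total = 0
    self_citations = 0
    for citing, cited in citations:
        if country not in cited:
            continue  # not a citation received by this country
        total += 1
        if country in citing:
            self_citations += 1  # citing and cited share `country`
    if total == 0:
        return None  # indicator undefined without any citations
    return self_citations / total


# Toy data, invented for the example.
toy_citations = [
    ({"IT"}, {"IT"}),
    ({"IT", "FR"}, {"IT"}),
    ({"DE"}, {"IT"}),
    ({"US"}, {"IT", "US"}),
    ({"FR"}, {"FR"}),
]
print(inwardness(toy_citations, "IT"))  # 2 of 4 received citations -> 0.5
```

In the toy data, Italy receives four citations, two of which come from papers with an Italian author, so its inwardness is 0.5.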

From the point of view of citations, a country’s inwardness can be estimated as the sum of the “physiological” and the “pathological” quotas of country self-citations. The physiological quota consists of the country self-citations generated by country-based researchers as a normal byproduct of their research activity. It depends on the size of the country in terms of research production and on the degree of international collaboration, such as participation in multinational research teams. The pathological quota consists of the country self-citations generated by strategic activities of country-based researchers, that is, opportunistic self-citations and country-based citation clubs.

An anomalous rise in the inwardness of a country can result from an increase in either the physiological quota of self-citations or the pathological quota.

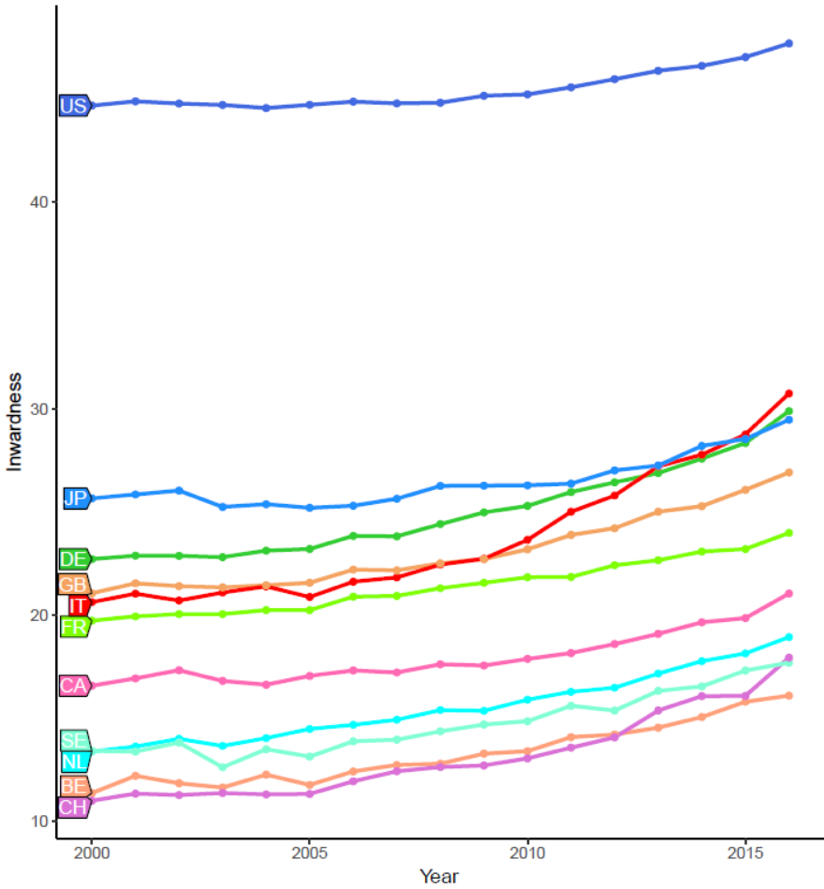

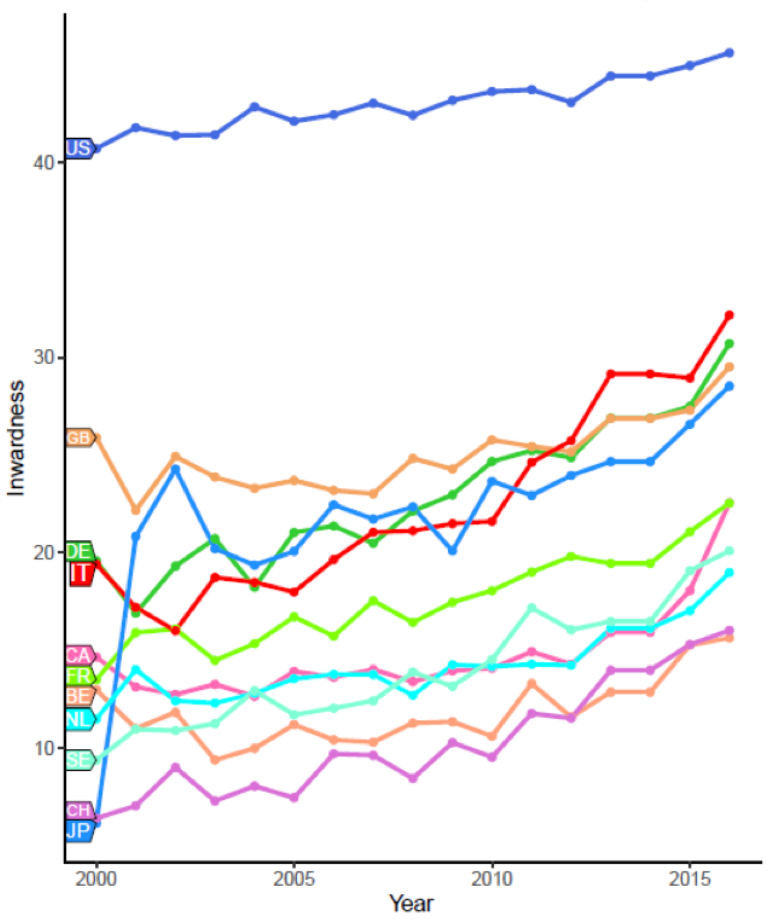

Based on these premises, we retrieved the data for calculating inwardness indicators for the G10 countries, including Italy’s traditional benchmark countries (France, Germany, United Kingdom), for the period 2000-2016. This way, we could compare the Italian trend not only before and after the introduction of the research evaluation system, but also against the trends of comparable countries. The data were queried from SciVal, an Elsevier-owned platform powered by Scopus data.

The Results

Our analysis revealed that, until 2009, all countries, including Italy, shared a rather similar inwardness profile. Even if the absolute values of the inwardness index differ, consistent with the countries’ sizes, their trends remained similarly stable, with a slight growth over time. The Italian trend is no exception until 2010, when it suddenly diverged. In the period 2008-2016, the increase in Italian inwardness amounted to 8.3 percentage points (p.p.), more than 4 p.p. above the average increase observed in G10 countries.

In 2000, at the beginning of the period, Italy had an inwardness measure of 20.6% and ranked sixth, just behind the UK. In 2016, at the end of the period, Italy had risen to second, with an inwardness of 30.7%. In the six years after 2010, Italy overtook the UK, Germany, and Japan to become the first European country, and the second in the G10 group, on this measure.
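For readers who want to check the arithmetic, the short sketch below separates a change in percentage points from a relative change, using only the two Italian values quoted above (20.6% in 2000 and 30.7% in 2016). The function names are illustrative. The 8.3 p.p. figure cited earlier refers to a different window (2008-2016) and cannot be reproduced from these two values.

```python
def percentage_point_change(start_pct: float, end_pct: float) -> float:
    """Absolute difference between two percentages, in percentage points."""
    return end_pct - start_pct


def relative_change(start_pct: float, end_pct: float) -> float:
    """Change expressed as a fraction of the starting value."""
    return (end_pct - start_pct) / start_pct


italy_2000 = 20.6  # inwardness in 2000, percent (from the text)
italy_2016 = 30.7  # inwardness in 2016, percent (from the text)

pp = percentage_point_change(italy_2000, italy_2016)
rel = relative_change(italy_2000, italy_2016)
print(f"{pp:.1f} p.p.")   # 10.1 p.p.
print(f"{rel:.1%}")       # 49.0%
```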

Interestingly, we found almost the same pattern in most of the scientific areas, as defined by Scopus Categories: after the reform, Italy stands out with the highest inwardness increase in 23 out of 27 fields.

A first, benevolent explanation of this anomalous Italian trend could be an increase in the physiological quota of country self-citations. For example, it could reflect a sudden rise, after 2009, in the degree of international collaboration of Italian scholars. As a matter of fact, no peculiar increase in Italian international collaboration can be spotted. On the contrary, at the end of the period considered, Italy was the European G10 country with the lowest rate of international collaboration. Another explanation for an increase in the physiological quota may be a narrowing of the scientific focus of Italian researchers, i.e. a dynamic of scientific specialization on topics mainly investigated in the national community, leading to a growth in country self-citations. Such a sudden change would have to be not only peculiar to Italy, but also so strong as to make Italian inwardness diverge from that of the other G10 countries. We have no direct evidence to falsify this explanation, but it appears very implausible, given the peculiarity, size, and, especially, timing of the phenomenon we observe.

The best explanation for the sudden increase in inwardness is a change in the pathological quota of the country self-citations. This means that there was a spectacular increase in authors’ self-citations and a rise in the number of citations exchanged within citation-clubs formed by Italian scholars. This reflects a strategy clearly aimed at boosting bibliometric indicators set by the governmental agency, ANVUR.

The beginning of the Italian anomaly almost coincides with the launch of the country’s research evaluation system. As we saw above, this system suddenly transformed the bibliometric indicators into crucial gateways to academic career improvements, at the same time leaving room for a strategic use of citations. The striking result is that the effects of the system have become visible at a national scale and in most of the scientific fields.

Figure 1. Inwardness of G10 countries (2000-2016). Source: Baccini, De Nicolao and Petrovich, PLOS ONE

Figure 2. Economics, econometrics and finance inwardness of G10 countries (2000-2016). Source: Baccini, De Nicolao and Petrovich, PLOS ONE

Three Lessons from the Italian Case

We believe there are three main lessons to learn from the Italian experience. First, our results support the idea that scientists respond quickly to the system of incentives they are exposed to. Thus, any policy aiming at introducing or modifying such a system should be designed and implemented very carefully. In particular, bibliometric indicators should not be considered neutral or unobtrusive measures. On the contrary, they actively interact with and quickly shape the behavior of the evaluated researchers, especially when they are decisive for key career steps.

Second, our results show that a “responsible use” of metrics is not enough to prevent the emergence of strategic behaviors. The influential Leiden Manifesto recommends the use of a “suite of indicators” instead of a single one as a way to prevent gaming and goal displacement. The Italian case shows that, even if the researchers, as recommended, are evaluated against multiple indicators, strategic behaviors show up anyway.

Lastly, our results prompt some reflections on the viability of mixed evaluation systems, in which indicators simply complement or integrate expert judgments expressed by peers. Our results show that the mere presence of bibliometric indicators in the evaluative procedures is enough to structurally affect the behavior of scientists, fostering opportunistic strategies. Therefore, there is a concrete risk that the indicator-based component will eventually overwhelm the peer-review-based one, and that mixed evaluation systems may de facto collapse into indicator-centric approaches. We believe that further research is needed to better understand and fully appreciate this aspect.

In the meantime, we suggest that policy makers exercise the greatest caution in the use of indicators for their science policy.

A metric from a successful IU-SPEA professor:

Least-Publishable Unit.

Allows for maximum number of publications, and thus citations.

Yep, I agree with the lessons. Anyway, I wouldn’t rule out some role for scientific specialization and narrowing into “Italy-specific” topics if, as I expect, the government promotes research on those it regards as more important for Italy. For example, the plant diseases that are currently important in Italy need not be the same as those in the Netherlands. I would also expect increasing inwardness if public research budgets decrease, at least in comparison with other countries’ budgets, and that may well be the case, given austerity.