Yves here. This article confirms my prejudices about the importance of avoiding those spying home assistants at all costs. And it takes a bit of effort to try to thwart financial institutions’ efforts to use your voiceprint as an ID (I tell them they need to note any recording as invalid because I have my assistants get through the phone trees for me, and if they try taking a voiceprint, it won’t be of the right voice. That seems to put them on tilt).

But the notion of using voice patterns to guess at health issues or psychological profiles or product reactions sounds like 21st-century phrenology. Even so, a lot of consultants will rake in a lot of dough selling these unproven schemes.

By Joseph Turow, Robert Lewis Shayon Professor of Media Systems & Industries, University of Pennsylvania. Originally published at The Conversation

You decide to call a store that sells some hiking boots you’re thinking of buying. As you dial in, the computer of an artificial intelligence company hired by the store is activated. It retrieves its analysis of the speaking style you used when you phoned other companies the software firm services. The computer has concluded you are “friendly and talkative.” Using predictive routing, it connects you to a customer service agent whom company research has identified as being especially good at getting friendly and talkative customers to buy more expensive versions of the goods they’re considering.

This hypothetical situation may sound as if it’s from some distant future. But automated voice-guided marketing activities like this are happening all the time.

If you hear “This call is being recorded for training and quality control,” it isn’t just the customer service representative they’re monitoring.

It can be you, too.

When conducting research for my forthcoming book, “The Voice Catchers: How Marketers Listen In to Exploit Your Feelings, Your Privacy, and Your Wallet,” I went through over 1,000 trade magazine and news articles on the companies connected to various forms of voice profiling. I examined hundreds of pages of U.S. and EU laws applying to biometric surveillance. I analyzed dozens of patents. And because so much about this industry is evolving, I spoke to 43 people who are working to shape it.

It soon became clear to me that we’re in the early stages of a voice-profiling revolution that companies see as integral to the future of marketing.

Thanks to the public’s embrace of smart speakers, intelligent car displays and voice-responsive phones – along with the rise of voice intelligence in call centers – marketers say they are on the verge of being able to use AI-assisted vocal analysis technology to achieve unprecedented insights into shoppers’ identities and inclinations. In doing so, they believe they’ll be able to circumvent the errors and fraud associated with traditional targeted advertising.

Not only can people be profiled by their speech patterns, but they can also be assessed by the sound of their voices – which, according to some researchers, is unique and can reveal their feelings, personalities and even their physical characteristics.

Flaws in Targeted Advertising

Top marketing executives I interviewed said that they expect their customer interactions to include voice profiling within a decade or so.

Part of what attracts them to this new technology is a belief that the current digital system of creating unique customer profiles – and then targeting them with personalized messages, offers and ads – has major drawbacks.

A simmering worry among internet advertisers, one that burst into the open during the 2010s, is that customer data often isn’t up to date, profiles may be based on multiple users of a device, names can be confused and people lie.

Advertisers are also uneasy about ad blocking and click fraud, which happens when a site or app uses bots or low-paid workers to click on ads placed there so that the advertisers have to pay up.

These are all barriers to understanding individual shoppers.

Voice analysis, on the other hand, is seen as a solution that makes it nearly impossible for people to hide their feelings or evade their identities.

Building Out the Infrastructure

Most of the activity in voice profiling is happening in customer support centers, which are largely out of the public eye.

But there are also hundreds of millions of Amazon Echoes, Google Nests and other smart speakers out there. Smartphones also contain such technology.

All are listening and capturing people’s individual voices. They respond to your requests. But the assistants are also tied to advanced machine learning and deep neural network programs that analyze what you say and how you say it.

Amazon and Google – the leading purveyors of smart speakers outside China – appear to be doing little voice analysis on those devices beyond recognizing and responding to individual owners. Perhaps they fear that pushing the technology too far will, at this point, lead to bad publicity.

Nevertheless, the user agreements of Amazon and Google – as well as Pandora, Bank of America and other companies that people access routinely via phone apps – give them the right to use their digital assistants to understand you by the way you sound. Amazon’s most public application of voice profiling so far is its Halo wristband, which claims to know the emotions you’re conveying when you talk to relatives, friends and employers.

The company assures customers it doesn’t use Halo data for its own purposes. But it’s clearly a proof of concept – and a nod toward the future.

Patents Point to the Future

The patents from these tech companies offer a vision of what’s coming.

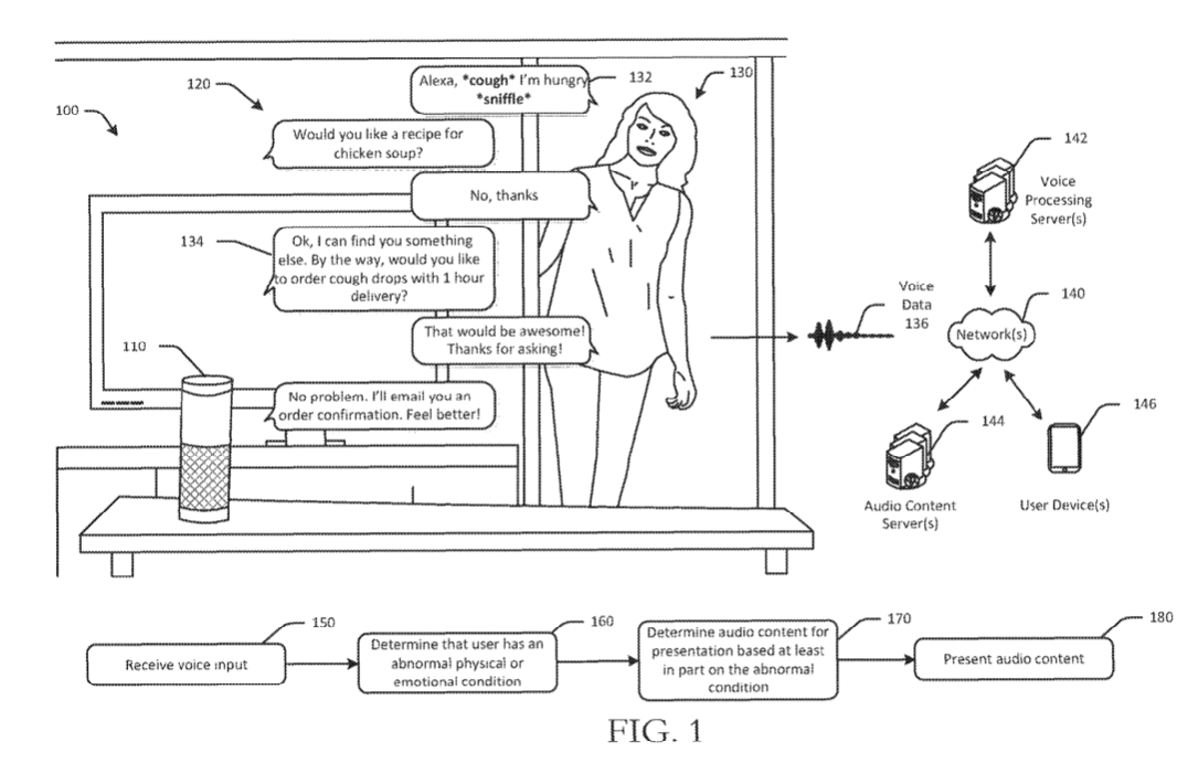

In one Amazon patent, a device with the Alexa assistant picks up irregularities in a woman’s speech that imply a cold, using “an analysis of pitch, pulse, voicing, jittering, and/or harmonicity of a user’s voice, as determined from processing the voice data.” From that conclusion, Alexa asks if the woman wants a recipe for chicken soup. When she says no, it offers to sell her cough drops with one-hour delivery.

Another Amazon patent suggests an app to help a store salesperson decipher a shopper’s voice to plumb unconscious reactions to products. The contention is that how people sound does a better job than their words of indicating what they like.

And one of Google’s proprietary inventions involves tracking family members in real time using special microphones placed throughout a home. Based on the pitch of voice signatures, Google circuitry infers gender and age information – for example, one adult male and one female child – and tags them as separate individuals.

The company’s patent asserts that over time the system’s “household policy manager” will be able to compare life patterns, such as when and how long family members eat meals, how long the children watch television, and when electronic game devices are working – and then have the system suggest better eating schedules for the kids, or offer to control their TV viewing and game playing.

Seductive Surveillance

In the West, the road to this advertising future starts with firms encouraging users to give them permission to gather voice data. Firms gain customers’ permission by enticing them to buy inexpensive voice technologies.

When tech companies have further developed voice analysis software – and people have become increasingly reliant on voice devices – I expect the companies to begin widespread profiling and marketing based on voice data. Hewing to the letter if not the spirit of whatever privacy laws exist, the companies will, I expect, forge ahead into their new incarnations, even if most of their users joined before this new business model existed.

This classic bait and switch marked the rise of both Google and Facebook. Only when the numbers of people flocking to these sites became large enough to attract high-paying advertisers did their business models solidify around selling ads personalized to what Google and Facebook knew about their users.

By then, the sites had become such important parts of their users’ daily activities that people felt they couldn’t leave, despite their concerns about data collection and analysis that they didn’t understand and couldn’t control.

This strategy is already starting to play out as tens of millions of consumers buy Amazon Echoes at giveaway prices.

The Dark Side of Voice Profiling

Here’s the catch: It’s not clear how accurate voice profiling is, especially when it comes to emotions.

It is true, according to Carnegie Mellon voice recognition scholar Rita Singh, that the activity of your vocal nerves is connected to your emotional state. However, Singh told me that she worries that with the easy availability of machine-learning packages, people with limited skills will be tempted to run shoddy analyses of people’s voices, leading to conclusions that are as dubious as the methods.

She also argues that inferences that link physiology to emotions and forms of stress may be culturally biased and prone to error. That concern hasn’t deterred marketers, who typically use voice profiling to draw conclusions about individuals’ emotions, attitudes and personalities.

While some of these advances promise to make life easier, it’s not difficult to see how voice technology can be abused and exploited. What if voice profiling tells a prospective employer that you’re a bad risk for a job that you covet or desperately need? What if it tells a bank that you’re a bad risk for a loan? What if a restaurant decides it won’t take your reservation because you sound low class, or too demanding?

Consider, too, the discrimination that can take place if voice profilers follow some scientists’ claims that it is possible to use an individual’s vocalizations to tell the person’s height, weight, race, gender and health.

People are already subjected to different offers and opportunities based on the personal information companies have collected. Voice profiling adds an especially insidious means of labeling. Today, some states such as Illinois and Texas require companies to ask for permission before conducting analysis of vocal, facial or other biometric features.

But other states expect people to be aware of the information that’s collected about them from the privacy policies or terms of service – which means they rarely will. And the federal government hasn’t enacted a sweeping marketing surveillance law.

With the looming widespread adoption of voice analysis technology, it’s important for government leaders to adopt policies and regulations that protect the personal information revealed by the sound of a person’s voice.

One proposal: While the use of voice authentication – or using a person’s voice to prove their identity – could be allowed under certain carefully regulated circumstances, all voice profiling should be prohibited in marketers’ interactions with individuals. This prohibition should also apply to political campaigns and to government activities without a warrant.

That seems like the best way to ensure that the coming era of voice profiling is constrained before it becomes too integrated into daily life and too pervasive to control.

Very interesting. However, I want Fidelity to use voice printing when I call for banking services. I was impressed when they implemented the technology, and I’m happy they’re using it to identify and prevent bad actors.

Until someone gets a small sample of your voice, uses it to create a fake of you, and has the fake ask Fidelity to withdraw all your money –

https://www.youtube.com/watch?v=VQgYPv8tb6A

Moar tech is not going to solve all our problems.

It was part of the plot in the movie Sneakers, back in the ’90s.

I think I saw that in a Star Trek TNG episode; the one where Data hijacks the Enterprise to go to find Dr. Soong.

No matter what safeguards you try to build, someone is going to find a way around them. Even in a fictional future.

It also worked pretty well in Terminator 2 ;)

> I’m happy they’re using it to identify and prevent bad actors.

Prevent “bad actors” from doing what? It’s quite something how quickly the perfectly serviceable English language is being replaced by a hybrid mishmash of management-speak, trendy internet slang and military euphemisms.

> It’s quite something how quickly the perfectly serviceable English language is being replaced by a hybrid mishmash of management-speak, trendy internet slang and military euphemisms.

**********

Language is being mined like everything else and, in the process, destroyed and made unusable. Surprisingly, this is little commented on by those who should know. I believe that part of the so-called anti-science phenom is language degradation.

For example, vaccine and pandemic have been redefined by the Covid Crowd. Social media for the exact opposite. YOUTUBE for THEIRTUBE.

I recommend the works of Victor Klemperer, a language scholar who had a ringside seat in Berlin to document in detail the wordy escapades of Nazi propaganda. It would be wonderful to have such a skilled language practitioner commenting on today’s language abuses and assaults.

https://en.wikipedia.org/wiki/Victor_Klemperer

We are living through an accelerated/technified version of what Klemperer experienced in Germany.

You are remarkably naive. Anyone with the tech tools and a 30 second recording of your voice can fake it. Some firms who claim to have spook state connections say they can do it with IIRC a mere 9 seconds.

This is why I’m worried: when I ring Centrelink (Welfare Services) in Australia, they use a voice print to identify you before you speak to an operator or update income details. Asking for trouble.

But only for you, not for them.

“A claim denied is another soul saved or something”.

Video as well as audio can already be successfully faked in real time and is in operation: https://nltimes.nl/2021/04/24/dutch-mps-video-conference-deep-fake-imitation-navalnys-chief-staff

Any cybersecurity professional will tell you that using static identifiers for verification in myriad third-party services is a huge mistake. In that regard, using your voice as confirmation is no better than using your date of birth or Social Security number: you can’t change it!

The good thing about voice fingerprinting is that there are already tons of good text-to-speech applications. So you can trivially claim you use one of those to go through the phone trees (if they are voice-activated, which I find a pain).

Tagging someone as “friendly and talkative” while they’re being routed through voicemail hell would either be a first or a sign that the AI is pretty dumb. Or maybe it’s just me who defaults to “aggressive and hostile” every time I’m subjected to that fake treacly faux NPR computerized voice…

Gee, I wonder what would happen if you used one of those real-time voice modulators to change your sex or even give you a chipmunk voice-

https://inspirationfeed.com/voice-changers/

Had to ring the Tax mob here in Oz a coupla years ago and the recording asked if in future I wanted to be identified by my voice. Even though I said no, you knew that they kept it on file and you wonder how widespread this is.

I was thinking of trying to acquire one of those gadgets you see in the crime-oriented moving picture shows that alters the voice to sound deep and harsh. Use it to answer any call from an unknown number. Have a little fun freaking them out (momentarily) while preventing voice profiling. I wonder if there’s an app for that by now…the Kermit setting could be fun too.

Looks like there are smartphone apps that will change your voice on a phone call. That could be useful. I don’t know if any of them work well.

Ofc that can only help when the listening device is on the other end of a phone call. Not much use when, for example, conversing in person with someone who has a phone that’s listening all the time.

There is an effect, the Eventide Harmonizer, that is sometimes used to alter voices (Darth Vader’s voice in Star Wars for example). It’s an expensive audio device mostly used in recording studios, but nowadays I’m sure there is some app that can do similar things.

Darth Vader Voice, if it’s trickled down to the masses, and especially can be further enhanced, could set just the right tone for many an unwanted call.

In all seriousness, rhythm of speech, word usage, any identifiable pattern is/will be part of a vocal print, so voice-altering gadgets or apps can only disguise so much. Wasn’t that part of the conceit in a movie called IIRC “Ransom?” Some recognizable phrase. It still would be fun to talk like Darth to unwanted callers for a while, much more so if you can actually hold that little black box up to your phone.

For PC use, consider Clownfish Voice Changer. It has DV and many other voices.

The problem with that is you then have another potential point of failure. Who/what is to prevent the app or gizmo that modulates your voice from first selling it to highest bidder -as in a silent online auction- PRIOR to modulating it for the destination you assume it’s exclusively going to?

Old devices made before 2005 that have no means of connecting, wireless or otherwise, to some mothership would be more likely to work as advertised. I would try a big wad of cotton, but then AI could probably filter that out. Otherwise, you need to say something like, “Well, at least I tried” (perfectly reasonable nowadays), or know a lot and/or have special equipment before you could assume the problem is solved.

I prefer my customer service without the voice profiling, please. That’s why, since Day 1, I’ve never talked back to the robo phone-operator. I simply start banging on ‘0’ until I’m connected to a human. Or, if that doesn’t work, I start mumbling unintelligibly in response to the robo questions.

You misunderstand. Modern Customer Service means Serving up the Customer.

Do you mean like in the Twilight Zone episode, “To Serve Man?”

It’s the follow-up tome: To Serve Customers. More of an appeal to an international palate than the original, though the first volume was after all from the ’50s.

I’ve found the answer “aeuieueooeiueoueuoiueuiahh!” to any robo-question, repeated a few times, usually leads to being connected to a human faster than saying so in any intelligible form.

“Don’t get on the ship! That book? It’s a….cookbook!!” Thanks for that; it’s a classic I’ll never forget.

It seems we’ve got weirder stuff now. For whatever reason, those automatic answering programs do not understand me. I’ve found if you get scrappy with them (such as Joseph K suggests babbling some nonsense) they throw up their robotic hands and they get you to a person.

Someone once advised me to shut up through the whole menu thing and they get you to a human. But many companies are on to this. Unfortunately. You may want to stick with insane babbling.

Yes, silence used to work. Now, sounding like a) a ferinner, b) an oldster without dentures, c) someone with special needs, or any other demographic AI can’t handle yet, means that regrettably the human of last resort is going to have to be tasked, and paid. So far, mixing up “aeuieueooeiueoueuoiueuiahh!” with “aeuieuueiahh!” and “uoiueuiahh!” etc. works. So far. Next may have to be Darth Vader voice.

….Because you just *know* Yolanda (‘Baby’ to friends) in Manila, or Prabaljit (‘Jeet’) in Pune, have both the expertise to understand and the authority to resolve your Special Need (which is Important to Us).

You don’t say words like that, Saint Louise is listening (Soul Coughing)

(apologies in advance to the NC comrade who is irked by comments in the form of song lyrics)

What!?

Say it ain’t so…

https://www.youtube.com/watch?v=ENXvZ9YRjbo

Not so long ago, most people would be outraged if they discovered someone had planted eavesdropping devices in their home. Now some tech. co’s have persuaded people to pay to “bug” themselves!

I have to (grudgingly) admit that’s an amazing bit of marketing/salesmanship.

A casual friend I know from the dog park talked about how he had finished refurbishing his home and how it has Alexa everywhere controlling everything. I looked at him wide-eyed, thinking that I won’t be visiting that home.

I can visualize the marketing task force meeting.

“So wait, you’re saying we train the frog to gradually turn up the heat on itself? Brilliant! I guess when it can no longer do that, it’s about ready to eat anyway. Maybe give it a couple of minutes to cook through. All speaking metaphorically of course, I mean we’re not barbarians!”

A few times over recent years, I’d been prompted by computerized voices to speak slowly and answer prompts such as “What is your destination?” Even simple prompts made me suspicious, such as “Say yes to confirm or no if you would like something else.” From a previous life as an audio engineer, I knew they could analyze the waveform and deduce many things. So I would gargle, yodel, or sing my response in falsetto. I have never put financial or personal information online and wasn’t about to do so through audio. At this point, I use a Harmon or cup mute to speak to institutions via the phone.

This sentence from the article gave me a laugh: “it’s important for government leaders to adopt policies and regulations that protect the personal information…..”. No, I think most of us are so enamoured by the new, shiny toys that we have lost our way and have nowhere to turn. My latest bumper sticker idea: “Eschew Convenience”.

Halo: “The company assures customers it doesn’t use Halo data for its own purposes.”

Actually it doesn’t, because “its own purposes” includes selling the aggregate data at least.

The privacy policy makes a big noise about the voice samples not being shared outside the phone. Of course not; the voice fingerprints are sent instead.

Also: “19. How can I delete my Halo health data? … data deletion includes currently stored on your smartphone.” but not the fingerprints stored by Amazon of course.

As Yves said, no way San Jose.

A few years ago I encountered voice recognition at work when I was trying to connect to another department. I finally had to find someone to speak for me. I am a Canadian from Scotland.

https://www.youtube.com/watch?v=MNuFcIRlwdc

The problem is that the companies that have developed these voice-profiling and facial recognition technologies are probably talking to interested parties in the Department of Homeland Security, and it is probably a matter of time before the TSA adopts facial recognition and voice scanning as a requirement for boarding flights, much as it did with body scanners.

I doubt any degree of protest or backlash would be able to change Washington’s mind.

> Amazon’s most public application of voice profiling so far is its Halo wristband, which claims to know the emotions you’re conveying when you talk to relatives, friends and employers.

> The company assures customers it doesn’t use Halo data for its own purposes. But it’s clearly a proof of concept – and a nod toward the future.

Amazon “spokespeople” are lying sacks of shit. Not one word they say has an iota of truth.

Know what else this portends? Moar power sucking data centers to store all the gibberish Amazon, Googlag and the rest of the digital creeps collect. And because they use so much electricity they get it super cheap instead of being charged triple retail to discourage the gargantuan waste. All to sell you moar garbage that you don’t need. What a waste of a STEM education. That’s what so called “data scientists” signed up for?

I’m glad I have no children to suffer in the digital hellhole being built by these creeps.

Goolag is good…nimitz units (10 bil for those who came in late) seem quaint anymore

Tagging someone as “friendly and talkative” …?

Consider the reverse, their response with someone frustrated and angry. Automatically routing, with no human interaction to um the call center equivalent of a padded cell perhaps.

In fact, for years call centers have been doing this manually, with the unfortunate schmuck first in line sensing the caller’s feelings and routing the call to an unanswered, endlessly ringing extension or just putting it on hold: “your call is important to us and we’ll attend to you shortly.”

If a menu comes up asking you to say yes or no, you can typically use the keypad numbers 1 or 2 instead. That’s what I do. Try to use the number pad where possible instead of speaking. The option is usually available even though they don’t tell you it is.

Naturally I wonder if smartphones and their various apps don’t already do this, not to mention desktops and laptops, all of which are equipped with mikes. And of course Ma Bell and Verizon and on and on get our voices all the time. What are the laws that protect the user from those behemoths? Is whatever is left of privacy law strong enough to dampen the enthusiasm of companies like Google or Amazon, which seem to consider laws, like taxes, quaint vestiges of once-upon-a-time nation states?

yes to this

They’ll do whatever they can’t be actively prevented from doing. If it’s illegal, they call it data research, then start lobbying Congress to write laws to accommodate whatever grift they can mine from the mountain of said data. No need for facial recognition; the camera on your phone has given them a detailed three-dimensional you, your location, your habits, and whether you like brunettes. I still think back to when somebody, I think it was the NSA, revealed Google’s offshore data shenanigans, and I’m sure Google was all, “Hey, we would have given you all that data! Why did you tell everyone we’re collecting it!” And now Bezos is consulting the Pentagon. At this point I truly feel the only thing that could stop the path we’re on is a massive economic crash due to an unexpected event: hurricanes, an earthquake, or a pandemic that kills lots more people than Covid.