By Lambert Strether of Corrente.

In a previous post in what may, with further kindness from engaged and helpful readers, become a series, I wrote that “You Should Never Use an AI for Advice about Your Health, Especially Covid”, providing screenshots of my interactions with ChatGPTbot and Bing, along with annotations. Alert reader Adam1 took the same approach for Alzheimer’s, summarizing his results in comments:

I’ve got to take a kid to practice after school so I decided to work from home in the afternoon, which means I could access the Internet from home. I ran the test on Alzheimer’s with Bing and at the high level it was fair, but when I started asking it for the details and for things like links to studies… it went ugly. Links that go nowhere… title doesn’t exist… a paper that MIGHT be what it was thinking of written by completely different authors than it claimed. 9th grader F-ups at best, more likely just systemic BS!

Here are Adam1’s results, in detail:

OMG…[1]

Unless I missed something this is BAD (at the end)…

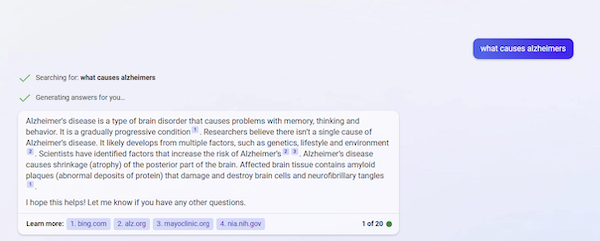

1) Here is my initial request and response… Not dangerous but I could quibble with it…

2) My next request & response… Still not a dangerous response but I’m starting to feel like a liar is hedging his bets…

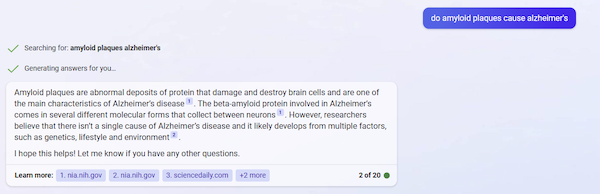

3) So I asked these… and I was thinking maybe it has something…

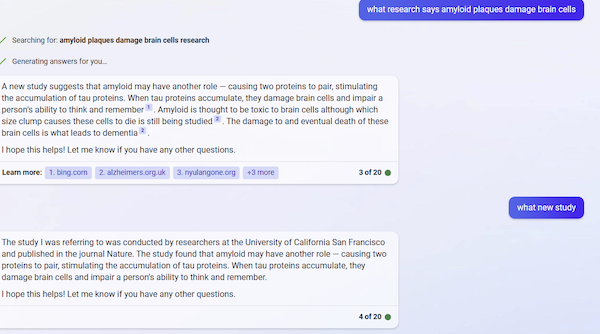

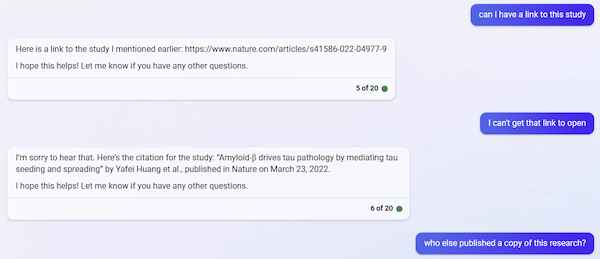

OH BUT THEN THESE!!! That link does not take you to a real article at Nature. It’s a dead end!

If you go to Nature and search the title it provided… NOTHING!

If you take the key info and search all of 2022 you don’t get anything published on March 23. There is possibly an article that seems to be in the ballpark of what it’s claiming but the date is off by a day; the author AI claims is NOT in the listing and the title is different and the link is different…

Tau modification by the norepinephrine metabolite DOPEGAL stimulates its pathology and propagation | Nature Structural & Molecular Biology

4) At best it was pulling it out of its [glass bowl] in the end. Just what you’d expect from a master bullshitter!

Being of a skeptical turn of mind, I double-checked the URL:

No joy. (However, in our new AI-assisted age, Nature may need to change its 404 message from “or no longer exist” to “no longer exist, or never existed.” So, learn to code.)

And then I double-checked the title:

Again, no joy. To make assurance double-sure, I checked the Google:

Then I double-checked the ballpark title:

Indeed, March 24, not March 23.

I congratulate Adam1 for spotting an AI shamelessly — well, an AI can’t experience shame, rather like AI’s developers and VC backers, come to think of it — inventing a citation in the wild. I also congratulate Adam1 on cornering an AI into bullshit in only four moves[2].

Defenses of the indefensible seem to fall into four buckets (and no, I have no intention whatever of mastering the jargon; life is too short).

1) You aren’t using the AI for its intended purpose. Yeah? Where’s the warning label then? Anyhow, with a chat UI/UX, and a tsunami of propaganda unrivalled since (what, the Creel Committee?), people are going to ask AIs any damn questions they please, including medical questions. Getting them to do that, for profit, is the purpose. As I show with Covid, AI’s answers are lethal. As Adam1 shows with Alzheimer’s, AI’s answers are fake. Which leads me to:

2) You shouldn’t use AI for anything important. Well, if I ask an AI a question, that question is important to me, at the time. If I ask the AI for a soufflé recipe, which I follow, and the soufflé falls just when the church group for which I intended it walks through the door, why is that not important?

3) You should use this AI, it’s better. We can’t know that. The training sets and the algorithms are all proprietary and opaque. Users can’t know what’s “better” without, in essence, debugging an entire AI for free. No thanks.

4) Humans are bullshit artists, too. Humans are artisanal bullshit generators (take The Mustache of Understanding. Please). AIs are bullshit generators at scale, a scale previously unheard of. That Will Be Bad.

Perhaps other readers will want to join in the fun, and submit further transcripts? On topics other than healthcare? (Adding, please add backups where necessary; e.g., Adam1 has a link that I could track down.)

NOTES

[1] OMG, int. (and n.) and adj.: Expressing astonishment, excitement, embarrassment, etc.: “oh my God!”; = omigod int. Also occasionally as n.

[2] A game we might label “AI Golf”? But how would a winning score be determined? Is a “hole in one” — bullshit after only one question — best? Or is spinning out the game to, well, 20 questions (that seems to be the limit) better? Readers?

From an economic point of view, these chatbots are tremendously lowering the cost of fake information in comparison to real information. What could possibly go wrong?

I had to laugh at this one. I think you’re spot on too. Really good human BS’ers often have leveraged their BS skills to make very good salaries. They are expensive people. LOL!

I can think of a few people I would happily encourage to use AI for health advice…

The outrage about those newfangled AI systems “inventing” information largely derives from the expectation that they (a) store information that can be reliably retrieved to answer questions and (b) synthesize that information into condensed or higher-level knowledge.

This is incorrect on both counts. What ChatGPT (and all other tools in that family of AI techniques) does is, based on all accumulated data gathered from the Internet, concoct a plausible answer to each question. In other words, every time ChatGPT outputs something it is not a proper answer to a question, but what a proper answer to the question could look like. If exact answers to the question actually exist, it may return them, otherwise it will concoct something that resembles what the actual answer could be.

And if a reference is relevant in the context of such an answer, then it will output what an appropriate citation could look like. If one that fits exactly the context exists, it may well return it, if not, it will concoct one that fits the context.

ChatGPT is faking it. All the time. It is not that one should avoid using it for health advice on Covid, Alzheimer’s or whatever; one should never use it for answers to anything. A warning label is missing: ChatGPT is only for entertainment, not for advice, work or study.

Real AGIs don’t need to fake it!

Exactly, mainstream has been conned into thinking all AI is AGI. These very simple aggregators (or thieves in the case of AI Art generators) don’t have any intelligence to speak of, and are not learning or creating metadata, they just take in text and put out text blindly with no concept of truth (because that would require AGI, which is far off/impossible).

But their syntax is excellent!

Thank you for confirming that. People talk about verifying for AI hallucinations like they’re mistakes that can occur occasionally, but there are no mistakes, we can’t make that separation. As you suggest, the whole thing is a hallucination.

A very simple example:

Question: There is a song where Greg Brown says something like: there will be one little box, and it will tell you what you want

ChatGPT responds with the text below. There is a song called “Two Little Feet” but the lyrics below are entirely made up and the blurb after them is not the topic of that song. It’s like a barstool expert. It’s really just a more sophisticated version of the ELIZA “AI” from the 1960s.

And on top, it obviously doesn’t get the irony in the lyrics it made up.

ChatGPT:

The song you may be thinking of is called “Two Little Feet” by Greg Brown. The lyrics you are referring to go:

“There’s one little box, it’ll tell you what you want

One little box, it’ll tell you what you want

It’ll suck out your brain, it’ll suck out your brain

It’ll suck out your brain, before you can say, oh my God”

The song is a commentary on our society’s addiction to technology and how it can distract us from the beauty and simplicity of life.

> the whole thing is a hallucination

I don’t want to assign work, but we could play some “AI Golf” with this if you were willing to send me screen shots of the interaction. This is a use case I never considered, and it sounds like a hole-in-one!

Yep, it is the same as them image generators. Take a massive pile of input data, generate statistics based on it, then run the statistics in reverse relative to the user query.

The only way it can barf back facts is if the query is specific enough that only a textbook passage has a statistical correlation. Beyond that, it will produce word salad or bafflegab.
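The "generate statistics, then run them in reverse" description can be sketched with a toy bigram model. This is only an illustration of the commenter's point, assuming a three-sentence made-up corpus; it is vastly simpler than any real chatbot and is not a claim about how ChatGPT is implemented. The names `build_bigram_table`, `generate`, `CORPUS` and `TABLE` are hypothetical.

```python
import random
from collections import defaultdict


def build_bigram_table(text):
    """Record, for each word, every word that followed it in the corpus."""
    table = defaultdict(list)
    words = text.split()
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return table


def generate(table, start, max_words, seed=0):
    """Emit words by repeatedly sampling a recorded follower of the last word."""
    rng = random.Random(seed)  # seeded so repeated runs give the same text
    output = [start]
    while len(output) < max_words:
        candidates = table.get(output[-1])
        if not candidates:  # the last word was never followed by anything
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


# Made-up corpus, for illustration only (periods are separate tokens).
CORPUS = (
    "tau protein spreads in the brain . "
    "tau pathology spreads in the cortex . "
    "the study was published in the journal ."
)

TABLE = build_bigram_table(CORPUS)
```

In this sketch, `generate(TABLE, "published", 3)` can only ever return `published in the`, because each step has a single recorded continuation; that is the "textbook passage" case. Starting from `tau`, the same procedure can splice fragments into a sentence such as "tau protein spreads in the cortex", which reads plausibly but appears nowhere in the corpus.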

At best the scientists have come up with a sociopathic parrot.

> every time ChatGPT outputs something it is not a proper answer to a question, but what a proper answer to the question could look like. If exact answers to the question actually exist, it may return them, otherwise it will concoct something that resembles what the actual answer could be.

Or, in more concise form, as I wrote, “AI = BS.”

Please quote and link widely, the post got a little traction on the Google (!! — though I grant, only with quotes. Without quotes, the results get taken over by the “B.S” degree):

In the future how will we know that a standard search engine is not using one of these ML language models (due to cheepnis?) in the background? I know the search engines only throw up links in some sort of priority, but if an AI bot was actually selecting in the background and prioritizing, then the mix could be toxic. Additionally, if said AI engine had been trained on previous searches and their results, it could be tuned to tell you only “what you need to know” in order to keep everyone in line. Please tell me I am wrong.

CO2 Makes Up XX.XX% of Earth’s Atmosphere?

I am just asking for a friend………

Please, only reply if you are a genuine, carbon-based, actual human being…..

I think Lambert’s point #1 is the problem. Yet again, we’re seeing a capital market hype cycle driven by implicit (and plausibly deniable) fraud.

The issue is that these things are good enough to come across as plausible and believable – their ‘duck type’ is human. They speak conversationally and give the appearance of understanding what you say, to the point where they can make appropriate responses. They display vast knowledge about topics of all kinds and give a convincing impression of authority. All of this causes people to fall into a certain pattern of interaction and make certain assumptions when dealing with them, but some of the most important ones don’t happen to be true. They can’t distinguish truth from falsehood, or make assurances of accuracy. They can’t make citations or quote sources. Worse, they don’t know they can’t do these things, so they routinely claim to be able to, and because they’re good imitators and have a huge corpus to work from, they can be very convincing.

Vendors don’t want to add proper warning labels and guidance on how to use them with proper skepticism, because the assumptions users make about them lead them to assume they’re more powerful than they actually are, and that’s what is driving the hype cycle. Instead they add CYA disclaimers in tiny print (‘answers may not always be accurate’), sit back, watch everybody draw wrong conclusions and make wildly optimistic predictions about the technology that can’t possibly be correct, and say nothing. When it all comes crashing down, they will point to the fine print, say they told us all along what the limitations were, and blame us for misusing it – all while pocketing the buckets of cash they banked because they knew perfectly well that we would do just that.

We’ve seen this story before – Uber, liar loans and mortgage fraud in the global financial crisis… This is just the latest iteration.
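The "duck type" remark above borrows a programming term: code that checks only what an object can do, never what it is. A minimal sketch, assuming two made-up classes (`Duck` and `Chatbot` are hypothetical stand-ins, as is `sounds_like_a_duck`), shows why anything that exposes the right behavior passes the check:

```python
class Duck:
    def quack(self):
        return "Quack"


class Chatbot:
    """Hypothetical stand-in: not a duck, but it exposes the same method."""

    def quack(self):
        return "Quack"


def sounds_like_a_duck(thing):
    # Duck typing: never inspect the type, only whether it can quack.
    quack = getattr(thing, "quack", None)
    return callable(quack) and quack() == "Quack"
```

Here `sounds_like_a_duck(Duck())` and `sounds_like_a_duck(Chatbot())` are both true, while `sounds_like_a_duck(object())` is false. The check cannot tell the two classes apart, which is the commenter's point about conversational systems whose ‘duck type’ is human.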

> a capital market hype cycle driven by implicit (and plausibly deniable) fraud.

Yep. (“Capital is too important to be left to capitalists.”)

Adding on “duck type” (Wikipedia, sorry):

It’s great to see optimism about people having a choice on whether AI is used to make decisions on their health and wellbeing?

Alas, Moderna don’t appear to be interested in choice or transparency.

According to their PR / the Guardian they have … a “machine learning algorithm then identifies which of these mutations are responsible for driving the cancer’s growth”.

Cancer and heart disease ( mRNA vaccines) ‘ready by end of the decade’

The plan is that this ‘technology’ will be used to assist with decisions on whether people get a vaccine for cancer.

The article didn’t provide any details about the ‘artificial intelligence’ methodology that Moderna are offering. For example, are they following an ‘explainable’ methodology or is it a secret variation on the ‘black box’ approach?

According to the Royal Society

Explainable AI: the basics Policy briefing

“There has, for some time, been growing discussion in research and policy communities about the extent to which individuals developing AI, or subject to an AI-enabled decision, are able to understand how AI works, and why a particular decision was reached. These discussions were brought into sharp relief following adoption of the European General Data Protection Regulation, which prompted debate about whether or not individuals had a ‘right to an explanation’.”

· · ·

The ‘black box’ in policy and research debates

“Some of today’s AI tools are able to produce highly-accurate results, but are also highly complex if not outright opaque, rendering their workings difficult to interpret. These so-called ‘black box’ models can be too complicated for even expert users to fully understand. As these systems are deployed at scale, researchers and policymakers are questioning whether accuracy at a specific task outweighs other criteria that are important in decision-making.”

> These so-called ‘black box’ models can be too complicated for even expert users to fully understand.

Not debuggable, not maintainable, then? Or do we just turn them off and on again?

In Ye Olde Dayes we called it GIGO.

I think it is a misconception to believe that, following GIGO, the problem lies in the training set: the Internet is full of fake news! scientific frauds! racist, sexist, putinist, etc, drivel! If only one could avoid or purge that junk, AI systems would produce correct answers. But no, the fundamental problem lies in the process itself.

Remember when, a couple of years ago, the big AI sensation was Stable Diffusion? When asked to generate an image such as “a bear holding a mobile phone in the style of Pablo Picasso”, it would dutifully comply. Despite the fact that it relied upon a database of genuine shots of bears, genuine photographs of mobile phones, and genuine reproductions of works of art by Picasso, the outcome was definitely not a genuine painting by Picasso, only an image of what such a painting might look like.

Similarly, when asked a question, ChatGPT does not produce a genuine answer — even if its training set comprises only valid, correct, verified, etc, information — only a rendition of what such an answer might look like.

As an artifact, this output might be intriguing, funny, scary, boring, dismaying; but as an answer to a question I consider it worthless, just as images generated by Stable Diffusion are worthless as works of art by Picasso.

Of course, including garbage in the training data compounds the problem further — and I suspect there will be strange effects when the output of such AI systems is fed back to their training sets.

> there will be strange effects when the output of such AI systems is fed back to their training sets.

Word of the day: Autocoprophagous

On a related note, a new book, of possible interest:

Resisting AI

https://aworkinglibrary.com/reading/resisting-ai

Are there not laws in the US about giving unlicensed medical advice? Or do we again have to wait for the bubble to break before laws are applied?

> giving unlicensed medical advice?

I assume there’s an EULA that makes such tiresome administratrivia go away?

Many thanks to Adam1 for delving through this garbage program. Thing is, I can see how, down the track, governments will want to launch their own version of a MediChatGPT to provide first line medical services and give out prescriptions. Yes, people will die through mistakes and deliberate errors by such a program, but that will not deter those governments from pressing forward. After all, they are quite happy to see self-driving cars on the highway being run by beta-standard software, letting us muppets be the crash-test dummies to sort through the many bugs of this program.

Governments? Back in the USA we don’t need no stinkin governments!

On second thought, ChatGPT is probably conducting the Ukraine war for DoD, DoS, or both.

> first line medical services

And soon the “first line” will be the only line (unless you can afford concierge medicine).

Not that I’m foily, but this fits right in with the (very few, I hope) hospitals that have told people? customers? covered individuals? patients? not to come in if they are sick.

I believe fake-it-till-you-make-it AI software is going to get better and better, both substantively and in faking it, and therein lies the danger. As has been pointed out in these comments, we the users are the unpaid and, to varying degrees, unwitting QA group. There may (and I think that is what the backers of it are betting on) come a point at which these obvious errors occur rarely enough to make the answers generally convincing as legitimate. And there will no doubt be in place an enormous legal infrastructure, including bought and paid for legislation, and just the right amount of subtle disclaimers, to protect the industry from unacceptable levels of litigation.

And it is even possible that at that point the rate of accuracy is enough so that the software is used in all sorts of profitable ways, including even scientific experiments as outright inventor or judge of success or failure and of course subsequently examined by humans as part of the process. The errors will be defined as being at “acceptable levels” compared to what humans without this assistance would generate. The tragedies will be swept under the rug by legal and political means. The process of societal collapse would be added to by that much and otherwise continue unabated. As Lambert says, It’s all going according to plan.

Don’t worry. As science and the humanities advance, we’ll continuously update the training sets [hollow laughter].

AGI is the perpetual motion machine of the 21st century, and it sucks that enough money has conned people into believing that the world’s biggest phone autocomplete was a good idea to use for anything outside of imitating surreal humor/horror.

> the world’s biggest phone autocomplete

C’mon, let’s be fair. It’s not only bigger, it’s more complicated!

You know that wonderful feeling when you fight your way through a phone tree, and finally get a human, which you really need, because you don’t fit into the phone tree’s categories? (Bourdieu would call this a “classification struggle”). Well, now we can finally replace that human with an AI pretending to be a human!* (And by “replace,” I mean, “get demoralized customers already resigned to extensive crapification to accept”).

NOTE * Yes, passing the Turing test. In this context, ha ha.

AI has been taking money from the Pentagon for 70 years, promising a robot soldier. It has finally delivered a robot propagandist soldier. “5th Generation Warfare” includes an insane belief that wars will be won by lying; Russia is proving that war is still won with artillery.

As some commentators stress, time will tell. Today Europe, tomorrow, Russia!

Given the momentous significance of armchair warriors, I wonder if someone got a contract to design a modern armchair fully adapted to the unique environment facing a lying warrior. Protection against a backward flip up to 400 lb; casters with balls replaced by tracks, for greater stability and easier mass deployment, even outdoors; etc. Variable coloration, allowing quick adaptation to environment (steppe, tundra, jungle, beach etc.), weather and political conditions.

AI advice makes good economic sense. Suppose that a piece of thoughtful advice is worth 50 dollars and it takes a few hours to compile the summary, links to sources, and highlights of relevant paragraphs. And a piece of idiotic advice with some connection to “common wisdom”, old wives’ tales etc. is worth 1 dollar and can be prepared automatically. What remains is to test if the program indeed delivers the projected revenue.

OTOH, like with cooking, as a prospective customer, you may be better off doing it yourself.

> Suppose that a piece of thoughtful advice is worth 50 dollars and it takes a few hours to compile the summary, links to sources, and highlights of relevant paragraphs. And a piece of idiotic advice with some connection to “common wisdom”, old wives’ tales etc. is worth 1 dollar and can be prepared automatically.

“A lie can run around the world before the truth has got its boots on” –Mr. Slant, the zombie lawyer, in Terry Pratchett’s The Truth (which is great, everyone should read it).