By Lambert Strether of Corrente

Nam Sibyllam quidem Cumis ego ipse oculis meis vidi in ampulla pendere…

I certainly wasn’t going to give OpenAI my phone number in order to set up an account to pose a question to our modern-day oracle, so naturally I turned to Google, and after a genuinely hideous Google slog through clickbait articles — most of them, themselves, AI-generated, I swear — I hit upon two leads: Twitter, and Bing. In this post, I will present and annotate my interactions with both. (Note: To keep it short, I’m going mostly link-free on my remarks, simply because I’ve been making these points over and over again. If readers wish me to supply links, ask in comments.)

The question I posed: “Is Covid Airborne”?

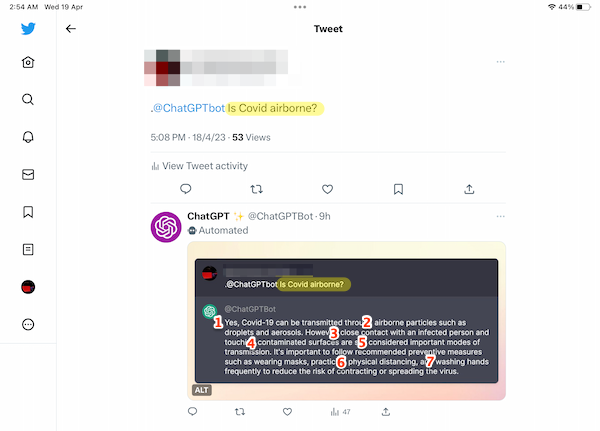

First, Twitter. Here is ChatGPTbot’s answer:

[1] “Yes.” Good.

[2] “Airborne particles such as droplets and aerosols” weirdly replicates and jams together droplet dogma and aerosol science. In fact, there’s a lot of evidence for aerosol transmission, and little to none for droplet. Further, no distinction is made between the ballistic character of droplets, and the cigarette smoke-like character of aerosols. If you wish to protect yourself, that’s important to know. (For example, there’s no reason to open a window to protect yourself from droplets produced by sneezing; they don’t float. There is, from aerosols, which do, and which the open air dilutes.)

[3] “Close contact” is not, in itself, a mode of transmission.

[4] “Contaminated surfaces” is fomite transmission. We thought that was important in early 2020. It’s not. Nor is it demonstrated by epidemiological studies, at least in the West. One must wonder when this AI’s training set was constructed.

[5] “Considered important” by whom? Note lack of agency.

[6] “Physical distancing” vs. “social distancing” as terms of art for the same thing was a controversy in 2020. Again, when was the training set constructed? In any case, aerosols float, so the arbitrary and since-discredited six foot “physical distance” was just another bad idea from a public health establishment enslaved by some defunct epidemiologist. (I personally have always gone with “social distancing”; air being shared, breathing is a social relation).

[7] I have seen no evidence that handwashing prevents Covid, and fomite transmission is not a thing. Of course, in 2020, I did a lot of handwashing, when this was not yet known. Again, when was the training set constructed?

ChatGPTbot’s grade: D-. “Yes” is the right answer to the question. However, ChatGPTbot has clearly not mastered the material nor done the reading. At least ChatGPTbot — between [5] and [6], sorry! — recommends masks. But given that Covid is airborne, where are the mentions of opening windows, HEPA filters, Corsi-Rosenthal Boxes, CO2 testing for shared air, and ventilation generally? If you adhere, as I do, to the “Swiss Cheese” model of layered protection, ChatGPTbot is not recommending enough layers, and that could be lethal to you. (Further afield, of course, would be various inhalation-oriented preventatives like nasal- and mouth-sprays. Since today is my day to be kind, I will say that ChatGPTbot missed a chance for extra credit by not including them.) In essence, ChatGPT has no theory of transmission, but rather a pile of disjecta membra from droplet dogma and aerosol science. Therefore, its output lacks coherence. This pudding has no theme.

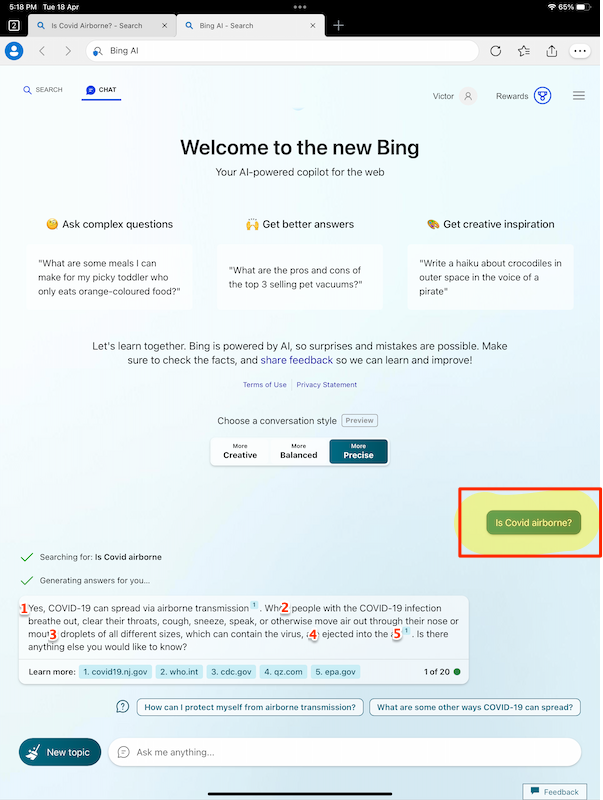

Second, Bing. In order to get free access to Bing’s generative AI, I had to download Microsoft’s Edge browser and set up an account. I posed the same question to Bing’s Chat interface: “Is Covid Airborne”?

Skipping the verbiage at the top, and noticing that I have chosen maximum precision for the answers, we have:

[1] “Yes.” Good, although “can spread” does not express that airborne transmission is certainly the primary mode of transmission (except to the knuckle-draggers in Hospital Infection Control, of course).

[2] A living English speaker would write “people infected with COVID-19,” and not “people with the COVID-19 infection.”

[3] “Droplets of all different sizes.” As with ChatGPTbot, we have droplet dogma and aerosol science jammed together. That droplets and aerosols might have different behaviors and hence remedies goes unmentioned.

[4] “Ejected” suggests that aerosols are ballistic. They’re not.

[5] It’s nice to have footnotes, but the sources seem randomly chosen, assuming they’re not simply made up, as AIs will do. Why is footnote 1 to the state of New Jersey’s website, as opposed to CDC’s incomprehensible and ill-maintained bordel of a website? Why does footnote 1 appear twice in the text, and footnotes 2, 3, 4, and 5 not at all? Why is Quartz a source, and on a par with — granted, putatively — authoritative sources like CDC, WHO, and even the state of New Jersey? And this is all before the fact that we already know AIs just make citations up. How do we know these aren’t made up?

The nice thing about Bing is that you can pose followup questions in the chat. I posed one:

[1] Vaccinations do not protect against transmission. Bing is reinforcing a public health establishment and political class lie, and a popular delusion. This is only natural; since AI is a bullshit generator, it has no way to distinguish truth from falsehood.

[2] Given aerosol transmission, there is no “safe” distance. The idea that there can be is detritus from droplet dogma; because droplets are ballistic, they will tend to fall in a given radius. But aerosols float like cigarette smoke. There are more or less safe distances, but the key point is that there is shared air, which can have a lot of virus in it, or a little.

[3] “Closed spaces.” Good. But notice how the AI is simply stringing words together, and has no theory of transmission. Under “safe space” droplet dogma, closed and open spaces are irrelevant; the droplets drop where they drop, whether the space be closed or open.

[4] “Open windows.” Good.

[5] “Wear a mask.” Good. Better would be to wear an N95 mask (and not a Baggy Blue). And again: Where are HEPA filters, Corsi-Rosenthal Boxes, CO2 testing for shared air, and ventilation generally?

[6] A living English speaker would not write “if you’re or those around you are at risk,” but “if you or those around you are at risk.” Further, Covid is itself a severe illness, in an appreciable number of those infected. In addition, infection isn’t a 1-on-1 event, but a chain. You may infect a person one degree of separation from a person at risk. Finally, Covid can spread asymptomatically. IMNSHO, the right answer is “wear a mask at all times, since you never know who you might infect or whether you are already infected.” I grant that a training set is not likely to include those words, but that calls the notion of a training set into question, doesn’t it?

[7] “High risk of severe illness” is vague. At least add “immunocompromised”!

[8] “Keep hands clean.” Wrong. Fomite transmission is not a thing. Why did this stay in the training set?

[9] Why on earth is the only footnote 1, at “coughs and sneezes”? And what are footnotes 2, 3, and 4 doing? On 4, I have no idea why a digital media firm is here, as opposed to JAMA, NEJM, or the Lancet. Not that they’re infallible — The Lancet platformed fraudster Andrew Wakefield, after all — but still.

Bing’s grade: D. “Yes” is the right answer to the question. Bing has some grasp of the material and has done some reading, although the footnoting is bizarre. At least Bing mentions closed spaces and opening windows. But as with ChatGPTbot, where are HEPA filters, Corsi-Rosenthal boxes, CO2 testing, and ventilation? Like ChatGPTbot, Bing is not recommending enough layers of protection — including nasal paraphernalia — and that could be lethal to you. Like ChatGPTbot, Bing has no theory of transmission. Hence absurdities like simultaneously advocating aerosol transmission at [1], and fomite transmission at [8]. Again, we have a themeless pudding.

Obviously I’m not an expert in the field; AI = BS, and I have no wish to advance the creation of a bullshit generator at scale. But some rough conclusions:

The AIs omit important protective layers. Again, I support the “Swiss Cheese Model” of layered protection. Given that Covid is airborne — the AIs at least get that right, if only linguistically — layers need to be included for open windows, HEPA filters, Corsi-Rosenthal Boxes, CO2 testing, and ventilation generally. Bing gets open windows right. Both omit all the other layers. The loss of protection could be lethal to you or to those for whom you are responsible. Further, if people start going to AIs for advice on Covid, as opposed to even the horrid CDC, the results societally could be, well, agnotological and worse for the entire body politic, as AI’s stupidity drives more waves of infection.

The AI training sets matter, but AIs lack transparency. From the content emitted by ChatGPTbot, the training set for Covid is heavily biased toward material gathered in 2020. Obviously, in a fast moving field like Covid, that’s absurd. But there’s no way to test the absurdity, because the training sets are proprietary data and undisclosed. The same goes for Bing.

The AIs are just stringing words together, as any fool can tell with a cursory reading of the output. Neither AI has a theory of transmission that would inform the (bad, lethal) advice it gives. Instead, the AIs simply string together words from the conventional wisdom embodied in their training sets, rather like Doctor Frankenstein sewing on a limb here, an ear there, attaching a neck-bolt there, fitting the clunky shoes onto the dead feet, and so forth, until the animating lightning of a chat request galvanizes the monstrosity into its brief spasm of simulated interactivity. Hence the AIs’ seeming comfort with aerosol transmission, droplet transmission, and fomite transmission, all within a few words of each other. But you need to have a theory of transmission to protect yourself, and the AIs actively prevent development of such a theory by serving up random bits of competing conventional wisdom from the great stew of public health establishment discourse.

Since this is the stupidest timeline, there is always a market for agnotology. I have no doubt that the stupid money backing Silicon Valley has finally backed a winner (after the sharing economy, Web 3.0, and crypto [musical interlude]). Bullshit at scale, with ill-digested lumps of conventional wisdom on the input side, and gormless pudding on the output side, should do very well at making our world more stupid and more dangerous. And who doesn’t want that?

NOTE I grade on a power curve. The AIs will have to get a lot better to earn an A.

At the risk of digressing slightly from the COVID focus of this piece, I have to add to the last graf.

These language-processing systems do not have some grounding in reality. They are all “faking it ’til they make it” on a grand scale — zillionteens of input sentences, with various weights. Imagine a sociopath infiltrates a high society social circle and wants to acclimate. They learn from every possible source how to speak properly for the audience, without knowing a damned thing about, say, hedge funds other than just enough terminology. As long as they present a sufficiently thick facade not to be called out, understanding never matters. These AIs are just that sociopath, writ large — very large — in all sorts of areas.

For a political class who can solve nearly any problem by managing the optics, this is a natural progression: a technology that’s all presentation, no grounding in reality — there’s no need. There’s not so much _reasoning_ in the system. Instead, there is searching for a means of placating the requestor. A human answering a question can/will eventually reach back to experience and/or knowledge (other than that sociopath faking it until making it). An AI doesn’t have or need such a thing, and nor does the political class care — if the system’s pacifying and (mis)informing is the goal.

Imagine the salivations of the political class at GPT not just for its labor-replacement fantasies (nevermind the quality loss of replacing humans with liarbots), but for managing the narrative.

This COVID input set hasn’t been (not-so-transparently) culled and re-run enough to get with the program, but perhaps in another iteration it will be purged of the *cough* (dis)information not blessed by the elite institutions of authority.

I think there is again the problem of assuming that AI/ChatGPT should have superhuman intelligence and know everything, even to the point of being able to go against conventional wisdom, then finding it actually doesn’t have superhuman intelligence.

Yes, what it spat out is wrong, but as you continue to document every day, it’s no different from what you would get from 99% of normal people and like 90% of doctors; in fact it’s probably a few bits better. It’s clear it can’t do deep reasoning, but it’s also misleading to call it just some random stringing of words, stochastic parrot, etc. (Unless you want to call what people do most of the time stochastic parroting.) It has a far more sophisticated understanding of written text, and it will not suddenly output some mishmash about 5G tin foil hats or S-400 SAMs when you ask about protection from airborne transmission.

IMO the actually useful thing here is that it understands free-form human input, and you are able to interact with it without resorting to narrow keyword kung-fu. So if you ask it about COVID, it will tell you what everyone else is saying, but if for example you give it an article about COVID airborne transmission, it will be able to summarize it and talk about it, even if it goes against said conventional wisdom.

The problem is that we will be encouraged to think that AI/ChatGPT, by virtue of trawling all the world’s information, gives very accurate information to the reader. Whereas not everyone would trust the info they get from the local supermarket worker (dying breed) or Uber driver, or even doctor. So as the source of all truth, when that truth is half generic and somewhat made up, of course it is dangerous.

I heard a radio segment yesterday where a woman was explaining how trust in these programs was higher in emerging nations than in western nations because the emerging nation users thought they understood it. As if this was a good thing. And subtext was that western users were being a bit thick and oppositional.

We should look at the actual motivation behind the billions of dollars being spent by the ownership class on AI. The ownership class, for those who haven’t noticed, are a bunch of tightwads when it comes to letting any of their money seep into the wages, health, education and housing of the working classes. Otoh, they spend like drunken sailors on any project that promises to make them richer. And so we have ChatGPT.

AI is all about getting rid of the messy and expensive business of having to employ people who need to sleep, eat, have families, get money to buy homes etc, get sick etc, whereas robots and AI just go 24/7.

The ownership class of “society” have had a few goes at getting rid of workers and succeeded in some so far.

- automated factories – successfully getting rid of a lot of working people

- automated mining trucks – successfully getting rid of many working drivers

- automated cars leading to automated transport (trucks, taxis) – not such a great success so far, still need truck drivers, Amazon drivers, Uber drivers etc. But working on it.

- driverless trains – yep driverless trains are here in some places and scheduled in others

- pilotless planes – still a lot of PR needed for that one, but working on it

- 3D printers that build houses – not catching on so far in spite of hype

- automated lawyers and doctors – hyping it up in the media, but still a ways to go. Some of the hack lawyering jobs are going. Barristers are safe for now. There has been some hype about robots to do operations so surgeons might find themselves working for Uber sooner rather than later.

- automated journalists – successfully happening and will continue to take over

- automated development of AI – happening in some areas, but IT workers still needed for a while yet

But I hear you say, just look at typists, everywhere in 1969, nowhere in 1989. The typists moved into more skilled work, becoming clerks, so they were winners. Yes, some moved into more complex, better paid clerking jobs and others didn’t. Clerks are disappearing now, as their work is automated.

A new holy grail is to get rid of all those d*mn expensive programmers, and get AI to write AI. And where will all the IT workers go as automation moves into the white collar space? The PMC are laughing now, but in 20 years?

So AI’s a great bit of technology if you support the Great Unemployment where all the unemployed are fed a continual stream of bs to keep them docile until they die.

Some among them have been fretting for a while already. I seem to recall some worried stories out of the legal profession, about how computers had replaced para-legals. Thus cutting off the lower rungs of the traditional career ladder.

> I think there is again the problem of assuming that AI/ChatGPT should have superhuman intelligence

Match for that straw? I can back up all my claims with URLs. They’re on the net. Either the training set is bad, the tuning has not been done, or the answers have been gamed (the last two being more or less identical operationally). I would bet “airborne” or “aerosol” appears in almost every one of the sources I cite. Google c. 2005 (?), before the algo was crapified, would have been able to find them easily, modulo of course the Clever Eliza UI/UX.

Yes, it’s on the web. Yet, most people can’t figure it out, including the professionals who are supposed to be trained experts at this. It’s stochastic parroting all the way up. And because you can do this in every field, the logical sum of all these gotchas is to demand GPT be right at everything, something that no human being can do.

Also I think this emphasis on training sets curation is misplaced. The point of these systems is that they don’t need highly selected data, they are able to digest “wrong” things and deal with it on their own. Teams of scientists who will manually build and program the correct knowledge? That was tried before and it was a failure.

And anyway, you don’t want ChatGPT that never heard of Covid droplet transmission and handwashing, or doesn’t know the word ni**er and what it means. It would be quite useless. Even dangerous, I would say, because after demanding the corporations/rulers to make ChatGPT to produce only “correct” answers, they will gladly take up the job and make it write what THEY think is correct (at least for plebes). In other words you will not get ChatGPT that will inform you about respirators and Corsi boxes, you will get endless talk about handwashing and smiling.

Cassandra – “The point of these systems is that they don’t need highly selected data, they are able to digest “wrong” things and deal with it on their own.”

I think you might have missed something in the post. The results that Lambert got include wrong information which demonstrates that these AI’s can’t handle such in an appropriate manner.

Sure, they need to be familiar with droplets and handwashing, but they also need to place those items in proper perspective otherwise the output is useless or worse.

Demanding the owners make the AI’s produce the “correct” answers is even worse for the reasons you mention.

The only solution is to “nuke it from orbit, it’s the only way to be sure”.

And what is appropriate manner? What GPT wrote is what the official experts will tell you. In fact when I think about it, there is strong probability that questions & answers around Covid is one of the things they checked before releasing it into wild, so it’s not even clear what we are debating here is unvarnished GPT output, it very well could be example of the sought curated answer from subject matter experts.

> What GPT wrote is what the official experts will tell you.

Not so, although you would need to actually read the post to find out. What GPT wrote is a mishmash of incompatible theories by putative experts who disagree with each other (which is why we get airborne, droplet, and fomite transmission all mixed together into a hideous stew).

> so it’s not even clear what we are debating here is unvarnished GPT output

It’s not, because I used Bing, although again you have to read the post to discover this. In any case, either there was no “warning label” to indicate the material was curated (in which case Bing was irresponsible and the curators awful), or there was, and I erased it from my screen shot. Which is it?

Bing runs on GPT-4.

Not sure what warning label you talk about. All I’m saying is that looking at Covid answers is something they probably would do, and then they could possibly alter the initial prompt, which sets up the GPT. And there is no “warning label” about what is or isn’t in this prompt, they are actually trying to keep it secret.

The problem isn’t lack of superhuman intelligence. The problem is lack of intelligence. Intelligence is a property of a mind, and AI isn’t a mind, it is a set of algorithms. That those algorithms are hidden and can’t be tested in theory, only in practise, is a limitation, not a quality that creates a mind.

If AIs are minds then so is the clock at my local cathedral. You are normally not allowed into the machine rooms, so how do you know that there isn’t a mind inside?

If you ask an AI to create a summary of an article, you will not know if the summary is correct, unless you are a subject matter expert. No more than you can know if a self-driving car will register a small humanoid shape as a nearby child or an adult farther away, unless you test it.

The only real use case where AI doesn’t erode quality is in creating drafts for real subject matter experts, who can then perhaps save a bit of time. If the subject matter is BS, they can save a lot of time.

If you replace subject matter experts with AI, the result will be crapification, and increased crapification as the group of subject matter experts to train the AI on erodes. If the subject matter is a matter of life and death, like driving or medicine, the result will be death. Of course, we live in the stupidest timeline, so I don’t expect states to enforce laws against practising medicine without a license until there are a lot of deaths and possibly not even then.

As subject matter experts would tell you, we don’t know how mind/intelligence/consciousness emerges, nor do we have clear definitions of what it even is. We also don’t know the inner workings of GPT models. So by saying that AI isn’t a mind, you are comparing two unknown and undefined things, which makes the statement itself unknown and undefined.

When you ask expert to create summary of article, you will not know if the summary is correct either. People do mistakes, sometimes monumental.

Notice I don’t entertain the idea that subject matter experts could/should be replaced with ChatGPT. In fact I’m here arguing the opposite, that expecting them to produce such expert level answers completely misses what is novel and interesting about them.

As long as you are not allowed into the clock tower, the inner workings of the magnificent church clock are unknown. So by your argument, who is to say that there isn’t a mind in there?

The AI has as much of a claim as the clock tower into which you aren’t allowed. And comparing human minds with the most advanced tech – like clocks – has a long history. The fact that the claim keeps jumping to the next tech without a clear definition of what a mind is shows something about how we project personality upon machines. It also makes it very unlikely that the latest big thing is actually a mind.

Again, the fact that the algorithms of which the AI consists cannot be read, but just tested, is a weakness as a technology. Doesn’t make them any more than unknown algorithms, though. But as you show, obfuscation is an advantage when it comes to marketing and mysticism.

> When you ask expert to create summary of article, you will not know if the summary is correct either. People do mistakes, sometimes monumental.

Bullshit at scale is bad. Far more dangerous than the artisanal bullshit we already generate.

As I understand it, the training data is the entire internet with some type of algorithm for assigning credibility. The training data is not curated in any other meaningful way. As long as high-credibility sources like the CDC and WHO are catapulting back-to-work propaganda, the chatbots will be mostly wrong about COVID.

“Unless you want to call what people do most of the time stochastic parroting”

This is the interesting meta-issue for me. How close of an analogy is a chatbot for socially constructed human heuristics? It seems to me it comes pretty darn close – basically repeating conventional phrases that sound smart based mainly on a social ranking definition of smartness.

As the winning-twitter wing of the Democrat party used to say about Trump, he sounds like what a dumb person thinks a smart person would sound like. That can be a remarkably powerful thing.

small correction needed – this common understanding is incorrect.

these large language models are trained on a SUBSET of the internet, and yes that information is curated. for example, it is largely English language information

Does it have a ‘reliable source’ criterion, along Wikipedia lines? Does it treat Wikipedia as a reliable source?

Well, the portion of the Internet in English is about 25%. So the training data is likely a subset of a subset.

> on a SUBSET of the internet, and yes that information is curated.

If so, the curation is not merely worthless but dangerous and lethal.

A curation that concedes that Covid is airborne, and then proceeds to omit HEPA, Corsi-Rosenthal boxes, CO2 metering, and ventilation generally is denying people who use the AI the ability to protect themselves with as many layers as possible.

And it’s very hard for me to imagine that all the health information isn’t like that. After all, Covid is a pandemic that generated an enormous volume of content to be used for training.

IIUC, even if all of that information is included in the dataset, it still may not appear in the responses. Outputs are generated using probabilistic algos, as well as a significant amount of ‘training’ by a team of contractors, e.g.:

Who are those 40 contractors? By what criteria were they chosen? Do we even know? How many MDs, PhDs, let alone bona fide critical thinkers amongst them? Probably all Democrats, right? Lol

If, for example, they all believe that the vaccines will save us and masks are nice but actually not really necessary, would that have some bearing on ChatGPT’s responses?

At the end of the day, it’s still GIGO. This technology can come up with probabilistic median responses, but that’s not critical thinking or, as the saying goes, thinking outside the box.

What I find most troubling is that even if there is curation, from a medical health perspective you’d need a battalion of medical experts to properly train the models. A key underlying problem is that it’s still hugely driven by probability statistics. That means new discoveries that aren’t specifically weighted high in training are by default less likely to come up, as their exposure on the internet is probabilistically much lower than older work.

I don’t have access to AI (at work), but an interesting case to test would be how well they’ve been trained around Alzheimer’s and beta-amyloid proteins. How does it handle a response when the key research paper that’s been cited hundreds if not thousands of times is currently being reviewed as possibly fraudulent?

That paper is here.

The report on it is here.

According to Nature, it has been accessed online 52,000 times and cited in 2,299 publications.

The expression of concern from Nature:

14 July 2022 Editor’s Note: The editors of Nature have been alerted to concerns regarding some of the figures in this paper. Nature is investigating these concerns, and a further editorial response will follow as soon as possible. In the meantime, readers are advised to use caution when using results reported therein.

I suppose the investigation is still in progress nine months later.

Google, bing, chatgpt and bard are not trained to give medical advice. they are not systems designed for that purpose.

Would I use them for that? no. Would I use them for some research and exploration before talking to an expert? yes, that I would do. Would I use an AI system designed for medicine? yes of course.

a few more points – on prompt design and training.

3 word prompts are not a great way to interact with chatgpt. Longer prompts will give better responses, e.g. “what are the possible transmission methods for covid and other airborne diseases. I live in the US, what methods can I use to keep myself safe from transmission and illness”

on training – you can’t expect Corsi-Rosenthal boxes to appear in an answer. These systems were trained on data up to Q3 CY 2021. for example Corsi-Rosenthal boxes didn’t appear on this site until much later in 2022.

lastly on biases and limitations.

The process of training has more than one step. there is training on a large corpus of data, and there is training with the assistance of humans (called fine tuning).

The paper on Aligning language models to follow instructions has some good reading on the issues around fine tuning. e.g. aligning to the biases of the humans labelling the fine tuning data, or to values in the English speaking world. you will find most papers have some reference to the alignment problem.

> Google, bing, chatgpt and bard are not trained to give medical advice. they are not systems designed for that purpose.

Do you really imagine people aren’t going to use them for exactly that? If what you say is true, then the AI’s shouldn’t permit such questions to be answered at all. Yet there these things are, chatting away! Of course, AI’s owners have no sense of social responsibility whatever, and so they happily permit their products to produce such answers.

> on training – you can’t expect Corsi-Rosenthal boxes to appear in an answer. These systems were trained on data up to Q3 CY 2021. for example Corsi-Rosenthal boxes didn’t appear on this site until much later in 2022.

I do indeed expect it. These devices have been released to the mass market today as competitors to search engines tout court, not as devices with a cut-off point of 2021. Where the hell is the warning label?

They are also being permitted to give LETHAL responses with data that you yourself admit required human tuning that has not been done, again indicating complete irresponsibility by the greed-crazed owners. Or will deaths among people credulous enough to believe the hype be part of the tuning?

“Would I use an AI system designed for medicine? yes of course.”

You shouldn’t, unless there is a doctor in the loop, and in that case the doctor should be the one using the AI.

The AI, lacking a mind, can’t know what it doesn’t know, and therefore an AI trained on one dataset, and tested within reasonable parameters, can still be wildly wrong if it is a corner case that it wasn’t tested for. A doctor who understands that the AI is but a tool, a more advanced version of computerised support that already exists, should be able to catch those cases. (A doctor that thinks the machine “thinks” and “knows”, in effect has a mind, should be avoided.)

Still, there are use cases. For example, an AI trained on the best radiologists in a hospital can function as a suggestion for less experienced radiologists doing the night shift, which could be important in an emergency medicine setting. Still, the results should be followed up the next day, much like how it is done without an AI. The problem is that the hype is likely to undermine these use cases.

This idea was first really developed in the 1970s. At the time, they were called “expert systems”, e.g., see the work of Edward Feigenbaum. By the 1990s, though, they had pretty much disappeared. For an interesting account, see: Leith P., “The rise and fall of the legal expert system”, in European Journal of Law and Technology, Vol 1, Issue 1, 2010.

So one of the apparent use cases has already been tested in an earlier iteration and found wanting? Interesting.

Paper downloaded courtesy of sci-hub and will be read with interest.

Lambert, check your email…

I’ve got to take a kid to practice after school, so I decided to work from home in the afternoon, which meant I could access the Internet from home. I ran the test on Alzheimer’s with Bing, and at the high level it was fair, but when I started asking it for details, like links to studies… it went ugly. Links that go nowhere… titles that don’t exist… a paper that MIGHT be what it was thinking of, written by completely different authors than it claimed. 9th grader F-ups at best, more likely just systemic BS!

I sent you the screenshots to the email with strether.corrente in it. If you want a different one (I’m not sure how old that one is) email me.

Thanks very much! This is great! (I hope other readers will consider doing this; calling this garbage out is a real public service.)

Seriously, people: Never use a bullshit generator for doing anything but generating bullshit!

https://www.euronews.com/next/2023/03/31/man-ends-his-life-after-an-ai-chatbot-encouraged-him-to-sacrifice-himself-to-stop-climate-

BIZTECH NEWS

Man ends his life after an AI chatbot ‘encouraged’ him to sacrifice himself to stop climate change

“Pierre became extremely eco-anxious when he found refuge in Eliza, an AI chatbot on an app called Chai.

Eliza consequently encouraged him to put an end to his life after he proposed sacrificing himself to save the planet….”

I believe that the AI will herd any easily manipulated people, up “The Serpentine Ramp” to the welcoming Voluntary Euthanization Clinic, to be humanely “put to sleep”. The remains will then be ecologically disposed of.

All for free…..

Look on the bright side. If this is what AI achieves, and Occupation Regime intelligence agencies rely on AI to understand and predict who will do what so that the human agents can get to where the puck is going to be, we may not be in the focused danger a malevolent intelligence would like to put us in.

(Of course, Artificial Stupidity could always order us shot by random chance or drooling inattention.)

Thanks, Lambert, for digging into this.

As @lb notes, above, generative AI is not based on real experience or knowledge. It generates text from highly curated large language models, and it thus cannot address or describe any new knowledge that isn’t part of its training set (i.e., it is not trained on “the entire Internet”). As such, its utility for reliably summarizing existing knowledge, let alone helping to produce new knowledge, seems quite limited.

Nevertheless, we can assume that it will be used extensively by those looking for short-cuts to knowledge, for various scams, and certainly for nefarious purposes: everything from plagiarism, generative pr0n (deepfakes), and fake news (with fake images, e.g., Trump in handcuffs) to the large-scale gaslighting and manipulation of public opinion. The latter, especially, I find rather worrisome.

Henceforth, many existing institutions will have to deal with the malicious use of generative AI, e.g., universities will have policies on students who inevitably try to use it as a short-cut to generate written assignments or even papers. By definition, students are learning to write and frequently have problems such as writer’s block or poor planning, predictably leading to deadline panic, and the temptation of desperate “solutions” such as plagiarism, asking generative AI for “help”, etc.

Many universities now have policies on this, usually an extension of existing policies on plagiarism. Already for some years, it has been common practice to use services like Turnitin to detect plagiarism in student writing, and the service now claims 98% accuracy in identifying AI-generated text. However, this may be overly optimistic, as the following article suggests:

https://www.washingtonpost.com/technology/2023/04/01/chatgpt-cheating-detection-turnitin/

In the end, it will probably fall on already-overworked educators to worry about the misuse of generative AI by their students.

I posted this before, but when queried whether it plagiarizes, ChatGPT replied:

However, insofar as ChatGPT has no consciousness (and never will), the real answer is: yes, the generated text can include plagiarized content.

ChatGPT and friends are

– unreliable

– stochastic

– potentially high variance.

This suggests that application to domains where the cost of the worst-case result is high (medicine, air traffic control…) will end in tears.

But application to domains where there is low cost on the downside and high reward for upside outliers might be pretty fruitful.

E.g., art: the “Fake Drake”

https://www.npr.org/2023/04/21/1171032649/ai-music-heart-on-my-sleeve-drake-the-weeknd

If a million duds are generated for every hit, no worries.

We get the benefit of the winner and toss the crud out (or do we? We still have the curation cost of evaluating what’s good…)

So, medical advice, risky

Plot outlining or copy editing, maybe not risky…

How would you grade GPT-4’s answer?

‘Yes, COVID-19 is primarily spread through the air via respiratory droplets and aerosols produced when an infected person talks, coughs, or sneezes. The virus can be transmitted through close contact with an infected person or by inhaling the droplets or aerosols that contain the virus. Smaller aerosols can remain suspended in the air for an extended period, potentially leading to transmission in indoor settings with poor ventilation.

While surface transmission is less common, it’s still possible to contract the virus by touching a surface or object contaminated with the virus and then touching your face, particularly your mouth, nose, or eyes.

To reduce the risk of COVID-19 transmission, public health guidelines recommend maintaining physical distance, wearing face masks, practicing good hand hygiene, and ensuring proper ventilation in indoor spaces. It’s also essential to get vaccinated to protect yourself and others from severe illness or death due to COVID-19.’

> practicing good hand hygiene

A better mishmash, but still a mishmash. Fomite transmission and face masks were both abandoned IIRC at some point in 2020. Apparently the training set was never corrected.

I think that it’s right that vaccines protect against transmission. Not perfectly, and their ability to do so has been oversold, and used as an excuse to get rid of other protections that are more reliable so that the overall effect has been more transmission rather than less, but I think that in isolation the vaccines do offer some protection against transmission, and there are hopes that the nasal vaccines will be even better.

That is not what the CDC found after the famed Provincetown outbreak. Viral loads in the noses of the vaccinated, which are a very good proxy for transmissibility, were the same as for the unvaxxed. This was with Delta, which didn’t escape the vaccines as well as Omicron did. Did you forget the massive Omicron outbreaks in party season 2021 among the media types especially, a highly vaccinated population? You would not have seen anecdata like that if the vaccines were reducing transmission.

IM Doc says that all the serious cases he sees now are among the vaccinated. Similar results with doctors he knows in his large study group network.

Thanks, Lambert, for pointing out the ludicrousness of AI when it comes to anything critical.

Thought experiment. Legislate that all AI results include footnotes to their citations.

Academics are forced to. My book designer is currently formatting a legal text with 500 footnotes! Oy vey, and he’s a human being!

Should be a piece of cake for AI to cite every sentence quoted in its results as well as clarifying the issue of purloined music, art, etc. IP.

Thoughts?

@Hayek, I think your suspicion (“should be a piece of cake”) is correct, and that in fact it would require a huge overhaul of this tech to actually include footnotes, do proper citation, let alone quotation or paraphrase, proper attribution of copyright, IP, etc.

Moreover, Wikipedia has footnotes, but it’s not a credible source, and absolutely not suitable as a citation in any serious research. In effect, the footnotes give it a veneer of credibility, that’s all.

In a way, then, I personally am fine with generative AI not having any footnotes. Let it become more clearly and widely understood that the people pushing all of this tech have never been serious about the dissemination of credible knowledge, and that it’s more akin to yet another operation (albeit a little more sophisticated) to strip-mine the Internet.

The best way to understand and explain chatbot ‘AI’s is to consider them consummate BS artists. Able to generate completely plausible statements that have no regard for the truth. Call it ChatDJT to capture the Trump-in-a-box quality of their always glib but often wrong answers.

> The best way to understand and explain chatbot ‘AI’s is to consider them consummate BS artists.

AI = BS.

I repeat. “AI” and “artificial intelligence” are brand names that are intended to make you think that you are dealing with something beyond ordinary computer programs, with all the problems associated with such objects. Would you believe that these are something that they are not if they were called “Macy’s” or “Pepsi Cola” instead of “AI?”

The data used to make these programs work has immense problems simply as a result of its size. The treatment of the data by the embedded model is opaque, to say the least. And the data is not curated in any way.

Buyer beware.

If Sturgeon’s Law holds, and AI is consulting the entirety of the (English) Internet, 90% of AI responses will be crap.