Yves here. Normally I do not take the liberty of reproducing posts of other writers in full. However, top AI expert Gary Marcus has issued a call to action which I trust he wants to be circulated as widely as possible. Marcus has been warning that AI companies, which have no hope of ever achieving an adequate return on their massive investments, will soon do the obvious, which is to seek a massive taxpayer rescue based on their presumed-obvious too-big-to-fail status.

Keep in mind that Marcus has been early and accurate in depicting the failure of large language models like OpenAI’s ChatGPT to live up to their promises, and has also debunked the idea that they could ever achieve true artificial general intelligence. More recently, he provided a series of sightings of how uptake and use of generative AI were slackening and even falling. This is consistent with various reports of AI not delivering in corporate settings, where its big applications were set to occur. For instance, an MIT study ascertained that 95% of corporate pilots of generative AI had failed. Similarly, RAND has determined that as many as 80% of AI implementations fail, twice the level of other software projects, and TechRepublic put the kaput rate at 85%.

Not only is the idea of rescuing super wealthy tech bros and their enablers in the venture capital community deeply offensive, it is even more so given the continuing Trump moves to immiserate ordinary citizens as part of Trump’s plan to roll the US back to 1890-level living standards. The many examples range from DOGE rampaging through programs that help ordinary citizens and small businesses, such as weather alerts and extensive USDA programs to assist farmers in improving efficiency, to the damage done by Trump tariffs both to enterprises and to consumers, who are increasingly bearing their cost. The shutdown, by halting current pay of many Federal workers and reducing SNAP benefits, makes the spectacle of largesse to the rich even more insulting.

So please call your Congressional representatives and circulate this post widely and urge others to make calls and send e-mails. The “youthquake” of the Mamdani win in New York and Republican repudiation in other key races shows voters are refusing to eat Trump’s dog food. These results should put fear in the hearts of Republican incumbents and any Trump-appeasing Democrats. That means stern words from voters, warning that backing an AI rescue is a fast path to a political graveyard, have good odds of getting a hearing.

By Gary Marcus, professor emeritus of psychology and neural science at New York University. Originally published at his website.

A few days ago, Sam Altman got seriously pissed off when Brad Gerstner had the temerity to ask how OpenAI was going to pay the $1.4 trillion in obligations he was taking on, given a mere $13 billion in revenue.

In a long but mostly empty answer, Altman pointed to revenue that hasn’t been reported and that maybe doesn’t exist, attacked the questioner, and promised that future revenue would be awesome:

“First of all. We’re doing well more revenue than that. Second of all, Brad, if you want to sell your shares, I’ll find you a buyer. I just, enough. I think there’s a lot of people who would love to buy OpenAI shares. I think people who talk with a lot of breathless concern about our compute stuff or whatever, that would be thrilled to buy shares. So I think we could sell your shares or anybody else’s to some of the people who are making the most noise on Twitter about this very quickly. We do plan for revenue to grow steeply. Revenue is growing steeply. We are taking a forward bet that it’s going to continue to grow and that not only will ChatGPT keep growing, but we will be able to become one of the important AI clouds, that our consumer device business will be a significant and important thing, that AI that can automate science will create huge value… we carefully plan. We understand where the technology, where the capability is going to grow and how the products we can build around that and the revenue we can generate. We might screw it up. This is the bet that we’re making and we’re taking a risk along with that. A certain risk is if we don’t have the compute, we will not be able to generate the revenue or make the models at this kind of scale.”

What Altman couldn’t say then was that he has a plan to reduce the cost of his borrowing … by having the American taxpayer (indirectly) foot the bill.

The cat came out of the bag today, at a Wall Street Journal conference, from the mouth of OpenAI’s CFO, who seemed to be test-piloting the notion:

In justifying what would likely be among the biggest (indirect) government subsidies in history, Friar said, “AI is almost a national strategic asset. We really need to [be] thoughtful when we think about competition with, for example, China.” (Nvidia seems intent on making exactly the same play.[1])

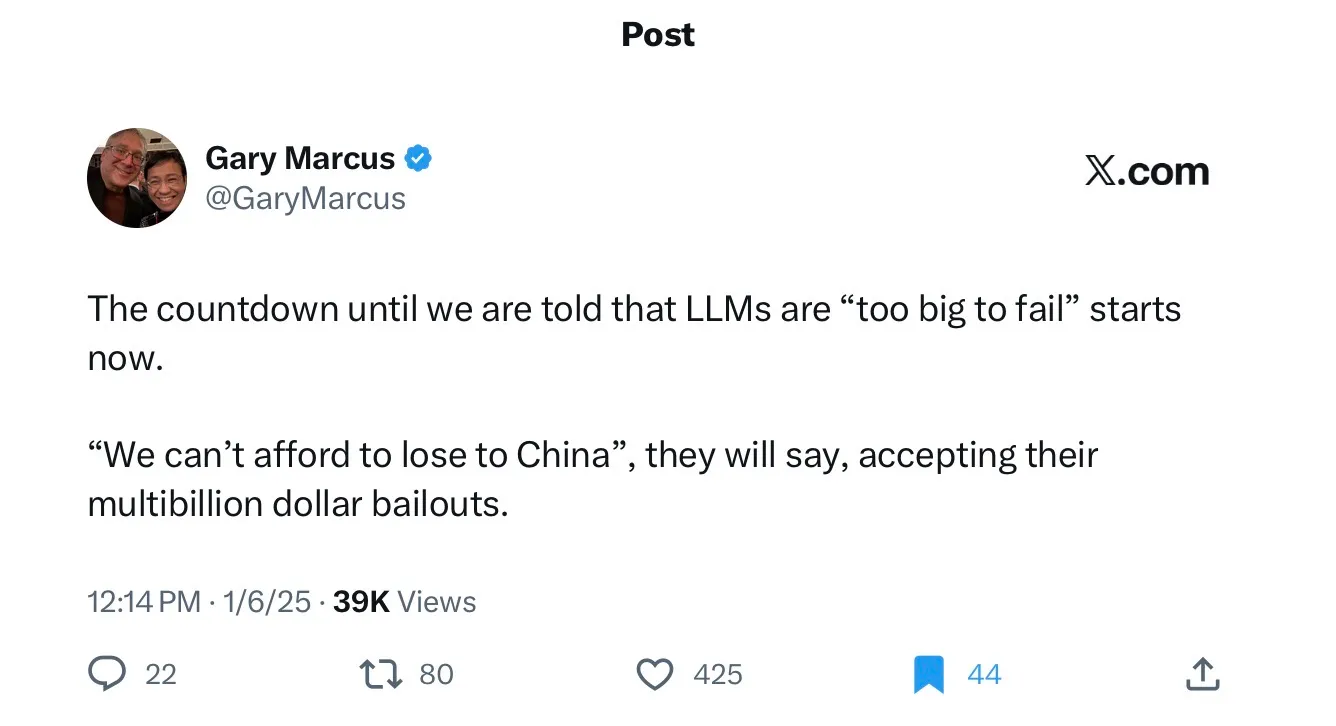

Remember this tweet?

To the letter, almost 10 months to the day, exactly that game is now on. And I already hear rumors that the government is likely to go along.

Which means you, the taxpayer, will be footing the bill.

Disgusting. Tell your congressperson — today — that you don’t want your taxes used to bail out overhyping and economically shaky AI companies that spend far more than they earn. Workers, already feeling the knife from layoffs, should not be footing the bill.

Get ahead of this before the too-big-to-fail bullshit becomes too-late-to-stop.

Methinks Zitron got under some people’s skin. Credit where credit is due, brazenly robbing the taxpayers would solve OpenAI’s cash problems, so in that sense Altman is doing what he must to save his company. The obvious question is, should it be saved? I suppose enough people are riding on the Nvidia free ride in the stock market to be getting a little queasy about this whole bubble talk, and a free lunch guaranteed by the government would quell fears. But this would amount to a massive wealth transference to the richest, and given the shaky state of the US (people literally going to starve without SNAP…) if I were in power I’d be very scared of tempting fate like that. Enough money to Argentina, Israel, Ukraine and Sam Altman and who knows if some cities in the US are going to become famous for defenestrations much like Prague.

Zitron said that he thought that they would not get bailed out.

I do not know if that is still his position.

I will take the other side of the bet, regrettably.

The gangsters and fraudsters are in complete control until proven otherwise.

It will be interesting to see how the conspiratorial right spins this one.

“This was done to f### the Republicans etc”

🤗

Well, if a bailout happens you can go compare which way a congresscritter voted by who they received donations from.

Yes, but “donations” is a NewSpeak euphemism. The traditional English word is “bribes”.

There is now too much dark money in “donations” to track who will be responsible for the ultimate bailouts.

I beg to differ, it’s the usual suspects. Having a top-heavy oligarchy hiding in plain sight makes the institutional corruption rather obvious, but folk want to remain in blissful denial. The US is a democracy with “free and fair elections” and all that…

Even the emperor openly boasts about how he was bribed with $100 million from Miriam.

The healthiest thing that could happen (in the full spectrum longer term) would be to let these “adventurous risk-taking” AI companies collapse, get bought-up, and their 2nd generation versions be run by new boards. That would also be healthiest for the political fortune of Trump and the Republican Party.

Should it be saved? Let’s see, a company that makes a product that there’s no real demand for, a product that doesn’t work well, that allows rampant cheating, that disseminates false information far and wide, that preys on those with psychological problems, whose real purpose is to replace paid labor at some point in the not too distant future, and whose rescue would be nothing but a massive wealth transfer as you noted.

No.

It’s time for the capitalists, both the tech overlords and the would-be squillionaires punting around on Robinhood, to learn the meaning of the phrase caveat emptor, and learn it good and hard. Let it burn, figuratively speaking, and we might have a lot less literal burning going on in the world as the energy guzzling data centers get shut down or are never built.

You forget its enormous ecological footprint. Another point for letting it fail.

OMG! Imagine an AI gap with Putin and Xi! Oh the humanity!

I recall the nuclear missile gap; I was just a kid. The missiles sold to close the gap are still in the silos, 60-odd years later! In 1972 I luckily avoided the “launch capsule, key turning career”!

Imagine the SW engineering machine that delivered F-35 software, closing the “drone swarm AI gap”!

Patriotic, national security extremism for AI is the latest “refuge……”

Yep. Really started worrying about this when they started talking about US AI dominance and other foolishness.

I think it is all too evident that neither Congress nor the Supreme Court report to the Populace. I remember Obama and his handling of the 2008 Financial Crisis, and his handling of Healthcare, and I remember the consequences for him and those who owned him. I may write or call my Congressman … but I doubt it will have much impact.

AI has held a peculiar interest for DoD for many decades. I remember the HPC [High Performance Computing] initiatives of the aughts and the automated Army envisioned by the FCS [Future Combat Systems] program from that same era. I remember when the Army decided to build its combat systems around Microsoft software instead of taking over the Unix-based software that supported Sun Microsystems, or adopting some special build of an Army- or DoD-constructed high-security operating system. I believe DoD has demonstrated a long-term interest in AI and a strange affiliation with the Silicon Valley elites.

I have an uncomfortable feeling the fix is already in. The question I have is what might result from yet another massive wealth transfer to the wealthy? The Populace is growing desperate. Will the resulting madness blow back on the wealthy with their mansions and bunkers, or will the madness further immiserate conditions for the Populace?

I don’t necessarily see “blowback” and “further immiseration” as an either/or proposition…

I read at Zero Hedge this AM that NVDA’s Huang says China is only seconds behind the US in AI.

More likely China is innovating both SW and HW to be more efficient and better performing.

There remains a use for OpenAI’s profit base: data centers for the mass surveillance state that will keep the US “safe” for the pillagers.

Taxpayers funding their own prisons

For the sake of national security we will bankrupt ourselves. I am reminded of John le Carré’s metaphor for the Soviet Union’s demise as the Russian knight gradually wasting away and dying inside his armor. The U.S. seems well down the road in that direction.

But AI is not generating security. It’s a worse-than-F-35 non-performing boondoggle.

Hedgie (@HedgieMarkets):

🦔Meta is hiding $30 billion in AI infrastructure debt off its balance sheet using special purpose vehicles, echoing the financial engineering that triggered Enron’s collapse and the 2008 mortgage crisis. Morgan Stanley estimates tech firms will need $800 billion from private credit in off-balance-sheet deals by 2028. UBS notes AI debt building at $100 billion per quarter “raises eyebrows for anyone that has seen credit cycles.”

The Structure

Off-balance-sheet debt through SPVs or joint ventures is becoming the standard for AI data center deals. Morgan Stanley structured Meta’s $30 billion in an SPV tied to Blue Owl Capital, making it easier to raise another $30 billion in corporate bonds. Musk’s xAI is pursuing a $20 billion SPV deal where its only exposure is paying rent on Nvidia chips via a 5-year lease. Google backstops crypto miners’ data center debt, recording them as credit derivatives.

My Take

This is 2008-style financial engineering repackaged for AI. The key difference from the dot-com era is growth was financed with equity then. Now there’s rapid capex growth driven by debt kept off balance sheet. When chips estimated to last five to six years may be obsolete in three, and companies structure deals where their only exposure is short-term leases, that’s hidden leverage creating the opacity that preceded past crises. Meta keeping $30 billion off its balance sheet while UBS warns about $100 billion quarterly AI debt buildup shows the pattern I’ve been documenting where leverage accumulates outside traditional visibility.

Hedgie🤗

I’m confused by the claim that AI won’t be adopted widely if it doesn’t meet some efficacy standard. Shitty AI will replace labor because it continues the trend of shitification and the process of shifting adjustment costs to the customer. There will be rationalizations that this process is necessary to “train” AI and that customers need to go along. Profit will be higher after the adoption of sub-standard AI that replaces human labor, even after taking into account the resulting customer attrition. This trend is well established in shitification. Thus companies are adopting, and will adopt, AI at the level of their lowest-skilled manpower, and will keep increasing it wherever they can.

I suggest you read the post with more care. Companies are finding that these AI projects are failing, that they are not in fact resulting in labor cost reductions.

To add to this point, though engineers themselves seem to say that AI makes them more productive, they were in general incapable of properly evaluating the benefits from AI, which were in fact nonexistent: there were losses in productivity (tasks took 19% longer to complete, according to this study). A piece by Harvard Business Review gives a good explanation as to why:

So AI essentially not only does not do the tasks it’s supposed to do, it also creates further problems downstream in the production line. And given that AI encourages people to not think about what they’re doing, it furthermore means that debugging and fixing the broken code parroted by the LLM is possibly more time-consuming than actually writing decent code.

Yes, I neglected to include this essential point. Thank you for not only mentioning it but also providing backup.

Back in my days as an engineer, we called this “negative work”: work performed by an incompetent person that resulted in more work to fix than the time spent initially “completing” the task. Alternate definition: the work performed while inebriated, say after a ship party lunch. The 3 hours you spent “working” after the party turned into 4-6 hours of why did I do that, and now I have to fix it the next day. At some point we instituted a rule that no “real work” is allowed after drinking.

At least with an incompetent person there’s hope that education and mentorship can fix them. AI, not so much… at least not until the next release.

Execs at these orgs reporting failure also point to charts that show AI reliability increasing over time; the projection is that AI will reach a point where it is reliable.

At my org the execs have always said, and continue to say, that the failure rate is still unacceptably high for AI to be currently used for anything critical, even while continuing to invest in AI projects – the expectation is this will change.

Fintechs must hedge by investing; they must have projects at least well underway just in case AI does reach a point of reliability. And it seems to me only large-scale, widespread actual failures of AI-powered critical systems will turn that around.

Banks especially are just big number-crunching automatons, people-powered calculators, so they make a natural target to be replaced by algorithms regardless of whether AI exists or not. I suspect the same is true for most large corporations in existence. So perhaps that will be the large-scale, widespread failure point?

Is AI the failure-in-progress of capitalism?

Um, no. In banking, for decades, over 80% of large IT projects (>$500 million) have failed. They are given quiet burials. So there is plenty of precedent for shooting them in the head rather than carrying on past the point of undeniable failure. And here we are also talking about end users that have bottom lines to worry about and cannot keep pouring money into burn pits.

I’ve seen lots of references to the majority of IT projects failing, but do you know what exactly constitutes a “failure”? Is it having an inchoate idea that never gets implemented at all? Or does trying to ram a square peg of a software program into the round hole of a business and then being stuck with the crappy results count as a “failure” too? In recent years I’ve seen lots of software implemented that doesn’t work well at all and has increased labor costs, but we’re still paying Silicon Valley for the privilege of using these crapified versions. I’d count that as a failure, but Larry Ellison probably wouldn’t.

Can NVDA and OpenAI be like Lockheed?

Military SW projects never fail. They do not deliver full functionality, they break too frequently, and many breaks require re-engineering and retest to “fix”. They always bust the schedule.

“Fail” often implies compromised cost and performance, sometimes a full scrap.

Recent example: F-35, block after block of SW releases, always late, with tests too costly, demanding more development of new blocks.

It was so bad that the SW blocks slowed new HW fixes meant to try to deliver functionality.

They can be if they are developing AI for the military. If they are developing AI for a corporation expecting increased profits, maybe not so much.

Also, given David Graeber’s assertion that 20% of jobs are “bullshit jobs”, “AI took my job” may simply be convenient cover for businesses to downsize.

Much of business seems to be guided by herd behavior, as we have seen in subprime meltdown, internet bubble, and the recent content streaming wars.

Some businesses counter the trend, for example Sony didn’t get into streaming, instead licensing content to the streaming companies.

Anyway, why can’t AI use AI to determine its path to success?

See above. In banking/large financial services companies, a particular initiative is announced, contractors get hired, and internal types are tasked to the project. 18 to 36 months later, it has not completed most (all?) of its major deliverables. It would take either a lot more expenditure, with no more certainty of getting there, or a complete reboot, since the deliverables are now stale and should be updated. So the entire project is killed.

It’s being reported that Amazon is already backfilling its recent “AI driven” layoffs with cheap H-1B migrant workers.

If you hate employing Americans, doesn’t that mean that it’s you who hates America, Mr. Bezos?

Absent massive monopolization of every single industry, there are practical limits to enshittification.

Take the airlines – they’re effectively a cartel, not a monopoly of course, but in practical terms you have tiers:

United, Delta, Alaska Airlines: still offer somewhat decent service, less enshittification, higher fares

Southworst, American, JetBlue: more enshittification, somewhat lower fares.

Spirit, Frontier: Supposedly lower cost, but the entire experience is crap.

The lower tiers keep the higher tiers somewhat “honest” insofar as they offer competition.

As long as there is at least some competition within an industry, if a given company destroys the customer experience with AI, it risks losing market share.

Of course what they are really counting on and asking for is a bailout of the stock market, which was also true during the last big bailout. The wealthy see themselves as too big to fail, in contrast to the “little people.”

This desire to cement an oligarchy in place is feudal indeed, and they want to do so without even offering the “noblesse oblige” under which lords and ladies had some obligation to the poor who supported them, or to fight in the wars oligarchs are so fond of fighting. We are even dealing with oligarchs whose idea of culture is less beautiful cathedrals, Michelangelo, and Beethoven, and more UFC and gold toilets.

We need to expel these useless eaters before they allow nature to do it to all of us.

Please stop Making Shit Up. You do this all the time and I am losing patience. Please limit your remarks to where you know what you are talking about.

The GFC rescues were, no way, no how, about the stock market. I cannot believe you wrote such uninformed tripe.

And you will get even rougher handling from me if you persist in not shaping up.

Sorry for not labeling my piece of rhetoric as speculation. There are some subjects, though, on which I know quite a lot. I’ll stick to those if allowed.

Whatever Sam Altman expects he’ll need, it won’t be enough. States are building out data centers in tech parks with nuclear energy as the centerpiece. The American people already own part of the nukes, since Trump gave Westinghouse the money to expand nuclear energy across the country. What’s interesting is that it was structured like a socialist project, with the federal government taking an ownership interest. #communism LOL

What will happen if the AI Bubble bursts? What will states do with these massive nuclear-centered tech parks?

As I said about the Internet, and noted about AI, it should have been a government project owned and controlled by the people. You cannot compete with China on these ventures, as the government owns a significant part of the projects. The oligarchs complain about “China’s government subsidies.” They aren’t subsidies! They are investments on behalf of the Chinese people.

> What will happen if the AI Bubble bursts? What will states do with these massive nuclear-centered tech parks?

Perhaps the GPUs could be repurposed to do synthetic imagery for streamed virtual reality experiences.

Just trying to imagine what all this hardware could be used for if the intended use case doesn’t work out.

They will expand the monitor/track/kill systems perfected by the zionazi regime to worldwide implementation, starting in Amerikkka.

That is the ‘planned from the beginning’ end-use case.

“Compete with China”

Why is this always the line that mainly comes up when somebody in the USA is trying to do something anti-labor?

Let them eat cake, or day-old crusts for that matter. This had bubble written all over it from the start. John Scalzi “hand-waved” an intelligent agent into existence, Isaac Asimov the positronic brain, Robert Heinlein an aware supercomputer to mastermind a revolution, and on. Sam Altman and Jensen Huang surely can do likewise. If not, one might think them and their ilk snake oil salesmen, carnival barkers. From the little I know, it appears that China has taken a more measured approach to LLMs and robotics. The “AI” of the present has its uses, limited uses. One of those uses is not to endow promoters with an indulgent sugar daddy to pick up the pieces if they fall flat on their faces.

Nah. Let them eat Soma.

It’s a Brave New World.

Maybe confinement loaf?

I’m investing in Soylent Teal

It’s a much more attractive product than its predecessor

Catherine Bracy was interviewed yesterday by Gil Duran of The Nerd Reich about her new book, World Eaters: How Venture Capital Is Cannibalizing the Economy. She describes how some non-economic factors are pushing investment in AI regardless of business sense.

Related: a short Business Insider documentary about how data centers are devouring the countryside and destroying the peace and quiet of even affluent suburban developments, especially in northern Virginia and in Arizona.

I have long held the paranoid belief that AI isn’t going away because of the Total Information Awareness Project, now known as Palantir. It seems like that’s the real reason for all these data centers. It’s not for the average consumer or even for companies to cut labor (although that’s the excuse for excessive layoffs). It’s so they can know everything about everyone all of the time. They need AI to read all that information and tell them who is a threat before they act. Hence regional data processing centers.

So, they don’t need to make money from the masses because the military/government will pay them.

Systems are constantly “training”, but at the same time are supposed to correctly know everything about everything.

Outside basic surveillance, the main thing they seem to be after is knowing which groups of people can be conned into paying more money for goods and services.

So why don’t you get a Faraday bag and a burner phone or two? Basic measures to create big gaps in tracking are not hard.

I’m more concerned about license plate readers, and facial recognition cameras. They’re everywhere. Even if I leave my phone at home, I’m being tracked everywhere I go. Every street corner, parking lot, and building has cameras. Traffic cameras everywhere.

The Washington DC government is so predatory that they lowered the entire city’s speed limits to 25mph, even on major thoroughfares, and put Lockheed Martin cameras everywhere. The fine for doing 36 in a 25 is $100! Worse yet are the stop sign cameras, where you have to completely stop behind the line for 3 seconds or you get a $150 ticket mailed to you. Also, no turn on red is allowed anywhere in the city. Parking meters are pay by app/credit card only.

I’ve had days where I was fined more than I made at my job that day…and they truncated major roads with bike lanes, making traffic much worse. It’s so frustrating that instead of improving infrastructure shortcomings, it’s intentionally being made worse. Now everywhere I go takes longer than it used to.

As a daily cyclist in DC (~10 miles/day crosstown at least 5 days/week), all of these things you are whining about that at least pay lip service to traffic safety (since the actual cops don’t do anything to calm traffic) help reduce the risk of me getting turned into street pizza. I mean, imagine actually having to stop at a stop sign, the horror.

What excuse will the Congress critters use to justify the bailout of the AI titans? I just don’t see how a bailout could be justified.

China blah blah blah China. If we don’t bail out these companies, the Chinese will control the world.

It’s bs, but that’s the line they’ll go with: bailout the techbro Trump buds, or your kids will be eaten by DeepSeek.

On the class warfare aspect this is a good discussion.

https://scheerpost.com/2025/11/06/wealth-taxes-will-barely-slow-inequality-so-why-do-the-super-rich-resist-them/

The blowback against billionaire culture is not just here in the US.

Altman sounds very much like a snake oil salesman. “AI will automate science”.

Selling AI investments as essential for the economy is another indicator of snake oil salespeople over at ChatGPT. Besides this, asking for a federal backstop is a signal. The problem is that with current levels of stupidity in the high spheres, such a mistake cannot be ruled out.

The entire statement struck me as the antithesis of science.

Sure, but he was talking about the money-making kind of science (i.e., big pharma drugs, newfangled polymers, stuff you can turn into patented products to sell…), not the increase-humanity’s-knowledge kind of science.

On our local radio station, KQED, interview with call ins: If We Are in an AI Bubble, What Happens if it Pops? (1 hour audio)

Yves – I know in the past you’ve recommended calling either local office or DC office for more impact. Can you remind us which you recommend? (Sorry for the ‘assignment’, but I’m sure it’s essentially zero real work).

I recommended the local office but some ex staffers maintained it did not matter. I would still call the local office.

This sounds like a job for the “free market” to fix, in conjunction with the “invisible hand”

“AI that can automate science will create huge value.”

“Automate science”…

He constantly makes shit up.

Heh

OpenAI CFO Sarah Friar says company isn’t seeking government backstop, clarifying prior comment (CNBC)

We shall see, no?

I also remembered reading last night about an attempted dial back on her comments.

I found this non-walled article:

https://www.msn.com/en-in/news/world/openai-cfo-receives-flak-for-us-govt-bailout-remark-issues-clarification-amid-ai-funding-plan/ar-AA1PU1U8/

OpenAI CFO Receives Flak For ‘US Govt Bailout’ Remark, Issues Clarification Amid AI Funding Plan

“ChatGPT creator OpenAI had issued a clarification on Thursday that the company is not seeking US government loan guarantees to fund its massive infrastructure build-out.

This follows OpenAI CFO Sarah Friar’s comments at the Wall Street Journal’s business conference, where she said government backing could help attract the huge capital needed for AI computing infrastructure, especially given the uncertainty around how long AI data centers remain viable.

According to Friar, federal loan guarantees would “really drop the cost of the financing,” making it easier for OpenAI and its investors to borrow larger sums at lower interest rates.”

People are supposed to believe that doesn’t mean eventual bail out.

Sarah wants our children’s college funds to finance OpenAI.

Having all of our 401k invested in SP500 is not exuberant enough for her.

I’m currently between travel and quite busy so unfortunately I cannot give this post the commenting time it deserves. I will say that the consensus among connected/informed peons in SV AI work such as myself has been for quite some time that OpenAI would eventually become wholly government-owned or financially bailed out. Expect to see more circular ‘partnerships’ from decaying blue chips with deep pockets + OpenAI as part of the ‘financial innovation’. I also expect eventually to see some type of official government data center partnership with OpenAI for sensitive workloads (right now these are all private with third party certification and capacity around DC is strapped). I would expect this as part of a final/desperate play after all the other private partnerships are not enough.

I have a lot of thoughts on the efficacy and use case portion of this discussion but will have to wait for a future time when I am not so terribly busy, unfortunately.

Doesn’t this look like a standard capitalist industrial revolution? You get a new tech that will in 20 years revolutionize society – trains, PCs, the Internet, … But in the first flush you get over investment, derivative traders making a killing, a collapse of the scam, the industry gets cleaned out and then a more rational development that does change society at a realistic pace.

I expect ultimately that everything will change… just not tomorrow or in 4 years. We’ve been through the scam stage before, and AI ain’t Bitcoin.

Pushing AI and the tech-bro backers off the cliff is a hill that I’m willing to die on, and do so with enthusiasm.

I despise the product and the tech-bros with the heat of a billion suns.