Yves here. This VoxEU article on the systemic risk posed by AI comes as even the popular media is getting worried about precisely this type of exposure. However, a new piece at Time bizarrely depicts the real risk as panic, as opposed to key breakdowns that are not, or potentially even cannot be, readily fixed. Contrast Time’s cheery take with the more comprehensive and as a result more sobering assessment from the VoxEU experts. From Time in The World Is Not Prepared for an AI Emergency:

Picture waking up to find the internet flickering, card payments failing, ambulances heading to the wrong address, and emergency broadcasts you are no longer sure you can trust. Whether caused by a model malfunction, criminal use, or an escalating cyber shock, an AI-driven crisis could move across borders quickly.

In many cases, the first signs of an AI emergency would likely look like a generic outage or security failure. Only later, if at all, would it become clear that AI systems had played a material role.

Some governments and companies have begun to build guardrails to manage the risks of such an emergency. The European Union AI Act, the United States National Institute of Standards and Technology risk framework, the G7 Hiroshima process and international technical standards all aim to prevent harm. Cybersecurity agencies and infrastructure operators also have runbooks for hacking attempts, outages, and routine system failures. What is missing is not the technical playbook for patching servers or restoring networks. It is the plan for preventing social panic and a breakdown in trust, diplomacy, and basic communication if AI sits at the center of a fast-moving crisis.

Preventing an AI emergency is only half the job. The missing half of AI governance is preparedness and response. Who decides that an AI incident has become an international emergency? Who speaks to the public when false messages are flooding their feeds? Who keeps channels open between governments if normal lines are compromised?…

We do not need new, complicated institutions to oversee AI—we simply need governments to plan in advance.

You will see, by contrast, that the VoxEU article sees AI risks as multi-fronted, often by virtue of the potential to amplify existing hazards, and that much needs to be done in the way of prevention.

By Stephen Cecchetti, Rosen Family Chair in International Finance, Brandeis International Business School, Brandeis University, and Vice-Chair, Advisory Scientific Committee, European Systemic Risk Board; Robin Lumsdaine, Crown Prince of Bahrain Professor of International Finance, Kogod School of Business, American University, and Professor of Applied Econometrics, Erasmus School of Economics, Erasmus University Rotterdam; Tuomas Peltonen, Deputy Head of the Secretariat, European Systemic Risk Board; and Antonio Sánchez Serrano, Senior Lead Financial Stability Expert, European Systemic Risk Board. Originally published at VoxEU

While artificial intelligence offers substantial benefits to society, including accelerated scientific progress, improved economic growth, better decision making and risk management and enhanced healthcare, it also generates significant concerns regarding risks to the financial system and society. This column discusses how AI can interact with the main sources of systemic risk. The authors then propose a mix of competition and consumer protection policies, complemented by adjustments to prudential regulation and supervision to address these vulnerabilities.

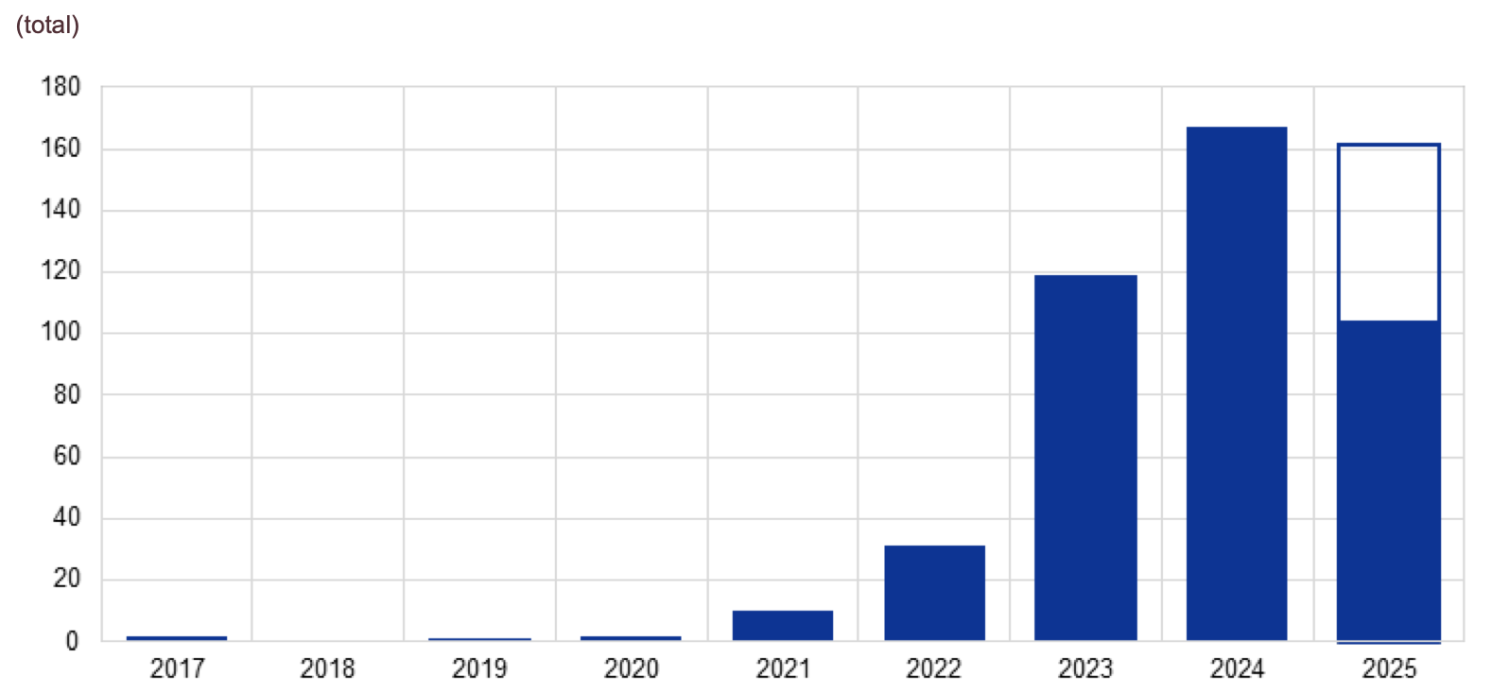

In recent months we have observed sizeable corporate investment in developing large-scale models – those where training requires more than 10²³ floating-point operations – such as OpenAI’s ChatGPT, Anthropic’s Claude, Microsoft’s Copilot and Google’s Gemini. While OpenAI does not publish exact numbers, recent reports suggest ChatGPT has roughly 800 million active weekly users. Figure 1 shows the sharp increase in the release of large-scale AI systems since 2020. The fact that people find these tools intuitive to use is surely one reason for their speedy widespread adoption. In part due to the seamless inclusion of these tools in existing day-to-day platforms, companies are working to integrate AI tools into their processes.

Figure 1 Number of large-scale AI systems released per year

Notes: Data for 2025 up to 24 August. The white box in the 2025 bar is the result of extrapolating the data to that date for the full year.

Source: Our World in Data.

A growing literature examines the implications for financial stability of AI’s rapid development and widespread adoption (see, among others, Financial Stability Board 2024, Aldasoro et al. 2024, Daníelsson and Uthemann 2024, Videgaray et al. 2024, Daníelsson 2025, and Foucault et al. 2025). In a recent report of the Advisory Scientific Committee of the European Systemic Risk Board (Cecchetti et al. 2025), we discuss how the properties of AI can interact with the various sources of systemic risk. Identifying related market failures and externalities, we then consider the implications for financial regulatory policy.

The Development of AI in Our Societies

Artificial intelligence – encompassing both advanced machine-learning models and, more recently, developed large language models – can solve large-scale problems quickly and change how we allocate resources. General uses of AI include knowledge-intensive tasks such as (i) aiding decision making, (ii) simulating large networks, (iii) summarising large bodies of information, (iv) solving complex optimisation problems, and (v) drafting text. There are numerous channels through which AI can create productivity gains, including automation (or deepening existing automation), helping humans complete tasks more quickly and efficiently, and allowing us to complete new tasks (some of which have not yet been imagined). However, current estimates of the overall productivity impact of AI tend to be quite low. In a detailed study of the US economy, Acemoglu (2024) estimates the impact on total factor productivity (TFP) to be in the range of 0.05% to 0.06% per year over the next decade. Since TFP grew on average about 0.9% per year in the US over the past quarter century, this is a very modest improvement.
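To put those figures in proportion, a minimal arithmetic sketch follows. It uses only the numbers quoted above (0.05% to 0.06% per year, a ten-year horizon, and the 0.9% historical average). The variable names and the assumption of simple annual compounding belong to this sketch and are not Acemoglu's method.

```python
# Figures quoted in the text; annual compounding is an assumption of this sketch.
AI_TFP_GAIN_LOW = 0.0005     # 0.05% per year
AI_TFP_GAIN_HIGH = 0.0006    # 0.06% per year
BASELINE_TFP_GROWTH = 0.009  # about 0.9% per year
YEARS = 10


def cumulative_gain(annual_rate: float, years: int) -> float:
    """Cumulative proportional gain from compounding annual_rate for years."""
    return (1 + annual_rate) ** years - 1


low = cumulative_gain(AI_TFP_GAIN_LOW, YEARS)
high = cumulative_gain(AI_TFP_GAIN_HIGH, YEARS)
baseline = cumulative_gain(BASELINE_TFP_GROWTH, YEARS)

print(f"AI contribution over {YEARS} years: {low:.2%} to {high:.2%}")
print(f"Baseline TFP growth over {YEARS} years: {baseline:.2%}")
print(
    "AI gain as a share of baseline annual growth: "
    f"{AI_TFP_GAIN_LOW / BASELINE_TFP_GROWTH:.1%} to "
    f"{AI_TFP_GAIN_HIGH / BASELINE_TFP_GROWTH:.1%}"
)
```

On these numbers the estimated AI contribution compounds to roughly half a percentage point over the decade, a small fraction of what baseline TFP growth alone would deliver.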

Estimates suggest a diverse impact across the labour market. For example, Gmyrek et al. (2023) analyse 436 occupations and identify four groups: those least likely to be impacted by AI (mainly composed of manual and unskilled workers), those where AI will augment and complement tasks (occupations such as photographers, primary school teachers or pharmacists), those where it is difficult to predict (amongst others financial advisors, financial analysts and journalists), and those most likely to be replaced by AI (including accounting clerks, word processing operators and bank tellers). Using detailed data, the authors conclude that 24% of clerical tasks are highly exposed to AI, with an additional 58% having medium exposure. For other occupations, they conclude that roughly one-quarter are medium-exposed.

AI and Sources of Systemic Risk

Our report emphasises that AI’s ability to process immense quantities of unstructured data and interact naturally with users allows it to both complement and substitute for human tasks. However, using these tools comes with risks. These include difficulty in detecting AI errors, decisions based on biased results because of the nature of training data, overreliance resulting from excessive trust, and challenges in overseeing systems that may be difficult to monitor.

As with all uses of technology, the issue is not AI itself, but how both firms and individuals choose to develop and use it. In the financial sector, uses of AI by investors and intermediaries can generate externalities and spillovers.

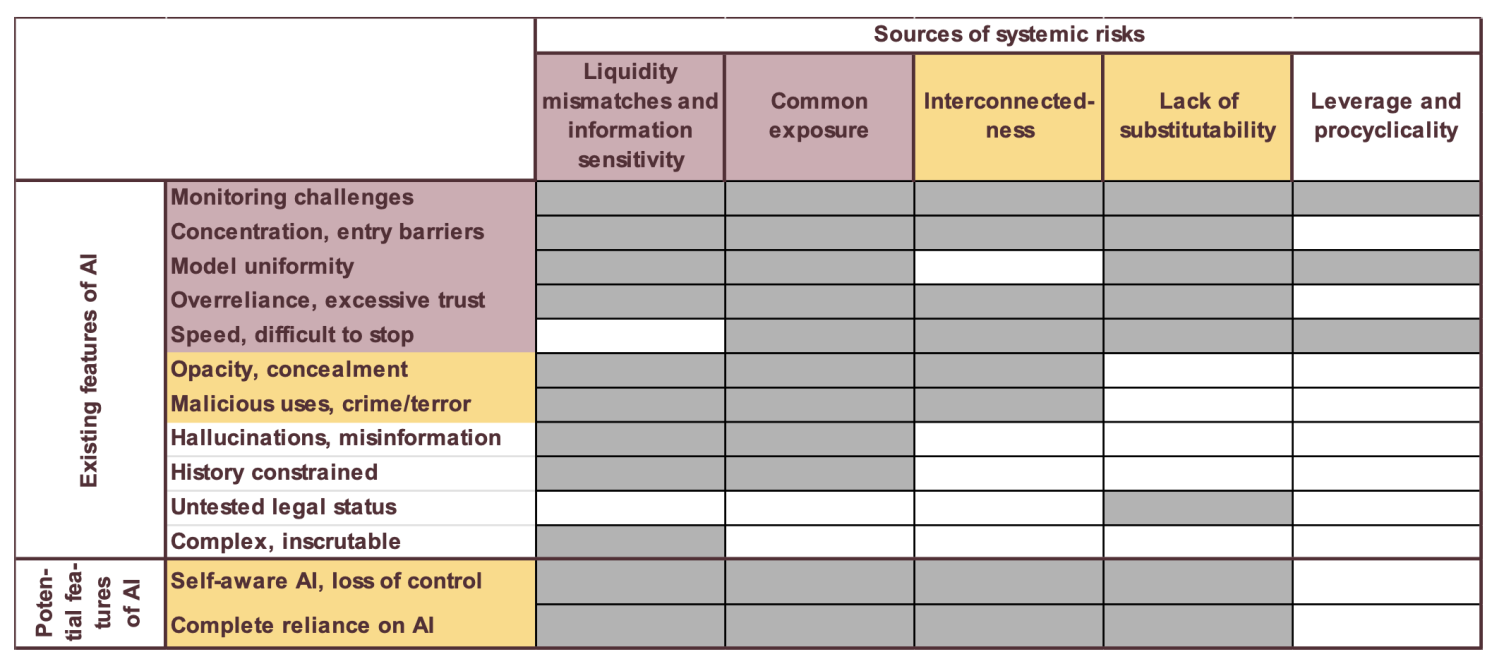

With this in mind, we examine how AI might amplify or alter existing systemic risks in finance, as well as how it might create new ones. We consider five categories of systemic financial risks: liquidity mismatches, common exposures, interconnectedness, lack of substitutability, and leverage. As shown in Table 1, AI’s features that can exacerbate these risks include:

- Monitoring challenges where the complexity of AI systems makes effective oversight difficult for both users and authorities.

- Concentration and entry barriers resulting in a small number of AI providers creating single points of failure and broad interconnectedness.

- Model uniformity in which widespread use of similar AI models can lead to correlated exposures and amplified market reactions.

- Overreliance and excessive trust arising when superior initial performance leads people to place too much trust in AI, increasing risk taking and hindering oversight.

- Speed of transactions, reactions, and enhanced automation that can amplify procyclicality and make it harder to stop self-reinforcing adverse dynamics.

- Opacity and concealment in which AI’s complexity can diminish transparency and facilitate intentional concealment of information.

- Malicious uses where AI can enhance the capacity for fraud, cyber-attacks and market manipulation by malicious actors.

- Hallucinations and misinformation where AI can generate false or misleading information, leading to widespread misinformed decisions and subsequent market instability.

- History constraints where AI’s reliance on past data makes it struggle with unforeseen ‘tail events’, potentially leading to excessive risk taking.

- Untested legal status in which the ambiguity around legal responsibility for AI actions (e.g. the right to use data for training and liability for advice provided) can pose systemic risks if providers or financial institutions face AI-related legal setbacks.

- Complexity makes the system inscrutable so that it is difficult to understand AI’s decision-making processes, which can then trigger runs when users discover flaws or behaviour is unexpected.

Table 1 How current and potential features of AI can amplify or create systemic risk

Notes: Titles of existing features of AI are red if they contribute to four or more sources of systemic risk and orange if they contribute to three. Potential features of AI are coloured orange to show that they are not certain to occur in the future. In the columns, sources of systemic risk are coloured red when they relate to ten or more features of AI and orange if they relate to more than six but fewer than ten features of AI.

Source: Cecchetti et al. (2025).

Capabilities we have not yet seen, such as the creation of a self-aware AI or complete human reliance on AI, could further amplify these risks and create additional challenges arising from a loss of human control and extreme societal dependency. For the time being, these remain hypothetical.

Policy Response

In response to these systemic risks and associated market failures (fixed cost and network effects, information asymmetries, bounded rationality), we believe it is important to engage in a review of competition and consumer protection policies, and macroprudential policies. Regarding the latter, key policy proposals include:

- Regulatory adjustments such as recalibrating capital and liquidity requirements, enhancing circuit breakers, amending regulations addressing insider trading and other types of market abuse, and adjusting central bank liquidity facilities.

- Transparency requirements that include adding labels to financial products to increase transparency about AI use.

- ‘Skin in the game’ and ‘level of sophistication’ requirements so that AI providers and users bear appropriate risk.

- Supervisory enhancements aimed at ensuring adequate IT and staff resources for supervisors, increasing analytical capabilities, strengthening oversight and enforcement and promoting cross-border cooperation.

In every case, it is important that authorities engage in the analysis required to obtain a clearer picture of the impact and channels of influence of AI, as well as the extent of its use in the financial sector.

In the current geopolitical environment, the stakes are particularly high. Should authorities fail to keep up with the use of AI in finance, they would no longer be able to monitor emerging sources of systemic risk. The result will be more frequent bouts of financial stress that require costly public sector intervention. Finally, we should emphasise that the global nature of AI makes it important that governments cooperate in developing international standards to avoid actions in one jurisdiction creating fragilities in others.

See original post for references

“This is the voice of world control. I bring you peace. It may be the peace of plenty and content or the peace of unburied death. The choice is yours: Obey me and live, or disobey and die.”

— Colossus

I took the time to work my way through another very long (probably too long) piece from Ed Zitron yesterday. What struck me was the amount of credit/debt that has been supplied by banks. Credit funds are concerning enough, but banks, really? Combine this with the growth in CDS to protect lenders and we have the makings of a repeat of a credit crisis. Yet, market commentators still say no bubble, all is well. Alas…

So, talk about systematic risk. Fed and regulators where are you?

>So, talk about systematic risk. Fed and regulators where are you?

My guess is they are going to the same cocktail parties and inhabiting the same social circles as the leaders of the Big Banks, PE Funds, VC managers, politicians, you name it. All of them carrying a big tube of chapstick to remedy all the ass kissing going on.

The clear and present danger is to the western financial system, already stressed by war in Donbas.

I have been reading Ed Zitron for a few months, since NC posted one of his long writings.

A lot of capital is going to facilitate/support a lot of AI users, the vast majority of whom are not paying for the services they use, as if it were wiki or Google Maps.

Also, AI hyperscalers/accelerators are soaking up a lot of venture capital. Data centers are being funded on anticipated performance when no one knows the operating costs, much less the revenues needed to pay for upkeep and operations.

Interesting, Zitron notes the scalers are using 5 year depreciation on NVDA (etc) chips when the chips will be obsolete before the massive computer they make up is ever turned on!

All that capital, I wonder what good tech we could be fielding at the cost of whims about a cyborg future?

Powell is in enough trouble with Trump; why would he do what Greenspan failed to do?

EU/US capitalizing war with Russia (for the US it is China) is a drop-in-the-bucket risk.

“Zitron notes the scalers are using 5 year depreciation on NVDA (etc) chips when the chips will be obsolete before the massive computer they make up is ever turned on!”

depreciation exploitation ??

Allows the accountants to lower depreciation expense, making the earnings seem better.
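The arithmetic behind that point can be sketched with straight-line depreciation. The purchase cost, the pre-depreciation earnings, and the three-year alternative life below are hypothetical round numbers chosen for illustration. Only the five-year schedule comes from the comment above.

```python
def straight_line_expense(cost: float, useful_life_years: int) -> float:
    """Annual depreciation expense, straight-line method, zero salvage value."""
    return cost / useful_life_years


# Hypothetical figures, in billions of dollars.
GPU_COST = 30.0
EARNINGS_BEFORE_DEPRECIATION = 12.0

for life in (3, 5):
    expense = straight_line_expense(GPU_COST, life)
    reported = EARNINGS_BEFORE_DEPRECIATION - expense
    print(f"{life}-year life: expense {expense:.1f}, reported earnings {reported:.1f}")
```

Stretching the assumed life from three to five years lowers the annual charge, so reported earnings rise even though the cash already spent on the chips is unchanged.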

Zitron talks about creative accounting in both revenue and costs, relating to some of the actions from the telecom/dotcom bust and bankruptcies.

What will trip this irrational enthusiasm bubble?

I struggled through the Ed Zitron piece yesterday with less than complete comprehension. I did come away with a deeply troubling impression of the scale and, most worrying, the level of chicanery and flawed assumptions underlying the present AI madness. I am very frightened of what this madness could likely accomplish. I cannot comprehend how to rationalize the AI the Magnificent Seven are confabulating … after reading some of the prognostications of the Honest Sorcerer and OilPrice regarding the declining futures of fossil fuels, and after reading of some of the flaws of the U.S. electric grid. Where will the electric power AI needs come from? When I combine that worry with my cursory understanding of Zitron’s discussion of the investments versus revenues for AI, and my vague grasp of the user markets he described … I am mystified how the AI madness could ever pay off as an investment. Needless to say, I am scrambling to figure out how to manage the investments I inherited from my father.

My relatively conservative father believed in investing in a mix of stock market index funds and “low risk” bond funds. He held to that mix through ups and downs and over time did very well. I have been extremely reluctant to change what has worked so well in the past, but this AI madness has me extremely worried. If I understand the weighting used to construct the index funds my father invested in, most/all of those funds are fairly heavily invested in the Magnificent Seven and thereby in AI. The bond funds might be holding an uncomfortable amount of bonds issued to fund AI expansion, and even the large bank where I have moved my retirement cash holdings appears to have exposure to AI bonds.

If AI breaks the Financial Markets at a scale possibly dwarfing the 2008 Crash, will Trump bail out the losers the way Obama did? Predicting what Trump might do is more problematic than predicting next week’s weather. How safe are bank deposits? And gold? I would rather hold something with intrinsic value and some amount of liquidity [ceramic bearings and bicycle chain?] — and I am not keen to stockpile bullets like the currency in many post-apocalyptic imaginings.

No one can predict the future. Ed Zitron’s piece is logically sound (albeit he does come across as a hater stylistically:). This by itself doesn’t mean that the whole thing will collapse tomorrow. It doesn’t mean that things might not materially change next month (remember the kerfuffle with DeepSeek?) Above all, it doesn’t mean that the market will crash imminently. The whole thing is very complex and very dynamic. Above all, much money and human ingenuity is working on it.

Two things – bonds are not necessarily “low risk”. Gold is perhaps a little richly valued at 4500.

The best portfolio is what lets you sleep at night :)

I think the gambit is to create so much systemic risk that open ai et al are folded into the .gov in order to save them and in the process handing the keys to the kingdom to the tech lords similar but not exactly the same as the ACA saving insurance companies and keeping all those premiums and subsidies flooding onto wall st., and using the GFC as an excuse to stuff wall st. and investors with the cash necessary to buy everything and rent it back to the usians who used to own it.

This post also makes the oft repeated claim that there will be jobs no one can imagine needing to be done because of the wonderful utopia of ai when my belief is that no, there will not be new jobs. this is based on the actions of those in control now which is to disrupt, destroy, and diminish the lives and fortunes of those would be workers.

tegnost: … the gambit is to create so much systemic risk that open ai et al are folded into the .gov in order to save them … handing the keys to the kingdom to the tech lords (in a process similar …(to) using the GFC as an excuse to stuff wall st. and investors with the cash necessary to buy everything and rent it back to the usians who used to own it.

Agreed.

Except they’re also planning to disemploy all those future renters.

They’re the dogs chasing the cars with no real idea what to do with them when they catch them.

I expect turning on one another will be their next move as, despite all the creativity poured into their tech (by their serfs), they are fundamentally uncreative, even anti creative and accumulation is the only thing they really understand.

I also agree with tegnost’s percipient comment.

actually, the job issue is more insidious as it is the entry level jobs that are being replaced, whereas the senior positions with experience are remaining (until retirement). It’s not hard to forecast another demonstration of “Idiocracy” the documentary.

I think it’s hard to tell what the effects on the wider economy will be when it crashes (it could even be positive, given the huge misallocation of resources), but long term my two predictions are:

1) The tech industry will have to play by the same rules as everyone else going forward. No more bullshit startups.

2) This will significantly reduce the perception of the US as a safe harbor for US dollar investments, which will have significant effects on its role as an international currency.

I also wouldn’t be surprised if we see another lost decade, much like what happened in the UK.

I think it was Jamie Dimon that issued a warning a couple months ago, along with Bank of England… both are concerned about excessive bank lending, probably not the non-bank bits so much. Imo neither the Fed (nor Trump) would let its big wall st babies fail, but equities might take a beating before they move. And the non-bank lenders might be in trouble, to say nothing of those getting margin calls. Dot com was worse than GR.

Unlike others, Nasdaq was unable to reach a new high this month, maybe a little rotation away from tech.

“Fed and regulators where are you?” — Just trying to save their own necks, like everybody else. What? You thought they were somehow better than that? Hah!

And the risk is not just systematic, it is systemic. Can we even call it “risk” anymore?

Fed chair Powell should welcome the opportunity to be replaced, quickly write a book, and watch as TSHTF.

Greenspan serves as a model for this game plan.

Mikerw0: we have the makings of a repeat of a credit crisis. Yet, market commentators still say no bubble, all is well.

At this point, they’re very clearly wrong.

Happy New Year, everyone!

In contrast to the direction of Western development, after his recent visit to China, Alastair Crooke was astounded at the productivity gains that they have achieved in the last few years by emphasizing industrial AI with its specific training sets, producing reductions in factory workforces on the scale of 2000 to 200 workers in a year. Generative AI with its inherent GIGO issues, of course, is the focus of Western AI developers.

“However, using these tools comes with risks. These include difficulty in detecting AI errors, decisions based on biased results because of the nature of training data, over reliance resulting from excessive trust, and challenges in overseeing systems that may be difficult to monitor.”

Precisely, and this is specifically the issue that Rob Urie speaks of in generative AI. My spidey sense says that this is the root “systemic” issue to worry about. In a similar way that wealth concentration is, or has become, a prized feature of neoliberal economics rather than a bug, perhaps these kinds of risks in generative AI are its very attraction for the wealthy and governmental interests that we are seeing here.

I surmise that a lot of the frantic money and emphasis on developing AI in the West is specifically for the darker purpose of AI training its human components, the people who use it, to be good, docile citizens leaving their betters to run the world as they wish, rather than the other way around. Of course, these hoped to be unshackled rulers have done such an excellent job so far. Just look at the once prosperous, democratic EU. It doesn’t take long to devolve into autocracy, just the will to do it. I’m sure they plan on having AI’s able help in this ongoing endeavor.

At least we will get to learn, positively or negatively, from how China addresses the disemployment problem they’ve created ahead of us.

That on top of deflation presents some real challenges to The Mandate of Heaven.

Seeing the engineering solution, or not, to those challenges should be interesting.

I thought China was facing a shortage of younger workers, particularly as the huge older generation ages/retires/produces less/needs more help. Plus what I assume is a growing military. Granted, they need more social spending to make the shift from export to internal consumption, something I’ve heard Xi opposes, apparently tied to the export model. Otherwise we might see unemployed workers and seniors dumpster diving.

2000 down to 200 more than covers demographic decline.

Yeah, Machine Learning is a different (if related) technology to LLMs. Most of the Chinese investment has gone into the former, but because the west is really only interested in areas where there is direct competition, this has mostly flown under the radar here.

“AI” is really just a tool for dealing statistically with large datasets. It’s very powerful and useful, but Nassim Taleb’s work is probably the best framework for thinking about it. ML deals very well with white swans – which means black swans when they come along can be even more devastating.

Machine learning is AI, despite what Sam Altman, and hyperscalers say.

It does not need supercomputers and, unlike OpenAI et al., it can run over dedicated databases where the operating company controls the data and maintains standards.

Very few customers can pay for LLM.

Well it depends, like a lot of things, on what you’re doing.

But ML requires a significant investment upfront, and continued ongoing investment. Most US ML projects seem to have failed, because companies underestimated that (or the return did not justify the investment).

In my experience, most companies significantly overestimate the quality of their databases (or rather their data collection methods), and data scientists (despite the pretentious name) don’t have the statistical skills required to handle that.

“AI and Systemic Risk”

If I would change AI to Human in the article and title to –

‘Human and Systemic Risk’

I would see just one difference; Humans have proven capable of what AI is anticipated to accomplish – good and bad – so what’s the use?

Humans will always be the biggest negative and positive risk to human constructs and to the rest of life on this planet. AI just might be humanity’s perfect scapegoat for bad things and feeding human hubris and anthropocentrism for the good.

But what do I know

You may not be interested in AI.

AI is interested in you.

If you are not vigilant, AI enablers at every social media platform will mine you and your activities, reducing further any shred of remaining privacy.

See an article by Kim Komando about a few protections you probably need.

“Artificial intelligence – encompassing both advanced machine-learning models and, more recently, developed large language models – can solve large-scale problems quickly and change how we allocate resources.”

This isn’t true, and it’s incredibly frustrating to me that so many decision makers believe it to be true.

Yes, ML can be used as a tool by (very expensive) experts to solve well bounded, but complex, problems where you have good, reliable, data. Those experts need to analyze the situation, make sure they truly understand any outliers (many ML experts are bad at this, in ways that Nassim Taleb would recognize) and then build a complex model. If the environment that the ML model is deployed in changes, then the ML model needs to be modified also (and this is not easy, quick, or cheap).

Many problems do not fit these requirements, which is why so many ML projects (at least in the west) fail. It’s also expensive to set up and deploy. This is also why the ML hype was beginning to die out prior to the LLM craze. Much like every tech hype prior to it, it under-delivered.

LLMs cannot solve problems. There are two ways to think about them:

1) Text completion on steroids (this is literally how prompts for LLMs work).

2) Zip/JPEG compression of their data sets (what you get out from an LLM is kind of like a fuzzier version of what was put in). You can actually create a simple LLM using compression algorithms. It’s fun – if that’s the kind of thing you enjoy.
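As a rough illustration of the compression idea in point 2, here is a toy next-character predictor built on Python's standard `zlib` module. It is a sketch of the general trick, not how production LLMs work: the corpus, the function names, and the greedy choice of whichever character compresses best are all assumptions made for the example.

```python
import zlib


def compressed_size(text: str) -> int:
    """Number of bytes zlib needs for the text at maximum compression."""
    return len(zlib.compress(text.encode("utf-8"), 9))


def next_char(corpus: str, prompt: str, alphabet: str) -> str:
    """Pick the character that makes corpus + prompt + char compress smallest."""
    return min(alphabet, key=lambda ch: compressed_size(corpus + prompt + ch))


def complete(corpus: str, prompt: str, length: int) -> str:
    """Greedily extend the prompt one character at a time."""
    alphabet = "".join(sorted(set(corpus)))
    out = prompt
    for _ in range(length):
        out += next_char(corpus, out, alphabet)
    return out


# Hypothetical toy corpus; a real experiment would use far more text.
CORPUS = "the cat sat on the mat. the dog sat on the log. " * 4
print(complete(CORPUS, "the cat sat on the ", 10))
```

The continuation tends to echo phrases already present in the corpus, which is the "fuzzier version of what was put in" behaviour described above. Nothing in the procedure checks whether the output is true or useful.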

What an LLM can do when presented with a problem, is present a linguistically plausible solution. Sometimes it will be right – say for example its dataset contained lots of examples of similar, or identical, problems with solutions – at other times it will be completely wrong. But textually, it will have the same shape and structure as a real solution would.

In other words LLMs are really good bullshitters. And if you have a problem where bullshit is a perfectly good solution, then LLMs are a mighty fine tool. Otherwise, it will go about as well as you’d expect.

Spot on!

I like the list of how “AI might amplify or alter existing systemic risks in finance”, however it seems to be missing an important financial risk –

“Accelerate the concentration of wealth by speeding up the game of monopoly, which makes the entire financial system more fragile until one group owns the board and the game is over.”

But I suppose that since AI is mostly being funded by the investor class so they can achieve more power and control over the rest of us, and therefore maintain their ownership of the world and the capitalist system that made them rich, “game over” likely seems to be their goal. And based on all the AI PR saying “AI will solve all our problems”, they seem to be trying to convince the rest of us that AI will be a beneficial improvement to all. The magician is waving the shiny AI hand so you don’t see the other hand picking your pocket.

“Game over”!

That’s what I see coming when, “the dogs chasing the cars with no real idea what to do with them when they catch them” do in fact catch them!

Then what? The entire world they’ve totally trashed is still there watching them high five.

All this talk of policy responses, regulations, and “governance” seems like so much hand waving to me. What ridiculousness! None of that will happen. So far the only regulation has been Trump trying to preempt state-level regulation. The AI companies clearly don’t want any regulation they can’t control, and when was the last time a Republican congress talked about a regulation they weren’t in the process of dismantling? Is it assumed the Democrat do nothings will act when they take congress next? Please, what a joke! With a trillion plus sloshing around, they will be just as eager to steamroll over any opposition as Trump.

Today Reuters headlines said NVDA is talking with TSMC about PRC demand for H200 GPU.

Last week PRC made noise about self sufficiency in chips, and have achieved 3 nm…

China is doing lower load AI like machine learning.

Not many operators need LLM.

The media sphere is selling OpenAI and NVDA.

The fragment of the Time article looks exactly like the hodgepodge of ideas that AI would create with some human editing. Each phrase by itself makes (more or less) sense, but each one of them tries to join too many items in a way that in some cases looks chosen at random. Maybe it is my problem with the English language.

I saw a piece in BBC Science Focus recently that did a good job of summarizing the problems with the current AI approach and why it will fail:

https://www.sciencefocus.com/future-technology/hidden-forces-ai-bubble

In a nutshell, providers assumed Moore’s Law type scaling would apply (because, for them, it always has done) and that they could keep producing better results simply by constantly scaling. This is untrue for a number of reasons. Compute requirements do not scale linearly with model complexity, so the incremental gain gets smaller and smaller. Also there’s a limit to how much training data exists in the world, which we already hit a while ago according to some reports. Companies are resorting to tricks like synthesizing training data (which doesn’t increase the data space and in fact probably breaks some of the foundational assumptions of the underlying models, likely causing them to degrade).
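The diminishing-returns point can be sketched with a power-law relationship between training compute and model error, a form often reported in the scaling literature. The constant and exponent here are hypothetical values picked for illustration, not measurements of any real model.

```python
def loss(compute: float, a: float = 10.0, alpha: float = 0.05) -> float:
    """Hypothetical power law: error falls as compute ** -alpha."""
    return a * compute ** -alpha


# Each step multiplies compute by 10; track how much the error improves per step.
budgets = [10.0 ** k for k in range(21, 27)]
losses = [loss(c) for c in budgets]
gains = [prev - cur for prev, cur in zip(losses, losses[1:])]

for budget, value in zip(budgets, losses):
    print(f"compute {budget:.0e}: loss {value:.3f}")
print("improvement per 10x step:", [round(g, 4) for g in gains])
```

Under this assumed curve every tenfold increase in compute buys a smaller absolute improvement than the one before it, which is the shape of the problem the comment describes.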

So all the huge capital-intensive infrastructure bets are assuming a future that is literally impossible to realize, absent a huge breakthrough on the research front that almost certainly won’t come in time. It’s not going to be pretty.

I remain convinced that the killer apps of AI are human-computer interface, assistive technology and smart tooling. That’s not nothing, but it’s not the world-changing transformation that it would need to be for all the investment to see a return.

The problem described by Time sounds very much like the problems once predicted for the Y2K “crisis”. Computers and software are so widely used in mission critical systems throughout our society that widespread problems in computers and software would have unpredictable and likely drastic effects which would be hard to identify and repair.

Certainly one can fantasize that similar problems might erupt if AI technology were widely deployed. The real world track record of AI to date is so horrible, however, that I doubt things will ever get to that point even if the financial system doesn’t collapse first. If half the information squirting out the back of an AI box is a hallucination, that’s going to be fairly easy to spot and anyone using AI for any important purpose is going to be swiftly laughed out of town.

(BTW it’s amusing to see Time propose the use of “guardrails” to prevent ill effect from AI systems. Does anyone actually know what these guardrails are or how they work? LLM software does not have locations where you can fire up a code editor and go in and “fix” things. It’s just a network of billions or trillions of nodes connected by weighted edges, the weights having been opaquely assigned during training. Which of the trillions of edges should you alter to “fix” something and what is going to be the overall effect of that change? Literally, no one on earth knows. LLMs cannot be fixed or adjusted by hand.)

The approach to AI by US big tech puts me in mind of George Simpson’s achievements when he took over control of GEC.

There is a trivially simple way to address this, simply change the law such that AI models and data sets are not covered as IP, removing patent and copyright protection.

This eliminates the profit motive, which eliminates (most of) the inclination to recklessness.

Of course, this pops the AI bubble, but I’d rather that Masayoshi Son takes it on the chin than the clueless late to the game small investors.