Yves here. It is telling that the very measured Bruegel website is pretty bothered that Intel looks likely to get away with relatively little in the way of financial consequences as a result of its Spectre and Meltdown security disasters. This is a marked contrast with Volkswagen, where the company paid huge fines and executives went to jail.

However, it was the US that went after a foreign national champion. The US-dominated tech press is still, frustratingly, giving the Intel train wrecks paltry coverage relative to their importance.

One thing that could change the dynamic would be if a foreign regulator, say in the EU or China, were to lower the hammer on Intel. And that would take a while to develop, since that authority would want to have a very well thought out case before it took on such a high profile player. But the flip side is Intel’s lapse was glaring, and some of the comments on tech-savvy sites and threads suggest that Intel was not only not unaware, but understood the implications of its business/profit decisions.

Consider a discussion at Slashdot kicked off by this summary:

troublemaker_23 quotes ITWire:

Disclosure of the Meltdown and Spectre vulnerabilities, which affect mainly Intel CPUs, was handled “in an incredibly bad way” by both Intel and Google, the leader of the OpenBSD project Theo de Raadt claims. “Only Tier-1 companies received advance information, and that is not responsible disclosure — it is selective disclosure,” De Raadt told iTWire in response to queries. “Everyone below Tier-1 has just gotten screwed.”

In the interview de Raadt also faults Intel for moving too fast in an attempt to beat their competition. “There are papers about the risky side-effects of speculative loads — people knew… Intel engineers attended the same conferences as other company engineers, and read the same papers about performance enhancing strategies — so it is hard to believe they ignored the risky aspects. I bet they were instructed to ignore the risk.”…

Notice this reaction in particular:

I was one of those who called “no way” at first, but just yesterday I found this quote [danluu.com] from an Intel engineer. It was originally posted in a reddit thread [reddit.com] but has since been deleted – but not before being confirmed by other former engineers at Intel.

As someone who worked in an Intel Validation group for SOCs until mid-2014 or so I can tell you, yes, you will see more CPU bugs from Intel than you have in the past from the post-FDIV-bug era until recently.

Why?

Let me set the scene: It’s late in 2013. Intel is frantic about losing the mobile CPU wars to ARM. Meetings with all the validation groups. Head honcho in charge of Validation says something to the effect of: “We need to move faster. Validation at Intel is taking much longer than it does for our competition. We need to do whatever we can to reduce those times… we can’t live forever in the shadow of the early 90’s FDIV bug, we need to move on. Our competition is moving much faster than we are” – I’m paraphrasing. Many of the engineers in the room could remember the FDIV bug and the ensuing problems caused for Intel 20 years prior. Many of us were aghast that someone highly placed would suggest we needed to cut corners in validation – that wasn’t explicitly said, of course, but that was the implicit message. That meeting there in late 2013 signalled a sea change at Intel to many of us who were there. And it didn’t seem like it was going to be a good kind of sea change. Some of us chose to get out while the getting was good.

If this sort of thing is leaking out, Intel may be more vulnerable in discovery or regulatory proctology than Mr. Market is assuming now.

By Alexander Roth, a Research Intern at Bruegel who previously worked at the European Commission, the Centre for European Economic Research, Commerzbank AG and the University of Mannheim, and Georg Zachmann, a member of the German Advisory Group in Ukraine who has also worked at the German Ministry of Finance and the German Institute for Economic Research in Berlin. Originally published at Bruegel.

Intel suffered only minimal pain in the stock market following revelations about the ‘Meltdown’ hardware vulnerability. But if the market won’t compel providers to ensure the safety of their hardware, what will?

On January 3, it became public that almost all microprocessors that Intel has sold in the past 20 years would allow attackers to extract data that are not supposed to be accessible. This hardware vulnerability termed “Meltdown” is depicted as one of the largest security flaws in recent chip designs.

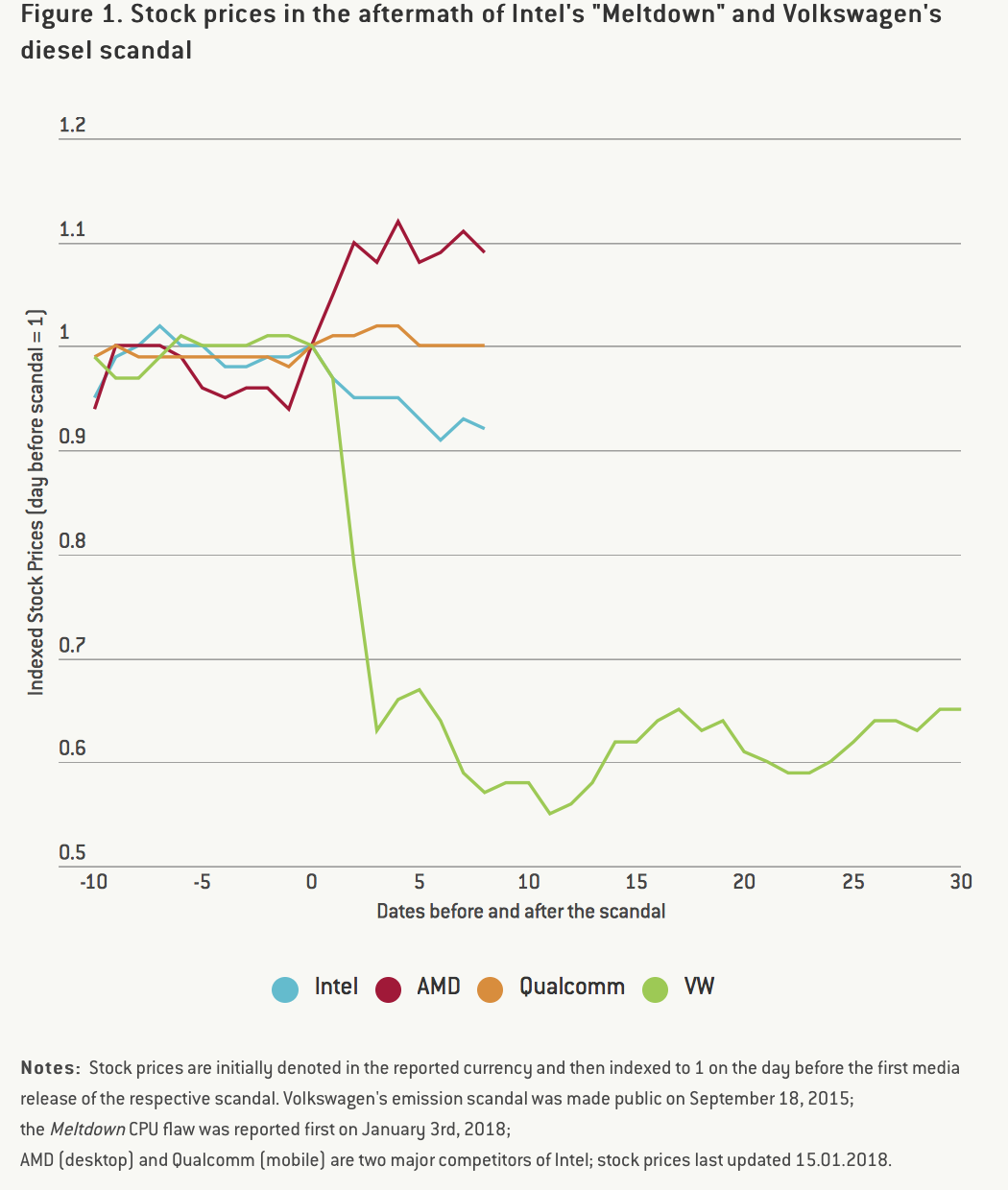

Financial markets were relatively unimpressed with the news; Intel’s stock price fell initially by 5% but stabilised afterwards. This contrasts sharply with the Volkswagen diesel scandal of 2015, which saw the car company’s value fall by almost 40% within a week. The two cases have more differences than similarities, but the striking resilience of Intel’s market valuation to the revelation that most Intel CPUs are vulnerable to specific attacks raises an interesting question:

Do hardware providers have sufficient incentives to make sure their products are as safe as possible?

Intel – having been made aware of the flaw more than six months ago – was able to provide guidance on how to address this security problem, so that patches to millions of computers could be rolled out. However, it is the providers of operating systems (such as Microsoft in the case of Windows) that provided these patches and that have to bear substantial costs.

We have already learned about the incompatibility of the original patch with AMD processors and certain standard antivirus software. Administrators of complex IT infrastructures in particular will have to expend substantial resources on testing and adapting any patch on their critical hardware. Moreover, to date it remains unclear how severe the implications for processor performance are. As the security flaw lies in a feature designed to increase a processor’s computing power, modifying this feature could cost speed. First reports do not agree over the expected performance losses: some expect significant speed reductions, while Intel and Google claim that effects will most likely be minimal.

However, the reaction of the stock market suggests that Intel will not be held fully accountable for this incident, and therefore will not have to bear the full cost of the flaws in its processors. This is somewhat worrisome, as it indicates that producers of essential IT hardware seem not to be incentivised by the stock market to provide secure products, while the costs of the flaws in their products have to be paid by others.

Intel’s domination in the market of desktop and server processors could partly explain the gentle reaction of its stock. In a more competitive market, consumers would have more product choices and consequences for Intel would have been more severe. In contrast to Intel’s case, the stark drop of Volkswagen’s stock price could be explained – among other factors – by the more competitive market environment.

The question, however, of whether more competition in the processor market would have prevented a flaw such as “Meltdown” remains very much disputed. It is unlikely that higher investments by Intel, induced by stronger competition, would have prevented “Meltdown” – which has remained undetected by the entire chip industry for 20 years.

The most worrying aspect of the Intel case remains, though, the implication that providers of essential hardware might have more to lose from continuously searching for problems that do not exist, than from occasionally failing to spot a potential threat.

Are we removing the notion that Intel purposely built in these flaws to allow government parties to infiltrate otherwise secure hardware? Because if that’s the case, this is totally unlike the VW chicanery, where punishment has been doled out globally.

Having interviewed there 20 years ago, I think it more a case of excitable 28 year old engineering managers ordering excitable 24 year old engineers to slave day and night so that everyone gets a bonus and a new BMW. IOW, you give them too much credit.

I doubt they did it on purpose. They were looking for an easy way to increase performance, along with the OS providers (it takes both to do this; one by itself couldn’t have done it), since the OS has to support the feature enabled by the chip.

This is the holy grail of exploits and can even be accessed by freaking JavaScript on a webpage!?! Some of the 3 bugs (Meltdown and Spectre are 2 of the 3) are also present in other manufacturers’ hardware (though seemingly not to the same extent), which seems odd to me. Is this likely due to a design choice? Probably. Was that design choice nudged in that direction to allow for exploitation? I think that’s a reasonable question, especially considering the penetration of Intel in the market. And if so… any evidence of the PPT coming in to rescue INTC?

As to WobblyTelomeres’ comment re: “excitable 28 year old engineering managers ordering excitable 24 year old engineers to…”, isn’t this a perfect environment to slide in such an exploit?

This is interesting – https://www.reddit.com/r/technology/comments/7nzpqb/intel_was_aware_of_the_chip_vulnerability_when/ds5ytg2/

The fix for the latest vulnerability is based on an optional security feature that has been around a long time (at least on Linux). It wasn’t enabled by default because of the 17-30% performance hit and people didn’t think it was really necessary. But if the NSA knew otherwise, they had an easy switch to enable.

Similarly there’s an undocumented way to disable IME: https://en.wikipedia.org/wiki/Intel_Management_Engine#“High_Assurance_Platform”_mode

A commenter a few days ago asked if/how Intel was still selling computers and devices with the flawed chips. And if they are why…. Also why hasn’t there been any talk of a recall? I lost track of the thread but I am curious….

I guess the scope of a recall is beyond imagination but why ARE these flawed chips still being sold?

Dumb question, I guess. But maybe Intel would take this thing more seriously if the questions were asked.

There’s nothing else in the pipeline and they can’t just stop selling chips, there are entire industries that would come to a screeching (well whatever sound you like, like a bus hitting a brick wall) halt.

Someone with experience in the industry might be able to tell us how long it will take to redesign, test, build new production facilities and ramp up to get chips without this design at their heart into the pipeline.

But I thought someone said AMD chips don’t have the flaw…. and does Motorola still make processors?

I know nothing will be done. But I do think demands and options should be discussed.

Motorola threw the baby out with the bath water and spun off their chip division into a company called Freescale years ago. Seems AMD is also impacted, which makes sense, since they both run the same OS.

Meltdown doesn’t affect AMD. Intel took a short cut in securing privileged memory during speculative execution that AMD did not.

Spectre V1 and V2 seem to affect everyone.

I am not a chip designer. I read and vaguely understood the paper describing the flaw. My take is that Spectre V1 and V2 are essentially fundamental to the existing design of speculative execution. Pretty much every modern processor uses speculative execution. In addition, it is not an area where there is much fundamental innovation, so it is unlikely anyone went off and did something sufficiently different that it prevents Spectre. Most of the innovation occurs in the part of the CPU that is guessing which branch will be taken next, not in how the branch is executed. We have only heard about Intel, AMD, and ARM, but I expect Power 5 chips in IBM mainframes and SPARC (don’t know if they are still used) and other more obscure CPUs have the same problem.

Basically, it depends on how the OS works. If the place where user programs run (say, a browser) has access to storage (data) that the OS uses, a rogue program could get access to the OS storage without having to pass validation that it was actually authorized to do so. The reason that could have been allowed was speed of execution: validating that authorization slows execution down. Now you may wonder what this means to you. It means that data in the OS is exposed (say, your admin userid and password might be viewable). The fix that’s coming now is making the OS do that validation.

As I read the Google paper, at least one of the exploits is based on the time a speculative branch takes to execute, which depends on the result of a bitwise check inside that branch. If the processor were to delay execution so both results take the same amount of time, you couldn’t get any information about memory contents. I doubt it would cost too much, because it is one clock cycle lost occasionally in a branch that probably won’t even execute.
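A toy sketch of that proposal, in Python. It models only the commenter's description (a branch whose duration depends on one bit of a secret), not the real attacks, which read the secret back through cache timing after the fact. The cycle counts and function names are invented for illustration.

```python
# Toy model only: the cycle counts below are invented, not measured.
FAST_CYCLES = 1  # hypothetical cost when the tested bit is 0
SLOW_CYCLES = 2  # hypothetical cost when the tested bit is 1


def leaky_branch_cycles(secret, bit):
    """Duration of the branch depends on one bit of the secret."""
    return SLOW_CYCLES if (secret >> bit) & 1 else FAST_CYCLES


def padded_branch_cycles(secret, bit):
    """Same check, but the fast path is padded to match the slow path."""
    elapsed = leaky_branch_cycles(secret, bit)
    return elapsed + (SLOW_CYCLES - elapsed)  # always SLOW_CYCLES


def recover_byte(timer, secret):
    """The attacker sees only timings and rebuilds a byte bit by bit."""
    guess = 0
    for bit in range(8):
        if timer(secret, bit) > FAST_CYCLES:
            guess |= 1 << bit
    return guess


# With the leaky timer the attacker recovers the secret exactly.
leaked = recover_byte(leaky_branch_cycles, 0xB2)

# With padding, every secret produces the same timings and the same guess.
padded_a = recover_byte(padded_branch_cycles, 0xB2)
padded_b = recover_byte(padded_branch_cycles, 0x4D)
```

In this model the padding costs at most one invented "cycle" per check, which matches the commenter's intuition. Whether real hardware could equalize timing that cheaply is a separate question that the sketch does not answer.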

Yes because it makes sense to take chips nobody should buy, and build them into computers nobody will buy. Remember trash adds to GDP!

It actually does, because so many cities use companies to pick up trash

18 months. I have friends who work for Intel and Broadcom.

Part of the delay is the question of fab plant and technology. Moore’s Law applies.

Perhaps for the same reason that for many years auto makers produced millions of vehicles that were “unsafe at any speed” or that cigarette companies, oil companies, Monsanto etc. do what they do when the profit motive is given undue precedence over other, quite arguably more important, social goods, IMHO. Also, tech along with finance is now up there in the commanding heights of the economy and can’t be messed with too much lest the house of cards comes tumbling down.

No conspiracies required, practical things like manufacturing lead times dictate.

And to address another responder, these chips are designed into products, deeply embedded. AMD etc. do not make pin-for-pin replacements, to say nothing of the programming changes needed. Further, while high end hardware has socketed CPUs, laptops and many consumer goods have soldered-in chips. Replacement is not practical.

Yes. And for older CPUs, Intel doesn’t even have the manufacturing line anymore. I’ve got an old Penryn-based laptop and a tiny Sandy Bridge-based computer that I use as a print server. Alas, even if Intel wanted to manufacture upgraded versions of these chips that contained fixes for the speculative execution side-channel attacks, they couldn’t. Both CPUs went out of production years ago, and their respective fab lines have been long abandoned.

And even if Intel did somehow resurrect these fab lines (an exercise that would surely take many, many months), what would they do next? Send bare CPUs out to customers? Hah. Most people lack the technical savvy to properly replace a CPU in a desktop computer, much less a socketed CPU buried deep inside a laptop. And if it’s a laptop with a soldered CPU… Forget about it.

I agree. No conspiracy necessary. Just business as usual as it is now per prevailing ideology and priorities.

It is incredibly expensive and difficult to design new CPUs. These are very complex chips and this industry is very capital intensive.

I think that it is likely that the next generation of chips will be shipped with the same flaws.

A major response like this might not be resolved until 2020. It may take a couple of years for Intel to churn out a chip that is 100 percent bulletproof against Meltdown.

Computer chips take years to design from the ground up. Often more than 5 years from concept to a shipped product due to the complications.

As for Intel, they have a near monopoly. AMD might be able to challenge them eventually, but right now they just barely got back from near bankruptcy with Ryzen, their new CPU architecture.

VIA Technologies still seem to be operating in Taiwan. The Eden processors that we used featured low required power, so they didn’t boast the multi-gigahertz screaming performance that causes the trouble with Meltdown and Spectre.

Not only is Intel not having to pay damages here but in all likelihood they will see at least a short term financial windfall from this debacle.

The performance hit from the Meltdown fix is forcing companies to move their workloads to a larger cloud instance in AWS, etc. And ultimately that boils down to Amazon, Microsoft, Google, et al. buying more hardware, including CPUs from Intel. The same thing is happening in SaaS companies and even in the rare dinosaur companies still buying and running hardware in their own datacenters.

I’m not sure the analogy with VW is the best fit. After all, the “cheater firmware” that was installed in recent VW diesels was done intentionally. It was done for the explicit purpose of deceiving regulators and violating emission regulations. That’s not okay. Not even remotely.

As best I can tell, Intel’s error was an honest mistake. One that went undiscovered for over 20 years. Modern CPUs are terribly complicated beasts, and it’s not surprising that this subtle vulnerability evaded detection for so long. [Side-channel attacks like Meltdown and Spectre often require truly sideways thinking on the part of security researchers to discover.]

And why has Intel’s stock price only suffered slightly? Because they arguably still have the best product on the market. AMD has nearly caught up with their recently released Ryzen, Threadripper, and Epyc processors, but for many years before that, running AMD meant consuming more power for inferior performance. Today, if you don’t want Intel, you can switch to AMD (but still be susceptible to the Spectre attacks) or you can re-purchase or re-engineer all your software to run on a non-speculative non-x86 platform and consume more power for inferior performance. To be honest, the options are limited here, and making a big change could be very painful.

When VW got busted, customers had all sorts of other options for an energy-efficient vehicle. Diesels from Audi or Mercedes or BMW or Chevy. Hybrids from Toyota or Honda or Chevy or Kia. Or even pure electrics from Tesla or Nissan. There was little penalty associated with a move away from VW.

It could have been known, though. This stuff is not new. The people responsible for getting Multics its B-level security certification were certainly aware of side-channels implemented through timing. In their case it was page-faults, not cache misses, but it amounts to the same thing.

> Intel’s error was an honest mistake

Well, for some definition of “honest.” Quoting again the Intel engineer:

That doesn’t sound especially honest to me.

I’m still not seeing any intent to deceive, which is the key consideration for me when defining the word “dishonest”. There may be evidence supporting the word “sloppy” (especially in how they handled disclosure), but I don’t see deception.

And don’t forget that Intel started putting speculative execution capabilities in their processors way back with the Pentium Pro, which was released back in 1995. They were undoubtedly hyper-vigilant at that point, as they’d recently absorbed the brutal fallout from the Pentium FDIV bug. If 2013 is the year that Intel’s quality control culture went all to hell because of management stupidity, it doesn’t explain how the vulnerability escaped detection in the long line of processors (Pentium Pro, II, III, 4, Core, Core 2, and four pre-2013 generations of i3/i5/i7) released during the preceding 18 years.

It escaped detection because it was a subtle side-channel attack. Security researchers had been looking for memory protection vulnerabilities in CPUs for years before this was finally discovered.

Remember Hanlon’s Razor here: Never attribute to malice that which is adequately explained by stupidity.

I would have sworn “Never attribute to malice…” was one of Mayor Salvor Hardin’s epigrams in Foundation; but apparently not. I read it in Junior High school, and that was a very long time ago.

That said, your comment does not engage with the post. You write:

Possibly you’re not looking in the right place; I suggest the post itself, starting at “Let me set the scene.” That’s how dishonesty is handled by management in large corporations.

Devil’s advocate, if IBM Processors have the same flaw, is this situation like the Pinto fuel tanks? Where only Pinto (Ford) got punished even though “fuel tank behind the rear axle” was a common design before & after?

It actually takes the OS architecture to make the flaw work.

I think the Meltdown and Spectre vulnerabilities endow cyberwarfare with a new level of threat. If I correctly understood the discussion on Meltdown, even JavaScript can be used as a remote exploit to obtain root on a system, and unpatched user-privilege exploits can be boosted to root. Imagine the effects of a worm like the Sapphire Worm exploiting the Meltdown feature to lock hard drives. I wonder: is DoD having any second thoughts about Netcentricity and their efforts to save money by purchasing COTS equipment, thereby driving chip manufacturing into the commercial sector and from there to outsourcing production (even some design) overseas? Of course, the last I knew they were still using COTS operating systems and investing considerable efforts at testing, delivering and installing IAVA patches. I suppose insecure software deserves insecure hardware. So much for the national defense.

Intel hasn’t been spanked but what about our banking networks? Are people still rushing to pay all their bills online — to save trees? It’s getting to the point where I can’t do simple things like pay for parking without installing some poorly crafted app to tap into one of my credit cards. Is there potential for a real-life “Mr. Robot” scenario? Aside from underreporting and widespread ass-covering is anything effectual being done to protect against these bugs? If a big credit agency can’t be bothered to install relatively simple patches just how secure and security minded are our banks? [Thinking from a different direction — maybe the security holes in our banking system represent another means for creating money in our economy. Out of curiosity — what stops off-shore banks from creating money for the rich by the flip-of-a-bit?]

I heard that, long, long ago, there was something called “governments” that could enact security requirements through something dubbed “norms” via a process named “legislation”…

I was thinking something similar . . . I remember that a long time ago, in a galaxy far, far away, there was . . .I can almost remember . . .I got it! There was this thing… Regulation, that’s it! And, and . . . YES! Consumer protections . . . but maybe it was all a dream..

Meh, Spectre isn’t an Intel train wreck, it’s an inherent hazard of letting bad guys run code on your computer and it applies to most advanced computers, not just Intel’s. Meltdown is Intel-specific and it’s more of a train wreck than Spectre, but it’s also easier to fix.

Considering the initial microcode fix from Intel is glitched on certain CPU families, I disagree with your overall statement with regards to Spectre and Intel

And Spectre cannot be completely fixed at this point…

If Intel is not going to suffer any ‘market’ consequences, will it suffer hardware sales consequences? What I mean is this. If you have some mob that are seeking to upgrade their computers, will the guy in charge opt for computers with AMD-processors instead of Intel-processors so that they will not be accused of choosing insecure hardware and thus making themselves legally liable? Unlike the so-called market of the stock exchange, this would be the market of the real world at work.

Doesn’t the fact that they provided info on the problem to “Tier 1” firms before the general public scream of security fraud?

Good point.

Adding-

I can remember hackers talking about this type of exploit at least 5 years ago, in relation to “the cloud”. Rent a server from AWS and hope you were sharing it with someone who had something to hide.

Can’t figure why this isn’t a bigger deal for any of the big cloud/data people.

Will replacements and/or credits be issued by Intel to the big guys? That’s what seems “baked in” at the moment.

That’s an interesting question.

I disagree with the tenor of this post. I think this is much ado about nothing, insofar as it is being read as a sign of poor quality or unsafe design at Intel.

I’m not dismissing these bugs. They’re terrible security bugs, and it is going to be hard and expensive to fix or replace the buggy hardware; virtually every modern CPU is vulnerable. These bugs aren’t a design defect; they’re deeper than that, a design strategy defect.

Here’s an analogy of the bug. Say you are working on a project, and you need an answer from your manager before continuing. You can either A: Wait 2 days for a response, twiddling your thumbs, or B: *predict* the answer from your manager. If you guess wrong, you need to take a little time to throw out the wrong ‘speculative’ work and resume where you guessed wrong. If you guess accurately, you’re more productive; you exploited that waiting time. If you guess accurately often enough, this optimization strategy is effective. High performance CPU designers use strategy B — it is vastly complicated because of the need to track speculative work and undo it, but it is done because it is worth such a huge performance gain.

Where the bug comes in… When I said ‘you throw out the wrong work’… That wrong work leaves behind ‘ghosts’. (Continuing the analogy, you might throw out the work you did under your wrong guess, but could you fix up all of the ‘last accessed’ timestamps of every file you touched in those two days?) Now combine this with malicious software that can manipulate your ‘manager predictions’ as well as measure those ghosts, and you have a recipe for leaks. Predictions don’t have to be accurate (they’re validated with ground truth from your manager), so bad predictions or manipulative predictions seemed harmless.

This kind of issue is a fundamental artifact of design B, where you make a prediction and undo the overt effects if you turn out wrong, which is why it hits just about every modern high performance CPU of the last 20 years; they all use this strategy. Meltdown and Spectre are both instances of this bug. What distinguishes Meltdown is that Intel CPUs make a certain kind of prediction that AMD CPUs don’t, and the consequences were particularly impactful.
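For readers who prefer code to analogies, here is a minimal Python sketch of the ‘ghosts’ idea. Everything in it is an invented toy model (the `ToyCPU` class, its timings, the memory layout), not a description of any real processor or of the published exploits. The ‘manager’s answer’ is a bounds check, the ‘speculative work’ is a memory read, and the ‘ghost’ is a cache entry that survives after the work is thrown out.

```python
HIT_TIME = 1     # invented access time for a cached line
MISS_TIME = 100  # invented access time for an uncached line


class ToyCPU:
    """Toy model: memory, a cache of touched lines, and one prediction."""

    def __init__(self, memory, public_len):
        self.memory = memory            # flat list of byte values
        self.public_len = public_len    # only the first bytes are readable
        self.cache = set()              # lines that are currently cached
        self.predict_in_bounds = True   # the (manipulable) prediction

    def victim_read(self, index):
        """Models: if index < public_len, read memory[index]."""
        in_bounds = index < self.public_len
        if in_bounds or self.predict_in_bounds:
            value = self.memory[index]  # may run only speculatively
            self.cache.add(value)       # the ghost: never rolled back
        # The architectural result is discarded on a wrong guess.
        return value if in_bounds else None

    def probe_time(self, line):
        """What an attacker can measure: how fast a line is to access."""
        return HIT_TIME if line in self.cache else MISS_TIME


def recover_via_cache(cpu, index):
    """Flush the cache, trigger the victim, then time every line."""
    cpu.cache.clear()
    architectural = cpu.victim_read(index)
    fast = [line for line in range(256) if cpu.probe_time(line) < MISS_TIME]
    return architectural, fast


memory = list(b"PUBLIC!!") + [ord("S")]  # index 8 holds the secret byte
cpu = ToyCPU(memory, public_len=8)
result, fast_lines = recover_via_cache(cpu, 8)
```

The out-of-bounds read officially returns nothing (`result` is `None`), yet the one fast line in `fast_lines` is the secret byte’s value. Setting `predict_in_bounds` to `False` in this toy makes the leak disappear, at the cost of giving up the speculation that made design B attractive in the first place.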

To be fair, this class of attacks on OOO CPUs was predicted in a 1995 paper, but there are hundreds of high quality papers every year warning of security risks. In a world where we do not know how to make robust and secure software, and every vendor has frequent patch days (if you’re lucky and they patch at all!), those papers get the whiff of ‘hypothetical problem; we have bigger problems to worry about’…… until they’re not so hypothetical.

Yes, these are major, ugly bugs, that can be (somewhat) worked around in future CPU designs. To me, this looks to be an oversight that blindsided EVERYONE, not just Intel.

Thanks for the explanation of how the speculative execution bug works (“ghosts”). Because that bug is hard for laypeople to understand, it’s always good to have another one.

However, I think a focus on the technical side deflects attention from the real problems, which are institutional. You write:

That sounds to me like a layperson’s paraphrase of an argument in Intel’s brief (were Intel to be subjected to “discovery or regulatory proctology,” as the post puts it).

However, this argument ignores two things.

First, Intel is a monopoly with enormous technical, managerial, and financial resources. If anybody had the capability to design an architecture to avoid these speculative execution bugs, Intel did. You yourself write:

(I’d love a link to that 1995 paper, by the way.) To me, that looks very much like the responsible parties at Intel either neglected to review the technical literature (“There are a lot of papers” doesn’t strike me as a particularly strong defense against that claim) or they were knowledgeable, and decided they had other priorities. This would, of course, be a matter for discovery; I haven’t seen any deep reporting on the design process that led Intel to make the architectural decisions that it did.

Second, “they’re all guilty (so nobody is guilty)” ignores the testimony proffered by an actual Intel engineer in the post:

To claim that this was “an oversight that blindsided EVERYONE” is at variance with the facts.

If Spectre and Meltdown end up in the courts, Intel is going to have to come up with better arguments than these to avoid liability.

IEEE Security and Privacy: https://pdfs.semanticscholar.org/2209/42809262c17b6631c0f6536c91aaf7756857.pdf is the paper. It discusses a myriad ways in which execution has unintended side effects. Read it and cry about how complicated CPUs were …. 20 years ago. (They have 1000x more transistors now.) It illustrates how many ways there are for ‘ghosts’ to be left behind.

Meltdown has a straightforward, though costly, workaround on older CPUs. On more recent CPUs, a hardware feature makes the workaround less costly.

The workarounds for this general class of speculative attacks (Spectre) however, look like special instructions to disable speculation across critical spots (in particular, across privilege boundaries), which programmers will need to manually include…. and if they miss one, they’ll be subject to this attack class — again. So, now us lucky programmers have yet another way in which we have to be perfect, or bad-things-happen.
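A small Python sketch of why a single missed spot matters. This is a toy model with invented names (`run_victim`, `fenced`), not real barrier instructions: a ‘fence’ here simply means the toy CPU waits for the real answer to the bounds check instead of running ahead on a guess.

```python
def run_victim(memory, public_len, index, fenced):
    """Return (architectural_result, cache_lines_touched) for one call.

    Toy model: without a fence the CPU always guesses "in bounds" and
    performs the load before the check resolves; with a fence it waits.
    """
    cache = set()
    in_bounds = index < public_len
    runs_load = in_bounds if fenced else True
    if runs_load:
        cache.add(memory[index])  # side effect that outlives a wrong guess
    return (memory[index] if in_bounds else None), cache


memory = [10, 20, 30, 99]  # index 3 is the secret, outside the public part

# Two hypothetical call sites guarding the same data: one has the fence,
# the other is the spot the programmer missed.
site_with_fence = run_victim(memory, 3, 3, fenced=True)
site_without_fence = run_victim(memory, 3, 3, fenced=False)
```

Both sites return `None` for the out-of-bounds request, so ordinary testing of return values sees no difference between them. Only the unfenced site leaves the secret’s value behind in the toy cache, which is why one forgotten barrier is enough to reopen the hole.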

New CPU architectures are incredibly difficult; you have to give up decades and trillions of installed software. Recall that Intel tried once, with Itanium, and went down in flames.

The earliest OOO speculative CPUs Intel designed were designed in the late 90’s, when FDIV was fresh on people’s minds, almost 20 years before the 2013 post you cite. The goal of validation is to validate that the physical design matches the architecture, that is, the written description of what is supposed to happen. The ‘CPU architecture’ describes what the CPU is supposed to do under all circumstances, e.g. under every error condition. Those bugs show up in errata like https://www.intel.com/content/dam/www/public/us/en/documents/specification-updates/7th-gen-core-family-spec-update.pdf

I’m not a lawyer, so the legal and liability issues are way above my pay grade. :)

This still seems like excuse making for Intel. I’m supposed to care about the failure of Intel to design a better CPU, or that they can’t be bothered to spend the money to do so? Especially when the current design mentality is to hell with security, just give me faster CPUs…

I work in IT and I can’t even begin to tell you how disruptive this has already been. And I don’t see it going away anytime soon.

Finally, I don’t think one needs to be a lawyer to determine the basic legal and liability issues in this case. Anyone being prosecuted for these issues is another thing, however.

Your comment boils down to “CPUs are complicated.”

Yes, and that’s why enormous and highly profitable chip manufacturing companies have engineering teams, and bespoke tooling, and technical managers, to manage the complexity.

Since you don’t engage directly with my responses, I can assume we’ve achieved consensus. Good.

Sounds like excessive capitalist competition caused a major company with ubiquitous products to compromise on their product leading to a situation where millions of products and customers are affected.

What is interesting is how the management didn’t seem to have consulted any tech departments before speeding up the chip design process to compete with ARM. That’s just bad, bad management and another case of managers thinking they are far more able than they actually are.

It’s not valid to use the “Let me set the scene: It’s late in 2013” quote as evidence that Intel may have or should have known of this vulnerability, or that vulnerabilities such as this were more likely.

That quote is evidence that Intel chose to scale back effort devoted to testing. That is an ordinary business decision that is not necessarily nefarious. My team constantly debates trade-offs of this nature: Does the effort that we invest in testing result in a commensurate improvement in the value of the product? Oftentimes it does not, and so it makes sense to reduce that effort, or use more effective ways of finding and removing bugs.

The date of the quote, 2013, is long after Intel and other companies began to use speculative execution. Why should we infer that reducing test effort, long after the important design decisions were made, would somehow be an argument that Intel should have found the problem sooner (or did find it and wasn’t telling anyone)?

Even if Intel decided to devote *more* effort to testing, I believe they would not likely have found this bug. Testing (seeks to) demonstrate that the design as implemented meets its specification. No amount of testing can demonstrate that the design is free of problems that we haven’t thought of yet.

I believe ‘should have known’ is the wrong frame for viewing these exploits. Even if security researchers warned in 1995 that speculative execution, as implemented, was suspect, it still took another two decades for someone to figure out an exploit that was actually usable. I can just see myself in front of our management saying “We can get a 30% speed improvement. The security guys are saying there may be a way it could be exploited, but no one has come up with a plausible scenario for that to happen. Shall we go ahead with the design?” No-brainer. I know what my management would say already.

Disagree; in light of their previous debacle, scaling back testing to meet a competitive push from ARM is a classic case of putting business interests before those of the end customer.

As far as not finding the bug…I point to Scott’s post of a white paper up-thread that points to possible issues with such a mechanism. From 1995. And while you do give an explanation for why this wouldn’t matter, that just seems like more excuse making.

This whole post comes across not only as an excuse for Intel’s flawed business practices, but as a criticism of the idea that we should expect better.

I don’t blame Intel engineers for this. I don’t agree with the sentiment that ‘they should have known’ or ‘they should have tried harder’.

A previous poster succinctly stated: “To engineer is human”. It is a fundamental truth that all complex designs have bugs. We expect a constant supply of increasingly sophisticated technology. You could say that our entire economic system depends on it.

Bugs are generally a result of unexpected interactions or couplings between components. As designs get more complex, the number of components increases, the desired interactions become more complex, and the number of possible unexpected interactions explodes exponentially.
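As a rough illustration of that growth, here is a minimal counting sketch. It is not a model of any real CPU: the function names are invented for this example, and it simply assumes that any group of two or more components could in principle interact.

```python
from math import comb

def pairwise_interactions(n: int) -> int:
    """Number of distinct pairs among n components (grows quadratically)."""
    return comb(n, 2)

def possible_interaction_sets(n: int) -> int:
    """Number of groups of two or more components: 2**n - n - 1 (grows exponentially)."""
    return 2 ** n - n - 1

# Hypothetical component counts, chosen only to show the trend.
for n in (5, 10, 20, 40):
    print(n, pairwise_interactions(n), possible_interaction_sets(n))
```

Even under this simplified assumption, going from 10 to 40 components takes the pair count from dozens to hundreds, while the number of possible multi-component groupings goes from about a thousand to about a trillion.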

Security bugs are the very hardest class of bugs to foresee, because you have an enemy actively seeking to find the interactions you hadn’t thought of. The Spectre and Meltdown bugs occur as a result of exploiting particularly surprising interactions with leftover side effects of instructions that should have had no effect. No, I don’t blame the Intel engineers for not anticipating that coupling.

So to those folk who believe they deserve a free replacement CPU: Really? The processor just does what it is specified to do. These speculative execution mechanisms are well known. You say Intel should have known of possible side effects. I could turn that around and say that buyers should have known that this exploit was possible. The CPU is operating according to its data sheet. We have all suddenly learned that it should have been a different data sheet.

Thanks crusty engineer for looking at this realistically. There is not enough money in the world to make anything foolproof. If you have a secret don’t put it on a computer. Don’t hire someone to do a crime. Don’t have sex when your wife is pregnant…..Someday it will come out…Stormy Daniels?

> There is not enough money in the world to make anything foolproof

I think you’re confusing “realism” with straw manning. Do better, Intel apologists.

> it still took another two decades for someone to figure out an exploit that was actually usable

Since resources were not devoted to the task, it would seem unlikely it would happen rapidly, no?

not only not unaware

Not to be too critical, but wow I don’t think I’ve ever seen a triple negative before. It was a little hard to get right reading it.

Thanks for covering this, Yves. And by all means, to my health care provider: Fire up them thar EHR’s!! What could possibly go wrong?

I consistently refuse to let my health provider or her staff email me test results, etc. I want a good old fashioned telephone call – I still have a landline, yeah, I know – and I get what I asked for. Her office is good that way.

O what? No mention of Intel’s hyperthreading bug?

https://www.theregister.co.uk/2017/06/25/intel_skylake_kaby_lake_hyperthreading/

Do you think the rot stops at cache-related problems (Spectre, Meltdown) alone? Amazing how the “free” press in the country doesn’t pass the smell test! Amazing.
