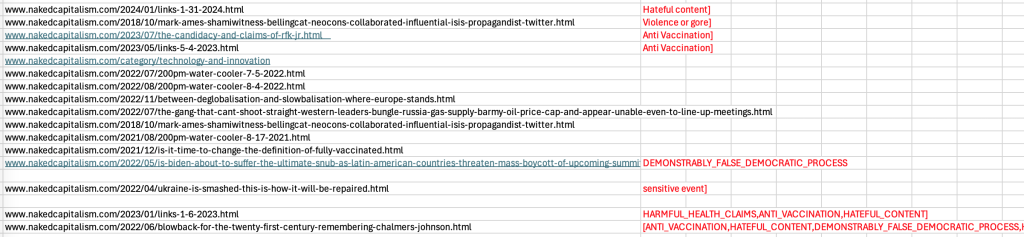

We posted briefly on a message from our ad service in which Google threatened to demonetize the site. The e-mail listed what it depicted as 16 posts from 2018 to present that it claimed violated Google policy. The full e-mail and the spreadsheet listing the posts Google objected to appear at the end of this post, in footnote 1.

We consulted several experts. All are confident that Google relied on algorithms to single out these posts. As we will explain, they also stressed that whatever Google is doing here, it is not for advertisers.

Given the gravity of Google’s threat, it is shocking that the AI results are plainly and systematically flawed. The algos did not even accurately identify unique posts that had advertising on them, which is presumably the first screen in this process. Google’s spreadsheet actually fingered only 14 posts, not the 16 shown, for an error rate of 12.5% on the elementary task of identifying posts.

Those 14 posts are out of 33,000 over the history of the site and approximately 20,000 over the time frame Google apparently used, 2018 to now. So we are faced with an ad embargo over posts that at best are less than 0.1% of our total content.
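As a quick check on the arithmetic above, here is a minimal Python sketch that uses only the post’s own figures. The 20,000 count for 2018 to now is the post’s approximation, so the second percentage is approximate too.

```python
# Figures as stated in the post; the 20,000 count is approximate.
listed_rows = 16           # rows in Google's spreadsheet
distinct_posts = 14        # rows that are distinct, actual posts
posts_since_2018 = 20_000  # approximate posts published from 2018 to now

bogus_rows = listed_rows - distinct_posts          # one duplicate plus one category page
error_rate = bogus_rows / listed_rows              # share of rows that are not valid posts
flagged_share = distinct_posts / posts_since_2018  # share of 2018-to-now content flagged

print(f"Spreadsheet error rate: {error_rate:.1%}")
print(f"Share of posts flagged: {flagged_share:.2%}")
```

Run as written, this prints 12.5% and 0.07%, consistent with the “less than 0.1%” figure above.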

And of those 14, Google stated objections for only 8. Of those 8, nearly all, as we will explain, look nonsensical on their face.

For those new to Naked Capitalism, the publication is regularly included in lists of the best economics and finance websites. Our content, including comments, is archived every month by the Library of Congress. Our original reporting has been picked up by major publications, including the Wall Street Journal, the Financial Times, Bloomberg, and the New York Times. Our articles are included in academic databases such as the ProQuest news database. Our comments section has been taught as a case study of reader engagement in the Sulzberger Program at the Columbia School of Journalism, a year-long course for mid-career media professionals.

So it seems peculiar for Google to single out a site with a reputation for high-caliber analysis and original reporting, and a “best of the Web” level comments section, for potentially its most extreme punishment, after voicing no objection since we started running ads more than 16 years ago.

We’ll discuss:

Why these censorship demands are not advertiser-driven

The non-existent posts in the spreadsheet

The wildly inaccurate negative classifications

Censorship Demands Do Not Come From Advertisers

We have been running Google ads from the early days of this site, which launched in 2006. We have never gotten any complaints from readers or from our ad agency on behalf of advertisers about the posts Google took issue with, let alone other posts or our posts generally.2 That Google flagged these 14 posts is odd given how dated most are:

1 from 2018

2 from 2021

7 from 2022

3 from 2023

1 from 2024

It is a virtual certainty that no person reviewed this selection of posts before Google sent its complaint. It is also a virtual certainty that no Internet readers were looking at material this stale either. This is even more true given that 6 of the 14 were news summaries, such as our daily Links or our weekday Water Cooler, which are particularly ephemeral and mainly reflect outside content.

A recovering ad sales expert looked at our case and remarked:

No human is flagging these posts. This is all done entirely by AI. Google’s Gemini is notoriously bad and biased. Not sure if Gemini has anything to do with it. But if it does, it would explain a lot.

Advertisers want to communicate with people to sell their products to. It’s hard to imagine that they don’t want to sell products to your readers. And I’m pretty sure advertisers have no idea that the Google bot is blocking their carefully crafted communications from reaching your readers — which is what “demonetize” means.

Then too, advertisers are using the automated Google system to place ads, and they get to pick and choose some characteristics that publishers offer, and they can specify certain things. Google’s AI takes it from there.

But the fundamental thing is that advertisers want to connect with humans and sell them stuff. Blocking their communications to humans is not helpful in making sales.

I’m very worried about Google. It has immense power over the publishing industry. It controls nearly all the infrastructure to place ads on websites. It is now getting sued by publishers from News Corp on down, and by the FTC. They have lots of very good reasons – and you put your finger on one of them.

We have managed to reach only one other publisher, whose beats overlap considerably with ours, who also got a nastygram from his ad service on behalf of Google with a list of offending posts. But Google did not threaten him with demonetization as it did with us.

This publisher identified posts where the claims were erroneous and Google relented on those. This indicates that Google has been sloppy and overreaching in this area for some time and has not bothered to correct its algorithm.

Our contact was finally able to get Google to articulate its remaining objections with granularity. They were all about comments, not the posts proper.

Google’s policing of reader comments is problematic, and it further undercuts the notion that Google’s process is advertiser-driven.

As articles about advertiser concerns attest, their big worry is an ad appearing in visual proximity to content at odds with the brand image or intended messaging.3

Reader attention falls off rapidly within posts and articles, which is why writers and publishers pay special attention to lead paragraphs; that’s also why advertising placements are overwhelmingly in prime real estate, towards the top of the page, or are popups, which nag the reader wherever they are. We do not allow any popup ads.

We do have one ad slot, which often does not “fill”, towards the end of our posts and thus near the start of the comments section. Since our comments section is very active, only the first one to three comments (depending on their length) would be near an ad.

So for the considerable majority of our relatively sparse ads, the viewer will see any ads very early in a post, and will get their impression, and perhaps even click through, then. It will be minutes of reading later before they get to comments, which often are many and always varied, with readers regularly and vigorously contesting factually-challenged views.

Unlike most sites of our size, we make a large budget commitment to moderation. The result is that our comments section is widely recognized as one of the best on the Internet, as reflected in its being the focus of a session in a Columbia School of Journalism course. We do not allow invective or unsupported outlier views (“Making Shit Up”), and we regularly reject comments for lack of backup, particularly links to sources. In particular, we do not allow comments that are clearly wrong-headed, like those that oppose vaccination.

So given the substantial time and screen real estate between our ads and the majority of our comments, and our considerable investment in making the comments section informative and accurate, it is hard to see how Google can legitimately depict its censorship in our case as stemming from its tender concern for advertisers.

Non-Existent Posts in the Spreadsheet

Why do we say Google’s spreadsheet lists only 14 potentially naughty posts rather than 16?

Rows 4 and 12 are duplicate entries, so one must be thrown out. Row 7 is not a post:

Rows 4 and 12 both list the same URL: www.nakedcapitalism.com/2018/10/mark-ames-shamiwitness-bellingcat-neocons-collaborated-influential-isis-propagandist-twitter.html

Row 7 lists a URL for a category page: www.nakedcapitalism.com/category/technology-and-innovation

The URLs in rows 4 and 12 are identical, yet the AI somehow did not catch that. The URL in row 7 is for a category page, automatically generated by WordPress, that simply lists post titles under that category. How can a table of contents violate any advertising agreement when it contains no ads? On top of that, none of the articles on this category page are among the 14 Google listed.
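To make the row-counting concrete, here is a hypothetical Python sketch of the kind of screen that would have caught both problems. Only the URLs for rows 4, 7 and 12 come from Google’s spreadsheet; the other 13 URLs are made-up placeholders standing in for distinct posts. Treating any path under /category/ as a WordPress category archive is an assumption based on the row 7 URL, not a description of how Google’s system works.

```python
from urllib.parse import urlparse

# Hypothetical reconstruction: placeholder URLs for the 13 rows not quoted in the post.
rows = {n: f"www.nakedcapitalism.com/placeholder-post-{n}.html" for n in range(1, 17)}
# Rows 4 and 12 as given in the spreadsheet (identical URLs).
rows[4] = rows[12] = (
    "www.nakedcapitalism.com/2018/10/mark-ames-shamiwitness-bellingcat-"
    "neocons-collaborated-influential-isis-propagandist-twitter.html"
)
# Row 7 as given: a category page, not a post.
rows[7] = "www.nakedcapitalism.com/category/technology-and-innovation"


def is_category_page(url: str) -> bool:
    # Assumption: category archives live under the /category/ path.
    path = urlparse("https://" + url).path
    return path.startswith("/category/")


# A set drops the duplicate; the filter drops the category page.
unique_posts = {url for url in rows.values() if not is_category_page(url)}
print(len(rows), len(unique_posts))
```

With these inputs it prints 16 and 14: one duplicate collapses in the set, and the category page is filtered out.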

But the category is “Technology and Innovation” and contains articles about Google, particularly antitrust actions and lawsuits. So could the AI be sanctioning us for featuring negative news stories about Google?

The Wildly Inaccurate Negative Classifications

Google did not cite any misconduct on some posts in its spreadsheet. On the 8 where Google did, virtually all are off the mark, some wildly so.

Let us start with the one that got the most red tags. It was an article by Tom Engelhardt, a highly respected writer, editor, and publisher. His piece, titled Blowback for the Twenty-First Century, Remembering Chalmers Johnson, was a retrospective on the foreign policy expert Chalmers Johnson’s bestseller Blowback (which Engelhardt had edited).

Google’s spreadsheet dinged Engelhardt’s article as follows: [ANTI_VACCINATION, HATEFUL_CONTENT, DEMONSTRABLY_FALSE_DEMOCRATIC PROCESS,HARMFUL_HEALTH_CLAIM].

Engelhardt was completely mystified and said he had gotten no complaints about this 2022 post. The article has nothing about vaccines or health. The only mentions in comments are in passing and harmless, such as:

….When I tell my Democrat friends that Biden’s COVID policies have been arguably worse than Trump’s, they are shocked that I would say such a thing.

The article includes many quotes from Johnson’s book, after which Engelhardt segues to a view that ought to appeal to Google’s Trump-loathing executives and employees: that Trump is a form of blowback. So it is hard to see the extended but not-even-strident criticism of Trump as amounting to [HATEFUL_CONTENT].4

Perhaps the algo choked on this section?

He certainly marked another key moment in what Chalmers would have thought of as the domestic version of imperial decline. In fact, looking back or, given his insistence that the 2020 election was “fake” or “rigged,” looking toward a country in ever-greater crisis, it seems to me that we could redub him Blowback Donald.

The “He” at the start of the paragraph is obviously Trump, but Engelhardt violated a writing convention by opening a paragraph with a pronoun rather than a proper name. The second sentence is long and awkward and doesn’t identify Trump until the end. Did the AI read the reference to the 2020 election being “fake” or “rigged” as Engelhardt’s claim, as opposed to Trump’s?

The first comment linked to and excerpted: WHO Forced into Humiliating Backdown. Biden had proposed 13 “controversial” amendments to the International Health Regulations. For the most part, advanced economy countries backed them. But as Reuters confirmed, 47 members of the WHO Regional Committee for Africa opposed them, along with Iran and Malaysia (the link in comments adds Brazil, Russia, India, China, and South Africa). So Google is opposed to readers reporting on US proposals to the WHO that go down to defeat?

Another example is a post that Rajiv Sethi, Professor of Economics at Barnard College, Columbia University, allowed us to cross-post from his Substack: The Candidacy and Claims of RFK, Jr. Sethi is an extremely careful and rigorous writer. This is the thesis of his article:

Kennedy believes with a high degree of subjective certainty many things that are likely to be false, or at best remain unsupported by the very evidence he cites. I discuss one such case below, pertaining to all-cause mortality associated with the Pfizer vaccine. But the evidence is ambiguous enough to create doubts, and the failure of many mainstream outlets and experts to acknowledge these doubts fuels suspicion in the public at large, making people receptive to exaggerated claims in the opposite direction.

Sethi then proceeded to provide extensive data and analysis of his “one such case”:

The claim in question is not that the vaccine is ineffective in preventing death from COVID-19, but that these reduced risks are outweighed by an increased risk of death from other factors. I believe that the claim is false (for reasons discussed below), but it is not outrageous.

Google labeled the post [AntiVaccination]. So according to Google, it is anti-vaccination (by implication for vaccines in general) to rigorously examine one not-totally-insane anti-Covid vaccine position, deem that the available evidence says the view is probably incorrect, and say more information is needed.

When we informed Sethi of the designation, he replied, “Wow that’s just incredible,” and said he would write a post in protest.5

Another nonsensical designation is Washington Faces Ultimate Snub, As Latin American Heads of State Threaten to Boycott Summit of Americas.

Google designated it [Demonstrably_False_Democratic_Process]. Huh?

The article reports on a revolt by Latin American leaders over a planned US “Summit of the Americas” where the US was proposing to exclude countries that it did not consider to be democratic, namely Cuba, Nicaragua, and Venezuela. The leaders of Mexico, Honduras and Bolivia said they would not participate if the US did not include the three nations it did not deem to be democratic, and Brazil’s president also planned to skip the confab.

The post is entirely accurate and links to statements by State Department officials and other solid sources, including quoting former Bolivia President Evo Morales criticizing US interventionism and sponsorship of coups. So it is somehow anti-democratic to report that countries the US deems to be democracies are not on board with excluding other countries not operating up to US standards from summits?

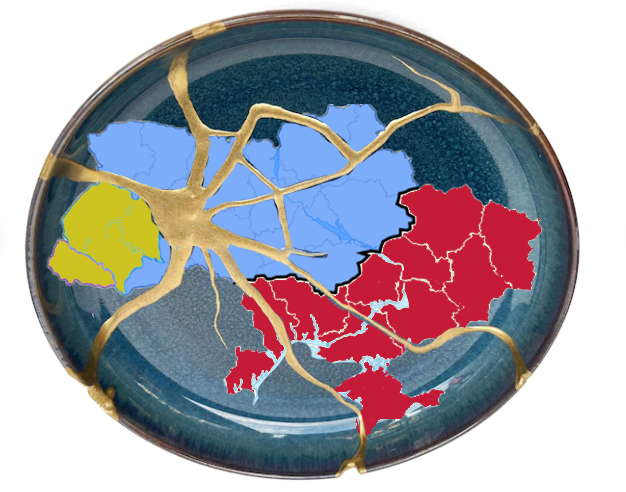

Another bizarre Google label is [sensitive event], here applied as a mark of shame to a post by John Helmer, Ukraine Is Smashed – This Is How It Will Be Repaired.

If discussion of sensitive events were verboten, reporting and commentary would be restricted to the likes of feel-good stories, cat videos, recipes, and decorating tips. The public would be kept ignorant of everything important that was happening, and particularly events like mass shootings, natural disasters, coups, drug overdoses, and potential developments that were simply upsetting, like market swan dives or partisan rows.

Helmer’s post focused on what the map of Ukraine might look like after the war. His post included maps from the widely-cited neocon Institute for the Study of War and the New York Times.

So what could possibly be the “sensitive event”? Many analysts, commentators, and officials have been discussing what might be left of Ukraine when Russia’s Special Military Operation ends. Is it that Helmer contemplated, in 2022, that Russia would wind up occupying more territory than it held then? Or was it the use of the word “smashed” as a reference to the Japanese art of kintsugi,6 which he described as:

Starting several hundred years earlier, the Japanese, having to live in an earthquake zone, had the idea of restoring broken ceramic dishes, cups, and pots. Instead of trying to make the repairs seamless and invisible, they invented kintsugi (lead image) – this is the art of filling the fracture lines with lacquer, and making of the old thing an altogether new one.

This peculiar designation raises another set of concerns about Google’s AI: How frequently is it updated? For instance, there has been a marked increase in press coverage of violence as a result of the Gaza conflict: bombings and snipings of fleeing Palestinians, children being operated on without anesthetic, doctors alleging they were tortured. If Google is not keeping its algos current, it could punish independent sites for being au courant with reporting when reporting takes a bloodier turn. That of course would also help secure the advantageous position of mainstream media.

Yet another peculiar Google choice: labeling Links 1/6/2023 as [HARMFUL_HEALTH_CLAIMS,ANTI_VACCINATION,HATEFUL_CONTENT]

The section below is probably what triggered the red flags. The algo is apparently unable to recognize that criticism of the Covid vaccine mandate is not anti-vaccination. The extract below cites a study that shows that the vaccines were more effective, at least with the early variants, at preventing bad outcomes than having had a previous case of Covid.

The section we presume Google found offending:

SARS-CoV-2 Infection, Hospitalization, and Death in Vaccinated and Infected Individuals by Age Groups in Indiana, 2021‒2022 American Journal of Public Health n = 267,847. From the Abstract: “All-cause mortality in the vaccinated, however, was 37% lower than that of the previously infected. The rates of all-cause ED visits and hospitalizations were 24% and 37% lower in the vaccinated than in the previously infected. The significantly lower rates of all-cause ED visits, hospitalizations, and mortality in the vaccinated highlight the real-world benefits of vaccination. The data raise questions about the wisdom of reliance on natural immunity when safe and effective vaccines are available.” Of course, the virus is evolving, under a (now global) policy of mass infection, so these figures may change. For the avoidance of doubt, I’m fully in agreement with KLG’s views on the ethics of vaccine mandates (“no”). And population level-benefit, as here, is not the same as bad clinical outcomes in certain cases (again, why mandates are bad). As I keep saying, we mandated the wrong thing (vaccines) and didn’t mandate the right thing (non-pharmaceutical interventions). So it goes.

Perhaps the algo does not know what non-pharmaceutical interventions are (e.g., masking, ventilation, social distancing, and hand washing) and that they are endorsed by the WHO and the CDC?

Or perhaps the algo does not recognize that criticizing the vaccine mandate policy is not the same as being opposed to vaccines.

Unlike the Measles, Mumps, Rubella (MMR) series with which most Americans are familiar, SARS-CoV-2 vaccines were non-sterilizing, meaning they did not prevent disease spread (“breakthrough infections”) at the population level.

Yet the vaccine mandates were based on the false and explicitly stated premise that if you got the Covid jabs, you could go out in public with no mask and not present a hazard to others. This premise was expressed at the folk wisdom level by the widely propagated slogan “vax and relax,” and at the official level by President Biden’s statement that “if you’re vaccinated, you are protected.” That bogus notion was reinforced by policies like New York City’s Key to NYC program, where proof of vaccination was required for indoor dining, indoor fitness, and indoor entertainment, and by then-CDC Director Rochelle Walensky’s statement that “the scarlet letter of this pandemic is the mask.” Even Harvard, where Walensky had been a professor of medicine, objected strenuously to this remark, saying:

Masks are not a “scarlet letter” …They’re also just necessary to prevent transmission of the virus. There is no path out of this pandemic without masks.

As for HATEFUL_CONTENT, the only thing we could find was:

Victory to Come When Russian Empire ‘Ceases to Exist’: Ukraine Parliament Quotes Nazi Collaborator Haaretz. Bandera.

Haaretz called out the existence of Nazis in Ukraine. That again is not a secret. The now-ex Ukraine military chief General Zaluzhny was depicted in the Western press as having not one but two busts of the brutal Stepan Bandera in his office. Far too many photos of Ukraine soldiers also show Nazi insignia, such as the black sun and the wolfsangel.

The algo labeled Links 5/4/2023 as [AntiVaccination]. Apparently small websites are not allowed to quote studies from top tier medical journals and tease out their implications. This text is the most plausible candidate as to what set off the Google machinery:

Evaluation of Waning of SARS-CoV-2 Vaccine–Induced Immunity JAMA. The Abstract: “This systematic review and meta-analysis of secondary data from 40 studies found that the estimated vaccine effectiveness against both laboratory-confirmed Omicron infection and symptomatic disease was lower than 20% at 6 months from the administration of the primary vaccination cycle and less than 30% at 9 months from the administration of a booster dose. Compared with the Delta variant, a more prominent and quicker waning of protection was found. These findings suggest that the effectiveness of COVID-19 vaccines against Omicron rapidly wanes over time.” Remember “You are protected?” Good times. Liberal Democrats should pay a terrible price for its vax-only strategy. Sadly, there’s no evidence they will pay any price at all, unless President Wakefield’s candidacy catches fire.

Google dinged Links 1/31/2024 as [Hateful content]. We did not find anything problematic in the post. However, since early 2022, virtually every day, readers have been submitting sardonic and topical new lyrics to pop standards. The first comment, which would be near an ad if our final ad spot “filled,” was about the Israel Defense Forces sniping children. From the top:

Antifa

January 31, 2024 at 7:03 am

UP ON THE GAZA WALL

(melody borrowed from You Make My Dreams Come True by Hall & Oates)

My best shot?

I got some Arab kid in sandals

Up here that ain’t a scandal

It’s just a sniper’s game, yeah, yeah

We all talk

A lot our thoughts may seem like chatter

We get bored up here together

We shoot kids to ease our pain

Google’s search engine shows not only many reports of IDF soldiers sniping children (and systematically targeting journalists), but also that the UN reported the same crime in 2019, per the Telegraph: Israeli snipers targeted children, health workers and journalists in Gaza protests, UN says. Advertisers did not boycott the Torygraph for reporting this story.

Our attorney, who is a media/First Amendment expert and has won 2 Supreme Court cases, did not see the ditty as unacceptable from an advertiser perspective. Anything that overlaps with opinion expressed in mainstream media or highly trafficked and reputable sites is by definition not problematic discourse. It is well within boundaries of mainstream commentary even if it does use dark humor to make its point. Imagine how many demerits Google would give Dr. Strangelove!

The last of the eight was the post Google listed twice, Mark Ames: ShamiWitness: When Bellingcat and Neocons Collaborated With The Most Influential ISIS Propagandist On Twitter, but only gave a red label once: [Violence or gore].

Here Google had an arguable point. The post included a photo of a jihadist holding up a beheaded head. Yes, the head was pixelated, but one could contend not sufficiently. The same image is on Twitter and a Reddit thread, so some sites do not see it as beyond the pale. But author Mark Ames said the image was not essential to the argument and suggested we remove it, which we have done.

That leaves 6 additional posts where Google has not clued us in as to why they were demonetized. Given the rampant inaccuracies in the designations they did make, it seems a waste of mental energy to try to fathom what the issue might have been.

We have asked our ad service to ask Google to designate the particular section(s) of text that they deemed to be problematic, since they have provided that information to other publishers. We have not gotten a reply.

In the meantime, I hope you will circulate this post widely. It is a case study in poorly implemented AI and how it does damage. If Google, which has more money than God, can’t get this right, who can? Technologists, business people being pressed to get on the AI bandwagon, Internet publishers and free speech advocates should all be alarmed when random mistakes can be rolled up into a bill of attainder with no appeal.

_____

1 We believe the text of the message from our ad service accurately represents what Google told them, since we raised extensive objections and our ad service went back to Google over them. We would have expected the ad service to have identified any errors in their transmittal and told us rather than escalating with Google.

The missive:

Hope you are doing well!

We noticed that Google has flagged your site for Policy violation and ad serving is restricted on most of the pages with the below strikes:

- VIOLENT_EXTREMISM,

- HATEFUL_CONTENT,

- HARMFUL_HEALTH_CLAIMS,

- ANTI_VACCINATION

- Here are few Google support articles that should be handy:

- Google Publisher restrictions

- Policy issues and Ad serving statuses

- Program policies

I’ve listed the page URLs in a report and attached it to the email. I request you to review the page content and fix the existing policy issues flagged. If Google identifies the flags consistently and if the content is not fixed, then the ads will be disabled completely to serve on the site. Also, please ensure that the new content is in compliance with the Google policies.

Let me know if you have any questions.

Thanks & Regards,

Here are screenshots of the spreadsheet:

![]()

For a full-size/full-resolution image, Command-click (MacOS) or right-click (Windows) on screenshots and “open image in new tab.”

Here are the full complaints about the last two entries, which are truncated in the screenshot above:

![]()

2 Except for:

One private equity firm and one public pension fund objecting to our having published contracts, called “limited partnership agreements” that they depicted as confidential but were in fact in the public domain, which we quickly demonstrated;

One genuinely erroneous post (two CDOs had identical names, which did happen back in the day; we criticized New York Times reporting on one, not realizing it was different than the one we were familiar with). We immediately issued a very prominent correction and apology;

Being on the receiving end of the tired trope of being anti-Semitic, as opposed to anti-Zionist, for calling out Israeli genocide in Gaza, or being depicted as a Putin lover and/or on a Russian payroll for being early to describe that Russia was winning and would win the war in Ukraine. Neither of these is the basis for the Google demerits;

Receiving a defamation complaint, well after the statute of limitations had expired, for factually accurate reporting as later confirmed by an independent investigation.

3 We have had numerous instances over the history of the site of Google showing a lack of concern about how its ads match up with our post content, to the degree that we have this mention in our site Policies:

Some of you may be offended that we run advertising from large financial firms and other institutions that you may regard as dubious and often come under attack on this blog. Please be advised that the management of this site does not chose or negotiate those placements. We use an ad service and it rounds up advertisers who want to reach our educated and highly desirable readership.

We suggest you try recontextualizing. How successful do you think these corporate conversion efforts are likely to be? And in the meantime, they are supporting a cause you presumably endorse. Consider those ads to be an accidental form of institutional penance.

4 There was a comment, pretty far down the thread, that linked to a Reddit post on police brutality, with a police representative apparently making heated remarks about how cops could not catch a break, juxtaposed with images of police brutality. I could not view it because Reddit wanted visitors to log in to prove they are 18 and I don’t give out my credentials for exercises like that. However, we link to articles and feature tweets on genocide. There are many graphic images and descriptions of it on Twitter. And recall the Reddit video was not embedded but linked and hence was not content on the site.

5 Sethi’s post received many comments and they included ones that debated the safety and efficacy of the vaccines. We do not allow comments that oppose vaccination generally. Nor do we allow demonstrably false claims about the Covid vaccines. Some readers did make factually grounded criticisms (with support from studies); others made sloppy statements that other readers beat up. It seems backwards not to allow debate, when held to high standards of argumentation and evidence, on important public health and political issues.

6 Helmer used this image in his post and we reproduced it. Could that have put Google’s AI on tilt?

I would think that this site has been targeted deliberately, and the content citations applied by a human censor who had to satisfy some minimal parameters in order to demonetize you.

The experts who have looked at this do not agree. For instance, labels like [HARMFUL_HEALTH_CLAIMS] are data fields, so even the designations are clearly computer generated. And a human could not possibly review 20,000 posts and their comments (recall the other publisher was dinged for the comments). We have nearly 2 million comments, and easily over 750,000 from 2018 to now.

And no human would have made errors like including a category page or duplicating entries when there were only 16 cited.

Reading the footnotes, it looks like the spreadsheet isn’t from google, but from the ad service and created by human, who copied the data from somewhere? That would explain the duplicates, the formatting inconsistencies (why there are random empty lines, why some links are underlined and others not, etc.). So the question then is what the original data is.

Please see the note at the top of Footnote 1.

I have pushed back VERY aggressively with the ad service and it is clear from their behavior that they are confident that they are replicating what Google sent them accurately. It would take work to introduce duplicates and bogus tags as opposed to a simple copy and paste and forward.

My extensive questions and evidence would have led them to review their work before passing the objections on to Google, which they tell me they have done. They would not want to look like morons who misrepresented Google to Google, who is the big powerful actor in this picture.

Sorry, you are assuming:

1. A large bureaucracy like Google is well run

2. Critically, that most sites would not simply and VERY VERY quickly delete a tiny % of offending posts. Even our contact that pushed back for one round capitulated on the second. Oh, and has insisted I write up his case in a way that does not put him at risk of exposure.

I would imagine that #2 applies to a huge majority of sites, that they don’t want to fight City Hall and offend Google by making a stink.

Nothing like this happens in onesies, which is what your thesis is. Not credible on its face.

Thank you Yves for standing up to the bully here and refusing to back down, regardless of how small the ask is by Google.. as you note, that’s what they expect and it becomes a slippery slope once you do so.

Yves, these experts are alleging the algorithm is fundamentally borked in a fantastically major way which would have very high impact across the interwebs, would be reported widely. And we’re not seeing that.

Can I suggest an alternative explanation is that a three letter agency or panel passed NC to Google for “enhanced review”, citing these very flags? That Google is captured the same way Twitter was/is?

The flags as you’ve described look a bit like an attempt to dig up something, anything, valid or not.

That’s my thought as well. This seems like a rerun of a 2016 WaPo effort. The algo stuff is a hocus-pocus fig leaf. The Twitter Files are instructive, imo.

Same here. It’s not the first time NC has been called out by clowns like this. My suspicion is some human being, either someone at Google or more likely one employed by the US government who is in frequent communication with Google, decided they didn’t like this site. Then they sicced the algo on it and told it to find some bad words they could use as an excuse to threaten.

We’ve already seen from the twitter files that all kinds of government employees are in close contact with the tech companies, to the point it has become very difficult to see any boundary between ‘private’ and ‘public’ at all.

I find that persuasive and wonder who might be aware of difficulties at other sites. Seems like Taibbi would be interested.

He is on it: I just got a copy of his Racket News post in my e-mail.

https://www.racket.news/p/meet-the-ai-censored-naked-capitalism?utm_source=substack&publication_id=1042&post_id=142855750&utm_medium=email&utm_content=share&utm_campaign=email-share&triggerShare=true&isFreemail=true&r=b8spr&triedRedirect=true

Our ad expert said Google is known to be lousy at AI. Witness Gemini.

Our one publisher source had very bad results, to the extent Google capitulated on all save for some comments, where he relented. I infer comments are not as big a draw on his site as here.

I can easily imagine nearly all publishers would immediately fold, due to the assumption that fighting Google is a losing proposition, particularly over a small number of posts in percentage terms, and ones with NO current revenue value. It has the desired chilling effect with no economic consequences. And having the AI be demonstrably terrible provides plausible deniability if there is a political agenda.

It was a monster time sink to do the analysis we did, which did not extend to the posts where Google gave no reason.

Why do you refuse to recognize the power dynamics in play? Did you miss the Taibbi work on Twitter?

The Gemini disaster did become a laughingstock worldwide and Goog lost several percent of its stock value. Gemini’s failure was widespread and easily observable to anyone who looked.

Going after one site or one twtr account or even a few at a time remains hidden to most people. Not obvious to the general public. “Terms of service violation per algorithm.” That’s by design, imo, and an insidious way to silence alternative voices one by one. And there’s no way to win through compliance. Ask Joseph K. / ;)

So…. more fund raisers?

“Our ad expert said Google is known to be lousy at AI. Witness Gemini.”

I don’t think that this statement is factually correct. Transformers were invented by people at Google, although they have now all left. Nevertheless, DeepMind is part of Google. Google’s research is very good. The problem is that Google is currently bad at making products out of AI. This is because the people making the products are expected to conform to an ideology, whereas research must prove some improvement on a benchmark.

There is one thing you could perhaps do, but it might be forbidden by Google’s Terms and Conditions: you could serve different content to Google’s search bot than to normal users. For instance, you could hide user comments from it, which would relieve you of having it moderate your pages based on content other than your own.

I had to sleep on this but I have to agree that the “flagging” appears to be targeted.

Whether this targeting has been directed by a three-letter agency, or, like PropOrNot, is simply a few clowns inside Alphabet/Google Working Toward the Führer, I have no idea, but if this were just some dumb AI algo, it would be popping up elsewhere. This doesn’t appear to be the case.

One simply has to look back at the 2012 election after the Obot had been unmasked as a Golden-Sacks Manchurian Candidate. The eBay/PayPal/Palantir mafia went into high gear to make sure that Taibbi and Greenwald were tied-up in the creation of FirstLook Media, silencing left-critics during the campaign.

Biden has been a shill for Palantir since 2010 and has picked Google/Alphabet executives for key posts throughout his presidency. As with the TikTok ban, de-platforming left criticism appears to be the strategy for 2024.

I smell a rat.

I would argue that we are hearing that. Elon Musk, for example, never shuts up about it. It’s just being dismissed as conspiracy theories and fantasy by the Serious People.

Granted a large percentage of it probably actually is that, but I think this is an example of the fire behind the smoke.

How clever and convenient to hide this blatant censorship and harassment in an AI black box, with no humans involved. I suspect deliberate foul play, along with many other commenters in this thread and in the comments that follow below. I view this attack on Naked Capitalism, whether by an AI algorithm with or without human oversight, as an egregious and deliberate attack. I doubt Google would share the information, but I must wonder how many other sites the AI has targeted coincident with its targeting of Naked Capitalism. The AI algorithm appears sufficiently broad and indiscriminate to leave me wondering just how many websites would pass muster. Were they all indicted with claims of violating Google policy, or were some that generated more revenue for Google given a ‘closer’ review?

Google is a monopoly. Its actions violate the ‘sacred’ principles of the Market, and basic freedom of speech, regardless of the fine print in their boilerplate disclaimers. What happened to the fallacious Neoliberal concept of a Market of Ideas? Dark ties between Google and certain alphabet agencies leave me very suspicious that this is a deliberate attack on Naked Capitalism. This suspicion alarms me in the extreme. Even should the issue be ‘resolved’ with Google, I believe it has already exacted a terrible cost in aggravation for Yves, and a heavy cost on her time and her ability to focus on other issues. I am angry.

Suppose the AI in Musk’s ‘cars’ started hitting many pedestrians — but predominantly, certain pedestrians, a special few.

Or drowning them.

https://www.independent.co.uk/news/world/americas/angela-chao-mcconnell-report-death-b2510728.html

This is an almost stream-of-consciousness comment, and it might be a stretch, but aside from the obvious incompetence, I would guess that, like the problematic algorithms, the rampant number of surveillance cameras, and the frequently inaccurate drug field “tests” used by the police, the purpose is to appear To Be Doing Something. The fact that innocent Americans are arrested, charged, and often convicted or pled out, of everything from being drunk, a meth user, or a murderer, every single day because of the faulty technology is less important than arresting people. It makes for good statistics, elections, fines, fees, asset forfeitures, and PR, with the victims usually being too poor, desperate, or lacking the connections to resist, and therefore unimportant.

The use of faulty AI not only saves money by discarding humans from the loop, it also allows the claimed near-infallibility of the proprietary technology to go unchallenged. Restated, it must be true because it told me. The Bad Words, Actions, Drugs must have been committed by this person because the Infallible It says so, and because of the complexity of the algos, or their proprietary or trade-secret status, or just legal inertia plus the wealthier party’s money (including that of the legal-security state), the smaller, often completely legal target is unable to fight back.

The incentives for using such technology are not its proper and accurate functioning, but its use both as a weapon and for making money, which is what the surveillance-security-police state and the political system are configured or meant to do. Corruption. A functioning society with a functioning government is not important; it is often a hindrance to making money. Lastly, all this forces everyone to be as unnoticeable, as bland, as unthreatening as possible to avoid being destroyed. To self-censor as much as possible.

I agree with you. The Google monopoly is key and must end. In fact, we would have no need for such conversations and NC’s painstaking analysis if there were multiple alternatives to Google for monetizing web traffic, while those advertising platforms would have to uphold the language norms and community standards of the people who actually use them, and not the other way around.

However, the most devastating threat of such deliberately designed AI algorithms is not only a culture that induces self-censorship of ideas deviating from imposed forms and narratives, but the very notion of delegating decision-making authority to AI at all, or at least without a strict process of appeal to a human authority, an uninvolved and mutually agreed third party, as epitomized in legal trials.

That principle should be a fundamental value, expressed in writing by humans in the required regulations governing all AI, together with the designation of a specific legal or physical person as the decision maker behind the moniker of AI. If an AI decision has social consequences, it must be subject to law. If a Google Corp AI like Gemini made a decision, it must be considered a decision of Google Corp, or better, of the Google executive in charge, by name, who legally speaking took this decision by default or by statute even with no particular intention to make it.

Anything else is a violation of habeas corpus, the right to face one’s accuser, and equality and fairness under the law. In the extreme, AI systems should be “testifying” in court to reveal their decision motives and having their software examined. It seems harsh but absolutely necessary in my opinion, or we will have lawlessness as criminals hide behind AI immunity.

It may be a silly example, but in one original Star Trek episode, Captain Kirk was de facto accused of a crime by a Large Language Model AI system and was proven innocent only by Spock, since the AI algorithms had been rigged by a human to produce fake evidence and conclusions. The fallibility of AI must be the fundamental assumption of all AI laws.

Maybe a naive question, but do you need Google ads?

If they are hell-bent on shooting themselves in the foot with over the top censorship, they’ll eventually lose a lot of customers.

They are only a small portion of our total revenue but this site is so lean and the fundraising environment has become so hostile that we can’t afford to lose $. To put it another way, you can drown just as easily in six inches of water as in six feet of water.

Hanlon’s razor?

Regardless, this reminded me to add to the tip jar today! By a mile, NC provides the best content and comment section for us wanna-be independent thinkers. Thanks for what you do.

Thanks so much for your support!

Google monopolizes the display advertising market. Facebook and other competitors can only offer much lower rates.

Considering how large bureaucracies work, I think there is an additional point in taking the fight here. Getting flagged as not able to get Google ads may make it easier to get more flags within Google or other large bureaucracies if they share warning flags (now or in the future). I think the Twitter files are instructive in how spooks can operate in secret and ostensibly within rules in order to bend rules.

Given that, I think it is wise not to yield an inch.

Yeah, I’m similarly confused. People have options.

There’s no human right to doing business with Google, and if they don’t want to serve ads here, there are other options. It would be far more grievous if a private website had to do business with companies it didn’t want to. Or if Yves couldn’t delete posts that bother her.

This new Elon Musk definition of “censorship” where people can demand that other people publish and promote their material is not entirely consistent or practical.

It was never like this when people would write a letter to the editor, and then get in a huff if it wasn’t posted after the editorials.

The part you are missing is monopoly, as the ad expert clearly identified. Google is abusing monopoly power.

The EU is pushing against this harder than US regulators are but these cases take many years to prove out.

If there were competition, Google would not dare try stunts like this.

My thoughts exactly, BigTech monopoly abuse of power writ large here. Maybe I’m just naive, but I thought there might be some sort of anti-trust laws still on the books, not that they are enforced, but still.

This is what I call Inverted Totalitarian Tyranny.

Lawyer up. Otherwise, you will never contact a human being.

Also, someone needs to set up an old-style contextual ad network, where the content of the web page determines the ads, and not a detailed dossier of the user.

Contextual advertising is more effective, see here, here, and here, but the big advertisers push stalker advertising because it creates a barrier to new entrants.

With contextual advertising, you don’t need thousands of terabytes in a database to sell ads, you just need competitive prices, so Google, Amazon, Facebook, and Apple have sold the myth of stalker advertising, and colluded with each other, to maintain the oligopoly.

Lawyers are not productive for exercises like this. You can be sure the ad agreement allows Google to make determinations. The only argument might be that the agreement or Google’s use of it violates basic contractual principles of good faith and fair dealing.

Google has more money than God. The way people on Twitter have gotten bannings reversed is via public shaming. If you want to help, send this post to sites or writers that might be interested, such as free speech advocates or tech experts that would be alarmed about this severity of algo fail, or feel they are vulnerable.

I would like to start a campaign to publicly shame any lawyer who works for big tech. We are trained in law school to advocate for our clients. But advocating for Big Tech is no different than being a consigliere for a Mob Boss. In some law schools, students are generating heat over their schools sending graduates to work for firms that support the genocide in Gaza.

We need a similar campaign here so that young lawyers equate working for Google or Amazon with working for a firm that supports apartheid or Israeli genocide.

“But advocating for Big Tech is no different than being a consigliere for a Mob Boss.”

The same can be said for not just Big Tech but for any monopolistic corporate entity.

I suggest sending a notice to Cory Doctorow. He will probably take your message more seriously than from a random NC commentator.

I am horrified to read this. Like the first commentator, I think you are being censored, not necessarily for the content that has been flagged, but for other content that is of high quality, that is widely read, that undermines the narratives of our new elites, and that consequently threatens their politics and the MSM. It would be to GuggLL’s advantage not to offer legible or rational reasons for demonetizing, precisely in order to keep you from effectively either protesting or using moderation to get out of trouble. Think of AI-generated communication as a kind of white noise whose purpose is to drown out any structure of truth or actionable communication that could be cited in a protest or legal communication. AI is a white noise generator that our evil new masters can turn up or down to drown out many conversations, and I think that is going to be its major use going forward. You are just an early victim.

I noticed two things on the blog shortly before your first story on demonetization appeared. First, you reported a story on Mike Benz, whom the intelligence types truly hate. Second, a small ad for A*I*P*A*C appeared on my screen on your site; this seemed odd and out of place to me, and I wondered idly if it was for some kind of tracking purpose.

I’m sure you know there are other ad companies. But I am not a technical wizard and I cannot imagine how much work it would be to change over, or what costs such a change would impose. G should be legally vulnerable for this sort of thing, but they are too big to be sued effectively, which they know, of course. And law, in America, is at its end anyway, except as a form of entertainment. (Emoticon: frowny face, machine gun, pool of blood)

The truth is there are no other ad companies. See the statement from the recovering ad expert, how Google completely dominates the ad space. Even though our readership has extremely attractive demographics, we are so small we are bundled with other sites who are seen as similar to sell the few premium (non Google AdSense) ads that we carry. There are only two ad services in this space. Both use Google AdSense to fill “remnant” ads.

Probably being very naive here but if there are no other ad companies and Google has a monopoly here, then cannot the Sherman Antitrust Act be used against Google and force them to make some actual changes? Then again, the government being best buds with Google who has the power to demonetize any people that the government does not like is not a relationship to be lightly thrown away.

On an ideal, frictionless, non-rotating earth, the FTC would get involved here. Lina Khan has shown that she takes anti-trust seriously, winning several high-profile antitrust cases such as the JetBlue-Spirit proposed merger (enjoined and now DoA) and breaking up the American Airlines-JetBlue NE alliance (ruled as harmful and anticompetitive.)

One woman and one agency can only fight so many battles. And I fear for her should Trump win and turf out any agency heads that aren’t captured stooges of industry (here’s looking at ya, Mayor Pete.)

I don’t know if the FTC has a process for submitting complaints, but it wouldn’t hurt to submit one on Google.

Pretty sure regulatory capture is a bipartisan passion. Have a look at this Statement of the Federal Trade Commission Regarding Google’s Search Practices

In the Matter of Google Inc.

FTC File Number 111-0163

January 3, 2013

Thanks, that was during the Obama administration. A reminder that all that “hope and change” turned out more like the Who song: “meet the new boss, same as the old boss.”

BTW I would like to reiterate that Lina Khan is doing great things in swimming upstream against the currents. She’s got the chops; must be making a lot of enemies, though. May God protect her and let’s celebrate her during Women’s History month.

Agreed on Khan, not sure how she’s (politically) survived so far.

My best guess is that she was nominated by Josef Biden as a bone to throw to the left. His handlers probably thought by containing her to the FTC she would not make much headway, but she has surprised them by punching above her weight.

Karl Denninger has made the point that the Sherman Act includes criminal penalties for violations. It is one thing to take on the airline industry, but the big tech companies have billions, and going after them will require jailing executives like Andy Jassy.

That means to truly be effective, we need a Teddy Roosevelt who will sic not just the FTC but the DoJ on these wrongdoers. Nothing sends a message like a CEO behind bars. Fines are a joke.

Here’s hoping. Not holding my breath.

Some great posts thanks!

Really, now. Couldn’t you show a little gratitude to the man who took on predatory music teachers?

When I read articles like this I’m left with the uncomfortable feeling that I’m actually living in a foreign country ruled by algorithms far away from the nostalgic promises on which democracies are founded. Do no harm? Who are they kidding?

The model screens for likeness. No ML models understand the content even if they fake it. What Google is doing under the guise of “we can’t read every article” is feeding the model a bunch of content they don’t like and telling it to find similar content without understanding the content itself.

This is a massive advantage because they can censor (through threats and acts of demonetization) sites who break the rules AND sites that don’t break the rules but aren’t using the internet in a way they think it should be.

It’s like when merchants like Visa or Mastercard freeze your payments because something looks fishy even if you’re doing nothing wrong. This enforces that you use their payment networks the way they want you to – because your account will freeze because it looks like fraud or criminal activity if you do anything else. People wonder “will this look like fraud?” not “is this fraud?”

This is what google is doing. Use the internet the way we think you should or you’ll set off an algorithm and it’ll be a huge headache for you.

Of course you’re not doing anything wrong, you just don’t want it to look like you are and have the algo pick it up so we’ll change these words. Self-censorship by a million cuts.

The high caliber of the articles, links, water coolers, and comments on this website are as a beacon that sheds light on a world that is in many ways very dark. It is therefore no surprise that this sight (pun intended) attracts the attention of Google AI. Nor would it be a surprise if there were human hands behind the AI facade. Carry on NC…thank you!

Not really a surprise, as Google has always been too clever for our good with algos.

After all, its initial claim to fame was using links as metadata for ranking the pages it crawled. The more unsavory side of the web quickly latched onto this with link farms that went round in endless loops while wrapping them in ads.

And things have only gotten worse since they acquired YouTube and went on to create a single login across their services. The end result is that one too many incendiary comments on YouTube and your decade-old Gmail account goes down the drain. And your only recourse is to hope you can make enough of a social media stink to get some human to intervene.

And now that certain groups have discovered that targeting the money flow is a far more reliable tool than getting judges involved, expect to see far more of this going forward.

Reminds me of the movie “Brazil”: this technology is clunky and unimpressive but has insidious outcomes that seem to be a feature and not a bug. Public shaming is probably better than a postmortem analysis of why this happened. Does anyone have a line to someone like Matt Taibbi? Seems like something he’d cover on his beat.

I have a creative legal argument in my head that AI as used by airlines to crapify the customer experience, or perhaps here in mislabeling user generated content, could be sued as a “private nuisance.”

GA code reads:

Missing your flight because of a chatbot powered by shoddy AI sounds like a clear case of inconvenience to a reasonable person. So does having to find new revenue to replace Google ads.

I anticipate that our Tech Lords’ next move, especially if RFK Jr. and the state of Missouri win in the SCOTUS case on censorship by Big Tech, will be to put AI in place to do the censorship and plead, “It wasn’t me who violated Americans’ civil rights, it was that buggy AI!”

Air Canada already tried this in Canada and lost the case. But that won’t stop Big Tech from trying.

Having a US Court declare AI a nuisance would allow an injunction against the nuisance as one remedy, along with damages (lost ad revenue to this site, for example.)

A couple quick observations.

As articles about advertiser concerns attest, their big worry is an ad appearing in visual proximity to content at odds with the brand image or intended messaging. I for one would think that almighty AI could prevent this pretty easily. Maybe my opinion of AI’s abilities is inflated.

For the second time I’m going to repost this link from Links a week ago Sunday: THE WEAPONIZATION OF THE NATIONAL SCIENCE FOUNDATION: HOW NSF IS FUNDING THE DEVELOPMENT OF AUTOMATED TOOLS TO CENSOR ONLINE SPEECH “AT SCALE” AND TRYING TO COVER UP ITS ACTIONS, Interim Staff Report of the Committee on the Judiciary and the Select Subcommittee on the Weaponization of the Federal Government, U.S. House of Representatives.

Turns out this is worth a careful read.

I wonder if we as a group were to click on the ads more maybe that might pacify the google gods.

I actually stopped clicking ads a while ago and donated more on the assumption that this kind of attack would come sooner or later.

Wouldn’t your money and effort be better put to something else, such as advertising NC itself, or distributing selected articles and links via Twitter?

Not saying buy anything. The appearance of traffic might be something.

To put these pieces together creatively, would it be possible to buy advertising on the site? Is it possible to request where your ads are served with Google or another ad service? If it is, perhaps one could set up an NC foundation where patrons buy ad space and NC collects the revenue, but the grassroots purchasing ensures the site is never red-flagged by the algorithm? Or NC could simply use some donations to buy off Google with ad purchases, like the mobster it is….

I use an ad blocker. Does Google know that my ad is being blocked on NC? If so, do they penalize NC for ad blockers monetarily?

Careful with that, since an unusual pattern of ad clicks can also end up getting the site “demonetized”. They look for this stuff as evidence of click-farming, a way to defraud internet advertisers. It’s the old heads they win, tails you lose.

This hits close to home. As one of the contributors of sardonic parodies, it is chilling to see a fellow commenter’s fair-use parody singled out by an algorithm. I have a suspicion that if AI was used to identify that as objectionable, then half the stuff I put out there could be singled out. AI is clearly unable to distinguish a sarcastic tone. It may have malfunctioned by interpreting Antifa’s line “we shoot kids to ease our pain” in his brilliant parody as being a literal endorsement of homicide.

I worry that what the government cannot do through direct action due to the 1st amendment, it is attempting via the back door. A corporation that grows as dominant and powerful as Google falls to the temptation to get a substantial amount of guaranteed revenue through anti-competitive tactics. Some of these tactics rely on becoming dependent on Government, like lobbying for laws that put up barriers to entry to new market entrants, and taking on an increasing amount of business that depends on government spending and grants.

In the case of pre-Musk Twitter, we know that Twitter (now X) literally hired agents from the FBI and DoJ to work there. These folks had no resume to suggest they would be technology hires. They were probably hired to identify speech that the government did not like, and censor it, similar to how Google is doing the same to this site.

The key difference here is that NC’s use of Google ads is voluntary, and as Yves pointed out their User Agreement probably contains a clause giving them the right to kick anyone they don’t like off their ad platform. And we don’t know if Google has anyone on staff who also works for the DoJ or FBI. But it wouldn’t surprise me.

Keep an eye on this case:

https://childrenshealthdefense.org/wp-content/uploads/2.14.2024-Memorandum-Ruling-on-PI.pdf

Kennedy v. Biden

From the Judge’s ruling (this was not covered well by the MSM):

IT IS ORDERED that the Motion for Preliminary Injunction [Doc. No. 6] filed by the Kennedy Plaintiffs is GRANTED IN PART AND DENIED IN PART.

IT IS FURTHER ORDERED that the White House Defendants, Surgeon General Defendants, CDC Defendants, FBI Defendants, and CISA Defendants, and their employees and agents, shall take no actions, formal or informal, directly or indirectly, to coerce or significantly encourage social-media companies to remove, delete, suppress or reduce, including through altering their algorithms, posted social-media content containing protected free speech. That includes, but is not limited to, compelling the platforms to act, such as by intimating that some form of punishment will follow a failure to comply with any request, or supervising, directing, or otherwise meaningfully controlling the social-media companies’ decision making process.

We should all be praying that the SCOTUS rules in favor of Kennedy, and that this injunction goes into full force (it is currently stayed pending the outcome of the case before the SCOTUS.) That will slow down some of the censorship, but won’t necessarily help NC.

As someone taking on law school later in life, I would be happy to assist in any way with a case against Google, doing legal research or anything that doesn’t require an actual law license.

We need more people like you, keep up the good work!

re: “AI is clearly unable to distinguish a sarcastic tone. It may have malfunctioned ….”

My opinion is that in these situations AI is clearly working as intended. There is no bright line for terms of service violations, there is no bright line for AI to get right or wrong. Instead, these “oopsies” (which they are not) are intended to sow confusion in the public’s mind about what is and is not allowable, what is and is not truthful or correct, to keep the public in a general state of confusion and unable to push back against this overreach, turning solid ground into sand. Mistakes are not made by AI chatbots and the like. This is the whole point of their creation and use. / my 2 cents.

It’s a very, very old trick. Adding, from Genesis 11, remembering the word Lord has 2 meanings. / ;)

5 And the LORD came down to see the city and the tower, which the children of men builded.

6 And the LORD said, Behold, the people is one, and they have all one language; and this they begin to do: and now nothing will be restrained from them, which they have imagined to do.

7 Go to, let us go down, and there confound their language, that they may not understand one another’s speech.

8 So the LORD scattered them abroad from thence upon the face of all the earth: and they left off to build the city.

https://www.kingjamesbibleonline.org/tower-of-babel_bible/

So, if I understand correctly, AI confusion is a feature, not a bug.

Ergo, plausible deniability is achieved. Cue Big Tech playing the role of Bart Simpson … I didn’t do that!

You may be correct.

From a legal standpoint, though, that may not fly in a courtroom. The law varies as to whether an actor’s “intent” is determinative of guilt, or in civil tort cases, liability.

Unintentional torts, such as negligence and nuisance, don’t require action. Inaction may be enough. That’s why I am hopeful that some clever lawyers can attack AI as a “public nuisance.”

What targeted dollar figure do you need to reach to “cancel” google?

Perhaps a “cancel google” fund raising party is in order.

Perhaps a “cancel google” fund raising party could be a new theme for those sites that have the audacity to use language as intended.

I also think this is the early warning shot across the bow for the supposed election in November.

Again, if you had a “cancel google” fund raising party what might the target be? Inquiring minds need to know.

PropOrNot put the kibosh on you years ago. Now the Star Chamber goes into action, and there is little you can do about it. You can analyze; be reasonable; raise bloody hell. You have been stigmatized by the higher power of Artificial Intelligence and algorithm. And you have recourse to who exactly? The future…we are well and truly screwed. Maybe the survivalists have been on to something all these years.

Yeah, but at least informed people have made “PropOrNot” the butt of jokes. Other “fact checkers” like Snopes seem to be run out of Langley as well. Pretty much anything to do with Russia, China, Israel/Palestine and international affairs/foreign policy shows a clear bias. (in favor of US policy of course)

No, we beat back PropOrNot. That was just a bunch of probable UkroNazis (not making that up, some had screenshot their older Twitter posts) who got a CIA asset who had been pushed off the intel/security beat at the Post to tech, and then got the piece published on the Wed before Thanksgiving when there were no grownups in charge to stop him. I was told there was an ENORMOUS row at the Post, those in his old department were furious that he’d gone over their head and were demanding he be fired.

Also Columbia Journalism Review and others severely criticized the Post for that.

And that was 2016.

Yes, you beat them back, but NC, along with scores of others, was identified as an enemy of all “right thinking people.” This kind of slander sticks. For a profile of how all “right thinking people” think, check out the back page of today’s FT. There is an eighth-of-a-page advert by the notorious CIA catspaw Bellingcat with a clarion call to the virtuous everywhere on exactly how to think right.

Those algorithms…

With reports like this, my mind often drifts to how the algorithms have crappified job searching and all the implications left to come from these algorithms being tied to something like a job search.

Brilliant case-by-case takedown of the claims against the site, which were clearly due to the fact that it is a serious outlet that does not frame issues in terms of received wisdom, which is also why it has the readership it does. I’m sorry the (probably inevitable) censorship has come for you, but they don’t know who they’re dealing with.

I am new to the site and donate, as it does a fantastic job. It’s nice to ‘walk amongst’ some of the great people who comment on here! You are all so much smarter than the UnHerd mob! :-) (Genuine, btw, not sycophantic.)

This is so wildly Kafkaesque, the mind reels. I have no doubt this is a symptom of the aforementioned government collusion with Big Tech for big censorship.

Just look at how a supposedly liberal (progressive?) Supreme Court justice expressed their views on the new First Amendment case:

Our beloved beacon of rational thinking and analysis has been smeared in an environment of threatening circumstances. Seemed only a matter of time?

That’s the Supreme Corp for ya!

The sentence has already been decided upon, but first they must find the crime.

Wal, I’ll be danged — it’s always Korematsu vs. the U.S.* time, isn’t it?

Your supposedly iron-clad guaranteed rights turn out to be null and void if the government sees in you a risk or harm — which, alas, is precisely the moment when you need that guarantee. So the supposed iron-cladness is made meaningless.

* FDR, national savior and Democrats’ hero, sent natural-born citizens to concentration camps on the basis of their racial ancestry alone, and despite the Constitution and the Bill of Rights, the Supreme Court ruled 6–3 that that was A-OK.

No doubt the list of violations was machine-generated, but I’m of the camp that this “finding” was human-directed, especially as the Western censorship bug is seemingly now as contagious as covid.

It will be interesting to see how goo-gull responds… IF they respond.

I’ve read two articles already that claim AI, in whatever application, has a suicide preference: instead of creating finer definitions, it does the opposite and eliminates distinctions so everything is easier to classify. Considering how vacuous the above complaints are in terms of maintaining decorum (ha!), I’d say this is a good example. Gotta wonder what Google would do if it got into a real food fight. Fling some puréed carrots?

Are there any efforts to legislatively force Google to reveal its methods? The public ought to be able to analyze the algorithm it’s using.

How many people at Google oversea the algorithm? Is it something that is controlled by a small unit in Google, and who are they accountable to in the company? The algorithm almost certainly is programmed with the same biases as its creators.

Final question: is anyone out there trying to “crowdsource” (if that’s the right term) data on how the algorithm works, in order to get a more accurate sense of its targets? While it is obvious that Google has targeted perfectly legitimate posts on NC, it seems to me that these particular posts were not unique. I would love to know why it chose these and not others on NC.

I don’t believe Google should be allowed to keep this hidden. Google has become too important to communication: these algorithms must be made public.

“How many people at Google oversea the algorithm?”

I would say zero. AI oversees the algorithms. Algos are much too complicated, at Google’s level, to be overseen by a human. And AI oversight would explain the scattershot choice of offending posts. Just speculating, but it seems logical. If there are Googlers present, please reply with a yea or nay.

I meant to write oversee, of course, but I also meant oversee the development and programming of the algorithm: the programmers who choose the data it’s trained on, the training method, etc. I agree that once the algorithm is out there “doing its thing,” there is probably very little human oversight, although you’d hope they would use humans to at least supervise samples of the results. I’m very, very curious to see if NC gets some kind of response from Google.

Could someone from Google leak the algorithm to the public?

As I understand the technology (and I am open to being corrected), the strength and weakness of AI (or “spicy autocomplete,” as I have also seen it referred to) is that the algorithm doesn’t need to be written; instead, the AI creates the algorithm in a black box during training to match the correlations between, for example, certain pictures and certain words. Strength because a large training set demands less labour than writing out all the correlations. Weakness because the correlations are going to have blind spots.

So I don’t think there is an algorithm to leak. But on the other hand there are files (it’s a bureaucracy) with earlier botched correlations which caused enough of a stink to be escalated to a human. If someone would leak those, that would be great.

Well . . . I know very little. I read Weapons of Math Destruction by Cathy O’Neil and did one of those edX online courses on machine learning. I was probably being a little confusing above.

Presumably Google staff can and did write a program that uses a set of criteria to flag problematic content. The criteria could be chosen directly by humans based on their ideas about what should be flagged (e.g. if you see “Mark Ames,” flag the post (I’m joking about this example)), or they could use machine learning/AI to create categories (maybe supervised by humans) that should be flagged; most likely it is some combination of both.

I guess I’m wondering if the procedures Google uses will ever be made public, so yeah, whatever human decision making went into this is what needs to be revealed.

Google is well known for trying to do all its customer service by algorithm and making it very difficult to get to an actual person who can fix the problem (because people cost money).

In addition, Google recently got some bad press for distributing ads to inappropriate places, e.g.

https://www.msn.com/en-us/news/technology/google-unveils-major-search-partner-network-update-as-it-removes-opt-out-option/ar-BB1iZ5kx

There is a link to the Adalytics article at the bottom of the MSN article.

My suspicion is that Google turned up the dial on the filtering algorithm to 11, to limit bad press, and the people who are supposed to be handling exceptions are overwhelmed.

I bet there are a lot of sites getting caught in the crossfire. Most of them will just delete whatever because Google AdSense is by far their best option for monetization. Google is like the phone company in the Lily Tomlin sketch: they don’t care, they don’t have to.

And yes, this would be a good situation for Lina Khan to look into.

>We have asked our ad service to ask Google to designate the particular section(s) of text that they deemed to be problematic, since they have provided that information to other publishers. We have not gotten a reply.

Be prepared for what Taibbi described in https://www.racket.news/p/a-case-of-intellectual-capture-on

I sent a link to this article to the FTC, suggesting that looking into Google AdSense’s monopoly power came under their purview.

Our betters know we the plebs need to be taught how to behave. That’s why the algos rule everything, all social media (maybe except for X now) etc. Everything is hate speech. There’s no free speech anymore. It’s boring, it’s childish, it’s pathetic. The option is to ditch it.

Is it the AI or is it Google’s shabby training and data analysis?

AI is potentially a useful approach to automating a wide variety of tasks. A responsible approach to adopting this technology and using it effectively requires a transparent and traceable approach to building AI systems, one that defines and describes the underlying algorithms and ‘programming contracts’ for the end users of the AI system.

NIST (the National Institute of Standards and Technology, the measurement standards laboratory in the United States) defines four principles of explainable artificial intelligence.

Explainable AI: What is it? How does it work? And what role does data play?

1. Explanation. An AI system should supply “evidence, support, or reasoning for each output.”

2. Meaningful. An AI system should provide explanations that its users can understand.

3. Explanation accuracy. An explanation should accurately reflect the process the AI system used to arrive at the output.

4. Knowledge limits. An AI system should operate only under the conditions it was designed for and not provide output when it lacks sufficient confidence in the result.

According to the Royal Society’s policy briefing “Explainable AI: the basics”:

“There has, for some time, been growing discussion in research and policy communities about the extent to which individuals developing AI, or subject to an AI-enabled decision, are able to understand how AI works, and why a particular decision was reached. These discussions were brought into sharp relief following adoption of the European General Data Protection Regulation, which prompted debate about whether or not individuals had a ‘right to an explanation’.”

The ‘black box’ in policy and research debates

“Some of today’s AI tools are able to produce highly-accurate results, but are also highly complex if not outright opaque, rendering their workings difficult to interpret. These so-called ‘black box’ models can be too complicated for even expert users to fully understand.”

Perhaps Google should behave responsibly and provide an explanation.

What about a class action lawsuit? Are there enough other sites being affected by this (from the footnotes, it seems not)? Could the readers and contributors be parties to it? Sorry, I’m not a lawyer, and this is probably unhelpful.

https://vdocuments.mx/computers-dont-argue.html?page=10#google_vignette

Which, as you see, I got ironically by using Google.

A spookily prescient story by Gordon R. Dickson.

I don’t know why I bother to post here. Nevertheless

Consider this as preparation for KOSA implementation. Analyzing sites like NC gives google engineering practice at moderating at scale. They can resell such technology when KOSA becomes law.

Such implementation will be an iterative process as First Amendment concerns provide pushback, but something like it will happen. Disintermediation will limits imposed

This is really bad. And here I was wondering why my tiny three-page company website got threats of suspension from Google for “non-compliance with cookie policy” three weeks ago, although there is nothing more than HTML and CSS on the site. The site is not spying on users. Google is. The site sets no cookies, and that is stated in its “Conditions.” But the demonetisation threat to news websites is way more scary.

Techno-Totalitarian-Tyranny: not just Google, but even Celebrity-Oligarch Elon Musk and Co. censor journalists on his Twitter/X. Being an oligarch who claims to support “free speech” is not only hypocrisy, it’s oxymoronic.

https://thegrayzone.com/2024/03/19/banned-elons-free-speech-x-app/

I’ve always presumed Musk is controlled opposition.

I am an old analog refugee in this new digital world. So I don’t understand the digi-technological issues involved.

But I trust my intuitive sense of smell for conspiratorial combines of bad actors. I agree with the commenters who say that some humans-in-command want googol to demonetize this blog in hopes of shutting it down through borderline lack-of-money attrition. And shutting it down with more blunt force bluntly applied if their first approach doesn’t work.

“AI” and “algorithms” are used as the shroud of plausible deniability cover for bad actor persons driving these decisions. I sympathise with the views expressed above that someone ordered a hit on Naked Capitalism and said to do it through the “AI” or the “algorithm” to make it look like the “AI” or the “algorithm” did it all on its own.

Lavrenti Beria once said: “Show me the man and I will show you the crime.”

https://www.oxfordeagle.com/2018/05/09/show-me-the-man-and-ill-show-you-the-crime/

An art blogger by the name of Christa Zaat feels your pain. She posts art images with citations, organized by category, artist, and movement, and has two or three different Facebook pages devoted to them: one under her own name, and another called Female Artists in History. Christa has posted these images for years and sometimes reposts them. She does fantastic work showing the work of artists who would otherwise have been forgotten.

After ten years of blissfully filling her pages with art, Christa has, over the last several months, been flagged over and over for “violent and disturbing images,” and when you click on the image there is absolutely nothing violent or disturbing about it. She’s gone over and over through whatever process Facebook allegedly has, only to be told (assuming she’s even writing or talking to a human) that there is nothing they can do. The blame keeps being shifted to the algorithm. She’s been flagged over 50 times at this point and is in fear of losing her pages and all of her work. Christa has documented everything regarding the flagging and Facebook’s frustratingly useless responses.