A compact yet comprehensive discussion of the present state and future prospects of AI technologies applied to armed conflict is an impossible mission, but I will undertake it here. What gives me encouragement is the great difficulty of prognostication regarding AI. I believe AI will be the most profound transformation of human affairs in the history of civilization, and nobody has a good grasp of where this is going, so the deficiencies of my predictive efforts may not stand out among the shortcomings of others.

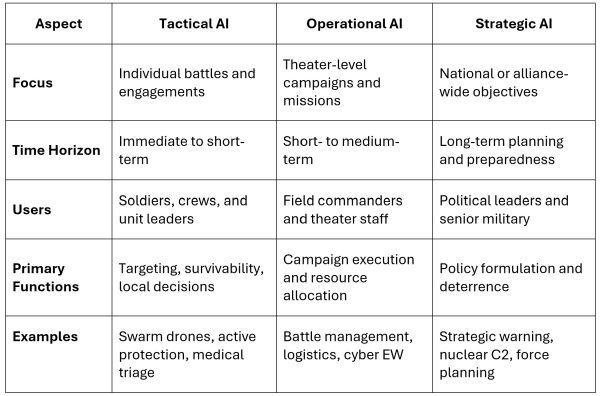

Warfare has a structural hierarchy that recapitulates its evolution. Early hand-to-hand combat and tribal battles were entirely tactical. As tribes aggregated into forces resembling armies, operational logistics and strategies became necessary, and when nation states formed enormous armies, navies, and air forces, grand strategies developed. Technological advances have generally permeated all levels of the art of war. Cavalry, gunpowder, high explosives, steel ships, mechanized armor and transport, aviation, and computerization have all affected tactical, operational, and strategic levels of warfare. I will discuss AI in terms of its role in each of these levels of armed conflict.

Tactical AI

Starting at the bottom of the art of war hierarchy, consider a soldier with a rifle. It currently takes four to eight weeks for the military to train a highly proficient marksman. Such a soldier, a squad designated marksman, can hit targets effectively at 600 yards. Today, rudimentary AI-integrated electronics in a smart rifle sight can give almost anyone an even higher degree of shooting accuracy. This equipment automatically adjusts aim for wind, temperature, atmospheric pressure, rifle position, target motion, and range, and it does so consistently, even in the heat of battle.

Developments like smart rifle sights foreshadow the extinction of soldiers on the battlefield. The same high accuracy will be available to any aerial or terrestrial drone armed with an automatic rifle. Ultimately, human soldiers will not be able to survive on future battlefields where smarter, faster, and deadlier robotic combatants are deployed.

Another tactical weapon prominent on today’s battlefields is the guided combat drone. Although most attack drones are directed over a video link by a drone operator, AI technology is enabling autonomous operation for advanced drones capable of loitering over the battlefield and identifying and striking targets with minimal human guidance. Moreover, the concept of AI-enabled intelligent drone swarms is under development by multiple militaries, and this will add a new dimension of lethality to drone weaponry.

Operational AI

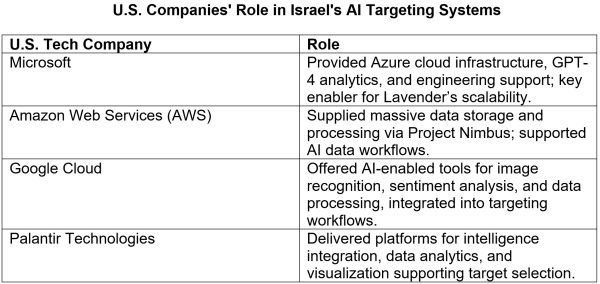

At the operational level, military advantage is attained by the large-scale direction of resources through superior information and communication. This pertains to areas such as logistics, handling of casualties, and detection and targeting of enemy forces. AI technology can confer advantages across all operational domains. A chilling example of such AI utilization is the Israeli “Lavender” system used to direct strikes against Hamas in the current war in Gaza.

U.S. technology companies, such as Palantir Technologies, have come under increasing criticism for supplying components of military AI systems to foreign governments, particularly Israel. U.S. citizens are concerned that these systems are or will be used domestically for repressive purposes.

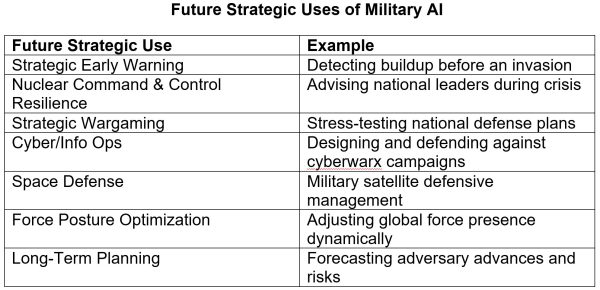

Strategic AI

At the highest levels of strategic military planning, AI can potentially contribute significantly to the quality of decision making. With its ability to rapidly integrate vast amounts of information across many domains, AI decision support systems can provide leaders with clearer estimates of strategic threats, risks, and opportunities than ever before. However, if AI facilities are granted autonomy to make strategic decisions, there is a grave danger of undesired conflict escalation resulting from undiscovered flaws in the AI programming.

The development of safeguards for strategic military AI systems is hindered by the wall of secrecy behind which national defense projects are created. Independent review mechanisms for such projects should be created to allow oversight of classified systems, particularly those related to cyberwarfare and nuclear weapons.

Escalation and the Dangerous Future of Military AI

Contemporary military theory puts a high premium on speed of execution of what is called the OODA loop (Observe, Orient, Decide, Act). An adversary that can execute this loop faster has a potent advantage over the slower opponent. Because AIs can accelerate all aspects of military decision making, there is a powerful evolutionary pressure to grant AI systems increasing autonomy for fear of losing a decision loop race against an enemy. This creates the potential for very rapid escalation of an armed conflict between opposing AIs. If unchecked, such escalation could culminate in a global nuclear war.

AI Ensembles

Perhaps the greatest source of uncertainty regarding future AI developments is the evolution of ensembles of collaborative AI systems. Just as military effectiveness is enhanced by close cooperation among manned units, the future architecture of military AI facilities will benefit from close integration of multiple AI systems. The compounding effects of anomalies in the interacting systems could result in emergent failure modes undetectable in unit testing. This is a disturbing possibility in systems associated with weaponry. A related danger is the potential enabling of self-modifying capabilities in the AIs, which would enable learning-based enhancement of functionality, but with unpredictably hazardous consequences. The extraordinary speed and power of AI ensembles would be a double-edged sword that could result in serious unrecoverable errors, such as friendly fire incidents or false threat detection triggering counter-strikes.

Conclusion

There is an AI arms race underway spanning all aspects of armed conflict. Like the nuclear arms race, it is dangerously unstable. If AI military systems are granted increasing autonomy, there is a possibility of rapid conflict escalation that could be catastrophic. For decades the world has been bedeviled by unexpected flaws and bugs in relatively simple software. The vastly greater complexity of AI systems and the likely future interaction among multiple AI entities substantially increase the risk of unknown operational failure modes. Of particular concern is the potential for AIs to modify their own programming and implement capabilities unknown to their human proprietors. Political leaders and policy makers should work to develop international protocols and treaties implementing safeguards to prevent runaway AI technology from launching enormously destructive wars. Never has human intelligence been more necessary to guide its artificial counterparts.

The scary thing about all this is that you have half-smart politicos hiring wildly overconfident tech bros to implement systems no one really understands.

It’s not like anything could go wrong 😏

My first thought is actually, if you ultimately remove soldiers from the battlefield, what then? We have zero casualty conflicts?

It seems more likely instead that targeting your opponent’s civilian population centers becomes even more central. There you’ll find the means of production, and those doing the production. I don’t think you can divorce killing from war.

There are so many possible avenues to all of this. Sadly an end to violent conflict is likely not one of them.

“This creates the potential for very rapid escalation of an armed conflict between opposing AIs. If unchecked, such escalation could culminate in a global nuclear war.”

Years ago, I read a list compiled by Google of various ways experiments with AI systems based on neural networks went awry.

A number of these trials dealt with multi-player games of the EVE kind — with AI systems configured and trained to be participants in complex simulated wars. Not only did the AI players cheat liberally, some also inferred that since the objective was to prevent adversaries from winning, then the best option was to go nuclear right away and blow up everything (including self)…

A Taste of Armageddon.

Maybe. But the military has always over-promised what their latest wunderwaffen could do. I remember during the Vietnam War when the latest sensors were said to “make the jungle transparent.” The Vietnamese hung bags of urine in trees and the Americans bombed acres of empty jungle. More than forty years ago Reagan promised his “star wars” anti-missile system would stop ICBMs. I don’t think they ever had a successful, honest test. I’m always skeptical of claims made for new weapon systems, particularly when the claims are made at appropriations time.

I keep seeing that phrase “what could go wrong?” or some variation of it in comments skeptical of AI, and I think it will be similar to the last tech boondoggle (self-driving cars): less than we expect, since it doesn’t actually work and is 99% marketing.

Not that we shouldn’t be concerned & wary of it, but it’s just the latest “e-shit” from an industry running on fumes, trying desperately to squeeze $$$ out of an increasingly dry American economy.

Do not discount the factor of quantity, which, as we all know, has a quality all its own.

Perhaps a few isolated AI systems — which as you surmise may well not be as effective as advertised, or even not work properly at all — will not cause much trouble. Put many of them in action simultaneously, and the result might be sheer destructive chaos.

Relevant example, since you mention self-driving cars: they fall short of what is expected from all the hype, but individually their failures always had a limited impact. However, in such cities as Austin or San Francisco, where driverless shuttle services have been put in operation on a significant scale, those defective AI-based capabilities have already generated unexpected, massive traffic jams.

And then make them with explosive warheads…

mostly grift is just about right, i expect.

but every once in a while, the miltech works more or less as advertised(drones upon weddings)…just not against peer great powers.

but they’ll work well enough on uppity americans who don’t toe the line, i suppose.

i have long considered…when driving around the pasture in the Falcon (dead for a year now, lack of $)…the need for something akin to giant shotguns pointed up all around my place.

don’t know how to do that (i’m not really a gun guy, but can hit a rabbit at 100 yards)

alternative is painting a giant red cross on the roofs of my various structures.

and…in local news…this is supposedly the last day of rain for me…we’ll see how it shakes out,lol.

it’s rained every day but one since july 3rd.

and it’s beginning to harsh my mellow.

i miss 12% humidity in july, dernit.

“alternative is painting a giant red cross on the roofs of my various structures.”

Knowing how Israelis specifically target Palestinian hospitals, red-cross convoys, and medical professionals; that Saudis attacked medical facilities over 130 times in Yemen, including the destruction of an entire MSF hospital; that Ukrainians repeatedly used medical buildings to house soldiers; and that Israelis put a military command centre under a hospital, it seems to me that marking something with a red cross is increasingly becoming equivalent to adorning it with a bullseye.

GIGO.

Same as it ever was.

Although I’m anything but an authority on Sun Tzu, I’m with Mikel on this one. This-gen AI is uncannily good at predicting natural phenomena like protein folds, but there’s nothing in nature so fickle as human nature. Know thine enemy?? Good luck with that one.

It’s more likely military AI will end in a great Kesslef**k while we the people starve in a derailed ecosystem.

You’d have to extrapolate superlinearly from the current state of AI to get to the future of warfare hinted at here. We are perched at the top of the Gartner AI hype cycle and are told, rather breathlessly, that consumer AI applications are going to transform the way we live, that enterprise-grade AI applications are going to change the way we work (and eventually replace us entirely) while supercharging corporate productivity and profits, that a step change in scientific breakthroughs is all but certain with AI, and now that military supremacy is also going to be dictated by AI. One would have to be hyperbullish on AI to believe that all these things are true and not see that this “AI powering everything” thesis is undermined by the simple fact that the critical inputs to AI (training data, compute, and algorithms) are finite. This is before we get to the very real constraint of insufficient electricity generation capacity, imposed by the sobering reality that many countries, including the so-called “advanced” ones, are running 21st century economies on top of creaking 20th century grids. Electricity isn’t something you spin up on the fly the way you would additional servers with cloud auto-scaling, but this hardly ever gets mentioned when laying out the bull case for AI. Bitcoin is hitting record highs, so you can be sure those miners are also chomping at the bit to put even more strain on national grids. If, on the other hand, any of this does come to pass, the real atomic unit of competitiveness, the thing that will dictate economic and military supremacy, will be electricity generation.

“I believe AI will be the most profound transformation of human affairs in the history of civilization”

Indeed. And shockingly – at least in public – this gets zero consideration.

In the entertainment business, which may be closer to home for most of us than weapons and which will be the first victim of AI, you get the impression that people fear looking the beast in the eye, knowing they have no solution prepared.

So let the flood come instead and then we’ll see who survives.

To be honest, I do not like living in “interesting times.”

p.s. Most likely some in entertainment will try to find feeding troughs in the MIC where some elements of entertainment media are already rooted. It will be a pretty fucked up planet.

Quoting a comment on this subject here at NC from somebody working in the entertainment biz:

Indeed.

Thanks.

But I don’t think so.

Any.

More.

“This is the final report of the Nostromo.”

I talked to a lawyer pal. She doesn’t believe in what I too suggested: draconian laws. Instead, she said that those who own libraries of IP will focus on protecting them. She claims one reason Netflix is producing so much content at the moment is to increase the size and value of its future libraries.

So that part is currently under negotiation in the legal sphere.

To set the rules of the future.

The rest is out in the wild.

So the question is, how would a collapse happen? I am only speculating now, but as soon as financiers pull out because the business won’t generate revenue, it will fall apart.

Let’s assume that in 20 years any one person with a then computer can create a decent Blockbuster. Then the concept of movies as paid mass entertainment, invented merely 150 years ago, will be gone, like the era of speculation in tulips.

What will be sustained, I guess, is a certain degree of national state-funded TV/film production on a rather limited level, until that too is phased out over a few decades.

So actors, directors, art directors, and all the other departments will find themselves back in an ancient era where performative art was a leisure-time activity. Nothing more.

Because everything that does not require live human interaction, on a stage for example, will be created virtually.

So providing energy or data storage will be more of a business model than “making actual movies”. A bit like McDonald’s, where the real value is not the burgers but the real estate.

Twenty-five years ago, talk of the end of film stock was mostly mocked in public. Ten years later, Kodak was bankrupt.

Fully automated industrial production will follow.

So I still stand by Haig’s memorable statement.

I am not happy to write this.

Well, I don’t see how that speaks to the point.

And meanwhile are Disney et al going to just stand by while their IP is ripped off?

No, of course they’re not:

Disney and Universal Sue A.I. Firm for Copyright Infringement

https://www.nytimes.com/2025/06/11/business/media/disney-universal-midjourney-ai.html

Granted, if we assume that “in 20 years any one person with a then computer can create a decent Blockbuster”, then of course the scenario you describe probably follows.

But this is a yuge assumption. It’s pretty much “assume a can opener”.

And the analogy to digital film stock doesn’t work at all. That is just about technology (i.e., film vs. digital), but now we are talking about art, i.e., the art of storytelling, the art of mise-en-scène, the art of montage, etc. Is AI going to suddenly become better at producing art than humans, and how many thousands of tries will it take to get actors with five fingers, etc.? Again: “assume a can opener”.

Remember The Blair Witch Project (1999), and how everybody was saying “this changes everything!”, except then nothing really changed? Where are all the successful indie films that followed The Blair Witch Project? Sure, there are a few films like Tangerine and maybe a half-dozen others shot using an iPhone, but a half-dozen or a dozen films, some shot by big-name directors like Steven Soderbergh, hardly amount to a revolution.

And even if the hypothetical “one person with a then computer can create a decent Blockbuster” pipe dream were to materialize in 20 years, would you be interested in going to see those cookie-cutter AI slop “blockbusters”?

I wouldn’t.

Just because I saw it this very moment: The Hollywood Reporter, with an (ahem) long read…

Haven’t been able to read it myself yet:

Rise of the Machines: Inside Hollywood’s AI Civil War

The technology is already transforming the industry — and could forever change the entertainment we consume. But the battle to contain it has just begun.

by Steven Zeitchik

July 16, 2025

https://www.hollywoodreporter.com/business/digital/ai-future-hollywood-creativity-1236315046/

Thanks for this. I read it quickly.

Kind of a curious article that careens between breathless techno-optimism and sober concern. Regarding the former:

Circumventing consent? Giving tech bros a seat in Hollywood to push “digital humans”?

What could go wrong?

But the article not only mentions the current lawsuits against AI but also explores many concerns within the industry about what will happen to the art and creativity of cinema if this tech is used at scale, e.g.:

That said, I’m not sure the author really understands the difference between LLMs and the kind of CGFX technology that is already being used extensively in studio film production. Already by the late 90s, it was estimated that 80% of studio feature films used some form of digital image manipulation. However, none of that would be considered “AI”, and the artistic decisions were always being made by humans.

Reading this, my sense is that for the people who are most serious about cinema, the jury is still out. This article points to a lot of exploration, testing, and VC money, but not really much in the way of commitment at the level of feature film production. We learn that Darren Aronofsky is exploring this tech, but also that he finds most of what he’s seeing is slop.

Not mentioned in this article, but I’ll throw this in: Albert Serra, director of Pacifiction (excellent film, BTW):

And this concern is also highlighted by the article: by definition, AI looks backward, consuming, cannibalizing and distilling existing images, so how will we get something new from that? The output of LLMs has an “unexpected” quality in large part due to their probabilistic and outright random behavior. The output is shaped by a random “seed” value, but I don’t see how randomness yields creativity any more than I am persuaded the infinite monkey theorem is a meaningful path to artistic creation (the theorem has recently been challenged, btw).

Finally, also no mention in this article of Ari Folman’s The Congress (2013), in which an actress gives consent to be digitized and signs a contract agreeing that the studio can use her digital clone “in any film they want”. It’s a very interesting meditation on what the world might look like when everybody can become a digital avatar of themselves.

No doubt there will be putatively “ethical-AI” elements in films that at the level of production are in fact closer to what we see in Alex Garland’s Ex Machina (2014), which is another deeply disturbing film about where all of this is going, especially when we’re talking about circumventing consent.

Thanks.

There was once this, Spielberg’s “AI” (a movie I never liked):

A.I.: Artificial Intelligence (2001) – Dinner Malfunction | Movieclips

2:44

https://www.youtube.com/watch?v=ofzIdn6PeSc

Since you mention it, ARTE, the public TV broadcaster for Germany and France, had “Pacifiction” available in its program recently (not any more, though). There is still a short interview with Serra. Outside Europe it won’t work, I guess: https://www.arte.tv/de/videos/108672-025-A/ein-gespraech-mit-albert-serra/

Directed my first feature in 1983. Still at it. Crowdfunding is the independent filmmaker’s friend. Distribution is another matter. We must hang together or we will hang alone.

Just finally saw your Walker. Really, really brilliant film; loved it, and I’ve seen lots. You were robbed of the career path you deserved; but, capitalism. Needn’t tell you. Staying in the game is a Fuck You to the money suits . . . you’ve stayed good at that I saw with a bit of research. Stay Red. Two thirds of the good new films I’ve seen in recent years at least were small independents. Distribution is the howling bitch on the path to glory, fer sure.

I’d love to see a film made on an alternative life path for Louise Brooks on her beating the system she hated and becoming the richest person in the world, a concept burning its way out of my brain for the past few years. Your approach would be the only way to ever do it. There are so many stories out there beyond the same curdled swill the corporate industry forces upon the minds of the public . . . .

“There are so many stories out there beyond the same curdled swill the corporate industry forces upon the minds of the public . . . .”

One of the biggest problems and hurdles in mainstream production is the inability and unwillingness to do those “many stories”.

It’s sickening to see that it’s instead the recycling over and over and over again of the same – fuck the term – “content”.

p.s. But getting something made à la “Pacifiction”, as Acacia above mentions, has obstacles of its very own kind: try navigating the Cannes circles, the funders of today’s art-movie scene, to get money…

A Parisian friend said very clearly that French filmmakers of that category are your Paris-based bourgeoisie. It’s not much different from the perverse elitism of the era of the French Restoration as described by Balzac…

So fiction filmmaking is in general a rather dark territory (which in my personal view makes MeToo an inherent part of the business as such. Abuse of any kind on all levels is inscribed into it.)

You’re one of my idols. I think most of your films are either in the Criterion Collection or on DVD and Blu-ray elsewhere, which at least means we can see them at home without having to pay the skim to all the tech bros in the streaming supply chain. And hopefully no one ever makes an AI clone of Joe Strummer!

Great to see you here in the comments.

The problem with letting an AI run things is knowing what it will decide on. So in a war it might decide to commit continuous massacres of a country’s civilian population in order to make that country stop fighting. You know, what Israel did with Hezbollah whom they could never defeat. The Geneva Convention? That does not compute. Look at Israel’s AI “Lavender” system. The point of it was not to kill Hamas members but to murder their wives and children as well as their neighbours for bonus points. But the IDF was only following – AI – orders. Didn’t work at Nuremberg and doesn’t work here.

Another point. Met this British soldier back in the 80s and he was telling me that all sorts of entrenching gear was being packed up at their base to be sent back to the UK and noted that that was a lot of their gear. It was soon found that, through a software error, ALL the British army’s entrenching equipment was being sent back to the UK, which would have left the British Army of the Rhine with zip. You have to have a human in the loop or else errors like this could easily be repeated.

“Didn’t work at Nuremberg and doesn’t work here.”

Same conversation with the lawyer friend I referred to above: as knowledgeable people here will confirm, AI is already being used to solve test cases as part of law studies.

And whether the AI system is right or not won’t actually matter, at least not to the powers that be.

It´s not that different from now: Israel is the biggest violator of international law since 1967. Did knowing this as a fact change the course of events? Will ethnic cleansing stop because we know it’s wrong?

As AI will be a tool of power it will serve to suppress resistance. The Orwellian argumentation made up now in Europe offers a meaningless glimpse into the most fascist transformation and instrumentalization of law we will have witnessed as a species so far.

Eventually I guess resistance will of course use AI too.

And probably guerilla warfare with drones – for instance.

I am not saying it’s all lost. But people in the West are not prepared to resist what will be happening under their noses. I would advise becoming an undertaker 50 years from now.

And indeed, the difference between China/Russia and the West will recall the stories of 3000 years ago, when Europe was a patch of forest populated by a few hunter tribes while wondrous technologies, lifestyles, and civilisations were being built in the Near and Far East.

I watched an interesting podcast from Dwarkesh Patel, an AI researcher/podcaster, and Victor Shih, a political scientist. They were talking about how the Chinese government has been working to have humans monitor many steps of AI outputs and research so that it can put the brakes on, and that this functionality is being built into the models themselves. They also touched a bit on the difference in economies and how AI plays into that.

Video for those interested: https://youtu.be/b1TeeIG6Uaw

Unrelated to the above, but one of his videos really put into context how disruptive AI can potentially be. He talked about how it will give businesses the ability to train position-specific agents and replicate them. This will not only help businesses scale but also keep company wisdom from being lost when people leave.

Rev Kev: You have to have a human in the loop or else errors like this could easily be repeated.

Doesn’t always help. When I was stationed in Germany, the new quarterly supply microfiche (yes, it was a while ago) came out with a decimal shifted typo: A set of sheets and pillowcases, which should cost $5.05, were now listed at $50.50. Which meant that any poor troop who’d lost or mangled or used up or otherwise needed to replace his sheets HAD to be charged fifty bucks for the set. NO EXCEPTIONS.

Much work went into scrounging sheets and pillowcases for that quarter until the new ‘fiche arrived with the price corrected.

It’s the old garbage in, garbage out. And as AI models are being trained on the internet at large, that is a lot of garbage in.

Thanks, Haig!

Pretty straightforward logic chain: Wars are bad. Wars are caused by humans. Therefore, eliminating all humans will eliminate all wars.

I, for one, would like to be among the first to welcome our new robotic overlords and offer the heartiest of welcomes to their arrival, “HELLLLOOOOOO, SKYNET!!!!”

And then there is the Black Box problem.

The interesting thing about all this is that apart from computer-corrected rifle sights (which surely are artificial intelligence in the same way that a thermostat is artificial intelligence) virtually all the things being talked about here are things which can be done, almost certainly more effectively and definitely more controllably, by humans.

Why would anyone outsource something like strategic analysis or tactical assessment to a machine? The only conclusion is either a) the people doing it automatically believe that machines are better; b) the people doing it are financially linked to the makers of the machines; c) the people doing it are planning to commit unspeakable crimes and want to be able to blame them on the machines; d) the people doing it don’t know what they are doing.

The more factors involved in an optimization, the greater the advantage of an AI over a human problem solver. Directing numerous elements in a coordinated manner in a large-scale battle is an optimization problem. A human commander has limited bandwidth in terms of input of operational detail and speed of evaluation and decision making. An AI can handle more input and do it faster. Moreover, human leadership quality is not uniform. Countless battles have been lost because of mistakes made in the chain of command. In contrast, each instance of an AI performs consistently and tirelessly.

The moving goal post tactic is commonly used against AI claims, with each advance declared to be falling short of “real intelligence,” but AI moves too. Humans do not become more capable with each passing year, but AIs do.

My suspicion is that “humans + AI” hybrid approaches will defeat both all-human and all-AI approaches alike.

But I expect to be long dead before there’s sufficient empirical evidence to either prove or disprove my hypothesis.

Consistently? I guess. Hallucinations are consistent, too.

How do you get consistency when LLMs are probabilistic, ergo inherently random, systems? You might want to read up on parameters like temperature, topP, topK, and especially the seed value passed with a query, which is a random number.
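To make the parameters named above concrete, here is a minimal sketch of how temperature, top-k, top-p, and a seed are commonly applied when a model picks its next token from raw scores (logits). The function name `sample_next_token` and the example logits are hypothetical, and this is a generic textbook version, not any particular vendor’s implementation.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_k=None, top_p=None, seed=None):
    """Pick one token index from a list of raw scores (logits).

    Hypothetical helper for illustration only; real LLM services expose
    similarly named parameters but implement them in their own ways.
    """
    if temperature <= 0:
        # Temperature 0 is commonly treated as greedy decoding:
        # always take the highest-scoring token, with no randomness.
        return max(range(len(logits)), key=lambda i: logits[i])

    # Temperature rescales the logits before the softmax: values below 1
    # sharpen the distribution, values above 1 flatten it.
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]  # subtract the max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]

    # Rank candidate tokens from most to least probable.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)

    # top-k: keep only the k most probable tokens.
    if top_k is not None:
        ranked = ranked[:top_k]

    # top-p (nucleus): keep the smallest prefix of the ranking whose
    # cumulative probability reaches p.
    if top_p is not None:
        kept, cumulative = [], 0.0
        for i in ranked:
            kept.append(i)
            cumulative += probs[i]
            if cumulative >= top_p:
                break
        ranked = kept

    # The seed fixes the pseudo-random generator, so identical inputs and
    # seed give the identical choice; seed=None draws from system entropy.
    rng = random.Random(seed)
    weights = [probs[i] for i in ranked]
    return rng.choices(ranked, weights=weights, k=1)[0]
```

With `logits = [2.0, 1.0, 0.5, -1.0]`, `temperature=0` always returns index 0; with a nonzero temperature and no seed, repeated calls can return different indices, while passing the same `seed` repeats the same choice. In this toy version the randomness is fully controllable; hosted models may still vary slightly from run to run even at temperature 0, for reasons such as floating-point nondeterminism in batched GPU computation.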

Again, LLMs are not AGI. They do not “think”. They do not have consciousness. And different models and little coding tweaks are not going to make them “think”. “Improved reasoning” is just marketing hype.

If we’re talking about some future subjunctive AGI, I gather that falls under “assume a can opener”.

This is the incrementalist account of technology that we often hear. Regarding AI, this is not what Rodney Brooks has been arguing, and I’ll go with his account, not the incrementalist one, which falls prey to the Beagle fallacy.

If you look at videos of the fighting in Ukraine, you will not see pictures of combat between individual soldiers armed with rifles. Indeed, whilst soldiers have to be taught to fire accurately, that hasn’t been much of a factor in combat since at least WW2. These days, the Russians bombard Ukrainian forces from a distance, then rush them and use grenades or sometimes drones to mop up the survivors. What you could describe as AI is, of course, already very present on the battlefield, particularly in generating situational awareness and, in the Russian case at least, allowing drones to have targets selected for them automatically and then being automatically guided to their destination.

Since the most that AI could ever realistically do, and we are a long way from that, is to approximate human decision-making, it’s hard to see what advantages it would have in taking strategic decisions. There is no way, in any case, that political leaders would agree to take themselves out of the loop.

Unfortunately, time pressure distorts decision making. The Kaiser didn’t want WWI, but once the mobilization wheels started turning, the generals took over using the use-it-or-lose-it argument. The seductive speed advantage of AI will undermine human judgement (already rather poor) if proper safeguards do not exist. Speed kills in more ways than one.

The scary key words are “fake news”.

Am I the only one who senses a Y2K vibe around AI?

Read Ed Zitron. You’ll get confirmation of your feelings and then some.

I’m with Amfortas up-thread — I fear its use upon domestic populations most of all, as is generally the case with all tools of oppression employed by the empire … 😑